For a little while, it looked like we’d solved a long-running problem: displaying video images in really high quality had almost become easy. Then, and quite by choice, we made it hard again.

Beyond radio itself, cathode ray tubes were the single most crucial enabling technology for television, and constant development kept them viable until the late 2010s. They were never great: heavy, electrically inefficient, and often curved in at least one direction so the glass could withstand the vacuum inside. Then there’s the issue of making an electron beam move across the face of the tube in a straight line. Picture trying to draw a perfect rectangle on the wall with the water from a hosepipe from about twenty feet away. Trying to make three electron beams do that simultaneously could lead to mis-registration, rainbow fringing, and colour errors when the beam intended for the red phosphor drifted into the green or blue.

CRTs

CRTs also weren’t very bright; nobody was ever going to make an HDR CRT. In the same way that nobody really chose that green for Rec. 709 displays, nobody decided that TV should achieve about 100 nits. It was just what the technology did. It took liquid crystal display technology about three decades to become a plausible alternative to the CRT for general-purpose television viewing. When it did, though, it wasn’t that difficult to build displays which could exceed the brightness of TV. Crucially, an LCD doesn’t generate its own light as a CRT does. Up until the point where the LCD panel melts, you can have more brightness by firing more light into it.

We might think that HDR was inevitable at this point, on the principle that because we could make brighter displays, we would. Of course, “dynamic range” doesn’t mean “brightness,” although it has long been a common misconception that HDR simply means more brightness. What it actually means is more contrast, and one of the bigger problems LCDs had in their infancy was milky blacks, which made them a poor candidate for HDR. Things have improved since, but conventional TFT-LCD displays still lack the dark blacks required for really nice HDR pictures.
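To put rough numbers on the difference between brightness and contrast, here is a minimal Python sketch. The function name and both sets of luminance figures are illustrative assumptions, not measurements of any real display; the only thing it relies on is that dynamic range is the ratio of peak white to black, which can be expressed in stops as a base-2 logarithm.

```python
import math


def dynamic_range_stops(peak_nits: float, black_nits: float) -> float:
    """Contrast between peak white and black, expressed in stops (doublings)."""
    if peak_nits <= 0 or black_nits <= 0:
        raise ValueError("luminance values must be positive")
    return math.log2(peak_nits / black_nits)


# Hypothetical bright panel with milky blacks: 1000 nits peak, 1 nit black.
bright_but_milky = dynamic_range_stops(1000.0, 1.0)

# Hypothetical dim panel with deep blacks: 100 nits peak, 0.01 nit black.
dim_but_deep = dynamic_range_stops(100.0, 0.01)

print(f"bright, milky blacks: {bright_but_milky:.1f} stops")
print(f"dim, deep blacks:     {dim_but_deep:.1f} stops")
```

Under these made-up figures, the dimmer display has the wider dynamic range, simply because its black level is so much lower.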

Around the same time, plasma displays were emerging. The idea here is functionally very similar to a very large number of tiny neon-type tubes, and more or less the same technology as was found in those bright orange displays in laptops and portable computers of the 80s. The advantage is that a cell not intended to be emitting any light is off, so that the contrast ratio, no matter how dim the peak white, is vast. Practical concerns regarding the high voltages required to fire each subpixel often compromised contrast, though, and like CRTs, they suffered mediocre output. Pushing for brightness reduced longevity.

OLED

Which is also the problem with OLEDs. As with plasma, any OLED that we don’t want to emit light can simply be unpowered; unlike a TFT, the display isn’t struggling to block a powerful backlight. OLEDs are easier to drive than plasma, working at lower voltage. The problem they share is that if we drive the panel hard, for brightness, it can burn in and decay in output with the picture often drifting toward yellow. Sony effectively gave up on high-output OLED having spent what must have been a vast amount of money on a manufacturing plant for the thoroughbred new technology. Now LG makes panels for many of the world’s TVs, although it adds a white emitter to the RGB for brightness – at the cost of colour accuracy.

Projection

So what else is there? Projection has improved massively since three-tube CRT projectors. The best of those can be superb, and the technology still has dedicated fans who run what were very expensive CRT projectors bought cheap and refurbished. When driving smaller screens than they were designed for, their performance can be excellent, but they’re a pain to set up, and as a mainstream technology it’s decades in the grave. LCD projectors, whether they fire the light through the LCD panel or mount it on a mirrored heatsink and bounce the light off, have seen so much development that they remain a mainstream technology despite DLP.

DLP is a chip covered with tiny micromirrors which hinge slightly, bouncing light either onto the screen or not. Contrast is excellent, although most projectors, even cinema projectors, can only achieve what we might call cinema HDR, with dimmer peak whites than we generally expect from an HDR reference monitor. The other big issue with contrast in projected images is controlling bounce from the room. The screen is white; the darkest black we can achieve is a white surface being illuminated by the ambient light. Anyone wearing a white shirt in the front row can noticeably fog up the blacks on screen.
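The effect of room bounce can be sketched in a few lines of Python. This is a simplified model under stated assumptions: stray light is treated as a constant luminance added equally to white and black, the function name is hypothetical, and the figures are illustrative (48 nits is roughly the conventional cinema peak white, while the black level and stray light values are invented for the example).

```python
def effective_contrast(peak_nits: float, black_nits: float,
                       stray_nits: float = 0.0) -> float:
    """Contrast ratio once stray room light is added to both white and black."""
    if peak_nits <= 0 or black_nits <= 0 or stray_nits < 0:
        raise ValueError("peak and black must be positive, stray non-negative")
    return (peak_nits + stray_nits) / (black_nits + stray_nits)


# Hypothetical projector: 48 nits peak white, 0.024 nits native black.
native = effective_contrast(48.0, 0.024)

# The same projector with an assumed 0.1 nits of light bouncing back
# onto the screen from the room (or from a white shirt in the front row).
with_bounce = effective_contrast(48.0, 0.024, stray_nits=0.1)

print(f"native contrast:      {native:.0f}:1")
print(f"with room bounce:     {with_bounce:.0f}:1")
```

Because the stray light is tiny compared with peak white but large compared with black, it barely changes the highlights while lifting the blacks several times over, which is why the ratio collapses.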

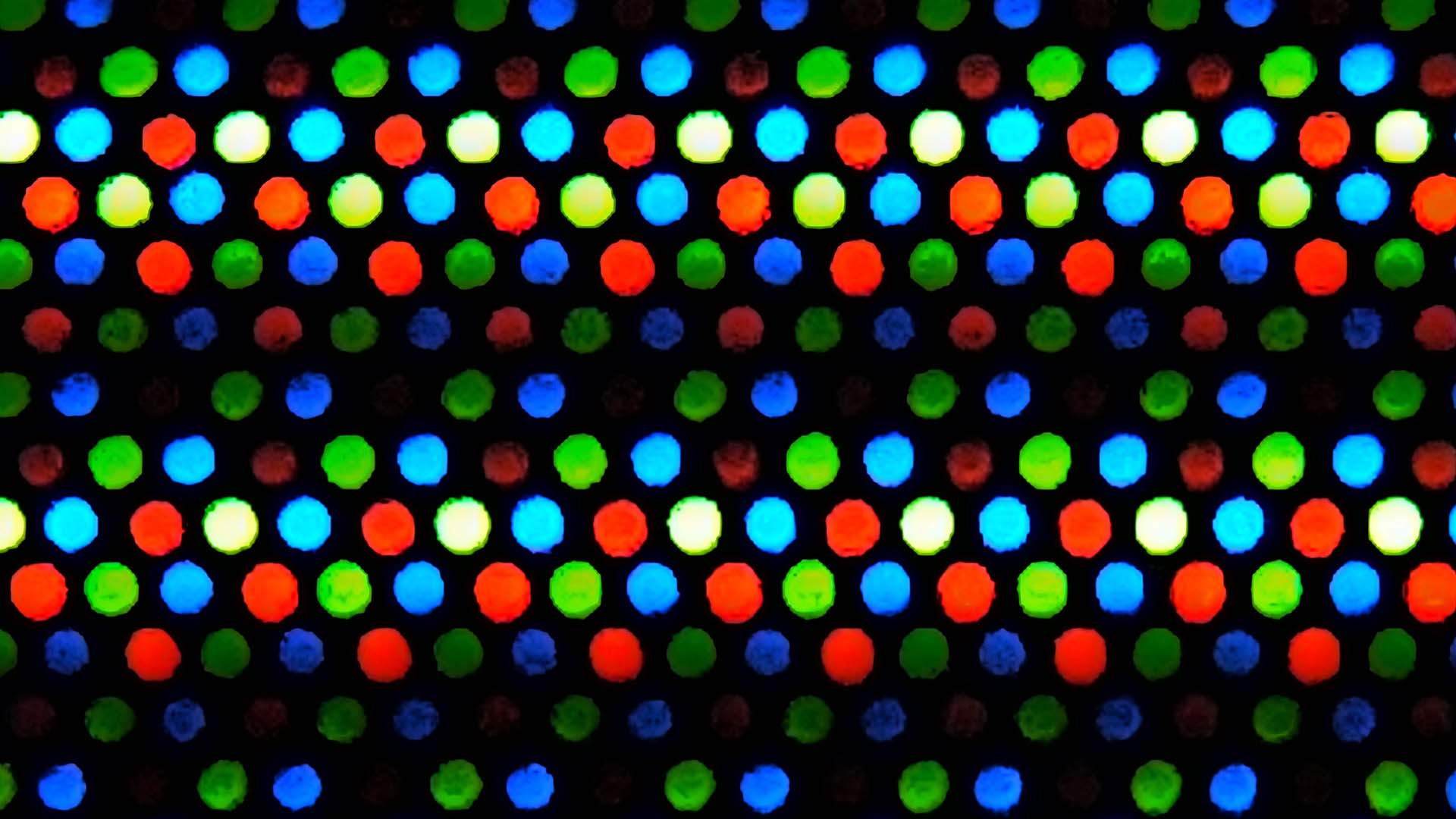

Micro-LED

Possibly the best images are currently made by LED and micro-LED displays like the ones used in outdoor advertising, or in the form of Sony’s CLEDIS technology, which have more brightness, contrast and colour range than anyone knows what to do with. Since 2017, Samsung has promoted this as “The Wall”. The minimum size for a given resolution is large, the price is well into the six figures, and it isn’t being promoted as a precision reference display. Experiments with dual-layer LCD, the better to achieve high contrast, have shown some promising results, and effectively form the replacement for the best OLEDs, but it’s still far from affordable.

So, right now, we barely have a technology that can actually fulfil the specification, let alone one at a consumer price point that’ll fit into someone’s lounge without literally becoming part of the building.
