Not yet a household name, but that could soon change

CPUs and GPUs are great for certain processes. But what happens if you need more grunt than any current CPU offers but you can't make efficient use of a GPU either? Xilinx is developing a solution.

We have a problem: computers have all but stopped getting faster, while the demands of moving picture work have accelerated through HD, 4K, high dynamic range, and beyond. All of these things demand more computing power, but the huge progress of CPU performance that kept us going through the 90s and 2000s has all but dried up. Moore's Law has ground to a halt, but take heart: the people at Xilinx may have a solution.

Xilinx is famous mainly as a manufacturer of FPGAs, programmable logic devices which are often used in things like cameras and flash recorders. They behave, broadly speaking, as a large (sometimes very large) number of extremely simple logic devices that can be connected together in complex ways using configuration software. This instinctively sounds somewhat similar to the way a GPU works, with a large number of fairly simple individual processors clocked at high speeds, but it doesn't quite work like that.

An FPGA could be configured to create a large single CPU core, or a large number of smaller cores, or even to create a device capable of one specific mathematical job. An FPGA is powerful because it has something of the speed of hardware while being configurable in software. It's possible to put a big FPGA on an expansion card and plug it into a workstation – this is a large part of what many video I/O boards do – but Xilinx's new approach seems intended to sit somewhere in between the worlds of traditional hardware and FPGAs.

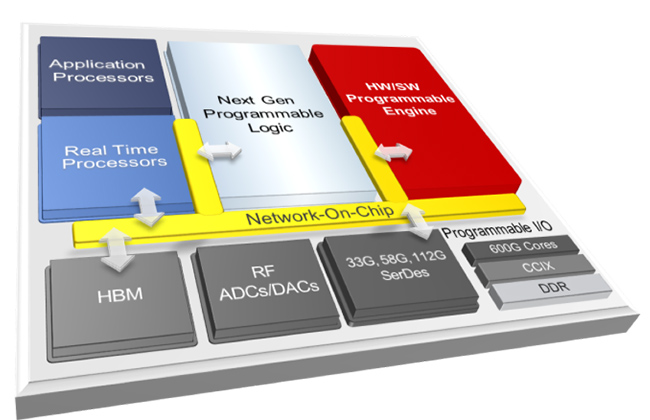

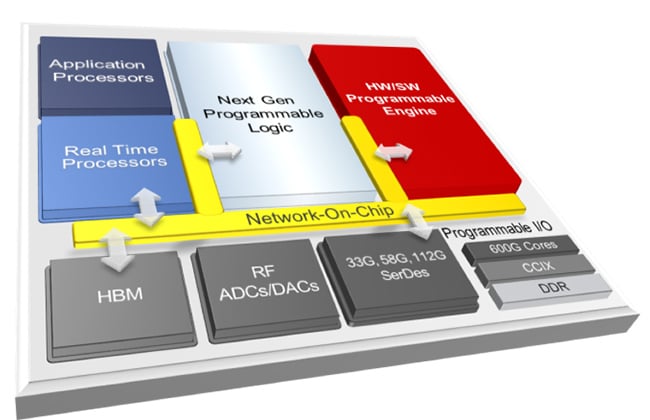

Xilinx's approach to its new concept

Hybrid approach

The company calls it the adaptive compute acceleration platform, ACAP, and has codenamed the first devices Everest. Details are slim, but it sounds like a hybrid approach. FPGAs have long included static, non-configurable features designed to do common tasks, such as high-speed serial input-output devices which could be used to create, say, SDI or HDMI inputs or outputs, or entirely different fast serial devices such as USB or a SATA storage interface.

Xilinx describes the new approach as having some degree of static resources, including digital signal processing, various types of memory, analogue-to-digital and digital-to-analogue conversion, general-purpose input and output, and a high-speed data bus connecting everything together. Reading between the lines, the idea seems to be to combine the characteristics of both FPGAs and more conventional devices in a way that can be reconfigured on the fly.

This is potentially a very interesting concept. Take, for instance, the job of H.264 video encoding. It doesn't work very well on GPUs, because (to describe part of the process rather approximately) blocks of picture are encoded in diagonal rows, each being dependent on some of the results of the blocks to the top and left of it. This means that even on 4K video, only a few hundred blocks can be considered at once, whereas a GPU really wants thousands of simultaneous tasks to work well. This is speculation, but a sufficiently configurable device could be set up with exactly the right number of individual processors, each designed to do that specific task quickly. The new devices are to be manufactured on TSMC's high-performance seven-nanometre semiconductor process.
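
To put rough numbers on that diagonal dependency, here is a minimal Python sketch. It is a toy model rather than how any real encoder schedules its work, and the function name, the 16x16-pixel block size and the 3840x2160 frame are all assumptions made for illustration. It simply counts how many blocks are free to start at each step if every block has to wait for its neighbours.

```python
"""Rough wavefront model of block-dependent encoding (a simplification)."""


def wavefront_widths(cols: int, rows: int, lag: int = 1) -> list[int]:
    """Count how many blocks become ready at each step of the wavefront.

    Block (x, y) is assumed to be ready at step x + lag * y. With lag=1 a
    block waits only for its left and top neighbours; with lag=2 it also
    waits for its top-right neighbour.
    """
    steps = (cols - 1) + lag * (rows - 1) + 1
    widths = [0] * steps
    for y in range(rows):
        for x in range(cols):
            widths[x + lag * y] += 1
    return widths


# Assumed numbers: a 3840x2160 frame split into 16x16-pixel blocks.
COLS, ROWS = 3840 // 16, 2160 // 16  # 240 columns, 135 rows

widths = wavefront_widths(COLS, ROWS)
print("blocks in frame:", sum(widths))
print("wavefront steps:", len(widths))
print("most blocks ready at once:", max(widths))
print("average ready at once:", round(sum(widths) / len(widths), 1))
```

Under these assumptions the frame holds 32,400 blocks, but the widest diagonal contains only 135 of them, and passing `lag=2` to model an extra top-right dependency brings that down to 120. Smaller blocks would push the figure up somewhat, but it stays in the low hundreds at best, which is the mismatch with a GPU's appetite for thousands of simultaneous tasks described above.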

We have no choice

Ultimately, we don't have many other choices. Adding more cores to existing CPUs is tricky because it's often difficult to split tasks up into things that can all be done at once. It's hard to put ten chefs on the job of making one cake, when most of the work involves mixing things together in just a single bowl. There are arguments even now that software engineers still don't really have the ideal tools to make the best of multiple-core CPUs. The enormous power of GPU computing gave us something of a boost, but again, it only does a specific set of things well – things that can be split up not just into a handful of separate, parallel tasks, but into hundreds or thousands. For everything else, particularly things like video compression and certain types of neural network which often don't work particularly well on either CPU or GPU, we really do have a problem.
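
One common way to put numbers on the cake problem is Amdahl's law, a general rule of thumb that isn't specific to Xilinx or to video work. The sketch below assumes, purely for illustration, that half of a job can be shared out among workers while the other half has to happen in the single bowl. The 50 per cent figure and the function name are invented for the example.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Best-case speedup when only part of a job can be shared out.

    parallel_fraction is the share of the work (0.0 to 1.0) that can be
    split evenly among the workers; the rest must be done one step at a time.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Assumed for illustration: half of the cake-making can be shared out.
for chefs in (1, 2, 10, 1000):
    print(chefs, "chefs ->", round(amdahl_speedup(0.5, chefs), 2), "x faster")
```

With those assumed figures, ten chefs finish less than twice as fast as one, and even a thousand chefs never quite reach a factor of two, because the part of the job that can't be divided sets the limit.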

Naturally, this is all very early days, but Xilinx's new concept is very much an idea for the moment, and it seems to be serious – reportedly the company has spent over a billion dollars on it in the last four years.
