Moore’s law isn’t running out of steam: it's running out of relevance.

For the past few decades we’ve been relying on Moore’s law - a loosely defined observation that the number of components that can be packed onto a chip doubles roughly every two years, as those components get ever smaller. Tinier components mean more components, which means more performance. That’s all very simple and - up to now - easily observable.

But now the tide is turning. There is a real and very obvious limit whose effects are already being felt: you can’t make components much smaller once they approach the size of molecules and atoms themselves. There’s still a way to go yet, but some interconnects are only 18 atoms wide!

It’s very hard to say anything meaningful at all once you enter the realm of the atomic scale. Saying that “interconnects are only 18 atoms wide” implies that atoms are obliging little snooker balls that line up willingly and are made of solid, homogeneous matter. They’re not: atoms aren’t matter in that sense but the building blocks of it, so all bets are off when working at those scales, unless you’re a particle or quantum physicist (and these are very special people indeed).

So while technologies like quantum computing are showing promise, there’s no reason to expect quantum computers inside your smartphone any time soon. The fact alone that many of these remarkable systems have to be cooled to near absolute zero suggests that forthcoming versions of the Apple Watch will have to rely on something else for speed gains, at least in the near future.

But there are other reasons to think that increases in processing power are going to accelerate rather than diminish.

The rise of the GPU

For some time now, it’s been clear that the slowdown in CPU speed improvements has been largely offset by the incredible rise of the GPU. As more and more applications tap into the GPU as a “compute” resource, the benefits are abundant: massive performance gains in video processing, and also in AI and machine learning (both technologies that are already giving a big boost to what computers can do).

Without wishing to suggest that anything about designing GPUs is easy, what GPU designers do have in their favour is that GPUs rely on massively parallel processing. That’s helpful, because to add more of it you just need to replicate the computing elements across a bigger chip. In that sense, no fundamentally new inventions are needed: it’s just more of the same. (Again, with apologies to the GPU designers, this is a huge over-simplification, for illustrative purposes only.)
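To make the "replicate the computing elements" idea a little more concrete, here is a minimal Python sketch, assuming a toy per-pixel brightness adjustment as the workload. Each pixel is processed independently of every other, so the image can be split across any number of workers (standing in, very loosely, for GPU cores) and the answer is the same whether there are 2 of them or 16. The function names are hypothetical, and Python threads won't actually make this faster; the point is the shape of the problem, not the speed.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, gain: float = 1.5) -> int:
    """Per-pixel kernel: scale an 8-bit value and clamp it to 255."""
    return min(255, int(pixel * gain))


def process_chunk(chunk: list[int]) -> list[int]:
    # Every pixel in the chunk is handled independently of every other pixel.
    return [brighten(p) for p in chunk]


def parallel_brighten(pixels: list[int], workers: int) -> list[int]:
    """Split the image into roughly one chunk per worker and process the chunks concurrently."""
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(process_chunk, chunks))  # map preserves chunk order
    return [p for chunk in results for p in chunk]


if __name__ == "__main__":
    image = list(range(256))  # a hypothetical one-row, 256-pixel greyscale image
    # Adding more "compute elements" changes how the work is divided, not the answer.
    assert parallel_brighten(image, workers=2) == parallel_brighten(image, workers=16)
    print(parallel_brighten(image, workers=4)[:5])
```

Scaling up here means nothing more than raising `workers`, which is the (heavily simplified) sense in which a bigger GPU is "more of the same".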

So GPUs have kept up the exponential progress we’ve seen since the start of the Moore’s law era. But now they have a helping hand, in the form of devices that go by the rather user-unfriendly acronym FPGA.

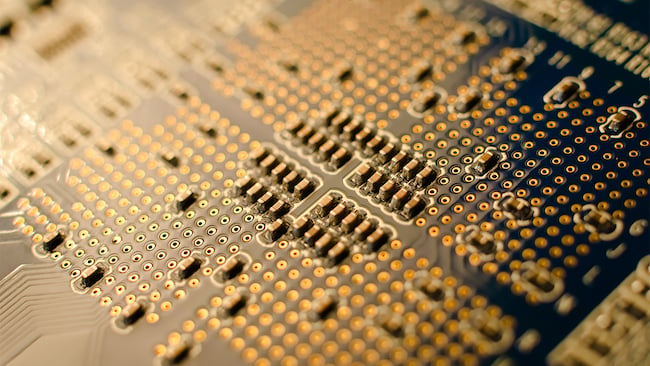

Shutterstock

Field Programmable Gate Arrays

These devices, Field Programmable Gate Arrays, are exactly what they say they are. They’re huge fabrics of interconnected logic gates that can be programmed in the field (i.e. after they’ve left the factory and are “on the job”) to behave like a totally bespoke processor.

I’ll explain what that means and why it’s so significant. Later in this article I’ll also show you why it’s not just having FPGAs that matters: it’s where you put them. Get all of this right, and you’ll have astonishing processing speeds.

Another way to think of an FPGA is as a software algorithm made out of hardware. Imagine taking a process - let's say encoding uncompressed video into ProRes - and writing software to do it. If you run it on a CPU, it will work, but it won't be the fastest way, because the software has to fit around the architecture of the CPU. But what if the processor were built to fit the algorithm? What if the processor were laid out like the algorithm itself, so that every muscle and sinew in it was optimised for that specific code?

It would be fast, wouldn't it? How much faster? That depends on dozens of factors, from the nature of the FPGA to the nature of the software, but I've heard of speed-ups of around a hundred times compared with the same job running as software on a bare CPU.

Let's take a closer look at how this is even possible.

A typical FPGA has a large array of unconnected logic gates. Think of these gates as the atomic structure of a processor. In the device's initial state they are there, but unconnected, unconfigured and unable to do anything. All it takes to change this is code that has been written to process something specific, and written specifically for the FPGA (usually in a hardware description language such as VHDL or Verilog). Well, I say "all": you really have to know your stuff to program FPGAs effectively, but the point is that it can be done. And when it is, you can do real-time processing at previously unimaginable speeds.

And because FPGAs are "field programmable", your equipment can load different operating code every time it boots, if it needs to. It can be a ProRes encoder one minute and a de-Bayer-er the next.
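The idea of gates that sit there doing nothing until they're configured, and that can be reconfigured later, can be sketched in a few lines of Python. Real FPGA logic is typically built from small lookup tables (LUTs) rather than fixed gates; the model below assumes a much-simplified 2-input LUT whose entire "configuration" is the four output bits of its truth table. The class, constants and function names are hypothetical, and real configuration bitstreams are far more involved.

```python
from typing import Optional


class Lut2:
    """A hypothetical 2-input lookup-table cell: it does nothing until configured."""

    def __init__(self) -> None:
        self.truth_table: Optional[tuple[int, int, int, int]] = None  # unconfigured

    def configure(self, bits: tuple[int, int, int, int]) -> None:
        # bits[i] is the output for inputs (a, b), where i = a * 2 + b.
        self.truth_table = bits

    def __call__(self, a: int, b: int) -> int:
        # Inputs are assumed to be single bits (0 or 1).
        if self.truth_table is None:
            raise RuntimeError("cell is unconfigured")
        return self.truth_table[a * 2 + b]


# Configuration "bitstreams" for two common gates (outputs for 00, 01, 10, 11).
XOR_BITS = (0, 1, 1, 0)
AND_BITS = (0, 0, 0, 1)


def build_half_adder() -> tuple[Lut2, Lut2]:
    """Configure two identical blank cells so that together they add two bits."""
    sum_cell, carry_cell = Lut2(), Lut2()
    sum_cell.configure(XOR_BITS)
    carry_cell.configure(AND_BITS)
    return sum_cell, carry_cell


if __name__ == "__main__":
    s, c = build_half_adder()
    for a in (0, 1):
        for b in (0, 1):
            print(f"{a} + {b} -> carry {c(a, b)}, sum {s(a, b)}")
    # Reprogramming: the same cell now behaves as an AND gate instead of XOR.
    s.configure(AND_BITS)
    print(s(1, 1), s(1, 0))
```

Nothing about the cells changes between the two uses except the bits loaded into them, which is, in miniature, how the same chip can be an encoder one minute and something else entirely the next.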

Vast processing power increases

If FPGAs become part of standard computer chipsets, this could represent an increase of three orders of magnitude (a thousandfold) in processing power - but of course, only for specific jobs.

It's one thing to have FPGAs on the same motherboard as a conventional CPU. It's quite another to have them on the same chip. But we're seeing progress in this area too. Often, on a big circuit board, different parts of the design talk to each other over serial links such as PCIe, which is indeed very fast. But it's not fast enough. Consider the overhead of serialising data from (let's say) a CPU, sending it over PCIe and deserialising it at the FPGA: there will inevitably be delays compared with having the FPGA as part of the same chipset, sharing the same DMA controller and RAM. In the latter case, handing data between the CPU and the FPGA is as simple and elegant as passing a memory pointer. No data actually moves between the two devices.

And so access to data is at memory speed rather than serial bus speed, which makes a fast FPGA go even faster.
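The difference between copying data across a link and passing a pointer can be illustrated, very loosely, in Python. Here `send_over_bus` stands in for serialise, transfer and deserialise (the receiver ends up with its own copy), while `share_pointer` stands in for a shared-memory handoff using a `memoryview`, which refers to the same buffer without copying it. All three function names and the tiny frame are hypothetical; this models the idea only and says nothing about real PCIe or DMA hardware.

```python
def send_over_bus(frame: bytearray) -> bytearray:
    """Stand-in for serialise -> transfer -> deserialise: the receiver gets its own copy."""
    return bytearray(frame)  # every byte is duplicated


def share_pointer(frame: bytearray) -> memoryview:
    """Stand-in for a shared-memory handoff: a view onto the same buffer, nothing copied."""
    return memoryview(frame)


def fpga_invert(buf) -> None:
    """Hypothetical in-place 'FPGA' job: invert every 8-bit sample in the buffer it is given."""
    for i in range(len(buf)):
        buf[i] = 255 - buf[i]


if __name__ == "__main__":
    frame = bytearray(range(8))  # a tiny hypothetical 8-sample "frame" in CPU memory

    copy = send_over_bus(frame)
    fpga_invert(copy)
    # The CPU's frame is untouched; the result would have to travel back over the link.
    print(list(frame[:3]), list(copy[:3]))

    view = share_pointer(frame)
    fpga_invert(view)
    # The CPU sees the result in place, and the view refers to the very same buffer.
    print(list(frame[:3]), view.obj is frame)
```

In the copy case the data crosses the "link" twice (out and back); in the shared case only a reference changes hands, which is the point being made about putting the FPGA on the same chip.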

These are good times for applications that need fast processing, and this is exactly what we need to sail safely through the coming era of extremely high-resolution video and AI.

And don't believe anyone who tells you that technological progress is slowing down.

PS: in December 2015, Intel completed its purchase of Altera, one of the two biggest FPGA manufacturers.

Title image courtesy of Shutterstock.
