Personal computers are now more than a thousand times faster than the fastest supercomputer of 30 years ago, which took up a whole room. But modern processors come with their own flaws, and those problems go back just as far.

Thirty-four years ago, a supercomputer built in Japan achieved a then-staggering performance of 800 million floating point operations per second. Going online in May 1984 at the Large Scale Computation Center at the University of Tokyo, the Hitachi S810/20 was a contemporary of Cray's X-MP/2 and the Fujitsu VP-100. This was heady stuff, although the S810/20 is now slightly outperformed by Intel's 18-core i9 Extreme Edition, which achieves a full teraflop (one trillion floating point operations per second) and fits in a reasonably upscale desktop workstation. A teraflop against 800 megaflops makes it 1,250 times faster than the 1984 supercomputer. Much has changed in the last third of a century, but, rather alarmingly, a few things really haven't.

Cray X-MP supercomputer, early 80s. The famous Cray towers were, in fact, highly parallel processors.

This realisation arises from a 1984 edition of The Computer Chronicles, which is available (legally, it seems) on YouTube. It discusses some issues of the time that are interesting precisely because exactly the same issues are being discussed now.

Skip about ten and a half minutes in and there's a wonderful interview with someone who knows all about the difference between scalar and vector computing. Those terms refer, respectively, to working through a calculation one value at a time and to applying the same operation to a whole batch of values at once. The nearest modern equivalents are the computer's CPU, which does complex things one at a time, and its GPU, which does a lot of simple things all at once.
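To make the scalar and vector distinction concrete, here is a minimal sketch in Python. It is a toy model rather than a description of any real machine: the function names and the four-wide `lanes` figure are invented for illustration, and the "vector" version only counts steps as if each batch were handled at once.

```python
def scalar_scale(values, factor):
    """Scalar style: one multiplication per step, so n values take n steps."""
    out, steps = [], 0
    for v in values:
        out.append(v * factor)
        steps += 1
    return out, steps


def vector_scale(values, factor, lanes=4):
    """Vector style (modelled): each step handles up to `lanes` values.

    `lanes` is a hypothetical width chosen for illustration. Real vector
    hardware would do the work inside one step simultaneously; here we
    only count the steps.
    """
    out, steps = [], 0
    for start in range(0, len(values), lanes):
        batch = values[start:start + lanes]
        out.extend(v * factor for v in batch)
        steps += 1
    return out, steps
```

Both functions return the same results. For ten values the scalar version takes ten steps and the four-lane vector version takes three, which is the whole appeal, provided the job really is the same simple operation applied to lots of data.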

Intel's Core i9-7980XE, released last year, is an eighteen-core monster that turbos up to 4.2GHz. Courtesy Intel Corporation

CPUs vs GPUs

In 1984, this was a topic of interest to people doing calculations for physics. Analysing the flow of things moving through pipes involves lots of simple calculations that can all be done at once. Great. It's now very popular to do that sort of thing with GPUs, and it's absolutely stunning how applicable this 1984 conversation is to the modern day. It's a bit unfair to say that CPU performance is entirely stagnating, but many recent improvements have focussed on performance per watt and on fitting more cores onto the same chip. That Core i9 with the teraflop capability is fast, but those resources are split up across its 18 cores, which creates the same problem: we have to figure out how to split up our tasks across them.
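Splitting a task across cores can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a recipe: the helper names are invented, the default of 18 workers simply echoes the Core i9 above, and in standard CPython threads will not actually speed up pure-Python arithmetic like this. The point is the shape of the work: cut the data into pieces, process each piece independently, then combine the partial results.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, parts):
    """Split items into `parts` contiguous slices of nearly equal size."""
    size, extra = divmod(len(items), parts)
    slices, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        slices.append(items[start:end])
        start = end
    return slices


def sum_of_squares(chunk):
    """The independent piece of work each worker does on its own slice."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, workers=18):
    """Fan the slices out to a pool of workers, then combine the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunked(items, workers)))
    return sum(partials)
```

This only works so neatly because each slice can be summed without knowing anything about the others. Tasks where one step depends on the result of the previous one do not break up this way, which is exactly the problem.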

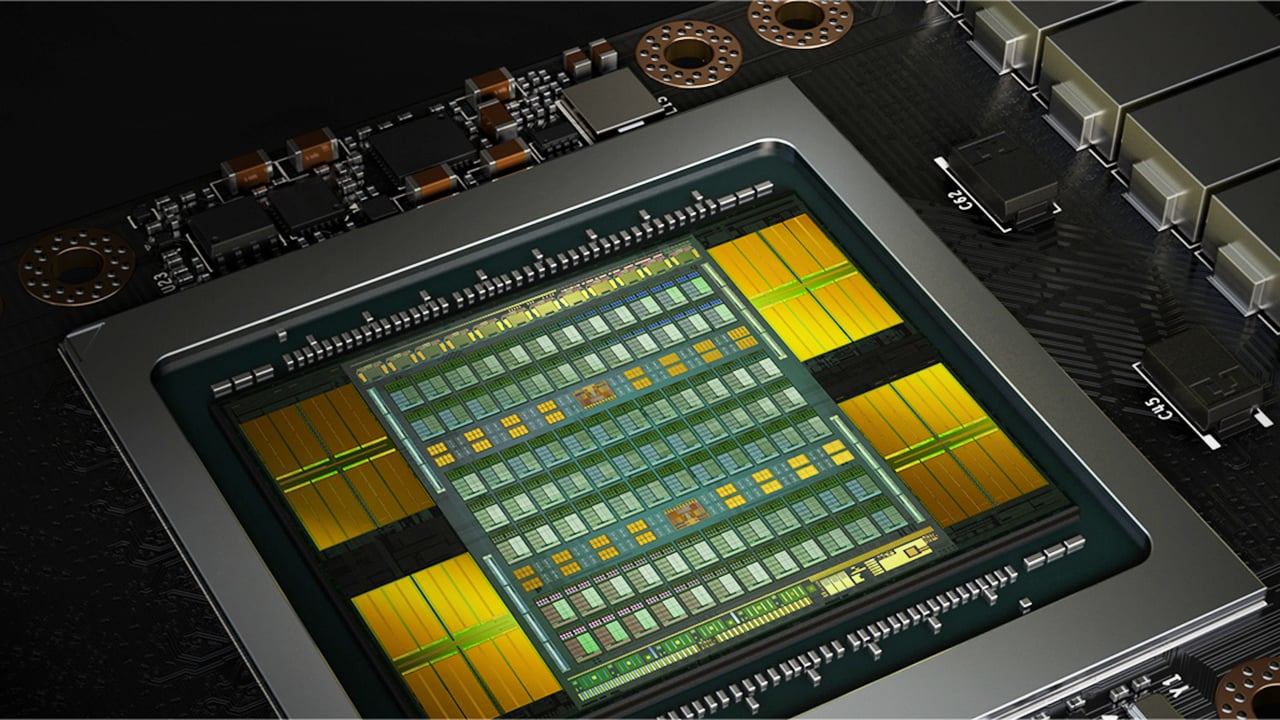

Nvidia GeForce GTX-1080. Courtesy Nvidia

Our 1984 commentators go on to express exactly the same concerns that are still being raised today. “Battle” probably isn't the right word to describe the relationship between two groups of engineers who are trying very hard to cooperate. Still, if there's any sort of conflict, it's between the hardware people, who turn to multi-core designs because that's what can be built, and the software people, who have tasks they want to do that don't necessarily break up across lots of cores very well. Software people want hardware which can effectively run code the way they want to write it. Hardware people want code that makes the best of their hardware.

No solution

The fact that this conversation is still happening a generation and a half later is slightly concerning. Not because overall performance hasn't improved (it has, out of all recognition) but because we've had some of the best brains in computing on this problem for more than three decades and we still don't have a very good solution. Tricks do exist: Apple's Grand Central Dispatch, introduced in Mac OS X 10.6, does its best to take the work of managing separate tasks out of the hands of the developer, saving work and ideally making better use of resources. Microsoft has something vaguely comparable in the Task Parallel Library for .NET, albeit at a rather different level, and Java thread synchronisation has some relevance too. Intel has developed Threading Building Blocks, a library for software written in C++, and the MIT Cilk project has created a new programming language, based on C, in an attempt to address this problem.

But in the end, solutions to the issues of doing lots of things at once are limited by the nature of what we're doing. Get ten friends in to help paint a room and they can paint the room quickly, but then they all have to wait for the paint to dry between coats. There is no fixing that. Finding your ten friends something else to do in the meantime is still a useful solution to the problem, though, and that's where the state of the art is currently focussed. It seems likely that hardware and software techniques will both need to change so we can build technologies that legitimately solve these problems. Let's just hope a solution emerges before current preschoolers are pushing middle age.
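The room-painting analogy can be put into numbers using what is usually called Amdahl's law: the part of a job that cannot be shared out sets a ceiling on the speed-up, however many workers are added. The sketch below is illustrative only. The function names are invented, and the figures used in the note that follows (ten hours of painting, four hours of drying) are made up to fit the analogy.

```python
def job_hours(paint_hours, dry_hours, painters):
    """Total time: painting is shared between painters, drying is not."""
    return paint_hours / painters + dry_hours


def amdahl_speedup(parallel_fraction, workers):
    """Amdahl's law: speed-up when only part of a job can be parallelised."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)
```

With those invented figures, one painter needs 14 hours and ten painters need 5, a speed-up of 2.8 rather than 10. Even an unlimited supply of friends could not get below the 4 hours of drying time, so the speed-up can never reach 3.5.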
