Replay: ACES high. With the technology looking set to feature prominently at NAB, Red Shark Technical Editor, Phil Rhodes, delves into the complexities of the Academy Color Encoding System (ACES). Part One looks at the problems it sets out to solve.

We haven't talked about the Academy Color Encoding System (ACES) very much before, which is a bit of an omission as I have been rather opportunistic in the past in my use of Red Shark to complain about the lack of standardisation in colorimetry for film and TV workflows. Given that the Academy has just announced the long-awaited version bump to 1.0, however, there's no time like the present.

As we've discussed before, we now have cameras and displays which store images in various ways. At the most basic level, many technologies use red, green and blue primaries to store colour images, but lots of them have different ideas about what red, green and blue mean. We encountered this recently when discussing the Dell UP2414Q quad-HD display, which is supplied configured to Adobe RGB.

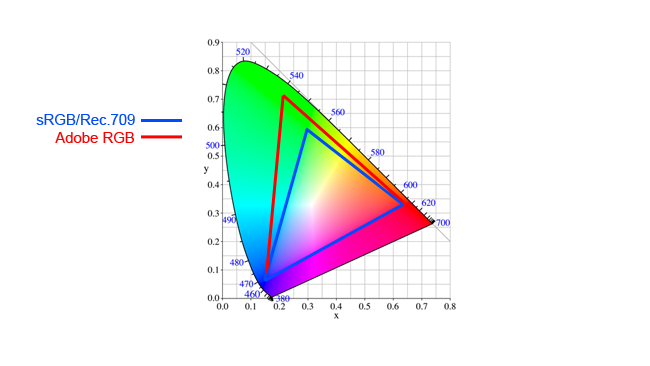

What difference does this make? Well, Adobe RGB uses a deeper, more saturated green than the more usual sRGB desktop display in order to provide a greater colour range. The difference is most obvious when expressed on a CIE diagram, which shows all the colours visible to humans in a horseshoe-shaped area of a graph. RGB colourspaces define a triangle, with the red, green and blue primaries at the corners, so that all the colours within the triangle are “in gamut” for that colourspace. Although this familiar chart is rather non-linear, exaggerating the benefit, we can see that Adobe RGB is considerably more capable in the green (which is a well-known Achilles heel of sRGB and Rec. 709, which use the same RGB colours).

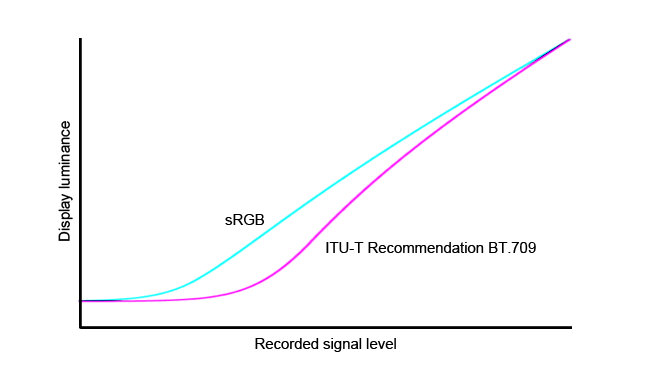
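To make the "inside the triangle" idea concrete, here is a minimal sketch of a gamut test. The xy coordinates are the published CIE 1931 chromaticities of the sRGB and Adobe RGB primaries; the function names and the sample point are our own, chosen purely for illustration.

```python
# CIE 1931 xy chromaticities of each colourspace's primaries.
SRGB = {"R": (0.64, 0.33), "G": (0.30, 0.60), "B": (0.15, 0.06)}
ADOBE_RGB = {"R": (0.64, 0.33), "G": (0.21, 0.71), "B": (0.15, 0.06)}


def _cross(origin, a, b):
    """2D cross product of the vectors origin->a and origin->b."""
    return (a[0] - origin[0]) * (b[1] - origin[1]) - (a[1] - origin[1]) * (b[0] - origin[0])


def in_gamut(xy, primaries):
    """True if chromaticity xy lies inside (or on the edge of) the RGB triangle."""
    r, g, b = primaries["R"], primaries["G"], primaries["B"]
    signs = (_cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy))
    has_negative = any(s < 0 for s in signs)
    has_positive = any(s > 0 for s in signs)
    # The point is inside when it sits on the same side of all three edges.
    return not (has_negative and has_positive)


# A hypothetical saturated green, picked to sit between the two green primaries.
saturated_green = (0.25, 0.65)
print("Adobe RGB:", in_gamut(saturated_green, ADOBE_RGB))
print("sRGB:     ", in_gamut(saturated_green, SRGB))
```

The test only considers chromaticity, so it says nothing about how bright a colour can be; it is simply the triangle on the chart expressed as arithmetic.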

There are also varying interpretations of luma encoding – in many situations, if we encode a pixel value of, say, 100, and compare it to a pixel value of 200, the latter won't actually look twice as bright on everyday displays. We can graph the relationship of the signal sent to a display to the luminance created by that display. Adobe RGB and sRGB each use different relationships, but let's compare sRGB, as used on computer monitors, and ITU-R Recommendation BT.709, as used in HD television. The red, green and blue primaries are the same, but the Rec. 709 curve is much less linear - “darker” - at the bottom than the sRGB curve, because sRGB is designed for viewing in bright office conditions, not the dim environment usual for TV viewing.

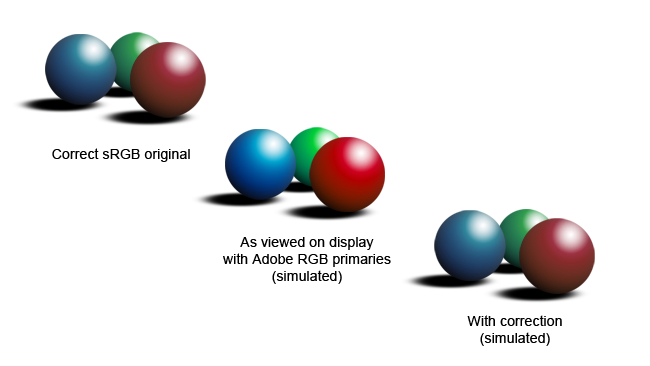
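The 100-versus-200 example can be worked through numerically. The sketch below uses the standard piecewise sRGB decoding formula; for the HD television side it assumes a plain 2.4 power law, as a simplified stand-in for a reference display with a perfect black level. That simplification, and the function names, are ours.

```python
def srgb_to_linear(v):
    """Decode a normalised sRGB signal (0..1) to relative linear light."""
    if v <= 0.04045:
        return v / 12.92  # short linear segment near black
    return ((v + 0.055) / 1.055) ** 2.4


def gamma24_to_linear(v):
    """Assumed HD display response: a pure 2.4 power law (simplification)."""
    return v ** 2.4


for code in (100, 200):
    v = code / 255  # 8-bit code value, treated as full range
    print(code, round(srgb_to_linear(v), 4), round(gamma24_to_linear(v), 4))

# Doubling the code value raises the light output by far more than a factor of two.
ratio = srgb_to_linear(200 / 255) / srgb_to_linear(100 / 255)
print("sRGB light ratio, 200 vs 100:", round(ratio, 2))
```

Near black, the pure power law produces less light than sRGB for the same signal, which is the "darker at the bottom" behaviour described above.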

In general, because of all this, it's easy for cameras, displays and software to get the wrong idea about how an image should look, especially once we factor in the desire of users to modify the image for creative purposes or to make it easier to store a wide range of luma information. Efforts to unify all these things must necessarily involve mathematical ways of converting image data encoded using one particular set of RGB primaries and luma encoding into another.

The Dell monitor we mentioned above, for instance, has the ability to behave as an sRGB display. It can't physically alter the colour of the red, green and blue filters which form a physical part of its display panel, so it solves the problem mathematically, by (broadly speaking) slightly desaturating the input image, moving the red, green and blue values of each pixel very slightly toward one another. The result is an image that simply doesn't use the outer reaches of saturation of which the display is capable, creating results similar to what the display would have looked like if it'd had sRGB primaries in the first place.
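We don't know exactly what Dell's electronics do, but the textbook version of this conversion is a 3×3 matrix derived from the two sets of primaries and a shared white point. The following is a sketch under those assumptions: it uses the published chromaticities and the D65 white point common to both standards, works on linear-light values (so the luma curve must be removed first), and all the helper names are ours.

```python
D65 = (0.3127, 0.3290)
SRGB_PRIMARIES = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))   # R, G, B
ADOBE_PRIMARIES = ((0.64, 0.33), (0.21, 0.71), (0.15, 0.06))  # R, G, B


def xy_to_xyz(xy):
    """Chromaticity to XYZ at unit luminance (Y = 1)."""
    x, y = xy
    return (x / y, 1.0, (1 - x - y) / y)


def mat_vec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def inverse(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]


def rgb_to_xyz_matrix(primaries, white):
    """Build the linear RGB -> XYZ matrix for a set of primaries and a white point."""
    cols = [xy_to_xyz(p) for p in primaries]
    p = [[cols[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    scale = mat_vec(inverse(p), xy_to_xyz(white))           # so R=G=B=1 gives white
    return [[p[i][j] * scale[j] for j in range(3)] for i in range(3)]


# sRGB -> XYZ, then XYZ -> Adobe RGB, folded into a single matrix.
SRGB_TO_ADOBE = mat_mul(inverse(rgb_to_xyz_matrix(ADOBE_PRIMARIES, D65)),
                        rgb_to_xyz_matrix(SRGB_PRIMARIES, D65))

green_in_adobe = mat_vec(SRGB_TO_ADOBE, (0.0, 1.0, 0.0))
white_in_adobe = mat_vec(SRGB_TO_ADOBE, (1.0, 1.0, 1.0))
print("sRGB green on Adobe RGB primaries:", [round(c, 4) for c in green_in_adobe])
```

Pure sRGB green ends up with some red and a little blue mixed in, which is the "moving the values toward one another" effect: the wider-gamut panel is deliberately driven short of its most saturated green.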

This comparison of sRGB and Adobe RGB shows there are at least two complex things to keep track of in any attempt to ensure colour accuracy. The problem with this is that we are required to know what's coming out of (say) a camera, and what a monitor expects. That information isn't encoded into the signal - we must usually make the selection manually.

This is one of the problems with using computer monitors as video displays: cameras, by default, often send out pictures in the ITU's Rec. 709 colourspace, whereas monitors expect sRGB. It's possible to use a device such as a Blackmagic HDLink, loaded with the appropriate mathematical transformation, to turn the Rec. 709 picture into something close to sRGB, but we still have to know exactly what the camera is putting out, and exactly what the monitor expects. Add to this the fact that most cameras and many monitors have extensive colorimetry options internally, and we need to know a lot about the setup before we can be sure that what we're seeing is accurate.
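As an illustration of the kind of transformation such a box might be loaded with, here is a sketch of a one-dimensional lookup table. It is not Blackmagic's format or method. It assumes a normalised, full-range signal, assumes the Rec. 709 picture was intended for a 2.4-gamma display, and relies on the two standards sharing primaries so that only the curve needs changing. The function names and table size are ours.

```python
def linear_to_srgb(l):
    """Encode relative linear light (0..1) as a normalised sRGB signal."""
    if l <= 0.0031308:
        return 12.92 * l
    return 1.055 * l ** (1 / 2.4) - 0.055


def build_lut(size=1024, display_gamma=2.4):
    """Table mapping a video signal to the sRGB signal giving the same light output."""
    lut = []
    for i in range(size):
        v = i / (size - 1)
        linear = v ** display_gamma  # light the assumed video display would emit
        lut.append(linear_to_srgb(linear))
    return lut


def apply_lut(lut, v):
    """Look up a normalised value with linear interpolation between entries."""
    pos = min(max(v, 0.0), 1.0) * (len(lut) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(lut) - 1)
    frac = pos - lo
    return lut[lo] * (1 - frac) + lut[hi] * frac


lut = build_lut()
for signal in (0.1, 0.5, 0.9):
    print(signal, "->", round(apply_lut(lut, signal), 4))
```

Every number in that table depends on the assumptions listed above, which is exactly the point: change what the camera is really sending, or what the monitor really expects, and the table is wrong.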

Crucially, it is easy to create a configuration that is wrong but doesn't obviously look wrong. Over the course of an entire shooting and postproduction workflow, involving the complexities of camera setup, on-set monitoring with calibration and creative looks, proxy generation, monitoring in the edit, VFX, grading and generation of deliverables, this situation can become extremely complex.

