Coming hot on the heels of two massive announcements last year, last week Nvidia and Cerebras showed yet again that the pace of computing is still accelerating.

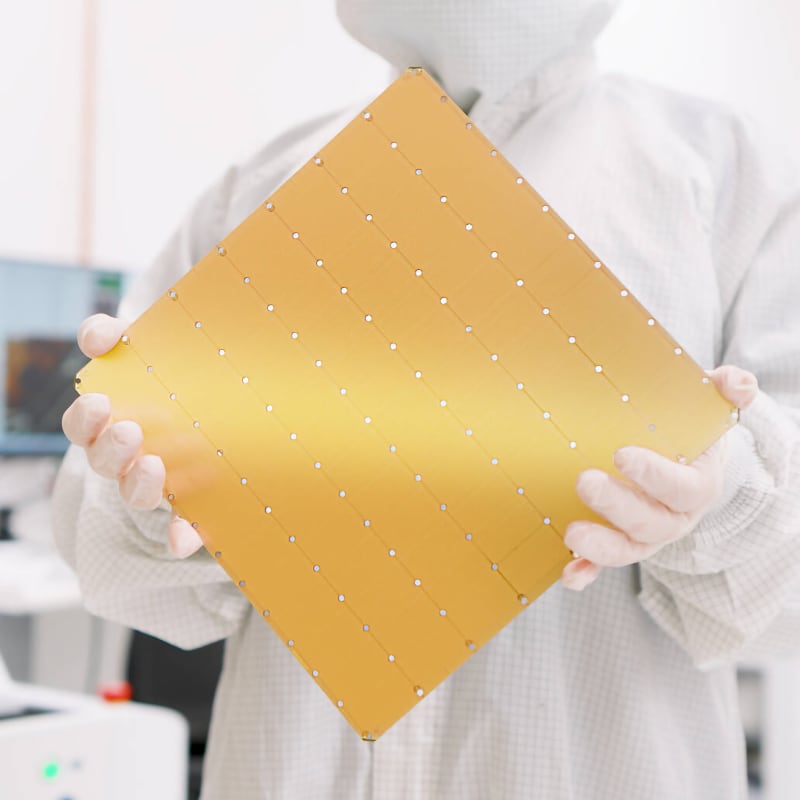

The first CS-2-based Condor Galaxy AI supercomputers went online in late 2023, and Cerebras is already unveiling the CS-2's successor, the CS-3. It is built on the newly launched Wafer Scale Engine 3 (WSE-3), an update to the WSE-2 fabricated on a 5nm process and boasting a staggering 900,000 AI-optimized cores with sparse compute support. Cerebras has also teamed up with Qualcomm so that models trained on CS-3 can be run for inference on Qualcomm's Cloud AI 100 Ultra accelerators.

Note: sparse compute is an optimization that exploits the fact that multiplying by zero always yields zero. Any multiplication with a zero operand can be skipped without changing the result, which can deliver a huge speedup on sparse data sets such as neural networks, where many weights or activations are zero.
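A minimal Python sketch of the principle, not of how Cerebras implements it in hardware: store only the non-zero weights and skip every multiply whose operand would be zero. The function names and sample values are illustrative.

```python
from typing import List, Tuple


def to_sparse(values: List[float]) -> List[Tuple[int, float]]:
    """Keep only the non-zero entries, as (index, value) pairs."""
    return [(i, v) for i, v in enumerate(values) if v != 0.0]


def dense_dot(a: List[float], b: List[float]) -> Tuple[float, int]:
    """Multiply every pair, zeros included; return (result, multiplies performed)."""
    total, multiplies = 0.0, 0
    for x, y in zip(a, b):
        total += x * y
        multiplies += 1
    return total, multiplies


def sparse_dot(a_nonzeros: List[Tuple[int, float]], b: List[float]) -> Tuple[float, int]:
    """Multiply only at a's non-zero positions; every skipped product would be zero."""
    total, multiplies = 0.0, 0
    for i, v in a_nonzeros:
        total += v * b[i]
        multiplies += 1
    return total, multiplies


weights = [0.0, 0.5, 0.0, 0.0, -1.25, 0.0, 0.0, 2.0]
activations = [3.0, 4.0, 1.0, 7.0, 2.0, 5.0, 6.0, 0.5]

dense_result, dense_ops = dense_dot(weights, activations)
sparse_result, sparse_ops = sparse_dot(to_sparse(weights), activations)
print(dense_result, dense_ops)    # prints: 0.5 8
print(sparse_result, sparse_ops)  # prints: 0.5 3
```

Three multiplies replace eight and the answer is unchanged; the larger the fraction of zeros, the bigger the saving.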

Cerebras has updated its SwarmX interconnect to support clusters of up to 2048 CS-3 systems, which can be configured with up to 1200 terabytes of external memory and deliver an aggregate computational throughput of up to a quarter of a zettaflop (256 exaflops).

Impressively, Cerebras says all of that extra computing power comes at no extra cost: the CS-3 is twice as fast as the CS-2, yet has the same power draw and the same price.

The Condor Galaxy 3 will be the first CS-3-based AI supercomputer to become fully operational, and is scheduled for Q2 2024.

What sort of speed boost are we going to see? Put it this way: Meta’s Llama2-70B large language model took approximately a month to train on Meta’s GPU cluster. Cerebras reckons that a full cluster of 2048 CS-3s, delivering 256 exaflops of AI compute, could train Llama2-70B from scratch in less than a day.
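The headline numbers follow from simple multiplication. This sketch assumes Cerebras's published rating of 125 petaflops of peak AI compute per CS-3 (a figure not stated in this article) and takes "approximately a month" as 30 days; both are labeled assumptions.

```python
# Assumption: 125 petaflops of peak AI compute per CS-3, Cerebras's published rating.
PETAFLOPS_PER_CS3 = 125
MAX_CS3_SYSTEMS = 2048  # largest SwarmX cluster, per the article


def cluster_exaflops(systems: int, petaflops_per_system: float = PETAFLOPS_PER_CS3) -> float:
    """Aggregate peak AI throughput in exaflops (1 exaflop = 1,000 petaflops)."""
    return systems * petaflops_per_system / 1000


full_cluster = cluster_exaflops(MAX_CS3_SYSTEMS)
print(f"{MAX_CS3_SYSTEMS} CS-3 systems: {full_cluster:g} exaflops "
      f"= {full_cluster / 1000:g} zettaflops")

# Assumption: "approximately a month" on GPUs taken as 30 days; Cerebras claims under 1 day.
GPU_TRAINING_DAYS = 30
CS3_TRAINING_DAYS = 1
print(f"Implied speedup: more than {GPU_TRAINING_DAYS / CS3_TRAINING_DAYS:g}x")
```

Because "less than a day" is an upper bound, the implied speedup over the month-long GPU run is at least roughly 30x.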

I am AI: 2024 Edition

Meanwhile, last week Nvidia held its first in-person GPU Technology Conference (GTC) since the pandemic began, with showman and CEO Jensen Huang kicking it off with his trademark keynote at San Jose's SAP Center, because attendance was too large for the usual venue.

Last year Nvidia introduced Grace Hopper, which teams a Grace CPU with 72 Arm Neoverse cores with a monstrous Hopper GPU. Its DGX GH200 system links 256 of these superchips into a shared-memory GPU that delivers a whopping exaflop of AI throughput, rivalling the most powerful supercomputers in the world.

This year Nvidia introduced Hopper's successor, Blackwell, which packages two GPU dies together, each as big as the largest single die TSMC can make. The dies are joined by a fully coherent 10 TB/s interconnect and share 192 GB of on-package HBM3e memory, so the pair functions like a single, enormous GPU.

Grace Blackwell combines a 72-core Grace CPU with two of the newly minted dual-die Blackwell GPUs. It is backed by a new Quantum InfiniBand network and an upgraded NVLink with an aggregate throughput of 230.4 TB/s, supporting up to 576 GPUs per NVLink network.

In addition to being built on a custom TSMC 4NP process (a 5nm-class node) and major architectural improvements, Blackwell also has an enhanced Transformer Engine. Because AI does not always require high-precision math, transformer engines reduce precision wherever they can to boost computational throughput, and Blackwell adds the seemingly bizarre 4-bit float to the mix. A 256-bit vector can hold four 64-bit floats, eight 32-bit floats, or 64 4-bit floats.
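A small Python sketch makes the packing arithmetic and the strangeness of a 4-bit float concrete. Lane counts are just the vector width divided by the element width. The decoder assumes the E2M1 layout (1 sign bit, 2 exponent bits with a bias of 1, 1 mantissa bit) defined for FP4 elements in the OCP Microscaling (MX) specification; that Blackwell's FP4 matches it exactly is an assumption, and the per-block scale factors such formats pair with these values are not shown.

```python
VECTOR_BITS = 256


def lanes(element_bits: int, vector_bits: int = VECTOR_BITS) -> int:
    """How many elements of a given width fit in one vector."""
    return vector_bits // element_bits


for bits in (64, 32, 16, 8, 4):
    print(f"FP{bits}: {lanes(bits)} values per {VECTOR_BITS}-bit vector")


def decode_e2m1(code: int) -> float:
    """Decode a 4-bit E2M1 float (assumed layout): sign, 2 exponent bits (bias 1), 1 mantissa bit."""
    sign = -1.0 if code & 0b1000 else 1.0
    exponent = (code >> 1) & 0b11
    mantissa = code & 0b1
    if exponent == 0:
        # Subnormal: no implicit leading 1; scale is 2**(1 - bias) = 1.
        return sign * (mantissa * 0.5)
    return sign * (1.0 + mantissa * 0.5) * 2.0 ** (exponent - 1)


# The eight non-negative codes are the only magnitudes an FP4 value can take.
positive = sorted({decode_e2m1(code) for code in range(8)})
print(positive)  # prints: [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
```

With only eight magnitudes available, FP4 is useful only because neural networks tolerate very coarse rounding of many values.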

Expect more detailed and accurate weather forecasts and faster drug discovery as a result of Blackwell. AWS, Google Cloud, Microsoft Azure and Oracle Cloud have already placed orders for systems.

Nvidia is also pushing very hard on Omniverse, which CEO Jensen Huang views as the operating system for AI. Omniverse hosts the massive, detailed simulations that serve as training grounds for AIs, such as those behind self-driving cars, robotic factory workers, and so on. Omniverse can now also stream scenes to Apple Vision Pro using OpenUSD and Nvidia’s own Graphics Delivery Network, which might give a few more people a reason to splash out $3,500 on a headset.

Huang finished in bravura fashion by inviting two (mostly cooperative) autonomous robots onto the stage to demonstrate what Nvidia's technology is enabling in robotics, and the company has established Project GR00T (Generalist Robot 00 Technology) to drive that work forward. He reckons that what he calls a ‘ChatGPT moment’ for robotics could be just around the corner, which would certainly be interesting.

Singular acceleration

Some stats to finish off.

Fujitsu’s Fugaku supercomputer held the top spot on the TOP500 supercomputer list for two years, until the HPE Cray Frontier went online with 8,699,904 cores across its AMD EPYC processors and AMD Instinct GPUs, rated at 1.194 exaflops.

The Grace Hopper-based DGX GH200, released last year, reaches 1 exaflop.

The Cerebras Condor Galaxy 3, built from 64 CS-3 systems, will deliver 8 exaflops, and a full 2048-system CS-3 cluster can deliver 256 exaflops.

A single Grace Blackwell rack delivers 1 exaflop. A fully outfitted datacenter can deliver as much as 768 exaflops.

When Ray Kurzweil predicted the Singularity, it seemed far off, still the stuff of science fiction. When OpenAI released ChatGPT, the Singularity looked a lot closer. Now it seems that we are inside the event horizon.
