A new micro-imaging lens system, called Neural Nano-Optics, has been developed by Princeton University, giving rise to a host of potential practical applications.

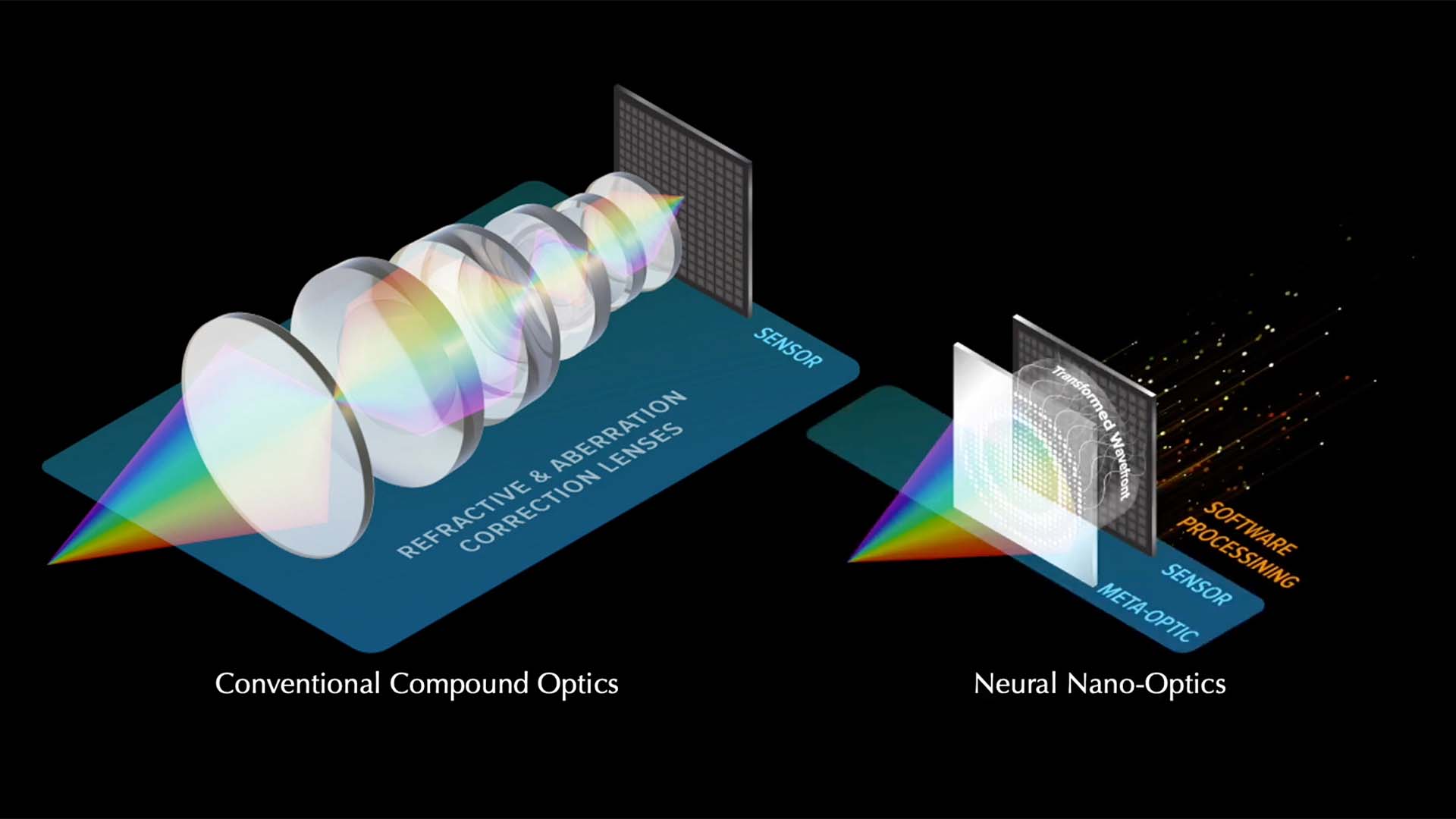

We've remarked before, often in the context of lightfield arrays, that there has been no fundamental change in how cameras work since they were invented, and that was a while ago. Unfortunately, it's also been a while since lightfields were being seriously pushed for film and TV camerawork, and the incumbent approach, with conventional lenses and sensors, seemed reprieved from any realistic prospect of change.

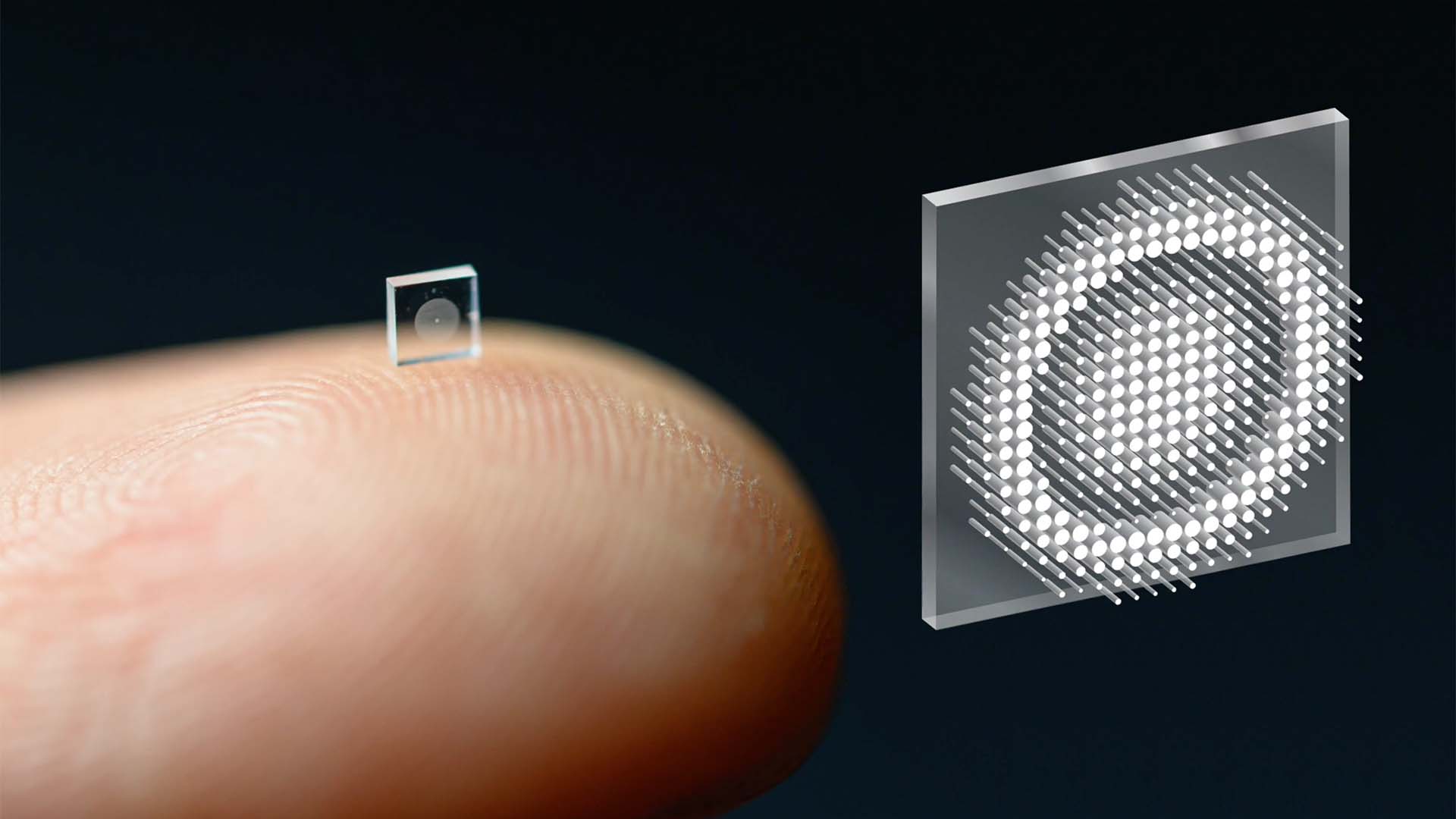

It'll take a lot to alter that, but one such prospect is the work recently published by Ethan Tseng, Shane Colburn and James Whitehead and their team at Princeton. In stark contrast to the staggeringly giant X-ray sensor recently featured on a prominent YouTube channel, Tseng et al. seem to be interested in making absolutely the smallest possible camera, and achieved a lens with an effective stop of f/2 with an aperture of - wait for it - 0.5mm.
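Those two figures pin down the focal length, assuming the quoted f/2 follows the usual definition of f-number as focal length divided by aperture diameter. A quick check in Python (the function name is ours, purely for illustration):

```python
def focal_length_mm(f_number, aperture_diameter_mm):
    """f-number N = focal length / aperture diameter, so focal length = N * D."""
    return f_number * aperture_diameter_mm

# Figures quoted above: f/2 with a 0.5mm aperture.
print(focal_length_mm(2.0, 0.5))  # 1.0
```

On that assumption, the lens has a focal length of about a millimetre.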

The idea of a very small camera is not entirely new. Really small imaging sensors aren't the problem; using photosites that tiny might limit performance, but it's certainly possible. Trying to make such small conventional lenses doesn't work very well, though, and for a while people have been toying with the idea of using tiny structures sized to suit the wavelength of visible light to act as a sort of submicroscopic antenna. Because they need to interact with light on the wavelength scale, some of them only worked with monochromatic light - of a single wavelength - which is not only difficult to set up, but means full colour imaging is impossible.

Image: Princeton University.

Neural Nano-Optics

Solving those problems has involved some cleverness around both the design of the lens element itself and the application of what's referred to as "Neural Nano-Optics". If anyone else's buzzword detector has activated its most deafening warning klaxon at the inclusion of the word "neural," sorry, and that's entirely understandable, though this application seems reasonable. There have been some genuine and interesting applications of machine learning (which we shouldn't necessarily think of as synonymous with artificial intelligence) in image reconstruction, and it's not that surprising to find it in a situation like this.

What we can glean from this is that the images cast by the optical assembly are not directly viewable; rather, they represent a pattern of information from which a more conventional image can be reconstructed. The optical element itself - the lens - is sometimes drawn as a pattern of rod-shaped protrusions whose diameter varies according to what looks at least a bit like a two-dimensional Ricker wavelet pattern; as such, the variation in rod size does describe something that looks at least a bit like a conventional lens, although the specifics are buried inside the trickier mathematics of the paper.
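To give a feel for that shape, here is a minimal sketch of a two-dimensional Ricker ("Mexican hat") wavelet and a made-up mapping from its value to a rod diameter. This is only an illustration of the resemblance described above: the function names, the linear mapping and the `d_min` and `d_max` values are our assumptions, and the real element is the product of an optimisation process, not a formula like this.

```python
import math

def ricker_2d(x, y, sigma=1.0):
    """Unnormalised 2D Ricker ("Mexican hat") wavelet centred on the origin."""
    s = (x * x + y * y) / (2.0 * sigma * sigma)
    return (1.0 - s) * math.exp(-s)

# The profile runs from -exp(-2) at its lowest ring up to 1.0 at the centre.
PROFILE_MIN = -math.exp(-2.0)
PROFILE_MAX = 1.0

def rod_diameter(x, y, d_min=0.1, d_max=0.3, sigma=1.0):
    """Hypothetical linear mapping from wavelet value to rod diameter.

    d_min and d_max are made-up values in arbitrary units, not from the paper.
    """
    t = (ricker_2d(x, y, sigma) - PROFILE_MIN) / (PROFILE_MAX - PROFILE_MIN)
    return d_min + t * (d_max - d_min)

# Print one row of rods across the middle of the element.
for x in [-3, -2, -1, 0, 1, 2, 3]:
    print(x, round(rod_diameter(x, 0), 3))
```

The widest rods sit at the centre, shrink to a minimum in a ring around it, then recover slightly further out, which is roughly the look of the published sketches.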

Reportedly, though, the lens will have taken a lot more design effort than simply making a pretty shape; while the sketches show a hundred or so rods, the real device has millions, designed to generate optical effects in the specific knowledge that those effects can be decoded efficiently. The authors refer to "inverse design techniques," which allow these optical components to be developed based on knowledge of the techniques that will later be used to construct a viewable image. What's new is doing this in such a way that the resulting optics have good performance for a broad range of visible light and can be used to create approximately normal-looking colour images.
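The general principle, that the sensor records an encoded pattern which is decoded using knowledge of how the optics scrambled it, can be shown with a toy example. The sketch below is not the authors' method: it swaps their learned reconstruction for a classical Wiener filter, works on a one-dimensional signal, and uses an invented point spread function (`psf`) and scene.

```python
import cmath

def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform; fine for a toy signal."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = 0j
        for t, v in enumerate(values):
            total += v * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(total / n if inverse else total)
    return out

def circular_blur(signal, psf):
    """Simulate the optics: circularly convolve the scene with the PSF."""
    n = len(signal)
    return [sum(signal[(i - j) % n] * psf[j] for j in range(n)) for i in range(n)]

def wiener_deconvolve(measured, psf, noise_to_signal=1e-6):
    """Decode the measurement using knowledge of the PSF (Wiener filter)."""
    measured_f = dft(measured)
    psf_f = dft(psf)
    restored_f = [
        m * h.conjugate() / (abs(h) ** 2 + noise_to_signal)
        for m, h in zip(measured_f, psf_f)
    ]
    return [v.real for v in dft(restored_f, inverse=True)]

# Invented example data: two point sources and a three-tap blur.
scene = [0, 0, 1, 0, 0, 0, 2, 0]
psf = [0.6, 0.3, 0.1, 0, 0, 0, 0, 0]

measured = circular_blur(scene, psf)        # what the sensor would record
decoded = wiener_deconvolve(measured, psf)  # what the viewer would see

print([round(v, 3) for v in measured])
print([round(v, 3) for v in decoded])
```

Inverse design goes a step further than this: rather than taking the blur as given, the optics themselves are shaped so that a decoder of this general kind has the easiest possible job.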

Image: Princeton University.

Applications

The application for extremely small cameras is, in the first instance, medical; swallowable cameras of more conventional construction are already used to make gastro-intestinal observations. There's hope that these lenses should be inexpensive to produce, being manufactured using conventional semiconductor photolithography techniques, with some mention of the shortwave ultraviolet process used to achieve the very smallest feature sizes in common microelectronics. That's in short supply right now, although it's something that's likely to ramp up significantly in capacity over the next few years.

What application has this to cinematography? Well, in a world where we fetishise huge sensors and classic lens designs, instinctively, not a lot, although there has been some fairly qualified comment around the idea of using them for cellphones. Whether that's because the design has some intrinsic suitability (other than small size) or because there's simply a keenness to address a vast market is hard to tell. The idea of using very large numbers of very small cameras to build lightfield arrays with extremely small inter-lens distances is a tantalising bit of speculation.

This is something that's still at the very earliest stages of being proven in the lab, and not even beyond the stage of guessing wildly at potential applications. Still, even a trial run of something like this would have required expensive access to a semiconductor foundry and as such there's clearly some confidence in its usefulness. All we can do is, once again, wonder at a future in which the front end of all cameras is a blank slab of optical input area, and one of the menus lets us choose which lens we'd like to simulate this week.

