In the quest to make machines that can think like a human or solve problems beyond human ability, it seems our brain provides the best blueprint.

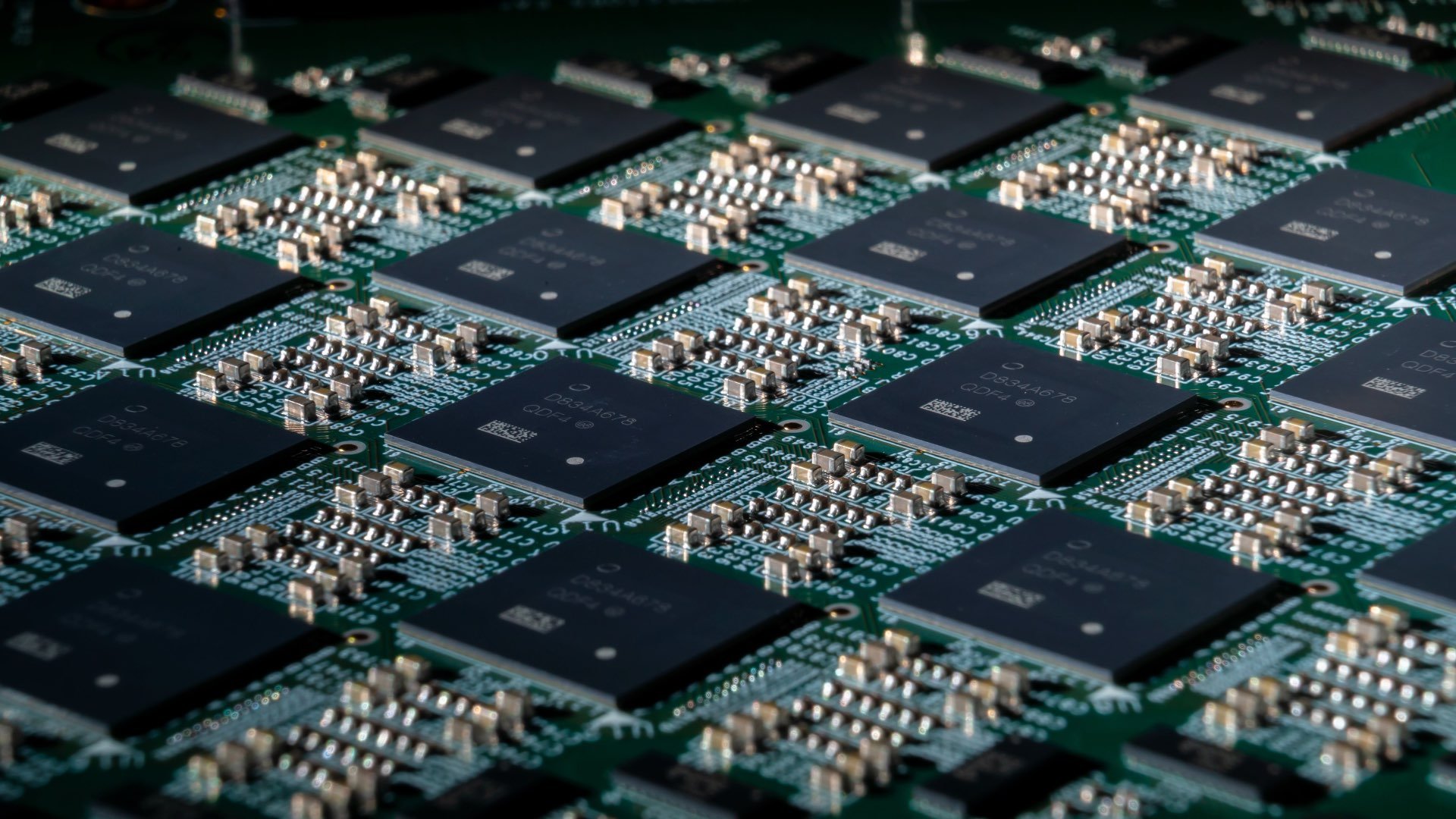

Scientists have long sought to mimic how the brain works using software programs known as neural networks and hardware such as neuromorphic chips.

Last month we reported on attempts to make the first quantum neuromorphic computers using a component called a quantum memristor, which exhibited memory by simulating the firing of a brain’s neurons.

Going a more Cronenbergian route, Elon Musk (and others) are experimenting with hardwiring chips into a person’s neural network to control technology remotely via brainwaves.

Now, computer scientists at Graz University of Technology in Austria have demonstrated how neuromorphic chips can run AI algorithms using just a fraction of the energy consumed by ordinary chips. Again, it is the memory element of the chip that has been modelled on the human brain, and it was found to be up to 1000 times more energy efficient than conventional approaches.

As explained in the journal Science, current long short-term memory (LSTM) networks running on conventional computer chips are highly accurate. But the chips are power hungry. To process information, they must first retrieve individual bits of stored data, manipulate them, and then send them back to storage, repeating that sequence over and over.

At Graz University, they’ve sought to replicate a memory storage mechanism in our brains known as after-hyperpolarizing (AHP) currents. By integrating an AHP neuron firing pattern into neuromorphic neural network software, the Graz team ran the network through two standard AI tests.
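To give a feel for how a slow current can act as a memory, here is a minimal sketch of a leaky integrate-and-fire neuron with an AHP-like current. It is not the Graz team's model: the function name `simulate_ahp_neuron` and every parameter value are assumptions chosen purely for illustration. Each spike adds to a slowly decaying current that makes the next spike harder to trigger, so the neuron's recent activity stays "stored" long after the spike itself.

```python
def simulate_ahp_neuron(input_current, dt=1.0, tau_mem=20.0, tau_ahp=500.0,
                        threshold=1.0, ahp_jump=0.3):
    """Leaky integrate-and-fire neuron with a slow AHP-like current.

    All parameter values are illustrative assumptions, not taken from the paper.
    Returns (spike_steps, ahp_trace).
    """
    v = 0.0          # membrane potential
    ahp = 0.0        # after-hyperpolarizing current (slow, inhibitory)
    spike_steps = []
    ahp_trace = []
    for step, i_in in enumerate(input_current):
        # The AHP current decays slowly, so it carries a trace of past spikes.
        ahp -= dt * ahp / tau_ahp
        # The membrane leaks toward the input level, reduced by the AHP current.
        v += dt * (-v + i_in - ahp) / tau_mem
        if v >= threshold:
            spike_steps.append(step)
            v = 0.0              # reset after a spike
            ahp += ahp_jump      # each spike strengthens the AHP current
        ahp_trace.append(ahp)
    return spike_steps, ahp_trace


# Constant input: the gaps between spikes grow as the AHP current builds up.
spikes, trace = simulate_ahp_neuron([1.5] * 2000)
intervals = [b - a for a, b in zip(spikes, spikes[1:])]
print("spike steps:", spikes)
print("intervals between spikes:", intervals)
```

With `ahp_jump=0.0` the same neuron fires at perfectly regular intervals, which is one way to see that the slow current alone is what carries information about past activity in this toy version.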

The first challenge was to recognise a handwritten ‘3’ in an image broken into hundreds of individual pixels. Here, they found that when run on one of Intel’s neuromorphic Loihi chips, their algorithm was up to 1000 times more energy efficient than LSTM-based image recognition algorithms run on conventional chips.

In a second test, in which the computer needed to answer questions about the meaning of stories up to 20 sentences long, the neuromorphic setup was as much as 16 times as efficient as algorithms run on conventional computer processors, the authors report in Nature Machine Intelligence.

As always, the breakthrough is still some way from making a real-world impact. Neuromorphic chips won’t be commercially available for some time, but advanced AI algorithms could help these chips gain a commercial foothold.

“At the very least, that would help speed up AI systems,” says Anton Arkhipov, a computational neuroscientist at the Allen Institute, speaking to Science.

The Graz University project leader Wolfgang Maass speculates that the breakthrough could lead to novel applications, such as AI digital assistants that not only prompt someone with the name of a person in a photo, but also remind them where they met and relate stories of their past together.
