Anyone who wants a laptop with a twenty-hour battery life, raise your right hand. And stop snickering, recent MacBook owners.

Let's start with the basics: there are laptops out there that will match or exceed the performance of anything Apple sells; they just won't do it for more than about eight hours in a row. A midrange M1 in a MacBook performs broadly like a decent mobile Intel Core i7. Its GPU is considerably faster than the sort of integrated graphics found in most laptops, although not as fast as the higher-end Nvidia mobile GPUs. In general, that makes most current MacBooks very well specified for the things most laptops are actually used for. Unless you're doing some extremely heavy lifting, an M1 will do the job nicely, and probably better than many Windows laptops, at least those without a discrete GPU.

The big benefit, though - the one that made it worthwhile for Apple to spend a sum that was certainly eight figures, and possibly nine, to have M1 made - is the low power consumption, and that's something other laptop owners might look upon with hungry eyes. Power efficiency matters almost everywhere: most obviously in laptops, in workstations too, and even more in the server farms that support cloud computing. The demand for something like M1 in the mass market is clear.
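To put the twenty-hour figure in perspective, battery life is simply battery capacity divided by the machine's average power draw. A minimal sketch, assuming a hypothetical 50 Wh battery (a round number chosen purely for illustration) and ignoring conversion losses and battery ageing:

```python
def average_power_budget_w(battery_wh: float, target_hours: float) -> float:
    """Average power (watts) a battery of `battery_wh` watt-hours can supply
    for `target_hours` hours, ignoring conversion losses and battery ageing."""
    if target_hours <= 0:
        raise ValueError("target_hours must be positive")
    return battery_wh / target_hours


# Hypothetical battery size, chosen only for illustration.
BATTERY_WH = 50.0

for hours in (8, 20):
    budget = average_power_budget_w(BATTERY_WH, hours)
    print(f"{hours:>2} h on {BATTERY_WH:.0f} Wh -> {budget:.2f} W average draw")
```

With that battery, the eight-hour machine can average 6.25 W for the whole system, while the twenty-hour one has to average 2.5 W, screen included.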

The question, then, is whether that's possible - or rather, given that it clearly is, what the barriers are, what we're likely to get, and when we'll get it. It has been tried more than once before, but it has been difficult to use existing semiconductor manufacturing processes to build ARM cores with enough individual performance for a modern workstation. That has meant designs with high core counts, and getting the best out of those can be challenging from a software-engineering perspective. So if the star of the show is the power consumption, one key enabling advance in M1 is that its per-core performance is good enough for a part with fairly conventional core counts, so software should not need massive changes to run well on it.
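The software difficulty with many-core designs is often framed in terms of Amdahl's law: the serial part of a job gets no faster however many cores you add. A minimal sketch comparing two hypothetical designs, 32 cores at half speed versus 8 full-speed cores; the core counts and per-core speeds are invented purely for illustration:

```python
def amdahl_throughput(parallel_fraction: float, cores: int, per_core_speed: float) -> float:
    """Throughput relative to one reference core (1.0), per Amdahl's law.

    parallel_fraction: share of the work that can be spread across cores (0..1).
    per_core_speed: speed of each core relative to the reference core.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    # Serial part runs on one core; parallel part is split evenly across all cores.
    elapsed = (serial + parallel_fraction / cores) / per_core_speed
    return 1.0 / elapsed


# Hypothetical designs: many slow cores versus fewer fast ones.
MANY_SLOW = {"cores": 32, "per_core_speed": 0.5}
FEW_FAST = {"cores": 8, "per_core_speed": 1.0}

for p in (0.5, 0.9, 0.99):
    slow = amdahl_throughput(p, **MANY_SLOW)
    fast = amdahl_throughput(p, **FEW_FAST)
    print(f"parallel fraction {p:.2f}: 32 slow cores {slow:5.2f}x, 8 fast cores {fast:5.2f}x")
```

With these made-up numbers the fewer, faster cores win at 50% and 90% parallel work; only in the 99% case does the 32-core design come out ahead. Getting real software that close to perfectly parallel is exactly the kind of engineering effort a strong per-core design lets you avoid.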

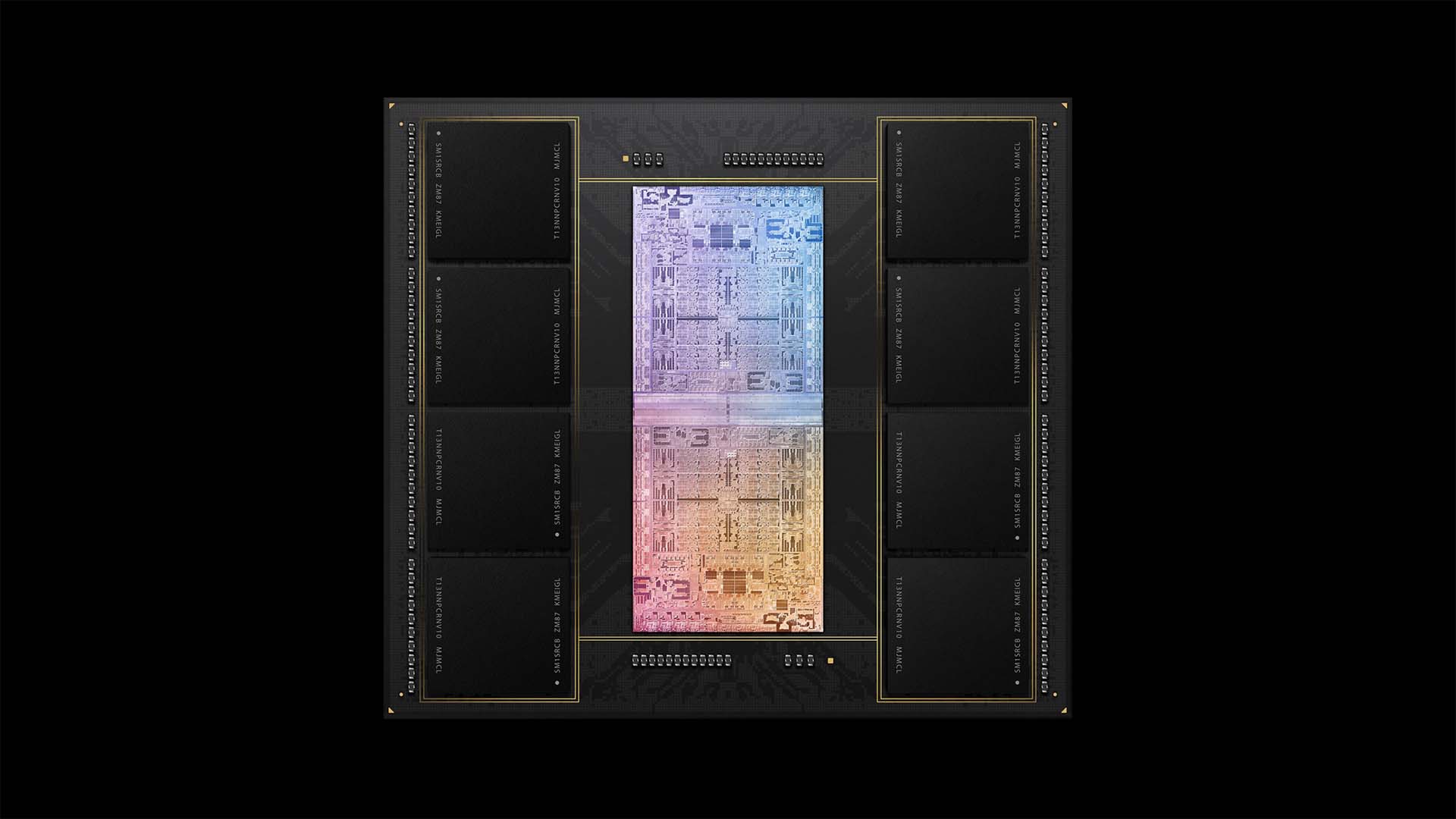

With those subtleties in mind, let's look at what M1 actually is. Depending on the variant and binning, the CPU region contains between two and four high-efficiency cores (fewer on the higher-end parts) and between four and eight high-performance cores (more on the higher-end parts). These are fundamentally just ARMv8 CPU cores wearing a backwards baseball cap and a dollar-sign medallion, although they are likely descended from some quite advanced work Apple has done on 64-bit ARM designs over the last nine or ten years (look up the A7 SoC and its Cyclone microarchitecture). It's a fair bet that the company leveraged its experience making phone processors to build M1, which says something about who is likely to manufacture it (hint: not Intel).
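Having two kinds of core only pays off if the operating system puts the right work on the right cluster. The real scheduler is far more involved; the toy policy below is purely hypothetical (the `Task`, `Cluster` and `place` names and the `latency_sensitive` flag are all invented for illustration), but it shows the basic idea of keeping background work on the efficiency cores and reserving performance cores for work someone is waiting on:

```python
from dataclasses import dataclass
from enum import Enum


class Cluster(Enum):
    EFFICIENCY = "E"
    PERFORMANCE = "P"


@dataclass
class Task:
    name: str
    latency_sensitive: bool  # hypothetical flag: e.g. UI work vs. background sync


def place(task: Task, free_p_cores: int) -> Cluster:
    """Toy policy: latency-sensitive work gets a performance core if one is free;
    background work, and anything that overflows, runs on efficiency cores."""
    if task.latency_sensitive and free_p_cores > 0:
        return Cluster.PERFORMANCE
    return Cluster.EFFICIENCY


free = 1  # pretend only one performance core is idle
for t in [Task("redraw window", True), Task("index mail", False), Task("compile", True)]:
    cluster = place(t, free)
    if cluster is Cluster.PERFORMANCE:
        free -= 1  # that core is now busy
    print(f"{t.name:>13} -> {cluster.value}")
```

The overflow rule is a deliberate simplification: when every performance core is busy, urgent work falls back to an efficiency core rather than waiting.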

The GPU

The GPU part of the device is harder to characterise, much as it's hard to compare AMD Radeon directly with Nvidia RTX on core counts alone. The best estimates put many M1 parts somewhere between a very high-end PCIe graphics card and the feeble integrated graphics of a low-cost laptop, probably about two thirds of the way up that range; that's enough for some moderately heavy lifting, as so many GPUs now are, but it's not a high-end option.

Another very significant technical difference between an M1-based laptop and one built around Intel's finest, though, is perhaps the one least often discussed: M1 is a system-on-chip with a unified memory architecture. Briefly, that means the GPU and CPU both work from the same pool of memory. This has sometimes been done simply to reduce costs on low-end machines, and other operating systems have run happily on shared-memory devices for years, but it's something of a double-edged sword.

Most significantly, it means that video frames written into memory by a DMA-capable device, such as a capture card that can write directly to memory without CPU involvement, do not need to be copied into the GPU's memory before the GPU can get to work on them. This is how phones work, and it can be a boon to performance, conserving both video and main memory bandwidth and reducing bus traffic. The flip side is that any pool of memory has only so much bandwidth, so the CPU and GPU can end up contending for it, depending on the tasks assigned to each (a problem at least since, hilariously, the Commodore 64). Practical considerations such as size, cost, power consumption and ease of manufacturing intrude significantly here, which makes it hard to summarise, but there are certainly big upsides and big downsides, and which of them dominates depends heavily on the task at hand.
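To get a feel for the copy traffic a unified design avoids, here is a back-of-envelope sketch. It assumes a hypothetical uncompressed 4K capture at 60 frames per second with 4 bytes per pixel (8-bit RGBA), and a discrete-GPU system that makes exactly one staging copy per frame; real capture formats, such as 2-byte-per-pixel YUV, would change the numbers:

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed video frame in bytes."""
    return width * height * bytes_per_pixel


def staging_copy_rate(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Bytes per second read from main memory, and written again into the GPU's
    own memory, if every captured frame must be copied before a discrete GPU
    can use it. With unified memory the GPU reads the captured buffer in place,
    so this is the copy traffic avoided (assumes one copy per frame)."""
    return frame_bytes(width, height, bytes_per_pixel) * fps


# Hypothetical capture: 3840x2160 at 60 frames/s, 4 bytes per pixel (8-bit RGBA).
rate = staging_copy_rate(3840, 2160, 4, 60)
print(f"{rate / 1e9:.2f} GB/s read from main memory, and the same again written to video memory")
```

That comes to just under 2 GB/s on each side for this one stream. The catch described above still applies: on a unified design the capture device's writes and the GPU's reads both land on the same pool, competing with whatever the CPU is doing.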

Finally, M1 is made on TSMC's 5nm process. The controversy over Intel's foot-dragging in bringing its 7nm process to market is of course relevant here: smaller process sizes tend to make a device less power-hungry, assuming the design can be made to work on that process. TSMC will presumably sell that process to other customers too, and Intel will presumably corral all its gremlins eventually.
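The usual first-order explanation for why a process shrink saves power is the CMOS dynamic-power relation, where α is the fraction of the circuit switching each cycle, C the switched capacitance, V the supply voltage and f the clock frequency. Smaller transistors reduce C and generally allow a lower V. It's a simplification: it ignores leakage current, which becomes proportionally more important at small geometries.

```latex
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f
\qquad\Rightarrow\qquad
\frac{P_{\text{dyn}}(0.9\,V)}{P_{\text{dyn}}(V)} = 0.9^{2} = 0.81
```

Because voltage enters squared, a 10% cut in supply voltage alone trims dynamic power by about 19% at the same frequency and capacitance.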

The questions therefore become quite straightforward. Can other companies make chips on small processes in the 5-7nm range? Sure. Can other companies make ARM cores? Absolutely; licensing those designs is ARM's business model, and it's been going on for years. Can other companies make a system-on-chip with a shared memory architecture? Absolutely; every phone has one. If there's a sticking point, it's that designing a competitive modern GPU is a nontrivial task.

Intel's Arc Alchemist is perhaps as fast as an RTX 3070, and thus probably faster than an M1, though again it's hard to be definitive. The problem for Intel is that it's questionable whether its x86 architecture can ever reasonably be expected to compete with ARM designs on power consumption. Nvidia and AMD both have some experience with GPUs, of course, although of the two it's Nvidia that has the substantial track record with ARM-based chips, in its Tegra line. Qualcomm's Snapdragon, though, is an interesting example: an ARM-cored SoC with an integrated GPU and shared memory. Remind you of anything? Really big Snapdragons are already being used to build laptop-adjacent tablets.

So, to the future. Bing-bing-bing-bing - Qualcomm inside?
