It's often the last mile that's the hardest. It might take a thousandfold increase in computing power to make computer-generated humans convincing, but that's roughly what we're getting every ten years or so. So maybe this will help us solve the unusually intractable problem of the Uncanny Valley.

It's not really surprising that the final part of almost any project is the hardest. Maybe that's because it's when you have the least physical or creative energy left. Perhaps it's because you've been putting off the hardest bits until last. Sometimes it's the moment you realise that, however close you are to finishing, mistakes accumulated earlier in the journey mean you're never going to land in the right spot.

Often it's hard to finish because of perfectionism. I write music in my spare time. There's no pressure: I'm not working to a deadline or even to a standard. There's no one but me (and my long-suffering family and friends) to please. But I rarely finish a piece. There's always something I think could be better, even if it takes as long to achieve as writing the whole piece. I'm sure this feeling is familiar to artists in all fields. How many films have ended up being edited the night before release? Quite a few, I'd imagine.

In science and technology, though, the last part is sometimes the hardest because the work has to reach a very high standard before it crosses the threshold of acceptability. And more and more, that standard is "Is it as good as a human would do it?" or, sometimes, "Is it good enough to fool a human?"

This matters to filmmakers because new virtual techniques mean that, increasingly, what you see on screen is not what you think it is.

When is good enough good enough?

To illustrate this, let's take an example away from the film industry: self-driving cars.

Vehicles have been slowly gaining autonomous features for years. For example, my car will apply the brakes if it thinks I'm going to run into the back of another vehicle at a roundabout. I'm not entirely sure how it does it, but it probably uses ultrasound or radar and brakes when some physics calculation crosses a predetermined threshold. At the other end of the scale, there are already cars on the road that can drive themselves from one city to another without any human intervention. By law, these still require a driver to pay attention and be ready to take over at any time, but what they can achieve autonomously is nevertheless impressive.

There are plenty of people who say they would never trust an autonomous car. I'm the opposite: as soon as statistics show that self-driving cars are safer than humans, I'd trust one over a fallible human driver any day. I'm sure insurance companies are already watching those statistics very closely. I think people forget that an autonomous car doesn't have to be perfect. It just has to be better than a human driver.

But progress isn't linear: the closer we get to the threshold of acceptability, the harder it gets. That's partly because of "edge cases": as we solve the easier problems, only the harder ones are left. With cars, some of these more challenging problems include reading road signs obscured by poor visibility or an overgrown tree. Even harder is understanding the intentions of pedestrians, both adults and children, and of animals.

Sentience

That little word "intentions" hides more complexity than you might think. In fact, "intention" might very well be what defines whether an entity is self-aware (sentient) or not. I believe it also lies behind the last mile in making computer-generated characters look human.

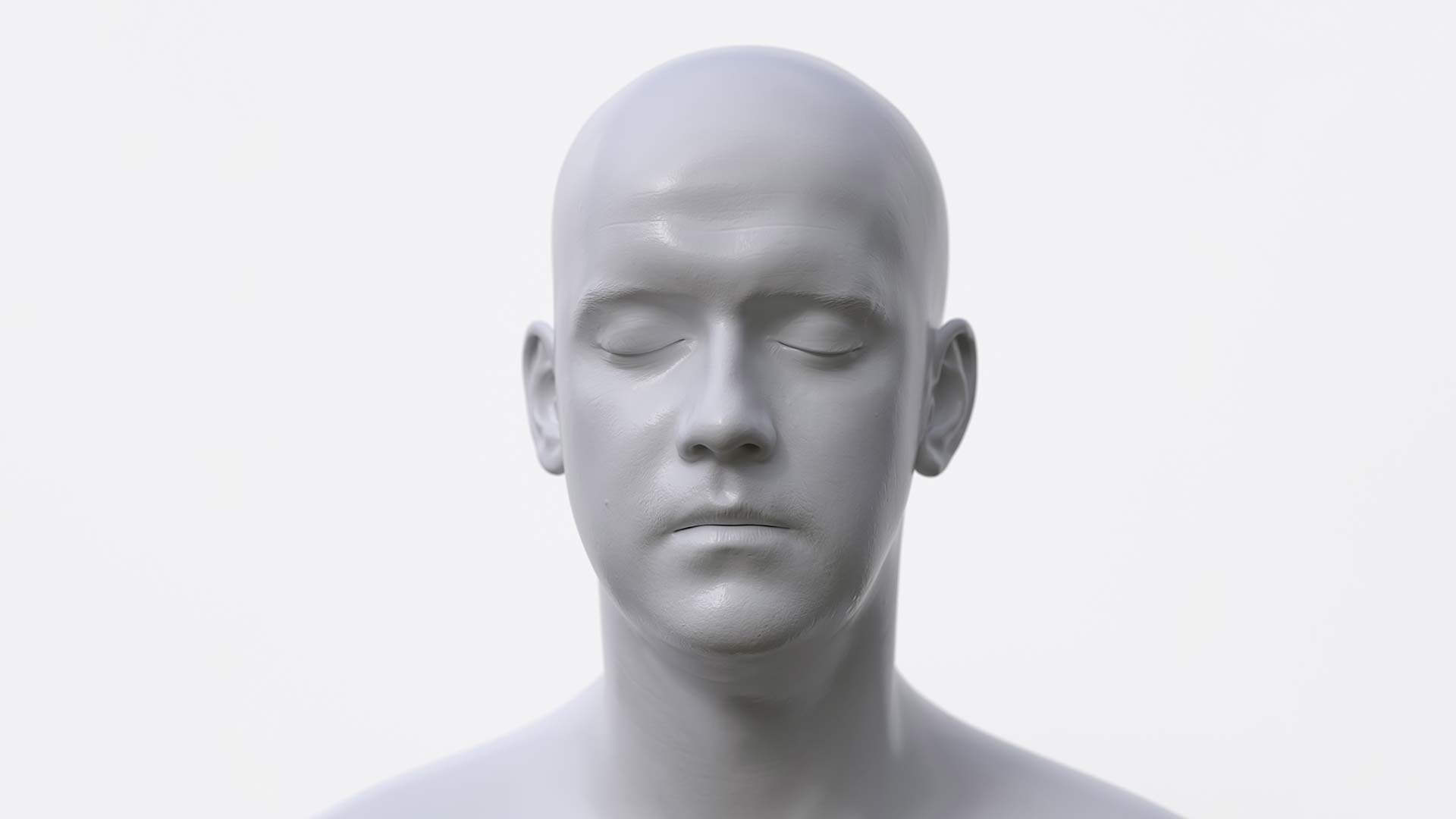

We still haven't seen a film in which a computer-generated actor, playing a central role, could be mistaken for a human being. For the acting profession, that's probably a good thing. But from a technical point of view, why hasn't this been possible so far? It is absolutely possible with still images. Computers, especially AI programs, can generate photorealistic still images of people who have never existed, ad nauseam. It's no longer an issue. So why is it that when we try to make these images move, the illusion breaks down? It's as if we can sense that something isn't right.

I'm not talking here about motion capture. That is getting better all the time: real-time "virtual" cameras help directors and actors get a better feel for their "surroundings", so they can bring more realism to their captured performances. But even motion capture hasn't cleared the last mile. To date, there has always been something unnatural or "uncanny" about these performances.

Up to now, the way around this has been to stylise the images: to make them look cartoonish or alien. If we're not expecting them to look natural, we accept these artificial images for exactly what they are. But the moment you try to pass an animation off as a photorealistic human, it all breaks down. However hard we try to avoid it, we feel like we're looking at zombies, albeit picture-perfect ones.

Ultimately, it comes down to body language: not the obvious stuff (shaking a head to indicate "no" or holding up the palm of a hand to signal "stop here") but the micro-gestures and tics that are almost as subtle as language itself.

It's at this level that many people living with autism struggle. One of the characteristics of this complex condition is that it can make it hard to understand other people's intentions. It's almost as if there is a visual language of intentions that's not only hard to understand but also hard to simulate. For this reason, autism has in the past been described as "mind-blindness": an inability to understand what people are thinking, or even that other people are thinking at all.

Maybe it is at precisely this level of communication that the problem with simulating human beings lies. If we're ever going to have realistic and useful humanoid robots, solving this issue will be crucial.

