Replay: HDR is here, but not everything is rosy. Technology still has a way to go before giving us truly reliable and versatile HDR in a consumer setting. But with challenges come solutions, and before long everyone's TV could be reference quality.

Since the middle of the 20th century, with TV ascendant, cinema has been in an almost perpetual search for new technology with which to tempt audiences. Until recently, TV standards changed more slowly, since it was considered dangerous to make the whole viewer base buy new equipment. Then it was realised that making the whole viewer base buy new equipment was profitable and, since probably the mid-90s, the moving picture industry has been throwing new ideas at us as fast as it can think them up.

Leyard Planar 0.8mm pitch video wall. LED video walls are becoming sufficiently high-res to potentially replace larger LCDs, but they're still too expensive to do so universally.

Success has been mixed but in HDR we have something that’s widely considered a good thing. Only we sort of don't, well, have HDR. It's so difficult to make good HDR displays that even the professional market, with professional budgets, is struggling for good solutions. Crucially, this is the opposite situation to the one we usually find ourselves in, where someone’s researched a new technology and is trying to persuade us it’s a good idea so they can sell it to us. With HDR, we’re aware it’s a good idea and the manufacturers are desperately trying to make the gear to satisfy the demand.

Asus ProArt PA32UCX display, with LED backlight. This sort of thing is likely to be a very good interim solution.

What we want

In the simplest sense, the phrase “high dynamic range” refers solely to contrast, but it's generally expected that HDR displays will have higher than average peak brightness too. The thing is, some laptops and tablets have 500 or 600 nit output in order to be viewable in a brightly-lit office or outdoors in the sun, and it's not a great day when an HDR monitor struggles to out-spec that battery-powered portable device. That’s sometimes the case with less wonderful, domestic “HDR” TVs.

There are essentially three approaches to making HDR displays which can achieve accurate colour and a very deep black level plus, let's say, 1000 nits of peak brightness. All of them, however, come with caveats.

What we can have

Sony's larger-sized OLEDs are based on the same technology as some home TVs, which is very good but limits performance compared to the absolute highest end displays.

OLEDs, by default, are good at black. Switch a (sub)pixel off and it's off, with zero emission, although some less-expensive OLEDs reveal a barely-visible bit of fizz in theoretically black areas. That can only be a glitch in the drive electronics, and they're still generally better at black than similarly priced LCDs.

Only Sony ever made an OLED panel that could exceed 1000 nits and it's effectively discontinued because it wore out too quickly. It also couldn't display full power white over its entire area. That was rarely necessary, but given the five-figure price of a so-called top-emission OLED, it's difficult to justify that sort of caveat. The main problem, anyway, was that restricted lifespan. Early monitors sometimes drifted toward yellow as the blue elements aged first.

Consumer OLEDs can approach 1000 nits (well, 700 or 800) using a white-emitting sub-pixel that’s added as brightness peaks. It works but means that as things get very bright, they also get less saturated. A key ability of HDR is to achieve bright and saturated output simultaneously, so the white pixel is not an ideal workaround. LG's giant OLEDs subjectively look very nice and represent a huge advance in domestic TV picture quality. The company should be congratulated, but they’re not completely ideal yet.

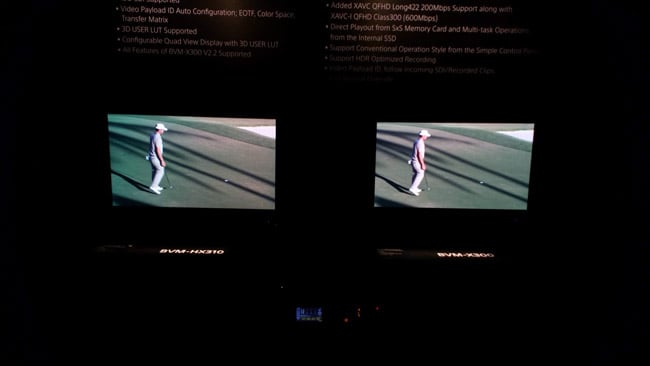

Dual-layer LCD (left) and high-output OLED (right) compared on the Sony booth at NAB 2019. Due to metamerism, the photo makes them look more different than they are in reality.

It's hard to say if OLED technology overall will go the way of plasma screens, and someone in a lab somewhere is probably hard at work on further improvements. Still, right now, OLED is unlikely to bring 1000-nit HDR to either lounges or grading suites.

The technology we might loosely call dual-layer LCD places a single monochrome LCD pixel behind each group of RGB sub-pixels, modulating the backlight brightness separately for every pixel on the display. It's very effective. LCDs traditionally don't have wonderful contrast performance, but layering them helps enormously. Peak brightness is limited only by the power of the backlight.

A 1000-nit backlight does create problems of its own, of course. Consumers expect TVs an inch thick that are quiet and low-powered. A 1000-nit backlight won't be, requiring more power, extra depth for heat-sinking and probably fans that won't ever be zero-noise. LCDs waste at least half their energy in a polariser and another two-thirds of what's left in the colour filters, even assuming every other part of the system is perfect, which it isn't.
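
To put rough numbers on those losses, here is a minimal Python sketch that uses only the round figures quoted above. The constant and function names are made up for illustration, and it assumes on-screen brightness is simply proportional to the light surviving each layer, which is a simplification. Real panels will do worse, since nothing else in the chain is lossless.

```python
# Best-case light throughput of an LCD stack, using the article's round figures.
# Assumption: brightness scales linearly with the fraction of light that survives.
POLARISER_PASS = 1 / 2      # at least half the light is lost in the polariser
COLOUR_FILTER_PASS = 1 / 3  # two-thirds of the remainder is lost in the colour filters

# Fraction of the backlight's output that can reach the viewer at best.
best_case_throughput = POLARISER_PASS * COLOUR_FILTER_PASS


def backlight_nits_needed(target_nits: float) -> float:
    """Backlight output needed to hit target_nits on screen, in the best case."""
    return target_nits / best_case_throughput


print(f"Best-case throughput: {best_case_throughput:.1%}")
print(f"Backlight needed for 1000 nits on screen: {backlight_nits_needed(1000):.0f}")
```

In other words, at most about a sixth of the light gets out, so the backlight has to produce something like six times the brightness that ends up on screen, with the heat and power draw that implies.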

HDR on HDR - Sony have used the CLEDIS display to present at various trade shows.

Dual-layer LCDs are a new technology and as such are essentially cost-competitive with high-output OLED. The technology doesn't currently promise much for anywhere other than the high end, though it's possible to imagine it being reduced in cost in the future, once manufacturers work the glitches out of the production lines.

If there's a perfect solution, it's LED video walls, which represent what HDR ideally should be. Absolute-zero blacks, more brightness than they can reasonably use, good colour gamut – and a price tag. Until recently, they relied on the brute-force but effective approach of soldering vast numbers of individual LEDs to a circuit board, which isn't a very practical way to make, say, an 8K display requiring around 100 million individual sub-pixels.
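
The 100 million figure is easy to check. The short Python sketch below assumes "8K" means the 7680 x 4320 UHD raster and that each pixel is built from three LEDs (red, green and blue). Neither detail is spelled out above, and the function name is purely illustrative.

```python
def subpixel_count(width: int, height: int, subpixels_per_pixel: int = 3) -> int:
    """Number of individual emitters needed for a direct-view LED display."""
    return width * height * subpixels_per_pixel


# Assumed 8K UHD raster with one red, one green and one blue LED per pixel.
total = subpixel_count(7680, 4320)
print(f"{total:,} sub-pixels")
```

Under those assumptions the total comes to just under 100 million LEDs, each of which has to be placed and connected.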

The solution – we hope – is microLED. Some might consider Sony’s stupendous CLEDIS to be a microLED display, but the difference really is in the manufacturing process. The underlying light emitter is an LED, which is brighter and has far fewer issues with lifespan than OLED. Techniques for making microLED displays inexpensively are under feverish development and there's a big reward for anyone who can exceed the price-performance ratio of competing technologies.

But in the middle of 2019, that’s another technology which is still some way from being commercialised, at least at the consumer prices we'd all prefer to pay. If that does happen, it'll raise a terrifying spectre for the high-end monitor manufacturers of the world, but it'll be great if everyone's home TV is a reference-quality display.
