We have a problem. The pace at which editing computers are getting faster isn't necessarily keeping up with the ever-increasing resolution of video. Will we ever reach a perfect nirvana of truly affordable computers that can handle 8K and above with ease, when CPU speeds have seemingly stalled?

For most of the history of computers handling video, computers were getting better, and they were improving faster than video footage was getting bigger and harder to handle. The problem is that, in 2019, moving image production is starting to push beyond 4K, but computers are not pushing much beyond 4GHz. Per-core performance might be improving by five per cent per generation, if we're lucky, and even then it depends on the code we run.
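A rough worked figure shows the size of that gap. Assuming, purely for illustration, a steady five per cent per-core gain per CPU generation and render work that scales linearly with pixel count, the number of generations n needed to absorb a single fourfold jump in pixels (UHD to 8K, for instance) is:

```latex
1.05^{\,n} = 4
\quad\Longrightarrow\quad
n = \frac{\ln 4}{\ln 1.05} \approx \frac{1.386}{0.0488} \approx 28.4
```

That is roughly 28 generations for one step up in resolution, which is why the extra performance has to come from somewhere other than per-core speed.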

When people first started using computers to handle video footage itself, as opposed to using them as edit controllers, it hung on the hairy edge of what computers could do. That was the early 90s, the days of hardware MJPEG codecs like VLab Motion on the Amiga. Systems like that operated so close to the limits of performance that they often needed specially formatted hard disks just to store the JPEG frames. The 1990s were a period of massive advancement in computer technology, though, and by the turn of the century it was possible to cut standard-definition video on more or less any desktop computer with very few compromises. The technology got out of the way. Great, wasn't it?

And then HD came along, and we saw that it was good.

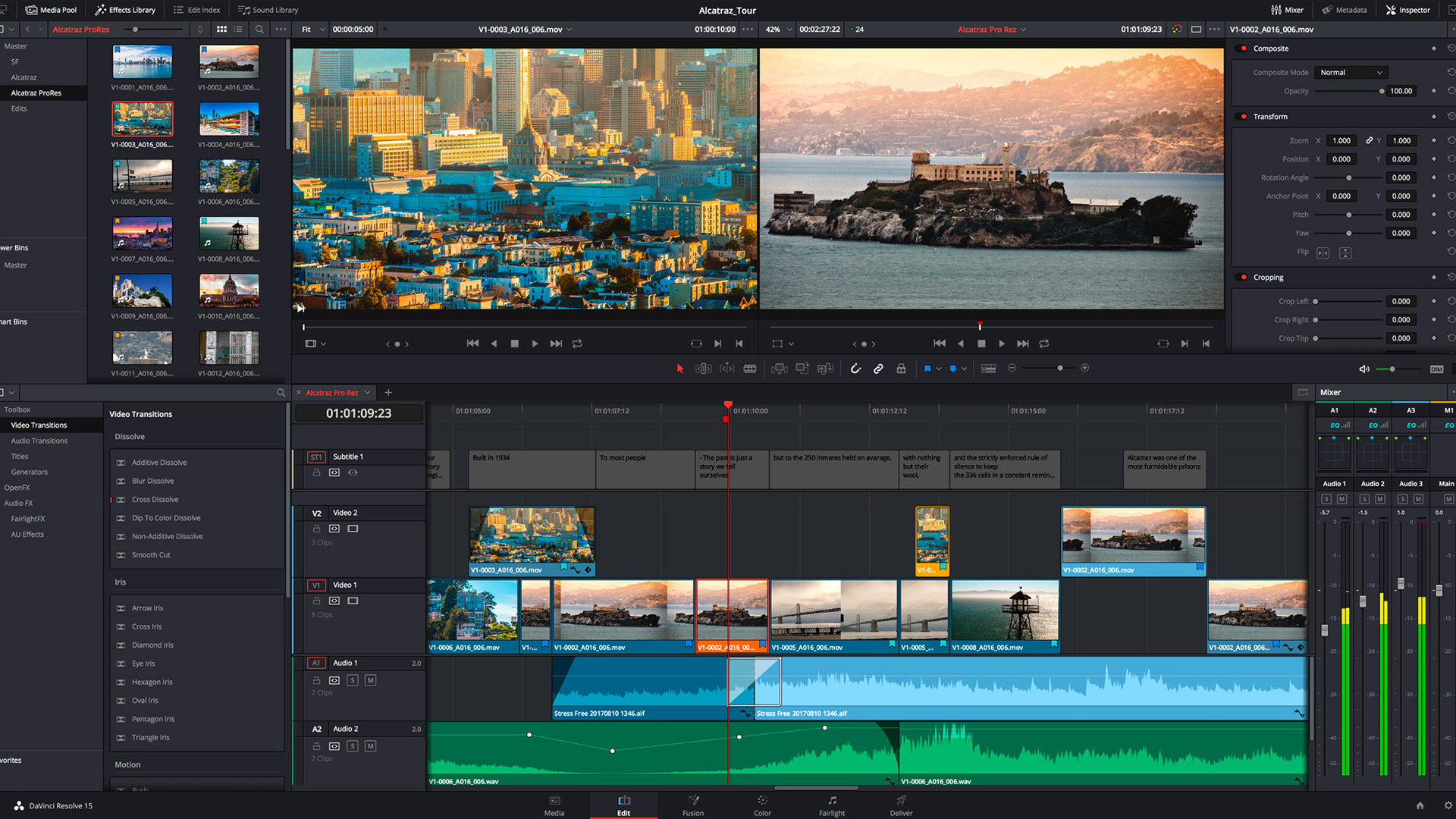

It's also five to six times the pixels of standard definition, depending on whether you started from PAL or NTSC, with a corresponding increase in the data to store and the work to render. That was OK, because we hadn't quite hit the limits of processor speed that we're bumping up against now. HD was like going back to the mid-90s, waiting ten seconds to render a simple dissolve on an affordable desktop PC. In the end, though, everything was fine, because this more or less coincided with the realisation that hardware designed to render 3D graphics for games could be pressed into service bashing pixels for video. Once again, we could throw timelines together without constantly tripping over render times.
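The arithmetic behind these jumps is easy to check. A minimal sketch, assuming the usual active-picture sizes listed in the code, with UHD 3840×2160 standing in for "4K": 1920×1080 works out to five times PAL and six times NTSC standard definition, and each step after that, HD to UHD to 8K, is exactly four times the previous one.

```python
# Active-picture frame sizes (width, height). "4K" here means UHD 3840x2160;
# DCI 4K (4096x2160) is slightly wider.
FRAME_SIZES = [
    ("SD NTSC", 720, 480),
    ("SD PAL", 720, 576),
    ("HD", 1920, 1080),
    ("UHD 4K", 3840, 2160),
    ("8K", 7680, 4320),
]


def pixel_count(width: int, height: int) -> int:
    """Pixels in a single frame."""
    return width * height


def print_table() -> None:
    """Print each format's pixel count and its size relative to the row above."""
    previous = None
    for name, width, height in FRAME_SIZES:
        pixels = pixel_count(width, height)
        ratio = f"{pixels / previous:.2f}x previous" if previous else ""
        print(f"{name:8} {width}x{height:<5} {pixels:>12,} px  {ratio}")
        previous = pixels


if __name__ == "__main__":
    print_table()
```

Pixel count is only a proxy for workload, but for per-pixel operations such as a dissolve or a colour correction it is a fair first approximation.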

So, in the late 2000s, it's 4K you want?

Fine. Increasing resolution mainly involves doing more of the same things, and that's exactly the sort of task modern computers handle well. Manufacturers can't make the chips go much faster, so they add more processing cores that can work at once. That's parallel processing, and it works well on video. Modern GPUs often have thousands of processing cores, each capable of simple calculations of exactly the type this sort of work needs, and it's reasonably easy to add more of them. In some cases, it's even possible to just plug in more graphics cards and enjoy an immediate boost in capability.
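A dissolve is a good example of work that divides neatly. A minimal sketch, assuming frames held as plain lists of 8-bit greyscale values (real tools use GPU buffers or native arrays, and the function names here are hypothetical): each output pixel depends only on the same pixel in the two source frames, so the frame can be cut into horizontal strips and each strip handed to a separate worker, with no coordination beyond stitching the strips back together in order.

```python
from concurrent.futures import ThreadPoolExecutor


def dissolve_rows(rows_a, rows_b, t):
    """Linear cross-fade of two blocks of rows; t=0 gives A, t=1 gives B."""
    return [
        [round((1 - t) * a + t * b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(rows_a, rows_b)
    ]


def split_into_strips(n_rows, n_strips):
    """Return (start, stop) row ranges that cover 0..n_rows in order."""
    step = max(1, -(-n_rows // n_strips))  # ceiling division, at least one row
    return [(start, min(start + step, n_rows)) for start in range(0, n_rows, step)]


def parallel_dissolve(frame_a, frame_b, t, workers=4):
    """Dissolve two frames by giving each horizontal strip to a worker.

    Strips are independent, so no locking is needed; results are joined in
    submission order. In CPython the GIL stops these threads from running the
    pure-Python arithmetic simultaneously, so this shows how the work divides
    rather than delivering a real speedup.
    """
    strips = split_into_strips(len(frame_a), workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(dissolve_rows, frame_a[start:stop], frame_b[start:stop], t)
            for start, stop in strips
        ]
        result = []
        for future in futures:
            result.extend(future.result())
    return result


if __name__ == "__main__":
    width, height = 8, 6
    dark = [[0] * width for _ in range(height)]
    light = [[200] * width for _ in range(height)]
    mixed = parallel_dissolve(dark, light, 0.25)
    assert mixed == dissolve_rows(dark, light, 0.25)  # same as the serial result
    print(mixed[0])  # [50, 50, 50, 50, 50, 50, 50, 50]
```

Doubling the resolution in each direction simply means four times as many strips, or more workers per strip, which is why this kind of operation scales so comfortably across GPU cores.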

8K? HDR, HFR?

If 4K was pursued because it comfortably exceeded the resolution of most 35mm photochemical distribution, 8K has been pursued because... yeah, anyway. But in all seriousness, people have found that sitting half a screen height from the display during a sports match does create an agreeable sense of immersion without the need for a cumbersome VR headset which may fall off embarrassingly during celebrations of someone else's sporting prowess. So, we're probably going to need systems to handle 8K, and all the other things that make for heavier lifting.
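Uncompressed data rates make the "heavier lifting" concrete. A minimal sketch, assuming 4:2:2 chroma subsampling and ignoring blanking, audio and container overhead; real workflows use compressed codecs, so these are upper-bound illustrations rather than codec bitrates. The format labels and frame rates in the examples are illustrative choices.

```python
# Average samples per pixel for common chroma subsampling schemes:
# one luma sample plus two chroma samples at full, half or quarter density.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def uncompressed_gbps(width, height, bit_depth, subsampling, fps):
    """Raw video data rate in gigabits per second (picture only, no overhead)."""
    bits_per_frame = width * height * bit_depth * SAMPLES_PER_PIXEL[subsampling]
    return bits_per_frame * fps / 1e9


examples = [
    ("HD, 8-bit SDR, 25 fps", 1920, 1080, 8, "4:2:2", 25),
    ("UHD, 10-bit HDR, 50 fps", 3840, 2160, 10, "4:2:2", 50),
    ("8K, 10-bit HDR, 50 fps", 7680, 4320, 10, "4:2:2", 50),
    ("8K, 10-bit HDR, 120 fps (HFR)", 7680, 4320, 10, "4:2:2", 120),
]

for label, w, h, depth, sub, fps in examples:
    print(f"{label:32} {uncompressed_gbps(w, h, depth, sub, fps):7.2f} Gbit/s")
```

Under these assumptions the 8K HFR case carries 96 times the raw data of 8-bit HD at 25 fps: sixteen times the pixels, 1.25 times the bits per sample, and 4.8 times the frame rate.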

Does parallel processing continue to help us? Well, sure, to an extent. At any resolution, though, a problem quickly becomes apparent once we start loading a system heavily. We can split the workload up and spread it among a lot of parallel resources, but at some point a piece of audiovisual media is a monolith; it has to pass through the narrow aperture of a network stream, an encoder, a file writer. That's broadly why H.264 encoding, for one example, has not traditionally been a great target for GPU processing. Audiovisual media doesn't happen in parallel; it happens as a stream over time, and that limits at least some aspects of performance.
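One standard way to put numbers on this is Amdahl's law, a general result about parallel computing rather than anything specific to video: if a fraction s of a job has to run serially, then N workers can speed it up by at most 1/(s + (1 − s)/N), a figure that can never exceed 1/s however many cores are added. The sketch below treats the encoder, stream or file writer as the serial part; the serial fractions are illustrative assumptions, not measurements of any real pipeline.

```python
def amdahl_speedup(serial_fraction: float, workers: int) -> float:
    """Amdahl's law: best-case speedup when only part of a job can run in parallel.

    serial_fraction: share of the work that must run one step at a time
    (e.g. a single-threaded encoder or file writer stage), 0 <= s <= 1.
    workers: number of parallel processors available to the remainder.
    """
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Illustrative serial fractions only; not measurements of any real pipeline.
for s in (0.01, 0.05, 0.10):
    row = ", ".join(
        f"{n} cores: {amdahl_speedup(s, n):6.1f}x" for n in (4, 64, 4096)
    )
    print(f"serial {s:.0%} -> {row} (ceiling {1 / s:.0f}x)")
```

With just ten per cent of the work stuck in a serial stage, a machine with thousands of cores still can't run the job more than ten times faster than a single core.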

But it doesn't seem likely that we're anywhere near the theoretical limits yet. A much more common problem is code written years ago on the assumption that two or four CPUs was a lot. Huge amounts of that sort of code exist and are in mainstream use. Decisions about multiprocessing are fundamental, built into the lowest-level core of the software, so modifying and improving that behaviour represents a huge amount of work. It's often a ground-up rewrite, and not something software houses are greatly motivated to spend time on.

It's unlikely that this is going to create any really enormous problems, at least not yet. What it does mean is that the nirvana of, say, 2001, when standard-definition material was still mainstream and computers were fast and still getting faster, isn't something that we're likely to get back to soon. Workstations capable of handling 8K material fluidly – capable of handling that material and getting out of the way while they do so – are likely to remain expensive for a while.

Issues around stalled per-core CPU performance are well known, and they affect many industries. Still, the stage seems set for some significant advancements. They might come from hardware, or from software, perhaps in the form of tools designed to make parallel-processing code easier to write. It's being discussed. But at some point, we're going to need something, before people start talking about 16K.
