Not all codecs are created equal...

Update: a few people have commented that this article doesn't really live up to its title. Fair enough. I've commissioned another article based purely around uncompressed capture and workflow. Hopefully we'll have this soon. Meanwhile, please do read on: it's a fascinating piece about codecs.

Even though flash storage is becoming very cheap very quickly, it'll probably be some time before most productions can store images completely uncompressed, especially as the drive for higher picture quality continues. We may no longer have any real need to push for more resolution, but the current interest in better pixels, embodied as wide colour gamut and high dynamic range, still implies more data.

As such, we're likely to remain dependent on compression algorithms for quite some time, and we should take a keen interest in the most effective codecs: the best ways of compressing pictures without distorting them too much. The problem is that simply running tests and declaring a particular codec the best is fraught with complication, especially in devices which must be affordable, portable, battery-powered and able to encode video in real time as it's being shot. Because of this, more sophisticated, more recent codecs don't necessarily work better than older designs.

For instance, on paper, the more recent H.265 standard is better than H.264. H.265 was designed to produce the same image quality at half the bitrate, which immediately sounds like a triumph — we can record pictures that are just as good for twice as long on the same flash card. It certainly will do that under at least some circumstances. Fantastic.
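To put the "twice as long" claim into numbers, here's a quick back-of-envelope sketch. The 64GB card and the 100Mbps H.264 bitrate are assumptions picked purely for illustration, and the calculation ignores filesystem and container overhead.

```python
def recording_minutes(card_gigabytes: float, bitrate_mbps: float) -> float:
    """Approximate recording time, ignoring filesystem and container overhead."""
    card_bits = card_gigabytes * 1e9 * 8        # decimal gigabytes, as card makers count them
    seconds = card_bits / (bitrate_mbps * 1e6)  # bitrate in megabits per second
    return seconds / 60

# Hypothetical example: a 64GB card, H.264 at 100Mbps vs. H.265 at half that.
h264_minutes = recording_minutes(64, 100)
h265_minutes = recording_minutes(64, 50)
print(f"H.264: {h264_minutes:.0f} min, H.265: {h265_minutes:.0f} min")
```

This prints roughly 85 minutes for H.264 and 171 for H.265: halving the bitrate simply doubles the recording time, whatever the card size.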

Testing H.264 vs. H.265

Let's verify this by looking at the same frame encoded using H.264 and H.265, both at a requested bitrate of 1,000kbps (1Mbps). This is a very low bitrate, but it serves to emphasise the differences between encoders; at high bitrates they'd be much more subtle, at least until the material had been re-encoded a few times through grading and distribution. The contrast has been increased to make sure we can see the compression artefacts. This is the original image, showing the area we're interested in.

Test image showing region of interest
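To get a feel for how little 1,000kbps is, we can work out how many bits each pixel gets. The article doesn't state the test clip's resolution or frame rate, so this sketch assumes 1920x1080 at 25fps, and compares against 8-bit RGB (24 bits per pixel) as an assumed uncompressed reference.

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average number of bits the encoder can spend on each pixel of each frame."""
    return bitrate_bps / (width * height * fps)

uncompressed_bpp = 3 * 8  # assumed 8-bit RGB, for comparison only
test_bpp = bits_per_pixel(1_000_000, 1920, 1080, 25)  # assumed 1080p25
print(f"{test_bpp:.4f} bits per pixel, roughly 1:{uncompressed_bpp / test_bpp:.0f} compression")
```

Under those assumptions it comes out at about 0.0193 bits per pixel, a compression ratio of around 1:1244, which is why the artefacts are so easy to see.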

First, H.264:

Test image encoded in H.264 at 1000kbps

And now H.265, which we would expect to look far better given its improved quality-to-bitrate capability:

Test image encoded in H.265 at 1000kbps

Most people would agree that the H.264 frame looks better, or at least more detailed. Well, that's amazing, and you heard it here first, folks: H.265 is a less effective video compression codec than H.264. Of course, that's not actually true. Both of these images were encoded with a build of the free FFmpeg encoding tool (version N-85469-gf1d80bc, which was recent at the time of writing). The ready-made FFmpeg downloads aimed at users include the x264 and x265 encoders, which are implementations of H.264 and H.265 respectively. FFmpeg exposes encoding presets for both, ranging in speed-versus-quality from “ultrafast” to “veryslow.” The H.264 frame was encoded using the “veryslow” preset, although it still took barely more than real time on this simple input material. The H.265 frame was encoded using the “ultrafast” preset, which also took about real time. In general, H.265 should be a few times slower than H.264 because of its more advanced mathematics, although x265 has not yet had the same sheer amount of optimisation effort as x264.
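For anyone wanting to repeat the comparison, the two encodes can be described as FFmpeg command lines. This is a sketch, not the exact commands used for the article: the file names are hypothetical, and it only builds and prints the commands rather than running them. The `-c:v`, `-preset`, `-b:v` and `-an` options are standard FFmpeg options.

```python
import shlex

def encode_command(src: str, dst: str, codec: str, preset: str, bitrate: str = "1000k") -> list[str]:
    """Build an FFmpeg command line; codec is 'libx264' or 'libx265'."""
    return [
        "ffmpeg", "-y", "-i", src,
        "-c:v", codec,      # select the x264 or x265 encoder
        "-preset", preset,  # speed-versus-quality preset, handed to the encoder
        "-b:v", bitrate,    # requested average video bitrate
        "-an",              # drop audio; only the pictures matter here
        dst,
    ]

# Hypothetical file names; mirrors the two encodes described above.
h264_cmd = encode_command("input.mov", "h264_veryslow.mp4", "libx264", "veryslow")
h265_cmd = encode_command("input.mov", "h265_ultrafast.mp4", "libx265", "ultrafast")
for cmd in (h264_cmd, h265_cmd):
    print(shlex.join(cmd))
# To actually encode: subprocess.run(h264_cmd, check=True)
```

Keeping the bitrate fixed and varying only the preset makes it easy to see how much of the difference comes from encoder effort rather than the codec itself.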

If we wind the H.265 encoder up to “veryslow,” it produces somewhat more convincing results:

Test image encoded in H.265 at 1000kbps with veryslow preset

Now, there is a huge minefield of caveats here, which we'll discuss in a moment, but the key thing to understand is that both H.264 and H.265 are specified in terms of their decoder. The encoder can be anything which produces data the standard decoder will read. This means that more or less any sort of video encoding can be done either well (which may mean slowly) or badly (which may mean quickly). Really well-done H.264 is, as we see here, better than poorly done H.265. In the same way, really excellent MPEG-2 can outperform a poor H.264 encode. There are both technical and commercial pressures to do things badly and quickly, for reasons of power consumption, size, weight, cost and time to market.
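Here's a toy illustration of "the standard specifies the decoder". It is a deliberately simplified sketch, not real H.264 motion estimation: one fixed decoder rebuilds a block from a reference frame plus a motion offset plus a residual, and two hypothetical encoders differ only in how hard they search for a good offset. The sum of absolute residual values stands in, crudely, for the number of bits needed.

```python
def decode(reference, start, motion, residual):
    """The fixed 'decoder': predict from the reference frame, then add the residual."""
    return [reference[start + motion + i] + r for i, r in enumerate(residual)]

def encode(reference, block, start, search_range):
    """A hypothetical encoder: try offsets within +/- search_range and keep the
    one with the smallest residual (a crude stand-in for 'fewest bits')."""
    best = None
    for motion in range(-search_range, search_range + 1):
        lo, hi = start + motion, start + motion + len(block)
        if lo < 0 or hi > len(reference):
            continue  # prediction would fall outside the frame
        residual = [b - reference[lo + i] for i, b in enumerate(block)]
        cost = sum(abs(r) for r in residual)
        if best is None or cost < best[0]:
            best = (cost, motion, residual)
    return best

reference = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
current_block = [40, 50, 60, 70]  # same content as reference[3:7], now at index 1
start = 1
fast = encode(reference, current_block, start, search_range=0)  # no search at all
slow = encode(reference, current_block, start, search_range=3)  # wider search
for name, (cost, motion, residual) in (("fast", fast), ("slow", slow)):
    # Both outputs are perfectly valid input for the same decoder.
    assert decode(reference, start, motion, residual) == current_block
    print(name, "motion", motion, "residual cost", cost)
```

Both encoders produce data the decoder reconstructs exactly, but the "fast" one leaves a residual costing 80 while the "slow" one finds the match and leaves nothing to code. Real encoders make thousands of choices like this per frame.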

Encoding software determines results

Those caveats cluster mainly around the specifics of the software used for this test. Despite their identical names, there is not necessarily any relationship between “veryslow” as defined for H.264 and “veryslow” as defined for H.265. Both sets of presets work by telling the encoder to skip more or fewer of the parts of the codec standard which take a lot of CPU effort, in order to work more quickly, but the specifics of that are hard to compare. The presets are defined by the x264 and x265 encoders themselves (FFmpeg passes the name through), and the two codecs have very different capabilities and therefore different features which can be switched on or off to either improve quality or save time.
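One way to see what a preset actually switches on is to compare the option lists an encoder reports. x264 logs its settings as space-separated key=value pairs, which this small sketch parses and compares. The two strings are abbreviated excerpts; to the best of our knowledge the values match x264's ultrafast and veryslow presets, but check `x264 --fullhelp` or your own FFmpeg log rather than trusting them.

```python
def parse_options(options: str) -> dict[str, str]:
    """Turn 'key=value key=value ...' (x264's log format) into a dict."""
    return dict(item.split("=", 1) for item in options.split())

# Abbreviated, illustrative excerpts of x264's reported options.
ultrafast = parse_options("cabac=0 ref=1 me=dia subme=0 trellis=0 bframes=0 rc_lookahead=0")
veryslow = parse_options("cabac=1 ref=16 me=umh subme=10 trellis=2 bframes=8 rc_lookahead=60")

# Print every setting that differs between the two presets.
for key in ultrafast:
    if ultrafast[key] != veryslow.get(key):
        print(f"{key:13} ultrafast={ultrafast[key]:4} veryslow={veryslow.get(key)}")
```

Running the same comparison on x265's log would give a different list of keys entirely, which is the point: the shared preset names hide quite different sets of switches.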

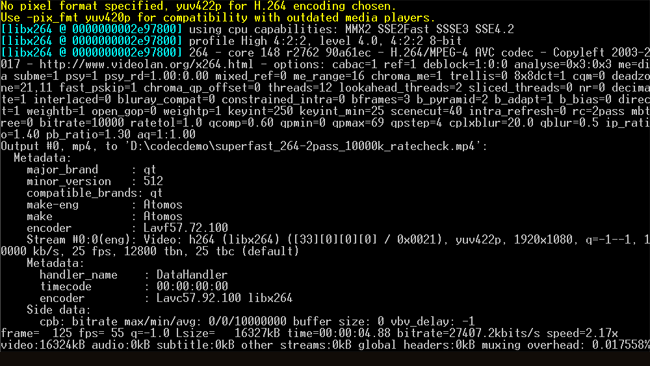

Even beyond that, the x264 encoder is famous for having had absolutely enormous amounts of very exacting optimisation done on it during the decade of its existence, aimed both at improving encoding speed without sacrificing quality and at improving quality without sacrificing encoding speed or bandwidth. Things considered “slow” for x265 might not be so slow for x264. To get a better idea of the intricacy this involves, have a look at this screenshot of the information FFmpeg provides about the encoding options it is using:

Screenshot of the encoding options reported by FFmpeg

No, I don't know what psy_rd=1.00:0.00 means either, but there are a lot of things to tweak in video encoding, and the options listed there are only some of them. Every device which uses this sort of compression (including things like XAVC cameras) has a configuration that's functionally equivalent to this, and the decisions the manufacturer makes here and elsewhere affect output picture quality. The other issue is exactly how we assess picture quality. It's a very subjective thing, and measures such as signal-to-noise ratio, which we calculate from the squared differences in pixel values between the before and after images, tend to favour cleaner, blurrier images over sharper ones which contain more artefacts. The H.265-encoded result certainly looks blurrier, albeit slightly more accurate in its low-res glory; ultimately, it's a matter of opinion.
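Here's a minimal sketch of why pixel-difference metrics can reward blur. It computes peak signal-to-noise ratio (PSNR, the variant of signal-to-noise ratio usually quoted for images) on a hypothetical one-row "image" of fine stripes, comparing a version smeared to flat grey against one that keeps the stripes perfectly sharp but shifted by a single pixel.

```python
import math

def psnr(original, processed, peak=255):
    """Peak signal-to-noise ratio in dB, from the mean of squared pixel differences."""
    mse = sum((a - b) ** 2 for a, b in zip(original, processed)) / len(original)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)

original = [0, 255] * 8                # fine stripes: maximum detail
blurred = [128] * 16                   # all detail smeared to flat grey
shifted = original[1:] + original[:1]  # stripes still sharp, displaced by one pixel

print(f"blurred: {psnr(original, blurred):.2f} dB")
print(f"shifted: {psnr(original, shifted):.2f} dB")
```

The flat grey version scores about 6.02dB while the sharp-but-shifted one scores 0.00dB, even though most viewers would say the shifted stripes look far more like the original.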

Conclusion

All of this brings us back to the idea that more recent codecs aren't necessarily the best, especially in a cost- or power-constrained implementation. The design goal was that H.265 would need no more than three times the work to decode compared with H.264 while providing the same image quality at half the bitrate. That is immediately a bad deal from the perspective of quality versus computing horsepower, but worse, the standard specifies the decoder, not the encoder. There is no restriction on how difficult H.265 can be to encode, which is, of course, the primary concern for a camera manufacturer.

The reality of all this comes to the fore in designs such as Canon's original DSLR video camera, the 5D Mark II. It recorded at (up to) 50 megabits per second, which is a reasonable minimum bitrate for a camera where video is supposed to play second fiddle. The fact that the pictures often fell apart in low light was simply because the camera's encoder used a fairly basic approach. The catch, though, is that while FFmpeg could have done a much better job with those 50 megabits per second, it could only do so in real time on a computer that consumes as much power as half a dozen Alexas.

All codecs are not created equal, even if they're called the same thing.
