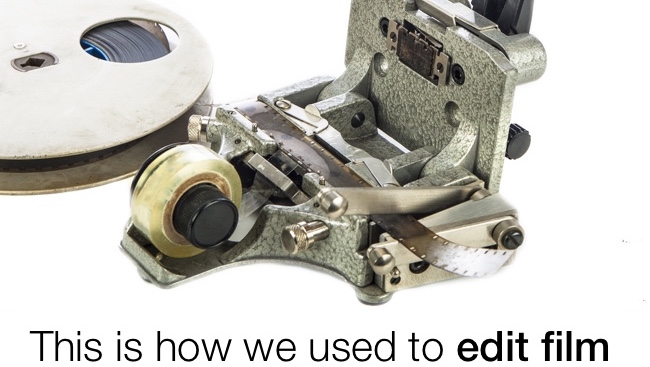

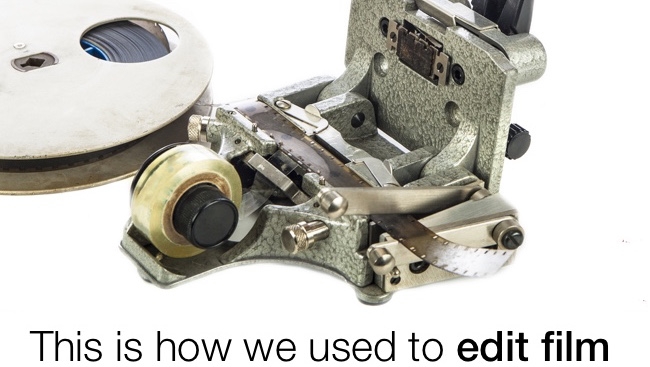

A guillotine tape splicer: how we used to do it

RedShark Replay: Roland Denning profiles the way that technology has changed the editing process from the times when machines like the tape splicer above were cutting edge.

Technical innovations change the way we make films, usually, but not always, for the better. The search is always on to make films faster and cheaper with higher picture quality and more spectacular effects, but technology also has an influence on the kind of films we find ourselves making. In a future part I’m going to look at all this in relation to cameras, but first, some examples from the world of editing. I’ll start with a history lesson.

Just one piece of equipment can change the way we edit. Take something, for instance, as simple as the guillotine tape splicer, introduced by CIR of Italy in the late 1960s (by tape splicer, I mean a splicer for joining film using transparent sticky tape, not a splicer for joining audio tape, although they existed too).

Before the tape splicer, every time an editor made a cut using a cement splicer, even though it was on a cutting copy that would never be screened to the public, two frames were lost. If you are only used to editing on a computer, it is almost impossible to imagine what it must be like for every cut you make to be irreversible. The tape splicer was a revolution - editors could simply peel the tape off and remake a splice or restore the frames they had just removed. The effect that had on editors at the time must have been dramatic - there’s a book to be written on the effect it had on movies of the period. (The CIR splicer is still available, by the way, but it will cost you around £500.)

Cutting copies would end up like a patchwork quilt of tape splices, but this didn’t matter, as what would be screened to the public would be a print off the cut negative, joined with cement splices. In 16mm, the practice was to cut the negative into two A & B ‘checkerboard’ rolls - shots would alternate between the two rolls, with black spacer the same length as each shot on the other roll, meaning all splices were made on the black spacer rather than the picture and hence were invisible. The consequence of this was that if you cut a shot in half, you would always lose a couple of frames at the point where you cut it.

But not everything was shot on negative. Right up until the 1980s it was common practice for TV companies to shoot news and documentaries on 16mm reversal and to broadcast the edited, original film that came out of the camera. In this case, cement splices rather than tape splices were used and this meant once a cut was made, there was no going back, and those two frames on either side of the cut would be lost for ever. Editors in those days had to be decisive and equipped with strong nerves. And clean gloves.

The introduction of offline

It all changed again when offline video editing became common in the 1980s; for film editors, it was a retrograde step. Offline editing, usually done on U-matic 3/4” tape, was basically a case of recording from one machine to another. This meant it was a linear process — to change two shots around or to trim a couple of frames off a shot in a sequence, so easy to do on film, would mean reassembling everything or going another tape generation — which meant very often it just wouldn’t be done.

Film editors largely regarded video editing with contempt; it seemed a crude process compared to film editing, and they saw it as assembling shots rather than making creative decisions (there’s an essay here about how different the engineering-based environment of television was from the more maverick world of film, but I’ll leave that for another time).

Video editing brought with it its own advantages: you could repeat shots and you could slow stuff down (previously slo-mo was either something to be done in the camera or a very costly lab process). Both were all too obvious in the heyday of rock promos in the late 80s. If an effect is available, people will use it, as could be seen with the use of 3D digital effects, pioneered by Quantel’s Mirage, that became available around that time. Those effects, so impressive back then (and requiring a machine costing upwards of $250k), now look so dated. Okay, you can wrap your images around moving tubes and spheres - but do you really want to?

Unsurprisingly, digital non-linear editing, pioneered by Lightworks and Avid in the early 90s, was first embraced by film editors. The look and feel of the software, with its bins and tracks, owes much more to film than it does to video. There were no inhibitions to trying things out in many different ways and each version of an edit could be kept to be returned to later if necessary.

There was another cultural shift when film editing faded out of history. Film editing rooms were like workshops, generally a little scruffy around the edges, inevitably cluttered with trim bins and racks of cans. Screens were small and not really intended for group viewing; not the sort of place your client was encouraged to visit. When everything became electronic and tidier, the screens got bigger and leather sofas began to appear and the workshop became a lounge.

Then when Apple’s Final Cut and Adobe’s Premiere came in around the turn of the century, the editing suite was often no longer an address in town but a desk in your own home or, sometimes, a laptop in a hotel room. This all meant that over the last half century the process has lost a little mystique, but few would say that was a bad thing.

The liberation of non-linear

The introduction of non-linear editing was truly liberating - no editor wants to go back to film or linear editing processes, any more than writers want to go back to typewriters (though there will always be the odd exception, such as Ken Loach). Are there any drawbacks to modern computer-based editing systems? I can only think of two, and they are more to do with the limitations of humans than the technology itself.

Firstly, because you can edit very quickly, the pressure is always on that you should. And some complex films really need time; you need space to think, to let the ideas settle in your mind. If you are pressed for time, it’s much easier to end up being formulaic.

Secondly, editing systems have become vastly more versatile and powerful whereas we humans have not. It’s easy to find yourself covering a host of roles that would once all have been performed by different people: an off-line editor, on-line editor, sound editor, dubbing mixer, graphic artist, effects specialist and a grader/colourist. Now, I’m not asking for all those roles to be resurrected for every project, but if you’ve ever worked with a first-class colourist or dubbing mixer, you’d never want to try it yourself again.

Today we take the ease and low cost of editing for granted, but our digital editing software bears the legacies of the analogue systems that preceded it - the very principle of parallel tracks and bins of clips has everything to do with the past and little to do with digital technology itself. It probably still hampers the way we make films - only it will take a future generation to see it.

Main graphic: shutterbox.com
