Colourlab Ai represents an entirely new way of looking at the grading process, where creativity is king. The possibilities for the future of the technology are also endless.

It’s very clear that we live, currently at least, in a very different world to the one we inhabited only a year ago. Innovation is always a good thing, but sometimes it happens to come around at just the right time. That is the point at which a step change in how things are done ends up solving a very real and present problem.

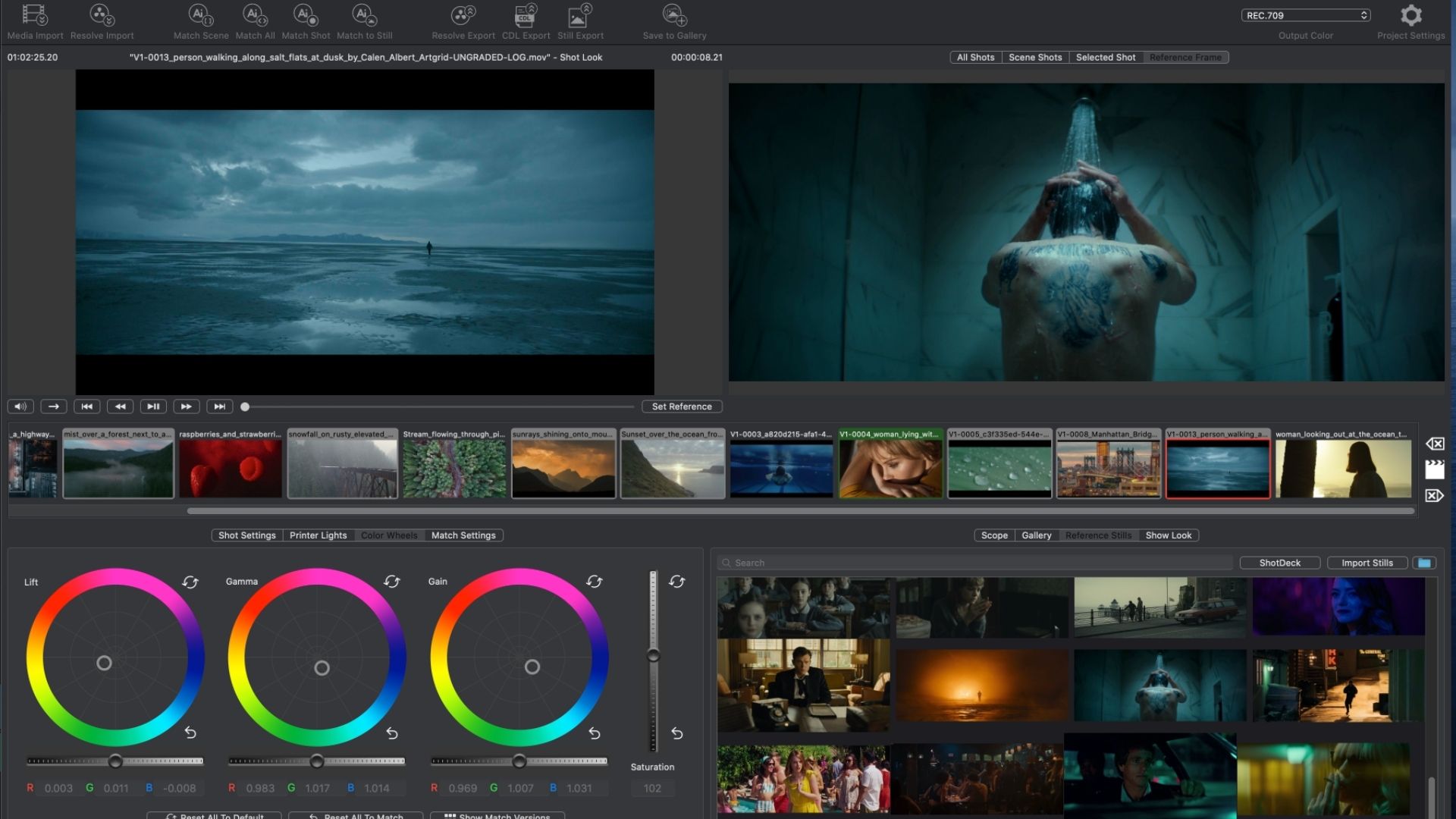

When Colourlab Ai initially announced its new colour grading software, we commented here that it was a revolution in how grading will be done going forward. There were some sceptics, as you’d expect. Some have misunderstood the software entirely, wrongly thinking that it’s somehow dumbing down the grading process or taking control away. However, this isn’t the case at all, as anyone who has been testing the software on high-end jobs will tell you. In fact, it is completely the opposite.

Indeed, one of the main impetuses behind the software is to give creatives much more control, with much more ease, while removing the technical barriers. Those of us who are mainly shooters and editors probably know our way around the basics of grading and colour correction. But there have probably been times when you’ve had some footage and seen, for example, a photograph that you really like the look of. You have it inside your head how you’d like your footage to have that same look, but you have no idea how to achieve it. And even if you could somehow recreate it, you then have the added problem of making it look consistent across all of the shots in your edit.

Colourlab Ai was the brainchild of Dado Valentic, an experienced colourist in his own right. He's the man behind the colour science of Netflix's first HDR programme, Marco Polo. He's worked on more than 60 feature films, and hundreds of commercials. The company is headed up by Valentic, technologist Mark L. Pederson, and industry veteran Steve Bayes.

Colourlab Ai’s premise is that you can take that photo and have the software intelligently recreate its look on every shot you want to apply it to. Don’t like the look? Well, you can change it to another one, as well as experimenting with variations along the way. Best of all, this is carried out in such a way that when you go back to your edit in, say, DaVinci Resolve, you can see all your colour corrections right there in non-destructive form. You can still tweak and perfect the grade using all the tools that Resolve gives you.

You see, Colourlab Ai isn't necessarily for creating the absolute final grade. What it does really well is take care of all the laborious stages of the grading process for you, and then let you apply the overall graded look, matching all the shots in a scene in the process. You can then go back into Resolve to finish it off by adding those final flourishes.

You don’t have to be creating extreme looks. Colourlab Ai could be used to get all of the shots in an edit matched scene by scene with one click of a mouse. It sounds like voodoo, it looks like voodoo, and it probably is some sort of voodoo. But once you’ve seen it in action and tried it, you realise that it’s actually pretty hard to accept going back to a traditional grading method.

Image: Colourlab Ai.

Colourlab Ai progress

Colourlab Ai has made a lot of progress since its initial announcement, with the developers taking on feedback from early users. The developers liken the software to the autopilot in a Tesla car, taking over important functions while allowing you to direct it. But I would go further and say that the software removes the technical barriers to grading and instead lets the user focus on the creative aspect. Yes, the software does automate many things, but the user is always in control of the direction it takes.

However, in the current climate the software has taken on added significance. A large percentage of people are now having to do their grading work from home. Where once they had a nicely set up studio to go to, now they are having to adjust to perhaps less capable setups. Then there’s the fact that HDR is becoming much more of a necessity when it comes to delivering the final video. These two factors create both a technical and a creative challenge. The advent of Dolby Vision capable phones like the iPhone 12 has made HDR incredibly important, with the need to make video look as good as possible on such devices.

This isn’t just a desire for high-end producers, either. YouTube content creators, too, want to produce more HDR content, but often come up against the traditional technical barriers that prevent them from achieving it with any degree of success. Indeed, anyone who has investigated shooting and delivering HDR will know that it can be a minefield of technical know-how.

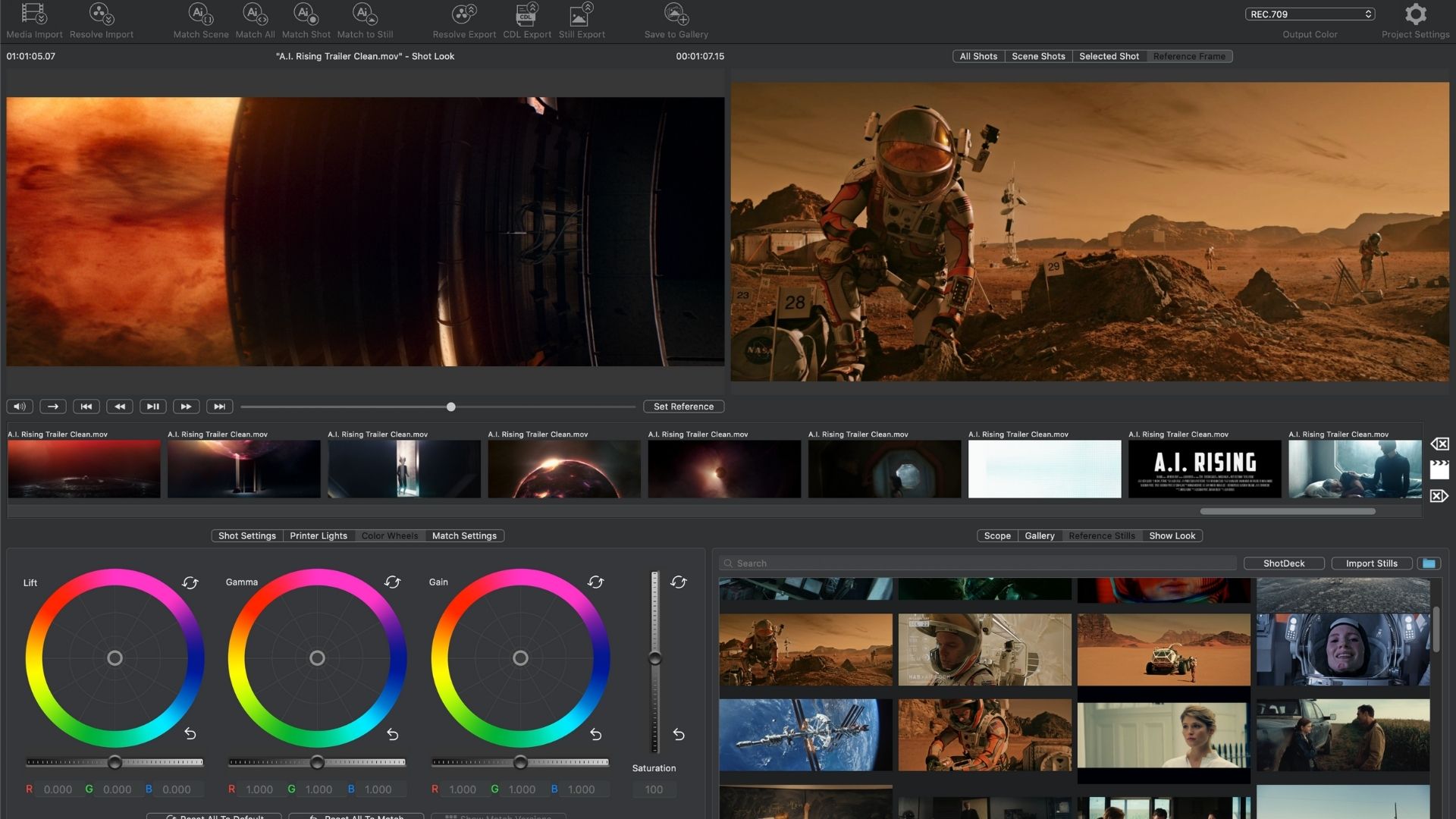

Image: Colourlab Ai.

HDR made easy

Colourlab Ai tackles the problem head on. The company’s CEO and founder, Dado Valentic, told us, “From the outset we have made HDR one click of a button. It doesn’t matter whether you are working in HDR or SDR, you can decide what you are producing with one click. Before, people had struggled with HDR, with software needing complicated settings, modes, and outputs to be set up, but now it’s just done with one button.

… It means people can now export HDR all the time because there’s no extra effort in doing it. When you start to deploy technology in a smart way you really can simplify things that, technically, have been slowing us down.”

What separates Colourlab Ai from traditional grading systems is that it actually knows what it is looking at in a scene. It knows what sky is and it knows what a person looks like, for example. But it’s when it comes to achieving total consistency across an edit that the system really comes into its own.

An example is the way LUTs can be made to work. Traditionally a LUT is seen as a sledgehammer approach to grading. You can apply a LUT to one set of footage, but generally it won’t work when it is applied to another. Colourlab Ai can use Smart LUTs instead. Dado described how a Smart LUT works as follows:

“It works a little bit like Shazam. In order for them to take a recording and so quickly match the song, they don’t store all the songs, but rather they store all the ‘fingerprints’ for the songs. So you reduce the information that represents what the song is to a very small file. We did exactly the same thing in order to speed up the matching process. So in fact every time you create a look and say that this is your reference, we create a ‘fingerprint’ of that image. So what you can do now is have a LUT that is smart so you can actually match to it rather than just having it as a fixed preset. It’s a matching reference you can use.

So now I can use a LUT for one project, and then when I come to the next one, where previously my LUTs may not work so well because the footage is different, now it can all match. I can create a looks library more easily because I know that they can all be made to work and give me really good results.”
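
To make the fingerprint idea a little more concrete, here is a minimal Python sketch. It is purely illustrative and is not how Colourlab Ai works internally, which hasn't been disclosed. It assumes the simplest possible "fingerprint", the per-channel mean and spread of a reference image, and matches new footage to it with a basic statistics transfer. The function names `fingerprint` and `match_to_fingerprint` are hypothetical.

```python
from statistics import mean, pstdev


def fingerprint(pixels):
    """Reduce an image to a tiny summary: (mean, std) per colour channel.

    `pixels` is a list of (r, g, b) tuples with values between 0.0 and 1.0.
    This stand-in fingerprint is an assumption made for illustration only.
    """
    channels = list(zip(*pixels))
    return [(mean(c), pstdev(c)) for c in channels]


def match_to_fingerprint(pixels, reference):
    """Shift and scale each channel so its statistics match the reference.

    Unlike a fixed LUT, the correction depends on the footage it is given,
    so different source material is pulled towards the same look.
    """
    source = fingerprint(pixels)
    matched = []
    for px in pixels:
        out = []
        for value, (s_mean, s_std), (r_mean, r_std) in zip(px, source, reference):
            # Avoid dividing by zero on a perfectly flat channel.
            scale = r_std / s_std if s_std > 0 else 1.0
            new_value = (value - s_mean) * scale + r_mean
            # Keep the result inside the legal 0.0 to 1.0 range.
            out.append(min(1.0, max(0.0, new_value)))
        matched.append(tuple(out))
    return matched


# A warm two-pixel "reference look" and some flat grey "footage".
look = fingerprint([(0.8, 0.5, 0.2), (0.6, 0.4, 0.3)])
footage = [(0.2, 0.2, 0.2), (0.4, 0.4, 0.4)]
graded = match_to_fingerprint(footage, look)
```

The point of the sketch is only that the stored reference is a handful of numbers rather than the whole image, and that the adjustment is calculated afresh for each piece of footage rather than being a fixed preset.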

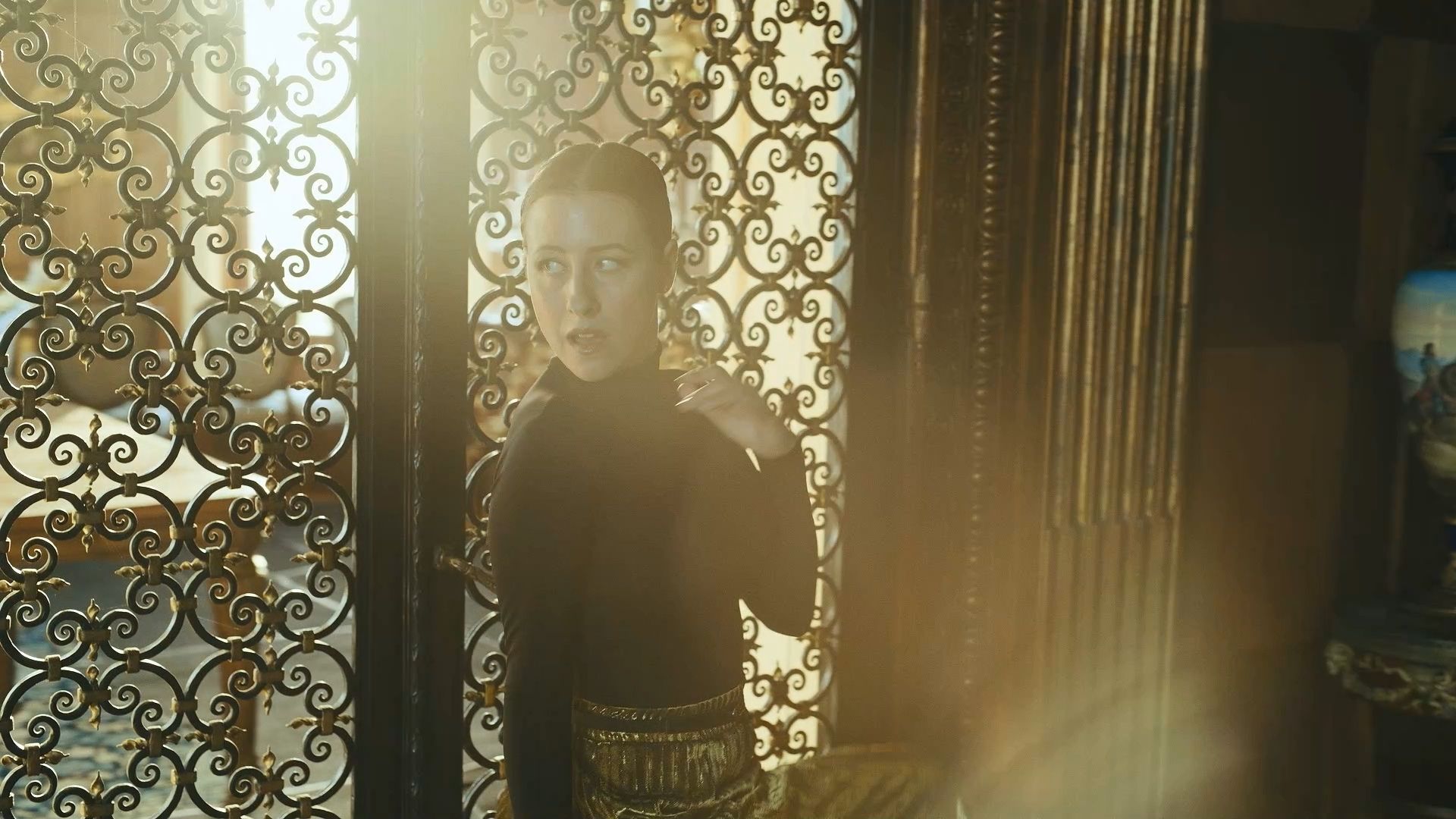

Software like Colourlab gives people the freedom to experiment and try things out. It’s an opportunity to allow your creativity to rule your workflow rather than your technical knowledge. In fact we’re at a point where this kind of technology may no longer be restricted to post software in the near future.

Redefining the future

“We’ve almost reached the point where we’ve hit the limits of what we can do in-camera, and now a lot of what we see is down to what we do with the footage afterwards. A lot of the advancements we see are coming from computational cinematography. The reason why modern phones look so good isn’t because of the sensor, but because of the processing.

Likewise, cinematographers are realising that they want to learn about the grading processes because it’s an integral part of the image they want to create. If I have a tool that isn’t too technical and I have in my mind a look that I want to create, and a tool that can translate it for me, I’ll use it.

You’re going to see cinematographers becoming much more involved in post production than before. It’s becoming obvious that if I want to improve my cinematography it has to be done in post. But there’s no reason why in the future all this technology can’t be moved into a device on the camera and be performed in realtime. It’s only a matter of time.”

And this is an incredibly important thing to consider. Much of what affects footage can simply be non-destructive metadata. Imagine if your camera could analyse a scene you shot earlier and make the new footage match it in realtime, using data that can be passed directly to the post grading software. Dynamic LUTs that adapt according to what the camera is shooting. Perfect skin tones every time because faces are recognised and fine-tuned accordingly.

And this is before we get to talking about capturing realtime depth information via LiDAR or some other method. It might sound as if I have gone off on a tangent, but this is the future that Colourlab has in mind, and will very likely be at the forefront of. It’s a complete step-change in the way we think about how the post process is done, and we have only just begun on that journey. It’s going to be an epic ride!
