Virtualisation is something we don't think about often. The fact that it is possible at all is quite incredible, and it could unlock the doors to massive amounts of computing power.

It's tempting to think that the idea of "virtualisation" came from the optical science concept of a virtual image. The best definition of a virtual image that I've seen - without getting into maths or optical diagrams - is this one from the Merriam-Webster online dictionary:

"An image (such as one seen in a plane mirror) formed of points from which divergent rays (as of light) seem to emanate without actually doing so". [https://www.merriam-webster.com/dictionary/virtual%20image]

I like the sense that something appears real, like it does in a mirror, but isn't actually there. In technology, it increasingly doesn't matter whether something is real or not, as long as, for its intended purpose, it does the same as the real thing.

This principle seems on the verge of being crucial to all of us in our personal and professional lives, except for one thing: It already is.

You could argue that virtual phenomena have been part of our everyday reality ever since radio was invented. Those voices on the valve radiogram in the 1950s? They're not real. Those people aren't in the furniture.

You can go back further, to the dawn of photography. A picture of a zebra is not a zebra. But from a distance, it's functionally the same. We should maybe even mention cave paintings.

Functional equivalence

And words. Ever since we could write things down, a list of items, for example, has not been the same as the items themselves but, again, it is functionally equivalent to being shown the physical objects in order to remember them.

It all hinges on that term "functionally equivalent".

Another way of looking at this: how many car drivers actually know how a clutch works? Quite a few, but by no means everyone. Yet the people who don't truly understand why they need to disengage the clutch still learn to do it perfectly, without the faintest idea of why it's necessary. To them, the clutch might as well be virtual.

You could even build a car that was actually automatic, but which, for the sake of those who insist on changing gear, had a gear stick that made a synthesised crunching noise whenever the driver forgot to use the fake clutch pedal that's also provided.

The point of this preamble is to show that virtualisation is normal to us. Nothing is surprising or remotely difficult about it. But that doesn't make it any easier to understand the extraordinarily clever and accomplished ways that virtualisation is propelling technology forward even faster than conventional exponential frames of reference would suggest.

Let's park cave paintings and antique furniture here and fast forward to the present, give or take a year or two.

Apple Rosetta

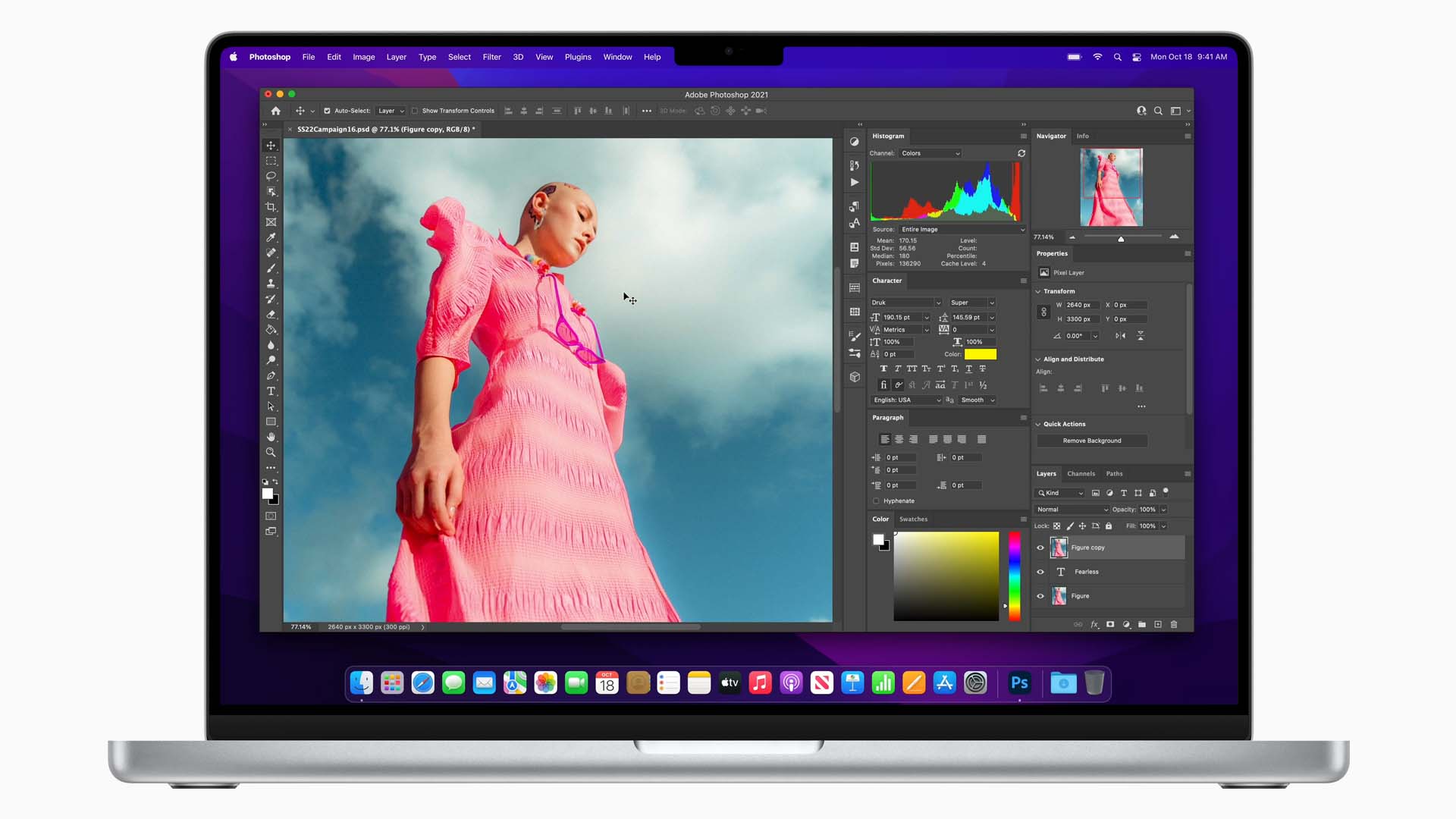

When Apple released its Apple Silicon-based laptops, it wasn't easy to know exactly what to expect. They were clearly faster, but the actual lived experience of a Mac user was much harder to predict because, in the early days, most existing software packages wouldn't run natively on the new Apple-designed chips.

My first opportunity to find out was when I bought a new M1-based MacBook Air to replace my partner's previous one. I offered to install all her software on her new computer. First up, I loaded Chrome. It ran pretty quickly, impressively so. Then I noticed that I had downloaded the Intel version.

You and I would be entitled to wonder how a software package written for an Intel platform could even run on such a "foreign" architecture. By rights, it shouldn't get past the very first instruction. Yet it not only ran, it did so unexpectedly fast. How?

It's because Apple has written a translation layer, Rosetta 2, that makes software written for Intel "think" that it's running natively on an Intel chip. Essentially, Apple has built what behaves like an Intel emulator - a separate piece of software that does run natively on Apple Silicon and translates Intel instructions into ones the new chip understands - whose sole purpose is to look like an Intel processor to programs that need that interface. Normally you'd expect a program running on an emulated processor to run more slowly than you're used to. But Apple Silicon is just so fast that the Intel version was a perky performer even under emulation.

This is a brilliant example of virtualisation.
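If you'd like to see the general idea in miniature, here is a toy sketch in Python. To be clear, this is not how Rosetta works internally. The "guest" instruction set (`LOAD`, `ADD`), the register names and the `translate` and `run` functions are all invented for illustration. The only point is that a program written for one "machine" can be translated, once, into something the host can run directly.

```python
# Toy illustration only: a made-up "guest" instruction set, not real Intel or Arm code.
# Each guest instruction is a tuple: (opcode, operand, operand).

def translate(guest_program):
    """Translate each guest instruction into a host-native Python callable, up front."""
    def make_load(reg, value):
        def op(regs):
            regs[reg] = value          # put a constant into a register
        return op

    def make_add(dst, src):
        def op(regs):
            regs[dst] += regs[src]     # add one register into another
        return op

    builders = {"LOAD": make_load, "ADD": make_add}
    return [builders[opcode](*args) for opcode, *args in guest_program]


def run(host_program):
    """Run the translated program on the 'host' and return the final registers."""
    regs = {"a": 0, "b": 0}
    for op in host_program:
        op(regs)
    return regs


guest_program = [
    ("LOAD", "a", 2),
    ("LOAD", "b", 40),
    ("ADD", "a", "b"),
]

result = run(translate(guest_program))
print(result["a"])  # prints 42
```

The guest program never finds out that its "processor" is a handful of Python functions. As far as it is concerned, the registers behave exactly as they should, which is all that functional equivalence asks for.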

To complete the story, I need to tell you that when I downloaded the Apple Silicon-native version of Chrome, it ran even faster; much faster. That was incredibly impressive, but what impressed me most was that Chrome ran easily fast enough in emulation. If that had remained the only way to use it, that would have been OK for me. I wouldn't have minded, and I wouldn't have known how much faster it would have run natively. So I would have been happy that my Intel-focused software was running on something completely different.

That principle - that we don't care what computing platforms underlie our programs - is profound and widespread. And you won't be surprised to know that this is an everyday experience for us - because of web browsers.

Early browsers weren't much more than basic interpreters of a simple markup language. Things are a bit different today: this morning, I shared a video call with eleven other people, hosted in my browser. Crucially, that experience would have been essentially the same if I had been on a PC, Mac, Linux, Android, iOS or any other platform that supported the same browser. The browser was a virtual machine.

The essential point is that virtualisation is everywhere, and it will increasingly make computing platforms both equivalent and invisible.

They'll be equivalent because they'll be so much faster than we need for everyday tasks that we won't mind the inevitable slowdown that comes with emulation. They'll be invisible because they are already. Do you know what kind of computer is hosting that Zoom call? What about your email? What about that song on Spotify? Or the film you just watched on Netflix?

It doesn't matter, and it will matter less and less in the future. Virtualisation is the gateway to massive increases in computing power because it allows us to run software anywhere, on almost anything. Couple this with the geographical ubiquity of the cloud and the vast bandwidth and low latency of 5G and beyond, and it seems likely that we will see computers differently in the future.

Instead of thinking of computers as distinct objects, we will think of "compute" - the rather prosaic term that IT professionals and engineers use for computing power - almost as a fungible substance. You can split it up, divide it and distribute it as much as you like. It's a new way of thinking about a trend as old as cave painting.

