Replay: RISC processing is alive and well, and it makes modern CPU performance possible.

Now that we’ve examined one technology which fell and one that endures, consider one that actively thrives: reduced instruction set computing, or RISC. Whereas conventional CPUs spend huge numbers of transistors implementing complex instructions, a RISC CPU uses a larger number of simpler instructions, with the hope of going faster overall as a result. The idea was the darling of computer scientists from the 1980s onwards, and for a decade or so it seemed that RISC would succeed. Apple, IBM and Motorola collaborated to create the PowerPC architecture for Power Macs, Sun had been developing SPARC since the mid-80s and DEC introduced the Alpha line in 1992 to replace the prehistoric VAX. The biggest general-purpose computer platforms that weren’t Windows were going RISC.

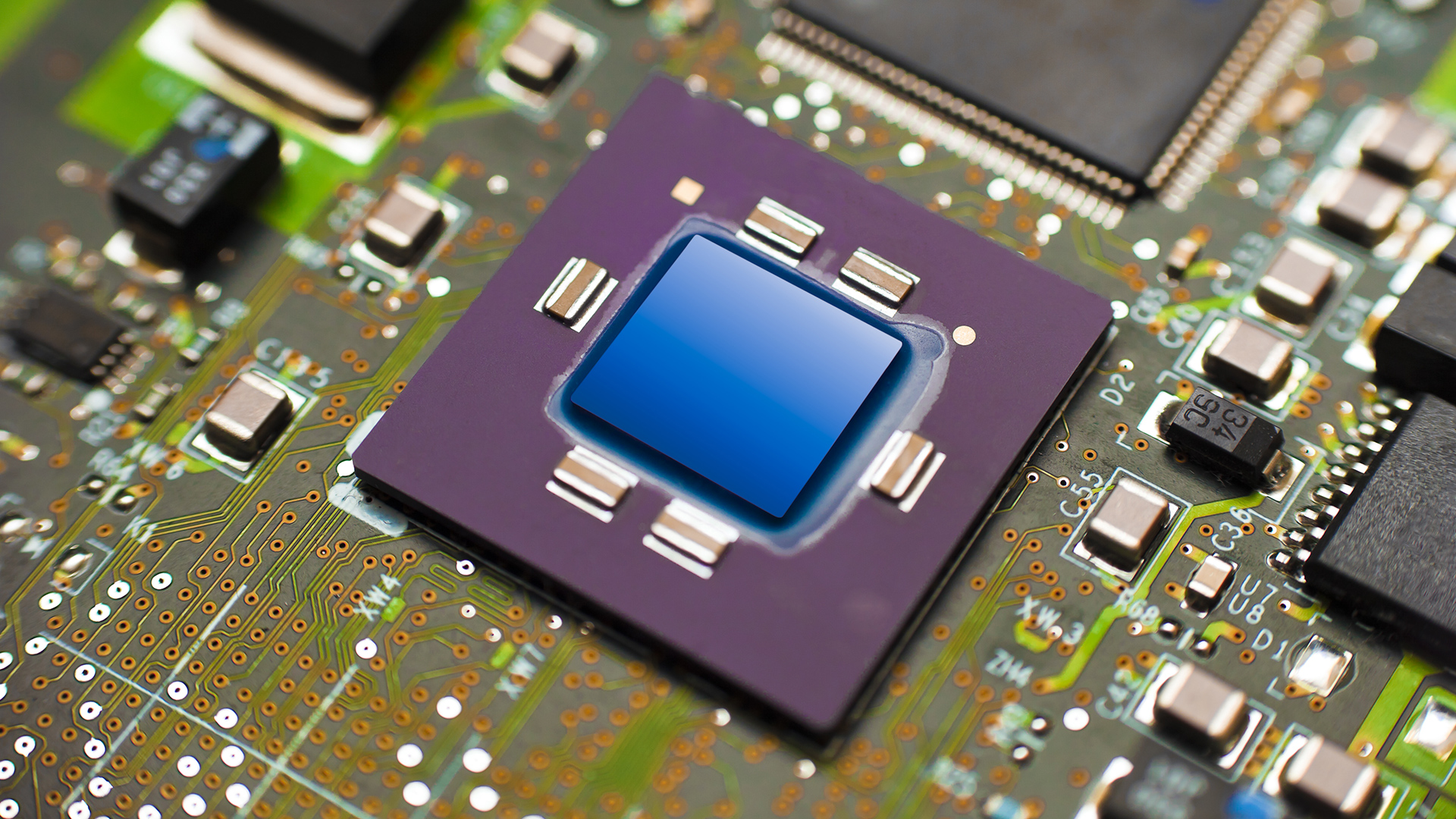

Many modern CPUs actually use a RISC core to - sort of - emulate the required instructions

From one point of view, the idea almost fell in the same way as plasma screens. Intel, having tried its own i860 and i960 RISC designs during the late 80s and early 90s, was able to take the development of its non-RISC CPUs, whose derivatives we still use today, way beyond what early RISC could do. The late 90s and early 2000s also saw extremely healthy competition between Intel and AMD, something that probably wasn’t a joy to experience from inside either organisation but which did the computer industry overall a lot of good.

Compaq bought DEC in 1998 and announced in 2001 that Alpha would be phased out, and Apple finally announced it was going Intel in 2005. SPARC lasted longer; Oracle, by then the owner of what had been Sun Microsystems, ended the project in 2017. It would have been easy to assume that RISC was dead, killed by the march of Intel and AMD chips that could run Windows (and consequently, and more importantly, much of the world’s best software). Even Itanium, Intel’s pet project intended for the high end, ultimately couldn’t hold a candle to the mainstream.

Look closer, though, and realise that it was RISC that made much of that possible. Modern CPUs that run x86 code often don’t run it directly; the CPU is effectively implemented as a RISC-like core with extra circuitry, including microcode, that breaks complex instructions down into simpler internal operations. That’s a large part of how modern CPUs can be pushed to the clock speeds they reach and, yes, it has complicated implications for performance.
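
To make that breaking-down step a little more concrete, here is a minimal Python sketch of the idea. It is purely a toy: the instruction tuples, the `tmp_src` and `tmp_dest` registers and the `decode` function are all invented for illustration and don't correspond to the internals of any real Intel or AMD chip. It only shows how one complex instruction that touches memory might become several simple load, compute and store steps.

```python
# Toy illustration only: the instruction format, the temporary register
# names and the micro-op tuples are hypothetical, not from any real CPU.

def decode(instruction):
    """Split one complex instruction into simple load/compute/store steps.

    An instruction is a tuple (operation, destination, source). A memory
    operand is written as a string in square brackets, e.g. "[0x10]".
    """
    op, dest, src = instruction
    micro_ops = []

    # If the source lives in memory, fetch it into a temporary register first.
    if src.startswith("["):
        micro_ops.append(("load", "tmp_src", src))
        src = "tmp_src"

    if dest.startswith("["):
        # Read-modify-write on memory becomes three simple steps.
        micro_ops.append(("load", "tmp_dest", dest))
        micro_ops.append((op, "tmp_dest", src))
        micro_ops.append(("store", dest, "tmp_dest"))
    else:
        # Register-only work is already "RISC-like" and passes straight through.
        micro_ops.append((op, dest, src))

    return micro_ops


if __name__ == "__main__":
    for step in decode(("add", "[0x10]", "r1")):
        print(step)
```

In this sketch a register-to-register `add` comes out as a single step, while an `add` whose destination is in memory becomes a load, an add and a store, which is the general shape of the trade the paragraph above describes.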

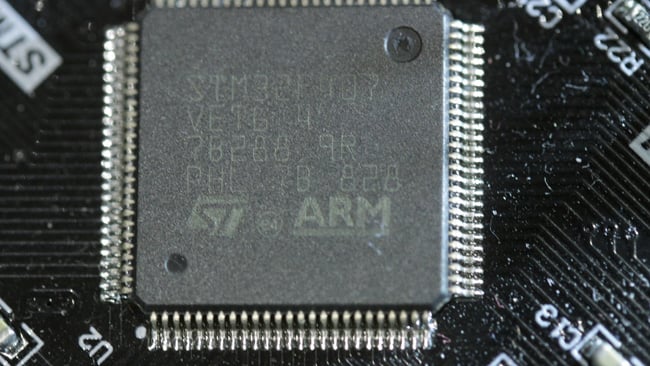

This STM32F407 microcontroller uses as its brain an Arm Cortex-M4 CPU running at 168 MHz. The development board cost £10. Weep, mid-90s computer users

But that’s not the main reason to think RISC is ascendant. Plasma screens failed and stacked RGB image sensors just about survive, but ARM CPUs, used in practically every phone and tablet on the planet, are a RISC design. Even though some of the giddier predictions of low power consumption and high performance might have been a little hopeful, there is now a genuine prospect of Intel’s old architecture facing replacement. It's a small prospect, but a genuine one, which shows that despite the sheer power of the incumbent, we should all keep on having interesting new ideas.
