New neural network AI developments from Google look set to completely revolutionise hardware.

The world of computer hardware has been in something of a funk for some time. That's a deeply unwelcome, and somewhat depressing, situation for those of us who worked (or played Doom) through the white heat of the 1990s, a period when Moore's law was in full effect and clock speeds doubled and redoubled fast enough to make last year's video games look very anemic. Unfortunately, a combination of technological and market forces have conspired to slow that process to a crawl,

Tensor

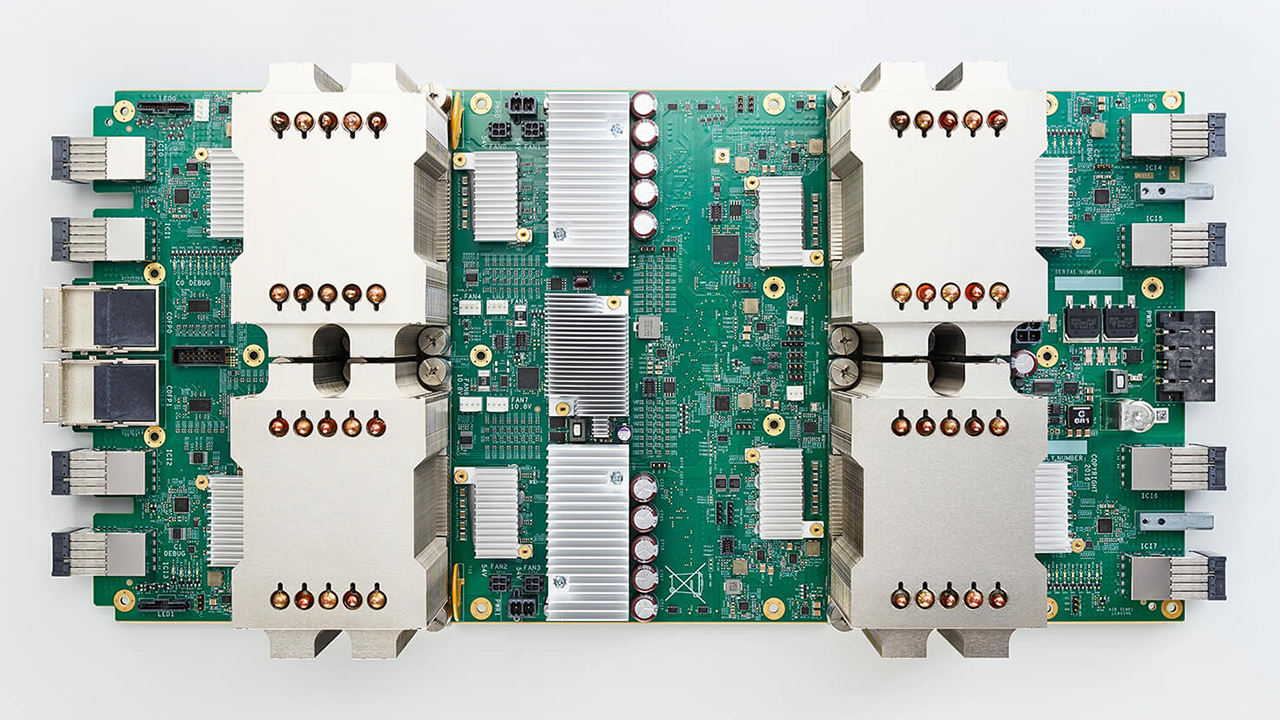

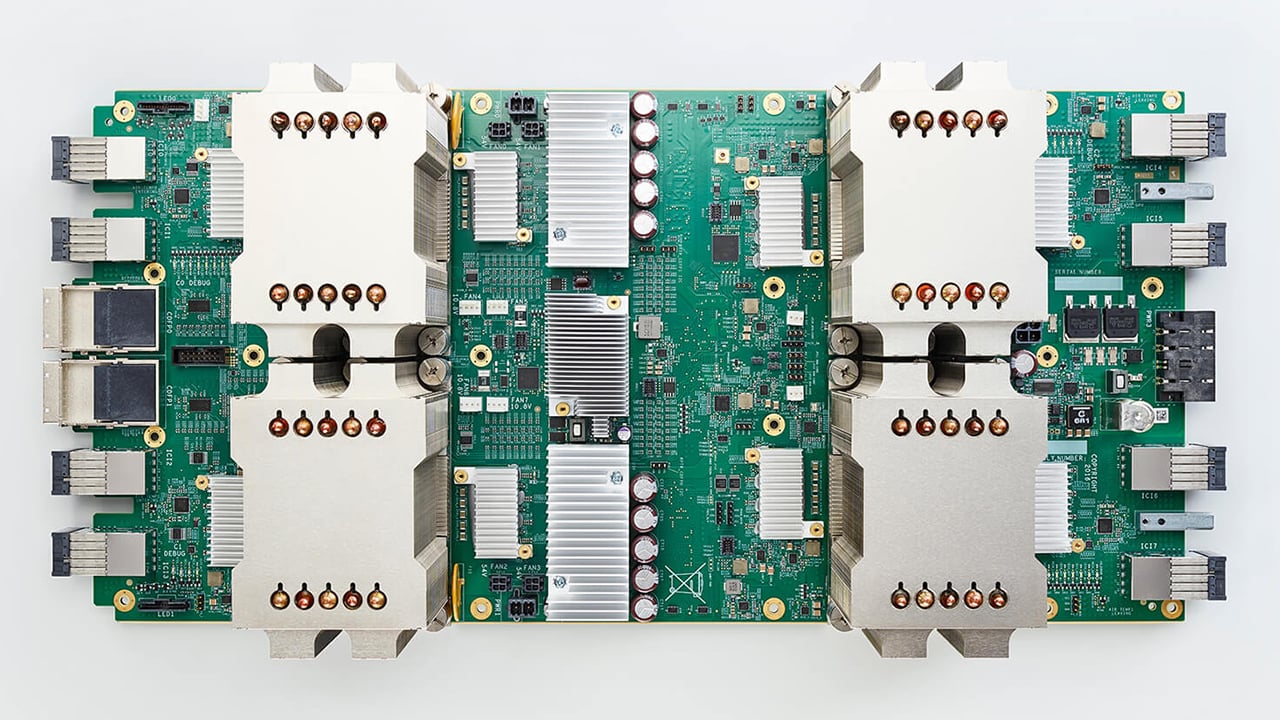

Earlier this month, however, Google announced the third version of TPU. What's TPU? A tensor processing unit. What's a tensor? Well, it's related to Google's artificial intelligence platform, Tensor Flow, so a TPU is a hardware device designed to help in the running of an artificial intelligence. That's a good idea: conventional computer processors will do it, but, as we've seen, they're hardly exploding in performance at the moment. AI, particularly neural networks (which we discussed here), have to do quite a lot of similar things in parallel, so they're quite amenable to being run on custom hardware.

So, can you buy a neural network accelerator board for your PC? Well, not yet. Google have so far kept their entire TPU line in the family, deploying it to datacentres to work as part of a cloud resource. The other question is whether you'd want to. Google specialises in scale – that is, doing things a lot, such as putting computers in warehouses a lot and calling it the cloud – and to succeed in this realm, a TPU doesn't particularly have to do things faster. It just has to do them in less physical space and using less power. The design goals of a Google are rather different to those of a workstation manufacturer, although in the end it's not really clear what a personal TPU could do for you either way.

Look at how TPUs are actually designed and deployed, and this becomes clear. The second generation devices – which Google have talked about in much more detail than their recent announcement – are used in four-chip modules assembled into groups of 64, for a total of 256 individual devices. It's all about parallelism and spreading the load, and doing it for less power. What's difficult is to really compare and contrast the performance of TPU to anything else. Google tell us that the third revision is to be eight times the speed of the V2.0, but it's hard to meaningfully relate that to anything else. The field is just too new.

IBM TrueNorth

Google's TPU project is not the first thing of its type. As far back as 2014, IBM's TrueNorth project produced a device containing (to abbreviate cruelly) 268 million programmable synapses. The power consumption is flexible down to a tiny 70 milliwatts (0.07W), comparing startlingly with the 100W plus of a conventional computer CPU even when it's sitting with its proverbial feet up. The key selling point of this technology is fairly clear.

It's almost always worth a quick note on safety. The scenario of the Terminator films is, reportedly, not on the cards; in any case, AI safety is a real, serious area of research. With the third version of the TPU, Google's project becomes capable not only of running an established neural network, but also of training new ones. The internals of neural networks are already a little obscure – we really don't know what they're thinking, or rather we do, but we can't make head nor tail of it – and the larger and more complex they become, the bigger a problem this potentially creates.

Whether it's part of a workstation or available as a cloud resource, though, this sort of thing might just represent a solution to the long established stalemate in computer performance. Neural networks can be strange, erratic things if applied sloppily, but they can also do amazing things that otherwise don't seem likely to become available any other way, at least, not with reasonable performance.

Stand by to welcome our new, quicker-thinking robot overlords.

Tags: Technology

Comments