The future is very shiny. Realtime raytracing, once considered impossible, is now a reality thanks to Nvidia's RTX series. Now a company has found a way to make it work on other mainstream GPUs too.

Many of the images which accompany this article are of public-domain demonstrations of various CG techniques; thanks go to the authors who are named where a name was given.

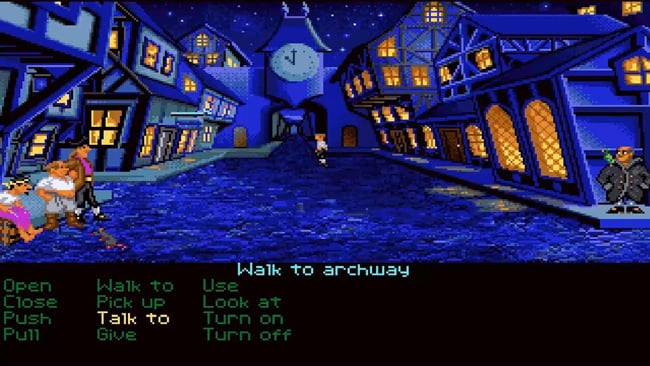

When LucasArts released The Secret of Monkey Island in 1990, it had long been obvious that video games were drawing heavily on the mise-en-scene of filmmaking. It would be another ten or twenty years before computers could render scenes that were passable imitations of big-screen entertainment, albeit in a slightly stylised, Pixar-animated sort of way. Even so, anyone who’s played a video game in the last ten years will have seen some of them achieve occasional, startling flashes of realism.

The Secret of Monkey Island correctly executes a blue-and-amber look that wouldn't become popular in filmmaking for another decade or so

The rendering technologies used for big movies are different to the realtime rendering intended for video games, but Nvidia has recently taken some steps to close that gap with its RTX series of GPUs. These include a couple of different technologies for raytracing, “raytracing” being the term for a computer making a fairly literal simulation of individual rays of light as they reflect from various surfaces. It’s accurate, but it’s far too slow for many applications, particularly games.

The rendering that’s done for video games works differently: it roughly simulates how light behaves in the real world, skipping the difficult bits. The simulated world is made out of thousands of triangles, and for each one the computer projects a two-dimensional on-screen version of the triangle based on where its three corners are in 3D space, where the camera is and what it’s looking at. The triangle is then filled in pixel by pixel, traditionally one horizontal row of pixels, or scanline, at a time, which is where the term scanline rendering comes from.
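The projection step can be sketched in a few lines of Python. This is a minimal illustration assuming a simple pinhole camera defined by an eye position and a look-at target; the function names, default field of view and screen resolution are all made up for the example, and a real engine does this with 4x4 matrices on the GPU rather than point by point.

```python
import math

# Hypothetical helpers for a minimal pinhole-camera projection (illustration only).

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)

def project_point(p, eye, target, fov_deg=90.0, width=640, height=480,
                  up=(0.0, 1.0, 0.0)):
    """Map a 3D point to pixel coordinates for a camera at `eye` looking at `target`.
    Returns None for points behind the camera. Assumes the view direction is not
    parallel to `up` (otherwise the cross product below is zero)."""
    # Build the camera's right/up/forward axes from where it is and what it looks at
    forward = normalize(sub(target, eye))
    right = normalize(cross(forward, up))
    cam_up = cross(right, forward)
    # Express the point relative to the camera
    rel = sub(p, eye)
    x, y, z = dot(rel, right), dot(rel, cam_up), dot(rel, forward)
    if z <= 0:
        return None
    # Perspective divide: the further away the point, the closer to the centre it lands
    scale = 1.0 / math.tan(math.radians(fov_deg) / 2)
    ndc_x = x * scale / (width / height) / z
    ndc_y = y * scale / z
    # Convert from the [-1, 1] range to pixels, with y increasing down the screen
    return ((ndc_x + 1) * 0.5 * width, (1 - ndc_y) * 0.5 * height)

def project_triangle(triangle, eye, target, **camera):
    """Project all three corners; a rasteriser would then fill the 2D triangle."""
    return [project_point(v, eye, target, **camera) for v in triangle]
```

For example, a point at `(1.0, 0.0, -2.0)` lands nearer the centre of the screen than the same point at `z = -1.0`, which is the perspective effect that makes distant objects look smaller.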

No, your PlayStation 3 could not do this in 2007, at least not at the resolution and level of detail Crysis managed. Yes, the required PC cost more than the PS3

Surface texture can be simulated with an image: each corner of a triangle is assigned coordinates that dictate which part of the image should cover the triangle. Lighting can be simulated by adding brightness to the polygon based on the distance, angle and colour of the light. The list of processes goes on and on, through bump and normal mapping, specularity, fog, per-pixel interpolated lighting, environment and reflection mapping, shadow mapping, ambient occlusion, and more. It’s all an attempt to simulate real-world lighting without doing the work of an accurate physical simulation.
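As a rough sketch of the first two of those processes, the Python below looks up a texture colour from coordinates assigned to a triangle's corners and then lights it. It assumes nearest-neighbour texture sampling, a Lambertian (cosine) diffuse model and inverse-square falloff, which are common textbook choices rather than what any particular game does; all helper names are hypothetical.

```python
import math

# Hypothetical helpers: nearest-neighbour texturing plus simple diffuse lighting.

def interpolate_uv(corner_uvs, weights):
    """Blend the texture coordinates assigned to a triangle's three corners,
    using barycentric weights (which sum to 1) for a point inside it."""
    u = sum(w * uv[0] for w, uv in zip(weights, corner_uvs))
    v = sum(w * uv[1] for w, uv in zip(weights, corner_uvs))
    return u, v

def sample_texture(texture, u, v):
    """Pick the texel at (u, v), both in [0, 1], from a 2D list of RGB tuples."""
    height, width = len(texture), len(texture[0])
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]

def shade(albedo, normal, point, light_pos, light_colour, light_intensity=1.0):
    """Brighten a surface colour by the light's angle, distance and colour.
    Assumes `normal` is unit length; uses Lambert's cosine law and 1/d^2 falloff."""
    to_light = [l - p for l, p in zip(light_pos, point)]
    distance = math.sqrt(sum(c * c for c in to_light))
    direction = [c / distance for c in to_light]
    # Surfaces facing the light get the most; surfaces facing away get nothing
    cos_angle = max(0.0, sum(n * d for n, d in zip(normal, direction)))
    falloff = light_intensity / (distance * distance)
    return tuple(a * c * cos_angle * falloff for a, c in zip(albedo, light_colour))
```

Per-pixel lighting, in this picture, just means calling something like `shade` for every pixel rather than once per corner and blending the results.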

Shadows

But the system we’ve described provides no way for objects to cast shadows on one another, and no way for them to reflect one another. Shadowcasting can be approximated by rendering the scene from the point of view of a light and storing an image in which grey values represent how far from the light the nearest object is at each pixel; if a point on a surface is further from the light than the value of the corresponding pixel in that image, something else is in the way and the point is shadowed. Reflections can be approximated by applying an image, a texture map, that represents what the world looks like from the position of the object in question. That image remains static as the object moves around, but given appropriate artwork, the result can look very much like a reflection of the environment.
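The shadow-map comparison can be sketched as two passes. To keep it short, this assumes a hypothetical light directly overhead shining straight down (an orthographic projection), so the map is indexed by x and z and depth is simply the distance below the light; real engines render the map with the light's own projection. The small `bias` is a standard guard against surfaces wrongly shadowing themselves because of rounding.

```python
import math

LIGHT_HEIGHT = 10.0  # hypothetical overhead light, looking straight down
GRID = 8             # shadow map resolution (texels per side)
CELL = 1.0           # world units covered by one texel

def texel_of(p):
    x, _, z = p
    return int(math.floor(x / CELL)), int(math.floor(z / CELL))

def depth_from_light(p):
    return LIGHT_HEIGHT - p[1]

def build_shadow_map(points):
    """Pass 1: 'render' the scene from the light, keeping the nearest depth per texel."""
    shadow_map = [[math.inf] * GRID for _ in range(GRID)]
    for p in points:
        tx, tz = texel_of(p)
        if 0 <= tx < GRID and 0 <= tz < GRID:
            shadow_map[tz][tx] = min(shadow_map[tz][tx], depth_from_light(p))
    return shadow_map

def in_shadow(p, shadow_map, bias=1e-3):
    """Pass 2: a point is shadowed if it is further from the light than
    the nearest thing the light saw in the same direction."""
    tx, tz = texel_of(p)
    if not (0 <= tx < GRID and 0 <= tz < GRID):
        return False  # outside the map: treat as lit
    return depth_from_light(p) > shadow_map[tz][tx] + bias
```

With a floor made of points at height 0 and the top of a box at height 3, the floor directly under the box comes back shadowed while the box top and the rest of the floor stay lit.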

Screen space ambient occlusion, shown in isolation. It simulates darkness in hidden corners much as a global illumination render would, without the hard work. There are limitations

These techniques don’t deal with moving objects unless they’re recalculated every frame to take account of the motion. Often, only specific objects can cast and receive shadows; those objects are chosen at the design stage, and levels are built so that the limitations don’t show up in normal gameplay. Lighting on fixed surfaces in video games is often precalculated at the design stage and painted onto the world as a fixed image. Often, the only objects that use the techniques we’ve discussed at all are the good guys and the bad guys, who need to be able to move through different environments.

Enter raytracing

It might seem that we’re doing so much work to avoid doing it properly that we might as well just do it properly, which is the point of raytracing. When we raytrace a scene, we do a much more literal simulation of real-world light, calculating rays as they bounce around and modifying their colour and brightness with each bounce. It is hard work, and it is often reserved for particularly important objects in the scene. Nvidia’s RTX architecture can do it in realtime, although even with the blinding speed of a modern GPU it relies on tracing a limited number of randomly sampled rays, then using noise reduction to smooth out the resulting speckle.
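The bounce-and-tint idea can be illustrated with a very small recursive raytracer. This sketch assumes mirror-like spheres only, each described by a hypothetical `(centre, radius, colour, reflectivity)` tuple, and unit-length ray directions; it deliberately leaves out the random sampling and denoising that RTX-style realtime raytracing relies on.

```python
import math

# Minimal vector helpers (hypothetical, for illustration)
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def mul(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def norm(a): return mul(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, centre, radius):
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, centre)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small epsilon so a bounced ray doesn't re-hit its own surface
            return t
    return None

def reflect(d, n):
    """Mirror direction d about surface normal n."""
    return sub(d, mul(n, 2 * dot(d, n)))

def trace(origin, direction, spheres, sky, depth=0, max_depth=3):
    """Follow one ray through the scene, bouncing off reflective spheres."""
    nearest = None
    for s in spheres:
        t = hit_sphere(origin, direction, s[0], s[1])
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, s)
    if nearest is None:
        return sky  # the ray escaped the scene
    t, (centre, radius, colour, reflectivity) = nearest
    point = add(origin, mul(direction, t))
    normal = norm(sub(point, centre))
    if depth >= max_depth:
        return colour
    bounced = trace(point, reflect(direction, normal), spheres, sky, depth + 1, max_depth)
    # Each bounce blends the surface's own colour with whatever the reflected ray saw
    return tuple(c * (1 - reflectivity) + b * reflectivity for c, b in zip(colour, bounced))
```

A half-reflective red sphere in front of the camera, with a blue sky behind the viewer, comes out purple: half its own colour, half the sky it reflects. Calling `trace` once per pixel gives a complete (if very slow) image.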

This is an example of environment reflection mapping at work. The colours on the surface of the object look convincingly like reflections, but really aren't

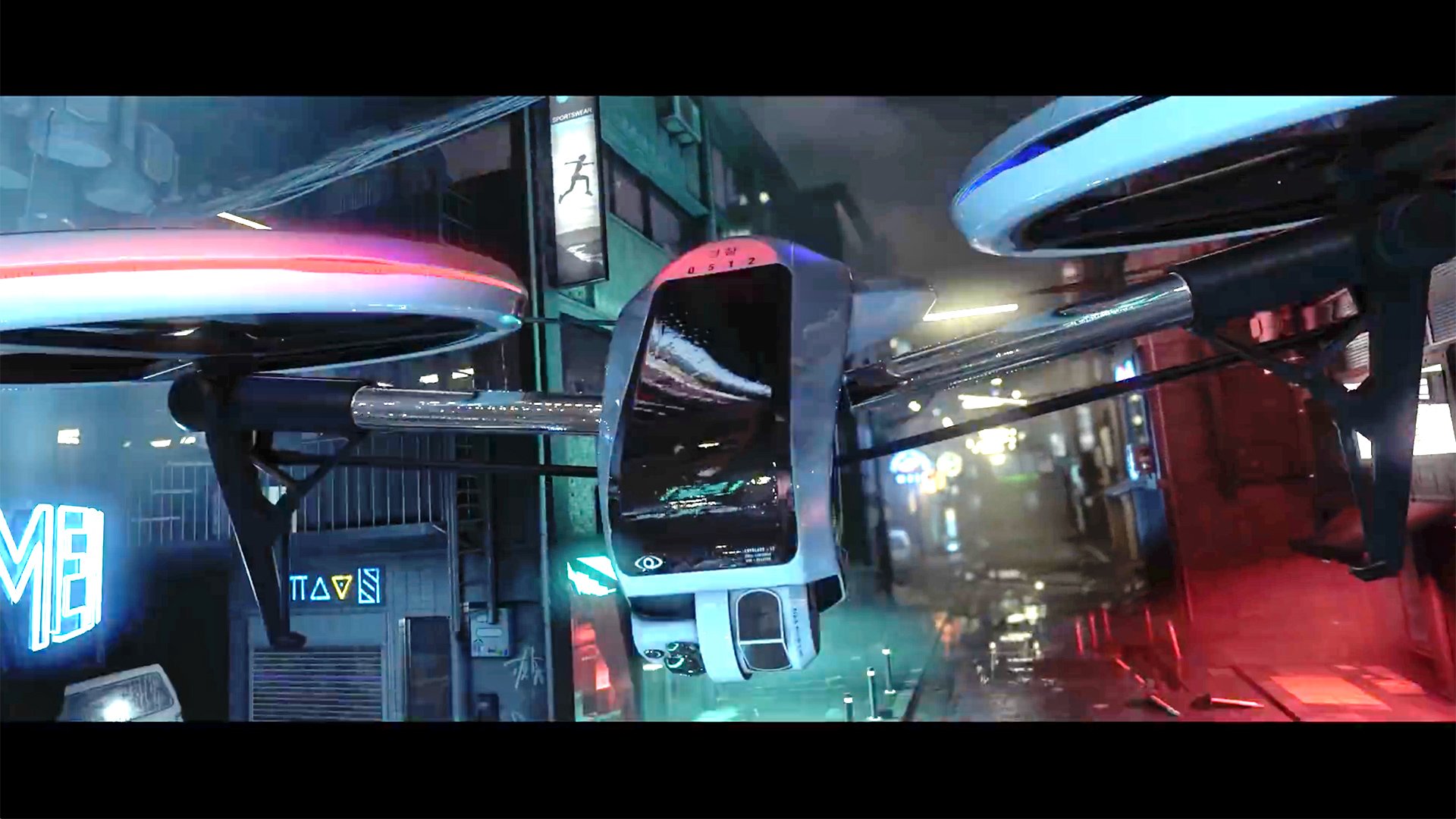

And it only works on Nvidia’s RTX-series GPUs. The development which prompted this article is by Crytek, the company that created Crysis. Crysis didn’t use raytracing, but it did use every trick in the book and invented some new ones. In a move that goes some way towards breaking Nvidia’s vendor lock-in on RTX, the company has worked hard on alternative raytracing techniques that run on a range of GPUs, and has produced the short film Neon Noir to demonstrate them. Things to look for include reflections of moving objects, particularly the drone as it flies around and casts light on things. The drone can even reflect itself, with animated light on both the reflected object and the reflecting surface.

In a raytraced scene, reflections are accurately depicted. Example image by Wikipedia user Gilles Tran

The reflections of the shell casings lying in the puddle reveal that the reflected objects have a noticeably lower polygon count than the originals. Geometrically, a reflection is simple enough: simulating one means rendering the object again from a different point of view, and it’s faster to render a lower-polygon version of the object for that pass.
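Rendering a puddle reflection "from a different point of view" is commonly done with planar reflections: the camera is mirrored below the water surface and the scene is drawn again from there. The sketch below shows that mirroring plus a distance-based level-of-detail choice with an extra bias for the reflection pass. It illustrates the general idea under those assumptions, with hypothetical names, and is not a description of how Crytek's renderer works; a full implementation would also clip away geometry below the surface and account for the mirrored image's reversed handedness.

```python
# Hypothetical helpers illustrating planar reflections and level-of-detail selection.

def mirror_across_plane(point, plane_y):
    """Reflect a point across a horizontal surface (e.g. a puddle) at height plane_y."""
    x, y, z = point
    return (x, 2 * plane_y - y, z)

def reflection_camera(eye, target, plane_y):
    """Mirror the camera and its look-at target below the surface; rendering the
    scene from here produces the image seen in the reflection."""
    return mirror_across_plane(eye, plane_y), mirror_across_plane(target, plane_y)

def choose_lod(distance, lod_distances, reflection_bias=0):
    """Pick a mesh detail level (0 = full detail) from the distance to the camera.
    lod_distances are ascending switch-over distances; reflection_bias > 0 drops
    to coarser meshes, as a reflection pass might."""
    level = sum(1 for d in lod_distances if distance > d)
    return min(level + reflection_bias, len(lod_distances))
```

With switch-over distances of 10, 20 and 40 units, a shell casing 5 units away is drawn at full detail in the main view but one level coarser in its reflection.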

This is not (or not entirely) a raytraced scene, but the red light on the drone reflects in its own body. Since the lights change, this cannot (easily) be simulated with environment mapping

So it works, and it scales to the capability of the available hardware. It seems plausible that RTX, and perhaps a compatible (or comparable) AMD implementation, will become fairly mainstream, though Crytek are presumably as aware of this as we are and may have engineered their technology so that the work can be handed off to dedicated hardware where it exists. Realtime and offline rendering have always been identifiably different, with little sign of the two converging, but that convergence is now easier to imagine than ever. With the capability of realtime rendering going through the roof, the future looks bright. And slightly shiny.
