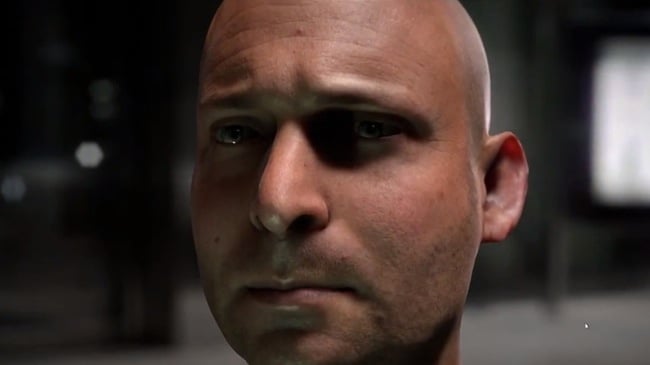

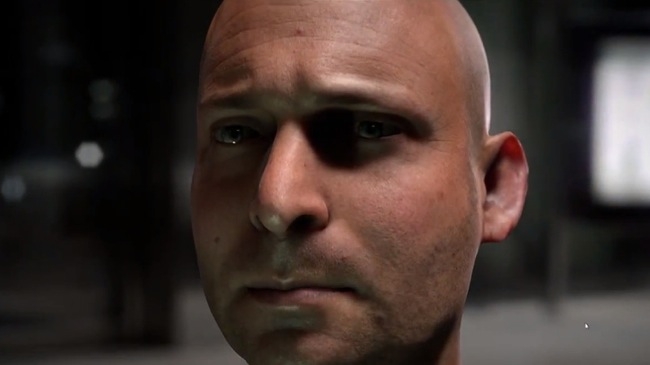

Nvidia Digital Ira

It's really, really hard to create a CGI human face that's convincing. If you know this, then you also know why so many blockbuster movies (Antz, A Bug's Life, Cars, WALL•E, etc.) have been about things and not humans.

The Adventures of Tintin was very good, but fell way short of being convincing, and even fell short of not being a little bit creepy. And as for The Polar Express…

All of these films have had the luxury of not having to be rendered in real time. In fact, real-time rendering couldn't be further from the circumstances in which they were made. A single frame can take hours to render, which is not at all surprising when you look at the complexity of some of the shots, and when you think about the computing hardware available at the time.

Progress

Now, at RedShark, we often talk about exponential progress, and the idea that the curve showing the acceleration of technology is getting steeper all the time. What this means is that cleverer things happen more and more often, with less space in between them. And at some point the curve will be so close to vertical that we will cease to be able to establish any trends whatsoever, because everything is happening so quickly. Things will happen unexpectedly.

There already?

There's increasing evidence that we may already be there.

What if we said that it's now possible to create a talking head that's pretty convincing? That it's not creepy at all? That we've finally crossed the "uncanny valley"?

That, in itself, would be remarkable.

But wait, there's more.

What if we told you that this realistic animation was rendered in real time, on a single, commercially available GPU?

You really have to see this demonstration to believe it. Watch the clip, and in the last few minutes you'll see an almost unbelievably good computer-generated, real-time human head.

You can almost forgive them for not giving it hair as well! (Hair is notoriously difficult to render. It is possible to do it nicely, but it would probably have slowed this demo down enough to make it impossible in real time.)

What you're seeing here is a new technology from Nvidia called FaceWorks.

Skin shader model

It's based around several new ideas including photographic techniques that capture an actor's 3D face and expressions, as well as a multitude of elements needed to reproduce an actor's skin. Each pixel in the demonstration uses a shader model with over 8000 lines of code.

All of the expressions and skin elements create a huge amount of data that is then distilled into information that is more manageable. Not all of it is unique: some of it is derived from a pool of characteristics that are shared from person to person.

This could change everything. It almost hurts to think of all the possibilities. Imagine the new creative options this opens up: you could use motion capture to map a "performance" onto another, completely realistic-looking actor, again in real time.

This is a 12-minute presentation by Nvidia's CEO, Jen-Hsun Huang. The amazing talking head, "Digital Ira", is towards the end.

And here's an article we wrote in January about the Uncanny Valley
