Major CGI Skin Advancement


In advance of Siggraph 2015, a team of researchers from the University of Southern California details a process for making CGI skin more realistic, taking us one step closer to finally bridging that 'Uncanny Valley'.

If you're a regular reader of RedShark News, you're likely familiar with the concept of the 'Uncanny Valley'. For those approaching the term for the first time, here's a quick primer: the 'Uncanny Valley' refers to the difficulty of making a CGI human so faithful to its subject that a viewer will accept it as 'the real thing'. CGI imagery has undoubtedly advanced by leaps and bounds over the last decade. With CGI humans, however, there's still a gap between what's being produced and what 'feels' right to the viewer. This gap is the 'Uncanny Valley', the 'so close yet so far' conundrum. The closer a CGI human gets to being photo-realistic and behaving naturally, the more its comparatively minor missteps stand out, because those missteps are exactly what keeps your brain from identifying what it sees as a real person.

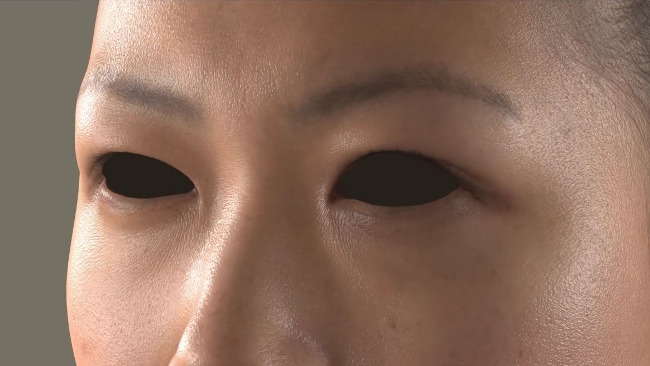

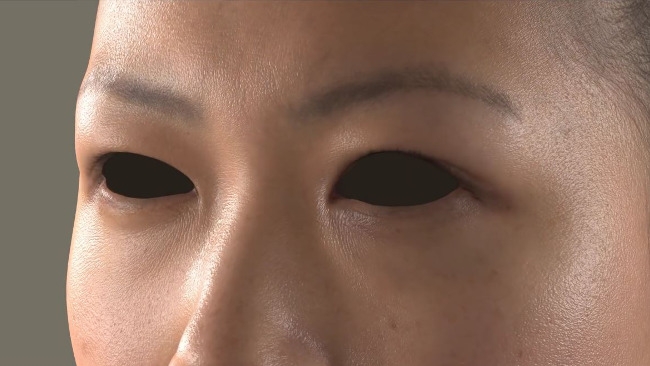

The three elements that have been identified as the main sticking points for faithful CGI human creation are hair, eyes and skin. While we may have to contend a little longer with hair that doesn't quite move or catch the light correctly, or with eyes that lack a spark of life, we may be on the verge of CGI skin so realistic that you won't be able to tell it from the genuine article.

A research team from USC's Institute for Creative Technologies has prepared a technical paper and video presentation describing a new method for "skin microstructure deformation with displacement map convolution." Previous efforts elsewhere focused on the dynamics of mesostructure (intermediate detail) at sub-millimeter resolutions. To capture microstructure dynamics instead, the new technique uses scans of real skin at 10-micron (1/100th of a millimeter) resolution, taken as the skin is mechanically stretched in stages. Charting the data derived from these scans, the team concluded that the skin surface looks rougher when compressed and shinier when stretched. The team then used the normal map (a texture that stores the orientation of the surface so that fine detail responds correctly to lighting) as a guide, blurring along the direction of stretching and sharpening along the direction of compression during displacement map convolution.
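
To make the blur-versus-sharpen idea concrete, here is a minimal one-dimensional sketch in Python. It filters a single line of displacement (height) samples according to one strain value: positive strain (stretch) applies a Gaussian blur, negative strain (compression) applies an unsharp mask. Everything here is an illustrative assumption rather than the USC team's actual filter: the function names, the linear strain-to-kernel-width mapping (`gain`), the Gaussian kernel, the unsharp-mask sharpening and the made-up sample profile. The real method works on 2D displacement maps and treats the stretch and compression directions separately.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve(signal, kernel):
    """Convolve a 1D signal with a symmetric kernel, clamping samples at the edges."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp-to-edge sampling
            acc += w * signal[j]
        out.append(acc)
    return out


def deform_displacement(profile, strain, gain=4.0, radius=3):
    """Hypothetical strain-driven filter for a 1D displacement profile.

    strain > 0 (stretch): blur, smoothing the fine detail.
    strain < 0 (compression): sharpen, exaggerating the fine detail.
    """
    if strain == 0:
        return list(profile)
    # Assumed mapping: kernel width grows linearly with the magnitude of strain.
    sigma = max(abs(strain) * gain, 1e-3)
    blurred = convolve(profile, gaussian_kernel(sigma, radius))
    if strain > 0:
        return blurred
    # Unsharp mask: add back the fine detail that the blur removed.
    return [p + (p - b) for p, b in zip(profile, blurred)]


def rms_roughness(profile):
    """Root-mean-square deviation of the heights from their mean."""
    mean = sum(profile) / len(profile)
    return math.sqrt(sum((p - mean) ** 2 for p in profile) / len(profile))


# Made-up height samples standing in for one line of a high-resolution skin scan.
skin = [0.5 * math.sin(1.3 * i) + math.sin(0.2 * i) for i in range(64)]
stretched = deform_displacement(skin, strain=0.25)
compressed = deform_displacement(skin, strain=-0.25)
print(rms_roughness(stretched), rms_roughness(skin), rms_roughness(compressed))
```

In this toy setup the stretched profile ends up with lower RMS roughness than the input and the compressed profile with higher, mirroring the "rougher when compressed, shinier when stretched" observation. A 2D version would need separate kernels along and across the stretch direction.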

That was a quick(ish) run-through of the process, but do yourself a favor and watch the video below. It not only outlines the process in more detail, but also demonstrates how closely this new CGI skin matches reality.
