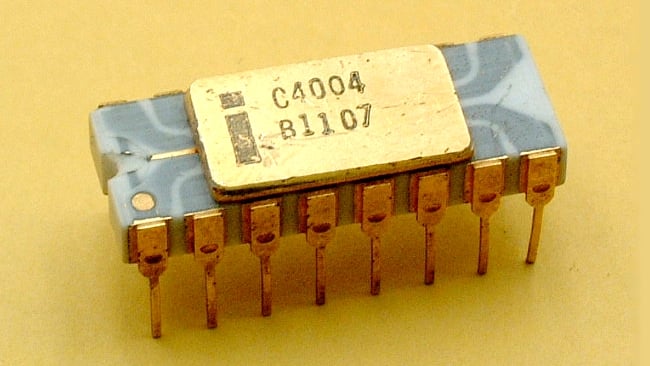

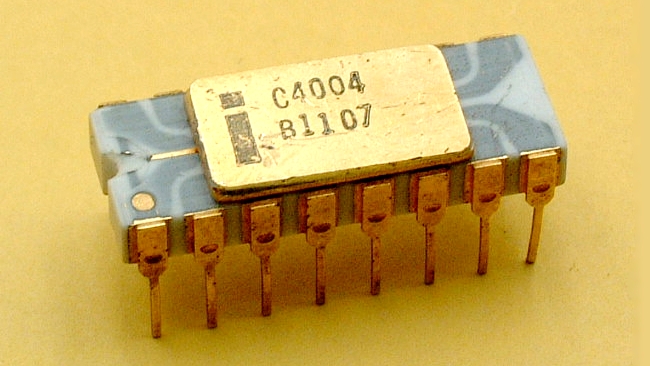

The end of Moore's law: Intel 4004

Moore's law is an assumption that may have run its course. So what happens in a post-Moore world? Is this the end of rapid development in digital video?

At the recent Dell Precision Day in Austin, Texas, one of the speakers, Ben Cochran, Senior Software Architect at Autodesk, made a point that cut straight through my jet-lagged, post-lunch narcolepsy. I'd asked him a question, and his answer was quite surprising.

I'm a big fan of the theory that technology is accelerating. There are enough articles in RedShark to brief you well on the subject if you haven't come across the idea before, but it's pretty well established: look at the last fifty years, and then at the fifty years before that, and there's plenty of evidence that things are changing faster.

Moore's law

Part of this, but only part, is Moore's law, which isn't a law at all but an observation that has also turned out to be a pretty accurate prediction. It's stated in several forms, but the essence is that as transistors get smaller, you can pack more of them onto the same piece of silicon. It's a virtuous circle: the more you cram in, the faster everything goes, and, amazingly, it all gets cheaper as well.

Typically, processors, and digital chips in general, can fit twice as much into the same space every 18 months or so.
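To put numbers on that, here is a minimal Python sketch. It assumes a clean doubling every 18 months, which real chips only approximate, and the function name `growth_factor` is purely illustrative.

```python
def growth_factor(years: float, doubling_period_years: float = 1.5) -> float:
    """How many times capacity multiplies over `years`, assuming a clean
    doubling every `doubling_period_years` (18 months by default)."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Print the multiplier after a few example spans of time.
    for years in (3, 6, 15):
        print(f"after {years:>2} years: x{growth_factor(years):,.0f}")
```

Fifteen years at that rate is ten doublings, which multiplies capacity by 1,024.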

Whether you call it a symptom or a cause of exponentially accelerating technology doesn't really matter: what matters is that with each generation of computing tools, we design the next, better generation.

All of this is established enough not to need justifying here. It just is. But when you look at smaller parts of the whole, there is a lot of variation: this is a macro effect that doesn't always hold at the micro level.

Heavy Duty

The speaker's point was that in the past (five to ten years ago, and earlier), if you wanted more computing power (to render something faster, say), you had to buy a more powerful machine. This is because the heavy-duty applications that need heavy-duty computers were written for a single processor. To make things run faster, you needed a faster computer. And that's where it gets expensive, just as it does with cars.

It's easy enough to make an ordinary car do sixty, or even a hundred, miles an hour. But a car that will do two hundred won't just cost twice as much as one that tops out at a hundred; it will probably cost ten or twenty times as much. And if you want one that will do four hundred, it will have to be a special design, probably with a jet engine, and the chances are that it will cost millions.

With computers, there's more to running fast than turning up a big, LED-illuminated knob labelled "Speed". That's why, with a single computer, the faster it gets, the (exponentially) more it costs.

But the era of constantly turning up clock speeds is behind us. Since about 2004, that hasn't been the preferred way to get more processing power.

Chips are so small

Now that chips are so small, you can fit more processing cores into the same space, which is how we got multi-core processors.

For ages, Intel has had working designs with 50 or so cores (and, for all we know, more). It just hasn't bothered to commercialise them, because there's little point: the industry still thinks eight cores are amazing.

And since processors are now so small, we can add them almost ad infinitum when we want more computing power. But here's the thing: if you get more computing power by adding processors, the cost goes up linearly. By contrast, getting more computing power by turning up the speed of a single computer, as we've seen, increases the cost exponentially.

So if the cost of computing power now rises only linearly, it is much cheaper than before. We get more compute power per buck, and that means we'll be building better tools again.
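To make the difference concrete, here's a toy Python comparison. Everything in it is hypothetical: the baseline price, the assumption that a single machine's price doubles for each extra unit of speed, and the assumption that the work splits perfectly across processors. It sketches the shape of the two cost curves, not real pricing.

```python
# Illustrative cost models only: the base price and the doubling-per-step
# price curve are hypothetical numbers, not real hardware prices.

BASE_PRICE = 1_000.0  # hypothetical price of one baseline machine or processor


def cost_faster_single_machine(speedup: float, steepness: float = 2.0) -> float:
    """Cost of one machine `speedup` times faster than the baseline, assuming
    price multiplies by `steepness` for each extra unit of speed (the 'fast car' case)."""
    return BASE_PRICE * steepness ** (speedup - 1)


def cost_more_processors(speedup: float) -> float:
    """Cost of the same speedup from adding baseline processors, assuming
    perfectly parallel work, so price grows linearly."""
    return BASE_PRICE * speedup


if __name__ == "__main__":
    for s in (1, 2, 4, 8, 16):
        print(f"{s:>2}x: single machine ${cost_faster_single_machine(s):>12,.0f}"
              f"   more processors ${cost_more_processors(s):>8,.0f}")
```

With these made-up numbers, a 16x speedup costs $16,000 via more processors but over $32 million via a single faster machine: the car analogy in miniature.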

A difference

What this shows, I think, is that there is, and always has been, a difference between Moore's law, which says that chip components halve in size, and processing power doubles, every 18 months, and the "law" of accelerating technology, which says that the power of technology as a whole is increasing exponentially.

On the face of it, they mean the same thing (regular doubling always means exponential growth), but Moore's law is about processors, or at least chips, alone, whereas accelerating technology covers virtually everything we do.
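The point that regular doubling always means exponential growth can be written out. If N_0 is the starting transistor count and T is the doubling period (symbols introduced here for illustration), then after time t:

$$N(t) = N_0 \cdot 2^{t/T} = N_0 \, e^{(\ln 2 / T)\, t}$$

With T = 1.5 years, that works out to a continuous growth rate of ln 2 / 1.5, or roughly 0.46 per year.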

Unlimited

The final part of the speaker's answer to my question about Moore's law was illuminating. I had asked: "What's the default assumption for software developers about the way technology is going?" He said they don't use Moore's law any more. Instead, they assume there will be more parallel processing (i.e. more processors!) and that these processing cores will probably be less powerful individually, but totally ubiquitous. In other words, they'll be everywhere. Even as they shrink toward the size of grains of sand, they'll all be in touch with one another, able to work together in a gigantic mesh, and, effectively, the computing power at our disposal will be unlimited.
