Recently, Sony stated officially what many of us have been thinking: that smartphone cameras will eventually reach parity with, and then exceed, the image quality of interchangeable lens cameras.

At a business briefing for Sony's Imaging and Sensing Solutions business, Terushi Shimizu, President and CEO of Sony Semiconductor Solutions, said that he expects the image quality of smartphones to exceed that of interchangeable lens cameras by 2024.

He said this will be achieved by combining Sony's new "double-layer transistor pixel" structure with better image signal processing (ISP) and artificial intelligence (AI) processing. The addition of periscope lenses will also give smartphones much greater zoom reach. Be under no illusions: computational video will play a big part in all future video acquisition, and Sony expects the sensors in high-end phones to double in size by 2024. The Sony statement also alludes to something I have been banging on about to anyone who will listen since the early 2000s: depth mapping.

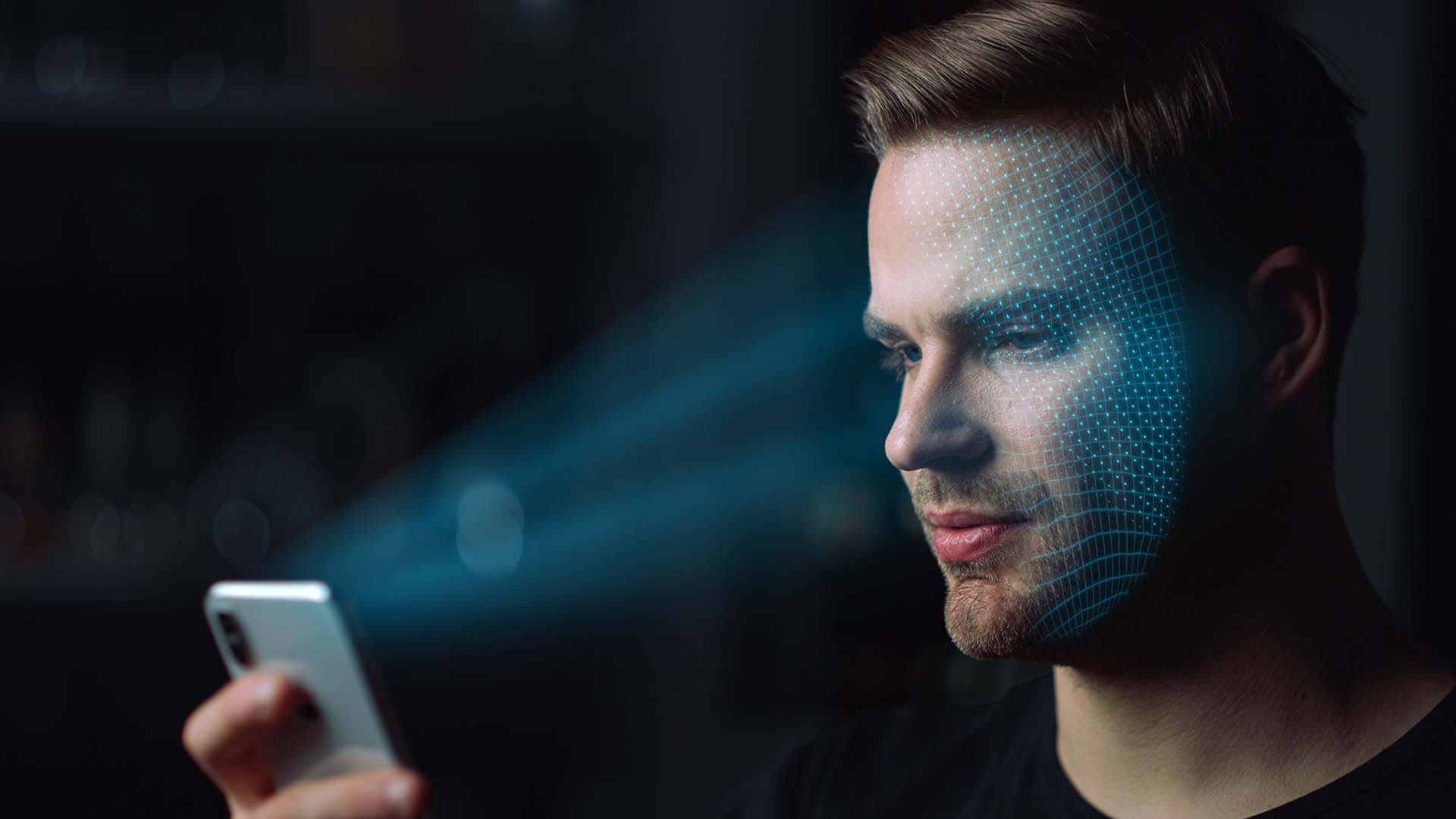

Sony mentions ToF (Time of Flight) sensors and background blurring in 8K arriving in smartphones by around 2030. ToF sensors are 'ranging' sensors, which measure the distance to objects in the scene. If you have a recent Pro-model iPhone, you've already got one in the form of its LiDAR scanner. And whilst its resolution is currently limited, this will change over the years, and it will have a profound effect on what we can do with video.
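At its simplest, the ranging principle is arithmetic: time how long emitted light takes to bounce back, and halve the path. The Python sketch below assumes a direct (pulsed) ToF measurement and ignores noise, multipath and the phase-based 'indirect' ToF approach some sensors use instead; the function names are illustrative, not any vendor's API. It also hints at why resolution is hard: resolving depth finely demands extremely precise timing.

```python
# Speed of light in a vacuum in metres per second (exact by SI definition);
# treating light in air as travelling at this speed is a simplification.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """One-way distance to a target from a direct time-of-flight measurement.

    The emitted pulse travels out to the target and back again, so the
    distance to the target is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def timing_precision_s(depth_resolution_m: float) -> float:
    """Round-trip timing precision needed to resolve a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A subject about 3 m away returns the pulse after roughly 20 ns.
    print(f"{tof_distance_m(20e-9):.3f} m")
    # Resolving 1 cm of depth needs timing on the order of tens of picoseconds.
    print(f"{timing_precision_s(0.01) * 1e12:.1f} ps")
```

The second function is the more telling one: every centimetre of depth resolution corresponds to only tens of picoseconds of round-trip time.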

The importance of depth mapping for video

High resolution depth mapping, in realtime, is a dream I have had for video ever since I used depth maps with 3D animation throughout the 90s and early 2000s. The benefits of having such a function were obvious even back then. With a high resolution depth map you can isolate objects and people extremely accurately without the need for a studio or a green screen. In terms of modern video and film production, this is an incredibly important thing to think about.
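The basic idea of isolating a subject by depth rather than by colour can be sketched in a few lines: keep every pixel whose depth falls inside a chosen slab, and replace everything else. This is a minimal Python sketch, assuming a depth map already aligned pixel-for-pixel with the image; the function names and the tiny test frame are hypothetical. It produces a hard matte, whereas real footage would need soft edges for hair and motion blur.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]          # 8-bit RGB
Image = List[List[Pixel]]
Matte = List[List[bool]]


def depth_matte(depth_m: List[List[float]], near_m: float, far_m: float) -> Matte:
    """True wherever a pixel's depth falls inside the [near_m, far_m] slab."""
    return [[near_m <= d <= far_m for d in row] for row in depth_m]


def composite(foreground: Image, background: Image, matte: Matte) -> Image:
    """Keep foreground pixels inside the matte and background pixels elsewhere."""
    return [
        [fg if keep else bg for fg, bg, keep in zip(fg_row, bg_row, m_row)]
        for fg_row, bg_row, m_row in zip(foreground, background, matte)
    ]


if __name__ == "__main__":
    # A tiny 2x3 frame: a person about 2 m away in front of a wall at 6 m.
    depth = [[6.0, 2.1, 6.0],
             [6.0, 1.9, 2.0]]
    person, wall, new_bg = (200, 150, 120), (90, 90, 90), (20, 40, 160)
    frame = [[wall, person, wall],
             [wall, person, person]]
    replacement = [[new_bg] * 3 for _ in range(2)]
    matte = depth_matte(depth, near_m=1.5, far_m=2.5)
    print(composite(frame, replacement, matte))
```

No colour is consulted anywhere, which is the point: the wall could be any colour, including the same colour as the actor's clothing.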

In the early 2000s most of my thoughts were about compositing and doing away with the green screen, but there is a whole host of other things that depth maps make possible.

For a start, it means you can have ultra-accurate autofocus on very specific objects and parts of a scene. The effect depth sensing can have on focus accuracy was recently highlighted by the inclusion of a LiDAR sensor on DJI's Ronin 4D camera. It also means that if you shoot with a deep depth of field, say with a very small sensor, you can accurately create 'virtual focus' and store the depth information along with the main image. In other words, you can control your focus in post.
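One way to think about 'virtual focus' is that, with a sharp image plus a depth value for every pixel, you can choose a virtual lens later and work out how blurred each pixel should be. The Python sketch below assumes the standard thin-lens circle-of-confusion formula; the lens and sensor figures in the example are hypothetical. It only computes the per-pixel blur size; actually rendering spatially varying blur, including occlusion at object edges, is the harder part and is not shown.

```python
from typing import List


def coc_diameter_mm(subject_mm: float, focus_mm: float,
                    focal_length_mm: float, f_number: float) -> float:
    """Thin-lens circle-of-confusion diameter on the sensor, in millimetres.

    Uses c = A * |S2 - S1| / S2 * f / (S1 - f), where A = f / N is the
    aperture diameter, S1 the focus distance and S2 the subject distance.
    """
    if focus_mm <= focal_length_mm:
        raise ValueError("focus distance must exceed the focal length")
    if subject_mm <= 0:
        raise ValueError("subject distance must be positive")
    aperture_mm = focal_length_mm / f_number
    return (aperture_mm * abs(subject_mm - focus_mm) / subject_mm
            * focal_length_mm / (focus_mm - focal_length_mm))


def blur_map_px(depth_m: List[List[float]], focus_m: float,
                focal_length_mm: float, f_number: float,
                pixel_pitch_um: float) -> List[List[float]]:
    """Per-pixel blur diameter, in pixels, for a virtual lens applied in post."""
    pitch_mm = pixel_pitch_um / 1000.0
    return [
        [coc_diameter_mm(d * 1000.0, focus_m * 1000.0,
                         focal_length_mm, f_number) / pitch_mm
         for d in row]
        for row in depth_m
    ]


if __name__ == "__main__":
    # Hypothetical virtual 50 mm f/2 lens focused on a subject at 2 m,
    # with a wall behind at 4 m, on a sensor with 5 micron pixels.
    depth = [[4.0, 2.0, 4.0]]
    print(blur_map_px(depth, focus_m=2.0, focal_length_mm=50.0,
                      f_number=2.0, pixel_pitch_um=5.0))
```

Changing `focus_m` or `f_number` and recomputing the map is, in effect, refocusing or changing aperture after the shot.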

We already have a semblance of this on current iPhones, but it's nowhere near as good as it could be with ultra-high resolution depth mapping. With context-aware AI, we will reach a point where you can ask your phone to hold focus on John until a certain mark and then slowly pull focus to Jane, without the need for a focus puller.
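Once the AI has identified and tracked both people, the focus pull itself comes down to a per-frame focus distance. This Python sketch assumes each person's distance on every frame has already been read from the depth map (the recognition and tracking are out of scope), and the function names are hypothetical. The smoothstep ease is an arbitrary choice that stands in for a focus puller's gentle start and stop.

```python
from typing import List


def smoothstep(p: float) -> float:
    """Ease-in/ease-out curve on [0, 1]; avoids an abrupt start and stop."""
    p = min(max(p, 0.0), 1.0)
    return p * p * (3.0 - 2.0 * p)


def focus_schedule(john_m: List[float], jane_m: List[float],
                   pull_start_frame: int, pull_frames: int) -> List[float]:
    """Per-frame focus distance: hold on John, then ease focus across to Jane.

    john_m and jane_m are each subject's distance in metres on every frame,
    e.g. sampled from a depth map at a tracked position (hypothetical input).
    """
    if len(john_m) != len(jane_m):
        raise ValueError("distance tracks must have the same length")
    if pull_frames <= 0:
        raise ValueError("pull_frames must be positive")
    schedule = []
    for frame, (d_john, d_jane) in enumerate(zip(john_m, jane_m)):
        # Before the mark the weight is 0 (John); after the pull it is 1 (Jane).
        weight = smoothstep((frame - pull_start_frame) / pull_frames)
        schedule.append(d_john + (d_jane - d_john) * weight)
    return schedule


if __name__ == "__main__":
    # John stays at 2 m and Jane at 5 m; the pull starts on frame 2
    # and takes 4 frames.
    print(focus_schedule([2.0] * 9, [5.0] * 9, pull_start_frame=2, pull_frames=4))
```

Because both tracks are read per frame, focus stays on each person even if they move during the hold or the pull.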

By drastically increasing what is possible on a phone, or indeed any small camera device, this will democratise video even further. But there's another aspect here, and it's one that will affect lower-end camera systems like smartphones as well as the high end.

DJI's Ronin 4D already makes use of LiDAR range finding for its autofocus system. Image: DJI.

Making LED volumes a thing of the past

Realtime high resolution depth mapping could also make LED volumes a thing of the past, or at least significantly reduce our reliance on them for virtual production. One current advantage of an LED volume is that it contributes to the ambient lighting and gives actors more 'atmosphere', since there's a realtime environment for them to react to. There are other advantages too, such as realistic reflections in car windows during driving scenes.

These are all reasons why LED volumes are being used for virtual production, but they still have limitations, such as the size of the volume, how far from it you can shoot, and the need for the studio itself. If we think in terms of the democratisation of filmmaking and contextual AI such as OpenAI's recently demonstrated DALL-E system and Google's Imagen system, we can get a solid idea of where this could, and most likely will, head.

Imagine you're in an empty warehouse, or even the local park, with just your smartphone. Using high resolution realtime depth mapping and AI, you could ask your phone to place your actor in NYC, or in front of an erupting volcano. You won't even need a 3D modeller, because the AI, whether it runs in the cloud or on the phone, will generate the environment you ask for. You could then tweak that environment to your liking: virtual production without the need for an LED volume or green screen.

iPhone Pro models also include a LiDAR sensor, which is used for low-light focus assistance as well as by 3D mapping apps. Image: Apple.

Virtual lighting

But what about the lighting? Here's where things get extremely interesting, because depth mapping also makes 'lighting in post', or even realtime augmented reality lighting, possible. A depth map captures the shape of the environment and the people within it. In other words, it's a 3D reference, so light and shadow can be calculated from it. None of this processing would necessarily have to be performed on your local device, either; it could quite possibly be done in the cloud.
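A common graphics approach to relighting from depth is to estimate a surface normal for each pixel from how depth changes across the image, then shade those normals with a virtual light. The Python sketch below assumes an orthographic view, a single directional light and simple Lambertian (diffuse) shading, and the function names are illustrative. It does not compute cast shadows or separate surface colour from existing lighting, both of which a production tool would need.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _normalise(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        raise ValueError("cannot normalise a zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def normals_from_depth(depth: List[List[float]],
                       pixel_size_m: float = 1.0) -> List[List[Vec3]]:
    """Estimate a unit surface normal per pixel from depth gradients.

    Treats the depth map as a height field seen orthographically: x to the
    right, y down the image, z towards the camera. Gradients use central
    differences, falling back to one-sided differences at the borders.
    """
    rows, cols = len(depth), len(depth[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dz_dx = ((depth[y][x1] - depth[y][x0]) / ((x1 - x0) * pixel_size_m)
                     if x1 != x0 else 0.0)
            dz_dy = ((depth[y1][x] - depth[y0][x]) / ((y1 - y0) * pixel_size_m)
                     if y1 != y0 else 0.0)
            # A surface receding to the right (depth rising with x) faces right.
            row.append(_normalise((dz_dx, dz_dy, 1.0)))
        normals.append(row)
    return normals


def lambert_shading(normals: List[List[Vec3]], light_dir: Vec3,
                    intensity: float = 1.0) -> List[List[float]]:
    """Diffuse (Lambertian) contribution of one directional virtual light.

    light_dir points from the surface towards the light, in the same frame
    as the normals. Cast shadows are not computed here.
    """
    l = _normalise(light_dir)
    return [
        [intensity * max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])
         for n in row]
        for row in normals
    ]


if __name__ == "__main__":
    # A surface receding to the right, lit by a virtual light from the right.
    ramp = [[1.0, 2.0, 3.0]]
    print(lambert_shading(normals_from_depth(ramp), light_dir=(1.0, 0.0, 0.0)))
```

The resulting shading values would then be added to, or multiplied with, the captured image to brighten the surfaces that face the virtual light.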

Now, for the industry, or for those of a more conservative mindset, the proverbial hairs on the back of the neck will be rising right now, because this hints at a filmmaking world where everything is virtual and fake, and jobs such as focus pulling are made redundant.

But I don't think this is anything to be concerned about. There will always be a need for 'real' filming in real environments. And filmmakers never truly like to leave old methods behind because it's part of their creative DNA. Vintage lenses that need focus pulling will always exist, and will be used. But what needs to be understood here is that this sort of technological development gives creative power to those without massive budgets. It gives a freedom that wasn't there before to achieve the sort of image quality and control that would normally need a large crew and expensive studios.

We shouldn't forget that virtual production is already a massive topic, with more and more productions using current VP technology. The development of the technology described above won't just benefit smartphone users; it will also benefit the high end, taking the possibilities of VP into a different sphere.

It could also benefit filming in natural locations. Say you're filming a close-up of someone on an exterior location and you want a lovely golden-hour-style backlight, but the light you get in reality isn't giving you quite what you want. With depth mapping, you could augment it with a virtual backlight instead. That is just one of many examples of how the technology could be subtly integrated with traditional filmmaking.

Once again, many people will think this technology is a long way off, at least in a form good enough for the cinema screen. However, we should also consider that the building blocks are already in place. The process of reaching that point began a number of years ago, and we are now starting to see the results of exponential progress, so these abilities will reach us far faster than we anticipate. We already have some of them in rudimentary form.

That Sony agrees that smartphone camera technology will leave interchangeable lens cameras behind is significant. That it is forecasting such leaps in image quality due in no small part to AI and ToF sensors is also extremely important. Some may scoff, but this is the most important manufacturer of imaging technology in the world making these statements. It would be a huge error to ignore them.
