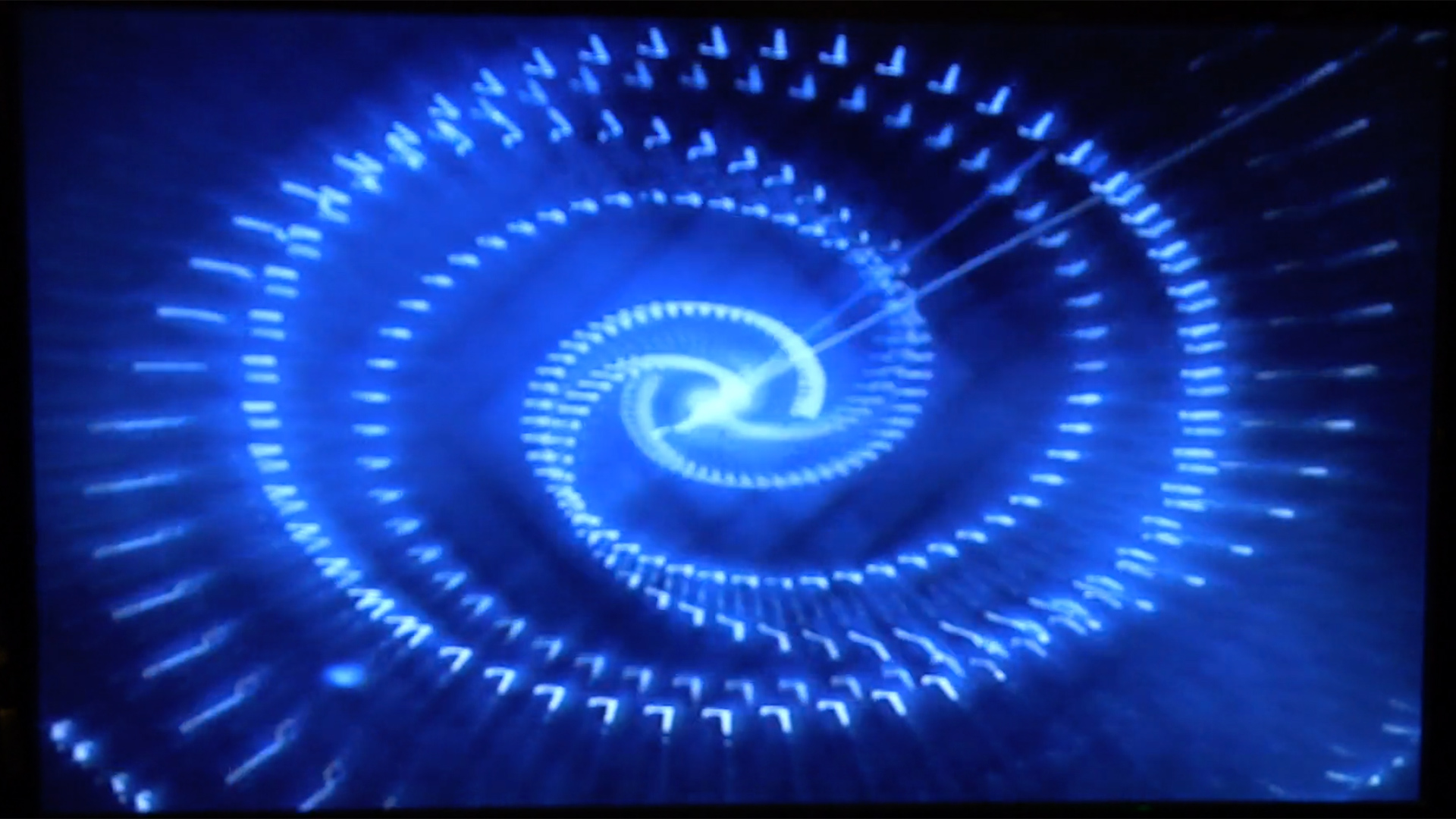

Replay: At a time when computers struggled to render a few animated lines, the Scanimate could take graphics and animate them in realtime. Phil Rhodes looks back on a revolutionary system that you might not have heard of, but you've most certainly seen in action.

It’s often been said that the limit on the cleverness of motion graphics is the performance of the computer. Often, we stop polishing simply when things become unbearably sluggish. “My agony is that computers are not small, fast or cheap enough,” said one particularly well-known figure. “My real agony is one of impatience. I expect to see one day an art of graphics as a regular daily part of television programming. I am impatient with the probability that to bring this about will require about as much invention, hard creative work and digestion of many new techniques as will be required to bring about the home, television-sized computer.”

Wait, what? Who said that? John Whitney, one of the fathers of computer graphics, in 1968. It’s arguable that Whitney actually invented the term “motion graphics,” having started his company of the same name in 1960. Eight years later, Whitney was artist in residence at IBM and working with some of the best equipment then available. The IBM 2250 was a vector display terminal with a resolution of 1024 points square, commonly connected to an IBM System/360 which could, in theory but not often in practice, boast up to 8MB of memory and a performance of 16MIPS.

Or, in today’s terms, not nearly as much as a wifi-controllable lightbulb. It took several seconds per frame to render some of Whitney’s experiments, and he bemoans the fact that actually watching moving images required the sequence to be shot out to film frame by frame, processed, and projected. All that, bear in mind, for something that looks somewhat like the Windows “Mystify” screensaver.

Enter the Scanimate

So performance is an old problem. In between Whitney’s experiments and the modern world of unimaginably more performant hardware, though, came a few concepts in motion graphics that slalom neatly around the problem. Perhaps the best known is Computer Image Corporation’s Scanimate, which, being entirely analogue and only slightly programmable, was barely even a computer, but had certain advantages. By “best-known” in this context we probably mean by results, which are immediately recognisable to anyone who watched television in the USA during the late 70s and early 80s; the system itself is somewhat obscure because few were made.

Scanimate is controlled in a way that would be vaguely familiar to users of modern motion graphics systems, inasmuch as it’s got effectively two keyframes called “initial” and “final” with filter settings variable at both those points. It’s perhaps best described as a device which transforms graphics, rather than a device that creates graphics; it takes an input video signal and applies various distortions. Often, that input video signal would be a camera viewing high-contrast graphics, such as white text on black. The most basic, initial version of Scanimate included settings to apply what looks essentially like a horizontal wave warp, a vertically-oriented sort of wrap and curl, and an approximation of perspective, plus simple translation and scaling. Later versions included more, and all in realtime.

How Scanimate worked in realtime

But Scanimate was initially developed before 1970, at a time when John Whitney was waiting several seconds a frame for a screensaver containing far fewer lines than a television picture. How did it work?

To understand that, we have to understand how a television picture is normally drawn. Most people are aware that traditional TV – which is the world in which Scanimate operates – is drawn as a vertical stack of horizontal lines. These lines are scanned out one by one by an electron gun which causes the phosphors on the face of the tube to glow. In a conventional, old-style glass tube monitor, that involves two signals. One controls the horizontal position of the electron beam, so for an NTSC picture with 525 lines it must ramp upward, to create the horizontal scan, then snap back to zero 525 times per frame, or just over 15,734 times per second. The other signal controls the vertical beam position, and it must ramp once per vertical scan, to create the downward progress of the beam. We’re glossing over a few details, but that’s roughly how it works. Normally those signals are set up so that the scanning pattern creates a normal, rectangular image.
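
As a rough illustration of those two signals, here is a minimal Python sketch. It is an idealised model, not a description of any real monitor: retrace time and interlace are ignored, the frame rate is assumed to be the NTSC colour figure of 30000/1001 frames per second, and the function names are invented for this example.

```python
# Idealised raster deflection signals. Retrace time and interlace are ignored.
LINES_PER_FRAME = 525
FRAME_RATE_HZ = 30000 / 1001  # assumed NTSC colour frame rate, about 29.97 Hz


def line_rate_hz(lines=LINES_PER_FRAME, frame_rate=FRAME_RATE_HZ):
    """Number of horizontal sweeps per second."""
    return lines * frame_rate


def deflection(t, lines=LINES_PER_FRAME, frame_rate=FRAME_RATE_HZ):
    """Return (horizontal, vertical) beam position, each 0..1, at time t seconds."""
    frame_phase = (t * frame_rate) % 1.0      # how far we are through the frame
    vertical = frame_phase                    # one slow ramp per frame
    horizontal = (frame_phase * lines) % 1.0  # one fast ramp per line
    return horizontal, vertical


print(round(line_rate_hz(), 2))  # 15734.27
```

Both outputs are plain sawtooth ramps; everything that follows is a matter of bending them.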

But what if we just don’t do that?

If we alter the vertical scan to ramp up less, it’ll compress the picture vertically. Anyone who’s played with the vertical size controls on a CRT monitor will understand this; this is how monitors used to switch between 16:9 and 4:3 mode. In the same way, if we reduce the maximum level of the horizontal scan, it’ll compress the picture horizontally. We can add to or subtract from those signals simply to move the image around on the display.
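
In code terms, that amounts to a gain and an offset on each ramp. The sketch below is only an illustration: the beam position is assumed to run from -1 to 1 about the centre of the screen, and `adjust_scan` and its parameter names are made up for this example.

```python
def adjust_scan(h, v, h_gain=1.0, v_gain=1.0, h_offset=0.0, v_offset=0.0):
    """Scale and shift a beam position (h, v), each nominally -1..1 about centre."""
    return h * h_gain + h_offset, v * v_gain + v_offset


# Showing a 16:9 picture at full width on a 4:3 tube means shrinking the
# vertical ramp to (4/3) / (16/9) of its usual size, which is about 0.75.
LETTERBOX_GAIN = (4 / 3) / (16 / 9)

bottom_right = adjust_scan(1.0, 1.0, v_gain=LETTERBOX_GAIN)
nudged_centre = adjust_scan(0.0, 0.0, h_offset=0.2)
```

Here `bottom_right` lands three quarters of the way down the usual vertical travel, and `nudged_centre` shows the whole picture sliding sideways when a constant is added to the horizontal signal.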

We can also get clever. If we alter the horizontal scan so that the amount it ramps decreases toward the bottom of the image, the image will be horizontally compressed toward the bottom of the screen, which is Scanimate’s pseudo-perspective effect. Those wobbly sine wave effects are produced by adding the output of a sine wave oscillator to the horizontal scan offset. Adjust the level and frequency of that sine wave, and the ripples change shape. The settings for most of this can be preset for the “initial” and “final” positions, so, for instance, text can be flown in and resolve from a distorted shape.
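
To make those ideas concrete, here is a small Python sketch of a pseudo-perspective and ripple warp blended between two presets. It is a software analogy under stated assumptions, not how the analogue circuits were actually built: positions run from -1 to 1 about the screen centre, the oscillator frequency is expressed as cycles per picture height, the blend between presets is assumed to be linear, and every name here (`warp`, `settings_at`, `INITIAL`, `FINAL`) is hypothetical.

```python
import math


def lerp(a, b, u):
    """Linear blend from a (u = 0) to b (u = 1)."""
    return a + (b - a) * u


def settings_at(initial, final, u):
    """Blend every setting between the 'initial' and 'final' presets."""
    return {key: lerp(initial[key], final[key], u) for key in initial}


def warp(h, v, settings):
    """Distort a beam position; h and v run -1..1, with v = -1 at the top."""
    depth = (v + 1) / 2  # 0 at the top of the picture, 1 at the bottom
    # Pseudo-perspective: the horizontal ramp shrinks toward the bottom.
    h_gain = 1.0 - settings["perspective"] * depth
    # Ripple: a sine oscillator added to the horizontal offset.
    ripple = settings["wave_level"] * math.sin(
        2 * math.pi * settings["wave_cycles"] * depth
    )
    return h * h_gain + ripple, v


# Hypothetical presets: start squeezed and wobbly, finish undistorted.
INITIAL = {"perspective": 0.5, "wave_level": 0.2, "wave_cycles": 3.0}
FINAL = {"perspective": 0.0, "wave_level": 0.0, "wave_cycles": 3.0}

halfway = warp(1.0, 0.0, settings_at(INITIAL, FINAL, 0.5))
```

Sweeping `u` from 0 to 1 over the length of the move is the software equivalent of text flying in and resolving from a distorted shape.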

The result is scanned out onto a very flat precision CRT, and rephotographed using a second camera. Crucially, the image is drawn simply by steering the electron beam around the display according to the modified scan geometry created by the Scanimate’s electronics. There is no rendering step here, and everything happens in full resolution, at full frame rate, with effectively zero latency.

Other, more advanced effects came later, with the ability to rotate the image, split it up into horizontal chunks to be treated independently, and more. With options to add colour, use optical filters to add glow, star filtering, and other effects, plus whatever a vision mixer can add to proceedings, advanced composites become possible. Some of the most advanced tricks might require a few stages of re-recording and thus aren’t strictly a realtime effect, but in the 1970s it was spectacular. After Effects users, staring at a crawling progress bar, might feel some reason for nostalgia.

If we've whetted your appetite for nostalgic motion graphics, take a look over on the Scanimate site for more insight into this amazing machine.
