This camera will never exist in pocket form


Why the quest for the perfect camera is a journey without end.

Most people are familiar with reciprocity as it applies to photography. Open up a stop, halve the exposure time, and the effective exposure is the same: basic stuff. What's perhaps less often talked about is that the same sort of thinking applies to the whole design process of digital cameras. This came up recently when we discussed sensitivity and dynamic range, where it quickly became clear that many of the most important aspects of camera engineering are tradeoffs, one against another, and that we can't have everything at once for any amount of money.
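The reciprocity arithmetic can be made concrete with the standard exposure-value formula, EV = log2(N²/t), where N is the f-number and t is the exposure time in seconds. The short Python sketch below is only an illustration of that formula (the function name and example settings are our own) and checks that opening up one stop while halving the exposure time leaves the EV unchanged.

```python
import math

def exposure_value(f_number: float, exposure_time_s: float) -> float:
    """Exposure value, EV = log2(N^2 / t). Equal EV means equal exposure
    for the same scene brightness and ISO."""
    return math.log2(f_number ** 2 / exposure_time_s)

base = exposure_value(4.0, 1 / 100)                   # f/4 at 1/100 s
opened = exposure_value(4.0 / math.sqrt(2), 1 / 200)  # one stop wider (~f/2.8), half the time
print(f"{base:.3f} EV vs {opened:.3f} EV")            # both about 10.644 EV
```

Marked f-stops such as f/2.8 are rounded values of powers of √2, which is why the sketch uses 4/√2 rather than 2.8.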

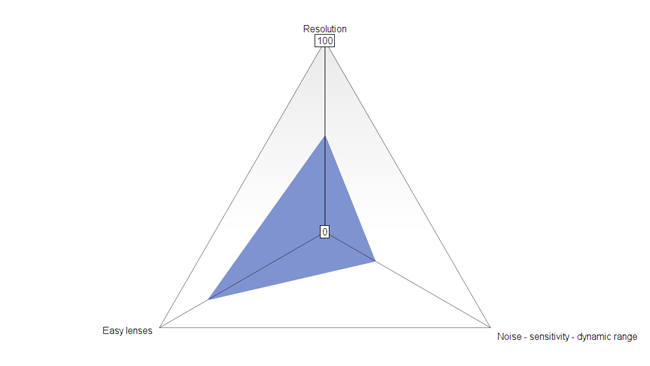

We could express this as a sort of triangular radar plot. Let's look at a simple example:

A small sensor has mediocre noise performance but needs only an easily manufactured lens

This is a relatively small sensor, such as the one in a cell phone. It's easy to make a lens for this sensor because it's so small, but in order to achieve reasonable resolution the photosites have to be fairly small, so the noise, sensitivity and dynamic range (which are to some extent aspects of the same thing) are not particularly great. Let's look at another example:


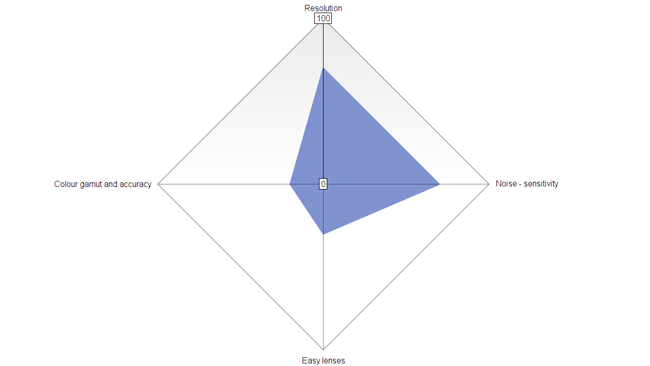

A larger sensor has excellent resolution and pixel quality but requires upscale lenses

This might be a full-frame, high-end digital cinema camera with excellent resolution and dynamic range, but it's hard to make lenses for it. In order to achieve high resolution and high sensitivity with low noise, it needs a large number of large photosites, so the sensor ends up being large and requiring a large, high-precision, expensive lens to achieve a reasonable f-number.
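To put rough numbers on the two examples, the Python sketch below compares photosite size on a small and a large sensor with the same number of photosites across. It assumes the same f-number and scene, so the illuminance on both sensors is equal and the light collected per photosite scales with photosite area. The sensor widths and photosite count are hypothetical round figures, and the function names are ours.

```python
import math

def photosite_pitch_um(sensor_width_mm: float, horizontal_photosites: int) -> float:
    """Approximate photosite pitch in micrometres (ignores gaps between photosites)."""
    return sensor_width_mm * 1000.0 / horizontal_photosites

def light_per_photosite_stops(pitch_a_um: float, pitch_b_um: float) -> float:
    """How many stops more light photosite A collects than photosite B.

    Assumes the same f-number and scene, so illuminance on the sensor is equal
    and the light collected per photosite scales with photosite area.
    """
    return math.log2((pitch_a_um / pitch_b_um) ** 2)

# Hypothetical sensors, both 4000 photosites wide.
phone = photosite_pitch_um(6.4, 4000)        # assumed ~6.4 mm wide phone sensor
full_frame = photosite_pitch_um(36.0, 4000)  # 36 mm wide full-frame sensor
advantage = light_per_photosite_stops(full_frame, phone)
print(f"pitch: phone {phone:.1f} um, full frame {full_frame:.1f} um")
print(f"full frame collects about {advantage:.1f} stops more light per photosite")
```

Under these assumptions the full-frame photosites are 9 µm across against 1.6 µm, roughly five stops more light each, which is the sort of gap that shows up as better noise and dynamic range.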

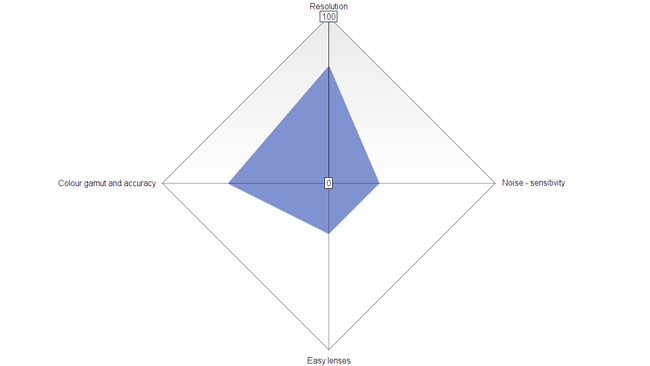

The important thing to realise is that the area of the blue triangle is basically the same in each case. That area represents, more or less, the technological ease of achieving a certain image quality, and it is limited by what physics gives us. We could add more points to the shape. One prominent example is colour quality, which is in direct competition with noise, sensitivity and dynamic range: better detection of colour requires denser colour filters, which reduce the amount of light reaching the sensor.
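The cost of denser colour filters can be expressed in stops. The Python sketch below is a simplification that treats each filter array as a single average transmission figure (real filters have wavelength-dependent curves); the transmission values are hypothetical and chosen only to show the arithmetic.

```python
import math

def stops_lost_to_filter(transmission: float) -> float:
    """Stops of light lost to a filter that passes `transmission` of the incident light."""
    if not 0.0 < transmission <= 1.0:
        raise ValueError("transmission must be in the range (0, 1]")
    return -math.log2(transmission)

# Hypothetical average transmissions for a pale and a dense colour filter array.
for label, transmission in [("pale filters", 0.5), ("dense filters", 0.25)]:
    print(f"{label}: {stops_lost_to_filter(transmission):.1f} stops lost")
```

Every halving of average transmission costs one stop of sensitivity, which is why colour quality sits directly opposite noise and dynamic range on the plot.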

This camera has excellent noise and dynamic range performance but achieves that with pale colour filters, sacrificing colour quality

Make the colour filters denser, and colour quality improves, but sensitivity is compromised

Beyond that, it's a world of compromise.

If we want to use easier (cheaper, smaller, lighter) lenses but don't want to sacrifice resolution, we can sacrifice dynamic range by making a smaller sensor with the same number of photosites. A smaller lens can achieve the same field of view and f-number on the smaller sensor, but the highlights don't look so nice.

If we want more resolution but don't want to sacrifice noise and dynamic range, we can give up the small, lightweight lens by making the sensor bigger. The larger sensor achieves higher resolution with the same size photosites, but we must tolerate a larger, heavier lens to achieve the same field of view and f-number.
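Why the lens grows with the sensor follows from two relationships: field of view depends on focal length relative to sensor width, and the entrance pupil diameter is the focal length divided by the f-number. The Python sketch below applies both; the sensor widths, focal length and f-number are hypothetical round figures, and the helper name is ours.

```python
def matching_lens(ref_focal_mm: float, ref_sensor_width_mm: float,
                  new_sensor_width_mm: float, f_number: float) -> tuple[float, float]:
    """Focal length and entrance-pupil diameter (mm) that keep the same horizontal
    field of view and f-number when moving to a different sensor width.

    Field of view depends on the ratio of sensor width to focal length, so the
    focal length scales with sensor width; pupil diameter is focal length / f-number.
    """
    scale = new_sensor_width_mm / ref_sensor_width_mm
    focal_mm = ref_focal_mm * scale
    return focal_mm, focal_mm / f_number

# Hypothetical: a 40 mm lens at f/2 on a 24 mm wide sensor, moved to a 36 mm wide sensor.
for width in (24.0, 36.0):
    focal, pupil = matching_lens(40.0, 24.0, width, 2.0)
    print(f"{width:.0f} mm sensor: {focal:.0f} mm lens, {pupil:.0f} mm entrance pupil at f/2")
```

Going from a 24 mm to a 36 mm wide sensor turns a 20 mm entrance pupil into a 30 mm one for the same picture; assuming the glass scales roughly with the pupil, that is the larger, heavier lens the trade demands.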

Canon's well-known 17-120mm zoom is hugely capable, but not an option outside the high end (and only covers APS-C, for that matter)

If we want more dynamic range, we can sacrifice resolution by making the photosites larger, maintaining the same lens, but the image isn't as sharp.
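On a fixed sensor, photosite size and photosite count pull directly against each other. The Python sketch below uses a hypothetical 36 x 24 mm chip and two illustrative pitches; it treats the extra light per photosite as a proxy for dynamic range, which is a simplification (real dynamic range also depends on read noise and full-well design).

```python
import math

def photosite_grid(sensor_w_mm: float, sensor_h_mm: float, pitch_um: float) -> tuple[int, int]:
    """Number of whole photosites (columns, rows) that fit on a sensor at a given pitch."""
    return (math.floor(sensor_w_mm * 1000 / pitch_um),
            math.floor(sensor_h_mm * 1000 / pitch_um))

# Hypothetical 36 x 24 mm sensor: same chip, same lens, two photosite sizes.
for pitch in (6.0, 12.0):
    cols, rows = photosite_grid(36.0, 24.0, pitch)
    print(f"{pitch:.0f} um pitch: {cols} x {rows} = {cols * rows / 1e6:.0f} MP")

# Doubling the pitch quadruples photosite area: about 2 stops more light per photosite.
print(f"{math.log2((12.0 / 6.0) ** 2):.0f} stops")
```

Doubling the pitch buys roughly two stops per photosite but drops the count from 24 MP to 6 MP, halving linear resolution.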

It all gets a bit circular after a while. Want everything at once? The area of the blue triangle has grown over time as sensor engineering in general has improved. At any given moment it can be increased slightly by spending more money on high-grade engineering, but honestly not by much. Really good engineering can improve the analogue side of an imaging sensor to the point where noise is suppressed and sensitivity and dynamic range are increased, but only by perhaps a stop or two. Beyond that, everything is traded against everything else.

Alexa LF. Sensor - large. Quality - high. Lenses - tricky

The simplest way to improve things is to put more light on the scene. The modern obsession with shooting everything by candlelight at very wide apertures (tiny f-numbers) is unhealthy, especially with larger sensors. Insisting that lenses must reach f/2.8 or faster in order to be considered usable is increasingly impractical from a focus point of view, because depth of field becomes extremely shallow. Some scenes are easier to brighten up than others, but it's at least sometimes easier to add light than it is to rent a vastly bigger, heavier, more expensive lens in order to land a brighter image on a bigger chip.
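The focus problem on bigger chips can be estimated with the usual equivalence rule of thumb: for the same field of view and roughly the same depth of field, the f-number scales with sensor size, and each stop of stopping down must be paid back with twice the light. The Python sketch below assumes the lens is swapped to keep the field of view, and that ISO and shutter stay fixed; the sensor widths and f/2.8 starting point are hypothetical.

```python
import math

def equivalent_f_number(f_number: float, from_width_mm: float, to_width_mm: float) -> float:
    """f-number giving roughly the same depth of field on a different sensor width,
    assuming the focal length is also scaled to keep the same field of view."""
    return f_number * to_width_mm / from_width_mm

def extra_light_stops(old_f_number: float, new_f_number: float) -> float:
    """Stops of extra light needed to hold exposure after stopping down
    (exposure is proportional to 1 / N^2)."""
    return 2 * math.log2(new_f_number / old_f_number)

# Hypothetical: a shot planned at f/2.8 on a 24 mm wide sensor, moved to a 36 mm wide sensor.
n_large = equivalent_f_number(2.8, 24.0, 36.0)
print(f"about f/{n_large:.1f}, needing {extra_light_stops(2.8, n_large):.2f} more stops of light")
```

In this example, keeping an f/2.8 depth of field on the larger sensor means working around f/4.2, which takes a little over one extra stop of light on the scene.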

Things are likely to continue improving at least a little, as modern techniques with stacked semiconductors make sensors better and better. Regardless, the compromises involved in the size, resolution, sensitivity and dynamic range of a sensor create a sort of circular chain of consequences, which means we can't quite have everything at once, for any amount of money, and that some things are mutually exclusive. In the end, while engineering advances, physics is immutable. Our understanding of physics changes, but the cutting edge of experimental science tends to take some time to find civilian applications. The discovery of the Higgs boson doesn't have any immediately obvious applications to things like lenses or imaging sensors.

EOS-5D Mk. IV. Big chips and high-quality shooting are more accessible than ever, though cinema lenses to suit them are still rare and expensive, particularly zooms

As such, once manufacturing techniques have advanced to the point where we can use everything physics gives us, all we can do is keep making sensors bigger. If there's an upside, it's that the tenets of filmmaking are still rooted in art as it was pursued in the mid-to-late twentieth century, so it's quite possible that conventional, mainstream film and TV work doesn't actually need endlessly better cameras anyway.
