Alpenbrevet Red Bull

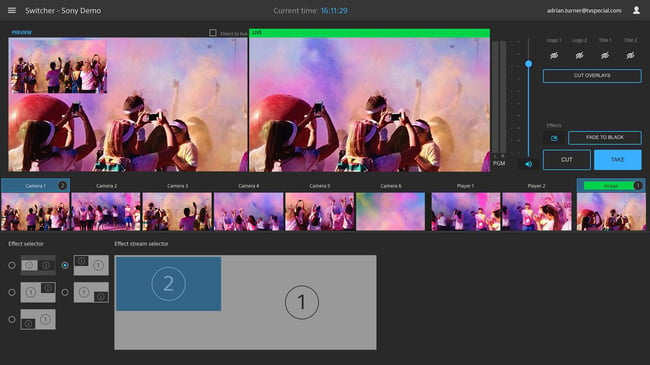

Sony’s Virtual Production system, announced today, brings the ability to control a multi-camera live stream with nothing more than a web browser (plus, of course, cameras). There’s no physical switcher. This is an important moment in the history of live productions, because some day all TV shows will be made like this.

Sony has made a live production system that throws away the rule book. Until now, complex, powerful and often expensive hardware has been at the core of live multi-camera productions. Video switchers are vastly more complicated than their simple name would imply, although it is true that, in essence, they are used for selecting between camera feeds and stitching them together into the output stream.

Sony Virtual Production System - main page with audio controls

What Sony has done is place this hardware-intensive process “in the cloud” and replace it at the user end with a browser-based user interface. Yes, it’s a cut down experience, but despite that, it’s already immensely usable and can only grow in capability. And already, it makes things possible that weren’t before. How has all this come about?

We all know that things are getting faster, bigger, more powerful. Technology is getting better. It’s an inescapable conclusion, whichever direction you look.

But occasionally, something happens that takes us all by surprise. Think about the first iPhone, released eleven years ago. There was nothing about the original iPhone that represented fundamentally new technology (except, perhaps, for multi-touch capacitive screens). What was new - and changed everything forever - was the combination of many technologies in a device you could put in your pocket.

How to be first

But how was Apple able to be first? Apart from the obvious climate of innovation and obsession with detail at Apple (when Steve Jobs was alive!), it was the way that the company looked at trends. It started to design the iPhone at a time when it knew it couldn’t be made, but at every stage, it took the design as far as it could, with current technology. And then, it reached a point where it would all work. Shortly after that, the design was released to an unsuspecting world, and we’ve never looked back.

To consumers and tech-watchers, this was a shock. It seemed to happen suddenly. This is how progress appears to anyone not in the development cycle.

And so it is with Sony’s new virtual production system. All of a sudden, out of the blue, it’s possible to run a live, multi-camera video production, with no more than a web browser (and, of course, cameras).

And the reason it seems like a sudden change is that everything is now in place to make it possible: bandwidth, processing power within AWS, and browser technology.

We’re very glad that Sony is calling this a “virtual” production system instead of describing it as a “cloud” service. It is both of those, but the important thing to grasp is that this new technology has virtualised all of the seriously complicated and expensive equipment that you would normally need to run a multi-camera live production, and it does this by placing all of this “in the cloud”.

Sony’s Virtual Production System is currently hosted on Amazon’s AWS and there are few details other than “magic” and “secret sauce” to help us understand what’s going on in there. But in a sense it doesn’t matter - because the results show that it works, and works well. It’s basically a different way of thinking about a very common type of broadcast, but when you start to look at it in detail, you have to admire Sony’s approach.

Let’s step back for a minute and look at why this is happening in the first place. Why did Sony take this route?

Viewing habits are changing

When you look at the way viewing habits are changing, there are some very significant trends. The biggest of all, perhaps, is that fewer people are using “traditional” TV as their primary source. Viewers are gravitating towards streaming in very big numbers. These big numbers include on-demand, streaming live from smartphones, and more organised, higher quality live productions.

While it remains the case that phenomena like the World Cup and the Olympics attract the biggest audience, almost everything else is changing. The “long tail” is the huge variety of streamed events that can range from a music concert to weddings and corporate events. Each production might have a significantly smaller audience than mainstream TV events, but add all the viewers to these events together and the number is massive.

Video viewing is not diminishing, it’s just shifting to a new platform. And it’s into this space that Sony has moved with its virtual production system. Sony sees valuable growth here and while the system, as we’ll see in a minute, is very capable, it’s also clear that it’s only the first rung on a very long ladder.

The topology of the Virtual Production system is very simple. There are some cameras, somewhere; it doesn’t matter where. And there are one or more instances of Sony’s Virtual Production application, run inside a web browser. Specifically, apart from at the camera end, there are no video switchers and no cables. In the Virtual Production interface, you can see previews of all the camera feeds and you can switch between them. These previews require much less bandwidth than individual high quality feeds from the cameras would take if they were sent directly to a computer for processing.

Crucially, video from the remote cameras doesn’t get processed on the local devices. That’s all done in the cloud. Sony didn’t release any information about their back-end system, which lives on Amazon’s AWS. This isolates the task of video processing from the client computers and really does make the point that this is a system with a totally different topology to conventional live production.

At the camera end, you either have to use certain models of Sony camera which are able to generate a stream for themselves, or a non-Sony camera via an encoder (Sony makes them) with an SDI input and an RTMP stream output. Certain Sony cameras can generate a stream using Sony’s own protocol that can adapt to varying streaming conditions, giving a level of quality of service assurance. But the system will work with “plain” RTMP too.

Does it work?

Yes, it absolutely does. The software’s easy to learn. At this stage it’s fairly basic, but it still has enough of the core functionality to stage a multi-camera live stream. This is a new topology for live production. It means several things are now possible.

First, using only the internet, the production itself (i.e. the camera end) can be anywhere on the planet with an internet connection (you need 6 Mbit/s per camera and there’s a limit currently of six cameras).
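As a rough illustration of what those figures mean for the uplink at the camera end, here is a minimal sketch. It assumes the 6 Mbit/s requirement applies to every camera independently and that all streams share one connection; the function name is purely illustrative and is not part of Sony's service.

```python
# Figures quoted in the article.
MBIT_PER_CAMERA = 6   # required uplink per camera, in Mbit/s
MAX_CAMERAS = 6       # current limit on simultaneous cameras


def uplink_mbit(cameras: int, per_camera: float = MBIT_PER_CAMERA) -> float:
    """Aggregate uplink needed if all cameras share one connection (assumption)."""
    if not 1 <= cameras <= MAX_CAMERAS:
        raise ValueError(f"camera count must be between 1 and {MAX_CAMERAS}")
    return cameras * per_camera


if __name__ == "__main__":
    for n in range(1, MAX_CAMERAS + 1):
        print(f"{n} camera(s): {uplink_mbit(n):g} Mbit/s")
```

On these assumptions, a full six-camera shoot needs about 36 Mbit/s of sustained upload capacity, before any headroom for protocol overhead.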

Second, again using only the internet, you can switch and control the output from the stream from essentially any device that can run a browser.

Third, there is no need for any physical hardware apart from cameras and encoders if not built into the cameras.

Sony Virtual Production System - Picture in Picture

All of this means that live event producers don’t need to invest in hardware video switchers. The ability to stream a live event becomes a service that you can pay for. Sony - at this early stage - has several monthly “plans” that will allow you to choose how many hours per month you’re likely to want to use the service.

The service is initially aimed at social media producers and in that respect it’s a very focused and useful solution, not least because the output from the production can be sent directly to multiple social media platforms. This is all built into Sony’s software and is just a matter of telling the system where you want the streams to go.

Isolating the delivery process from a local producer's computer is an extremely good move: it means that the delivery and quality of the content is not determined by bandwidth or reliability at the location of the producer. In an extreme case, if a producer lost all connectivity, the stream would continue, using the most recent settings. Degradation of local bandwidth would have no effect on the quality of the streamed material.

Sony took me and some other journalists to Switzerland to show that Virtual Production works in real conditions. And - if you’re thinking “who might this service be good enough for”, the answer is Red Bull - the energy drink manufacturer that has made an art form out of action video.

Red Bull Alpenbrevet

Every year since 2010, Red Bull has organised an Alpine race called “Alpenbrevet”. If you try to imagine Mad Max Fury Road but with unsuitably low-powered motorcycles and wedding dresses, you can’t go far wrong. With a maximum speed of under 20 MPH, it’s not the fastest spectacle, but it may be the most eccentric.

Red Bull has made an extraordinary commitment to Sony’s Virtual Production service. According to the drinks company’s Head of Production for Switzerland, Hubert Zaech, the relationship started three years ago. I asked Zaech what made the company choose Sony’s solution. “It’s so easy. You just need a few cameras and that’s it. Incredibly flexible,” he told me.

Live video shoot of the Red Bull Alpenbrevet race showing 4G dongle - Sony/Red Bull

Which may be something of an oversimplification but in essence it’s true. Add connectivity in the form of 4G cellular data - and an encoder if your cameras don't already include the ability to output a stream. There’s no need for any additional infrastructure. And of course, because it’s in the cloud, geographical locations are no longer even a factor. (Not even, it would seem, for the Alpenbrevet race. Red Bull carried out a signal strength survey before the race and even in the deepest valleys there was a signal.)

What are the limitations?

As ever, this all depends on reliable and reasonably fast Internet connectivity. At the camera end, this can be cellular. A 4G connection is easily fast enough for multiple streams. Another factor is latency. There’s typically a switching latency of around two seconds. That’s a lot if you’re used to frame-accuracy. But you get used to it.
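To put the two-second figure in frame terms, here is a small sketch. The frame rates are assumptions chosen for illustration (the article does not say what rates the service runs at), and the helper name is hypothetical.

```python
def latency_in_frames(latency_s: float, fps: float) -> int:
    """Convert a switching latency in seconds to a whole number of frames."""
    return round(latency_s * fps)


if __name__ == "__main__":
    SWITCH_LATENCY_S = 2.0  # "around two seconds", per the article
    for fps in (25, 30, 50, 60):  # assumed common frame rates
        frames = latency_in_frames(SWITCH_LATENCY_S, fps)
        print(f"{fps} fps: about {frames} frames behind the cut")
```

At an assumed 25 frames per second, a cut lands roughly 50 frames after the operator asks for it, which is why anyone used to frame-accurate switching has to learn to anticipate.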

The good news is that broadband is getting inexorably faster. 5G will be up to a hundred times faster than 4G, and with orders of magnitude lower latency.

So to sum up, the functionality today is basic. But it’s enough for many types of production. The essential advantages of virtualised production are already there:

No need for infrastructural hardware

Complete geographical flexibility - work from anywhere

The reliability of a hosted service

Direct delivery to multiple streaming services

There’s still work to do on titles, uploading media for inclusion in the transmission, and overall functionality. Sony says that this is an ideal system for beginners, but in my view some potential users will have more advanced expectations. I don’t think this matters right now, because it’s important to get this out there. It will improve with time and if you commit to this service, you’ll see it getting better gradually and continuously.

How do you pay for it?

There are several “plans”. For a monthly fee you can have a limited number of hours for live streaming. 300 Euros entitles you to four hours per month; 700 Euros gives you ten hours per month. There's an "enterprise" plan for 100 hours or more per month. Each package will give you the ability to produce video streams with:

Up to six live camera streams

Two playout servers

Two logo keyers

Two caption keyers

Stream recording
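For comparing the two published plans, a quick per-hour calculation helps. This is a sketch using only the prices quoted above; the plan labels are made up for readability, the enterprise plan is left out because no price is given, and it assumes you use every hour you pay for.

```python
# (monthly fee in euros, included streaming hours), as quoted in the article.
# The dictionary keys are illustrative labels, not Sony's plan names.
PLANS = {
    "4-hour plan": (300, 4),
    "10-hour plan": (700, 10),
}


def hourly_rate(fee_eur: float, hours: float) -> float:
    """Effective cost per streaming hour if all included hours are used."""
    return fee_eur / hours


if __name__ == "__main__":
    for name, (fee, hours) in PLANS.items():
        print(f"{name}: {hourly_rate(fee, hours):.2f} EUR per hour")
```

On those numbers the smaller plan works out at 75 Euros per hour and the larger at 70 Euros per hour, so the saving from the bigger plan is modest unless you actually need the extra hours.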

Red Bull Alpenbrevet race/Red Bull

A Sony representative told us that the company is exploring ways to implement a more granular service where users might be able to purchase production time purely on demand, rather than as a monthly plan, but this is some way off. Sony also mentioned the possibility that rental companies might be able to provide camera/encoder bundles that are ready to be used with the service, and this seems to me to be an excellent and almost essential part of it.

How important is this?

I genuinely think this is an inflection point in the history of TV production. It's real-time production in the cloud. And for all the tongue-in-cheek deflections from Sony about this being "magic", don't be misled into thinking that this is anything other than a massive technical achievement. It's one thing to set up a live production system using deterministic SDI connections; but quite another to achieve the same but with the frequently cantankerous technology of streaming. But Sony has form with this. For example, there are links to Sony's Ci video content storage and review system, and many Sony cameras incorporate its QoS streaming system that evens out issues with bandwidth and produces a better aggregate quality stream than other, more basic protocols.

Watch this space very closely. At some point in the surprisingly near future, this will morph from being an entry level virtual live production system for social media to the default way that we all produce live television.
