We may be thinking about 8K in the wrong way


RedShark's Editor in Chief makes the case for a different line of thinking when it comes to the controversial foibles of 8K.

Let's face it: 8K is turning out to be controversial. Some people think it's the way to get the best possible images; others think it's overkill, and even a cynical ploy to make current cameras obsolete.

I side with the first group. I have seen both theoretical and empirical evidence that resolutions even beyond 8K are beneficial. Most importantly, they can make lower-resolution derivative images look better than if those images had been shot at the lower resolution in the first place.

But here's the disconnect. I'm not saying that we should immediately move towards viewing video in 8K. It's just not practical. It would be expensive and we'd need screens the size of walls. That may happen eventually and it would certainly be nice to have the option, but it's not for now.

And I completely accept that many people think 4K is "enough", and sometimes even HD. In fact, I recently saw a product whose 1080p images were so clean that I would prefer them to some of the indifferent 4K I've seen lately.

It's very easy to get dogmatic about this, and unfortunately that clouds the issue. As we move towards higher resolutions, it's essential that we remain practical and evidence-based.

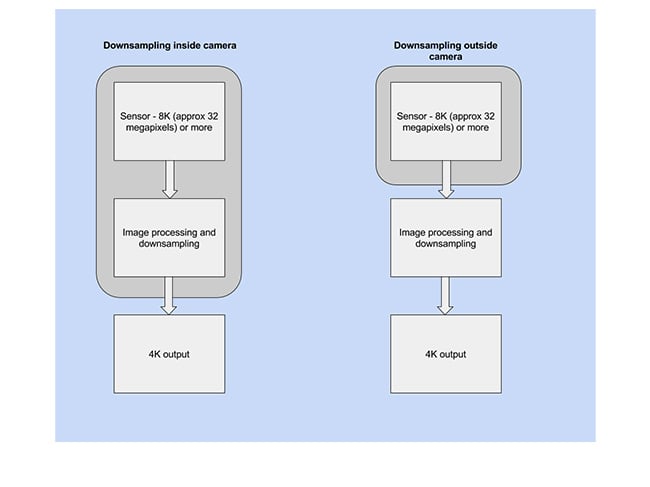

So here's my thought experiment. Forget about displaying 8K video at its native resolution. Assume that - even in the cinema - the only way we're going to see 8K video for some time is downsampled.

It's widely accepted that video downsampled from a higher-resolution source looks better than the same video captured at the lower resolution. It helps with all sorts of issues, such as noise and aliasing. I don't think there's any need to debate this.
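To make one of those issues concrete, here is a minimal Python/NumPy sketch of the noise side of the argument. It assumes a flat grey test patch with independent Gaussian noise on every pixel and uses a plain 2×2 box average as the downsampling filter. The function name `box_downsample_2x` is hypothetical, and real cameras work from Bayer-pattern data with more sophisticated filters, so treat this as a toy model rather than how any particular camera processes its images.

```python
import numpy as np


def box_downsample_2x(frame: np.ndarray) -> np.ndarray:
    """Halve width and height by averaging each 2x2 block of pixels.

    Hypothetical helper: a simple box filter, not any camera's actual scaler.
    """
    h, w = frame.shape
    # Crop to even dimensions so the frame splits cleanly into 2x2 blocks
    frame = frame[: h - h % 2, : w - w % 2]
    # Group pixels into 2x2 blocks, then average within each block
    return frame.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


rng = np.random.default_rng(seed=0)
# Assumption: a small patch stands in for a full 8K frame to keep memory use low,
# with mid-grey signal (128) plus Gaussian noise (standard deviation 10)
hi_res = 128.0 + rng.normal(0.0, 10.0, size=(432, 768))
lo_res = box_downsample_2x(hi_res)

# The second figure comes out at about half the first
print(f"noise before: {hi_res.std():.2f}, after: {lo_res.std():.2f}")
```

Averaging four independent samples cuts the standard deviation of the noise by a factor of √4 = 2, which is why the downsampled patch looks cleaner even though no detail has been invented.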

In which case, think about this.

Which would you prefer?

A 4K camera that outputs 4K from a native 4K sensor?

Or

A camera with an 8K sensor that downsamples to 4K from the entire sensor (i.e. using all of its pixels)?

To the user, since both cameras can only output 4K, both are rightly called 4K cameras. But there's an essential difference: one starts with more information.
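Assuming the UHD frame sizes commonly used for 8K and 4K (7680×4320 and 3840×2160), the difference in starting information is easy to quantify:

$$
\frac{7680 \times 4320}{3840 \times 2160} = \frac{33{,}177{,}600}{8{,}294{,}400} = 4
$$

In other words, each 4K output pixel can draw on four sensor pixels. The DCI sizes (8192×4320 and 4096×2160) give the same ratio.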

The 8K camera is able to use virtually all of the information captured at that resolution to benefit the images it creates at lower resolution.
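The phrase "including all the pixels" matters because a camera can also reach a lower resolution simply by skipping pixels. This minimal Python/NumPy sketch contrasts the two approaches on the finest pattern a high-resolution grid can record: columns alternating between black and white. Both function names are hypothetical, and a plain 2×2 average stands in for whatever filter a real camera would use.

```python
import numpy as np


def skip_downsample_2x(frame: np.ndarray) -> np.ndarray:
    """Keep every second pixel in each direction and discard the rest."""
    return frame[::2, ::2]


def average_downsample_2x(frame: np.ndarray) -> np.ndarray:
    """Average every 2x2 block, so every sensor pixel contributes."""
    h, w = frame.shape
    frame = frame[: h - h % 2, : w - w % 2]
    return frame.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


# Finest detail the high-res grid can hold: columns alternating 0 (black) and 255 (white)
stripes = np.tile(np.array([0.0, 255.0]), (8, 8))  # 8 rows x 16 columns

skipped = skip_downsample_2x(stripes)
averaged = average_downsample_2x(stripes)

print(np.unique(skipped))   # [0.]     -> the white columns vanish: solid black
print(np.unique(averaged))  # [127.5]  -> unresolvable detail becomes even mid grey
```

Skipping throws away three quarters of the pixels, and here the white columns disappear entirely, leaving a false solid black (aliasing). Averaging uses every pixel and renders detail too fine for the lower resolution as the mid grey it actually averages out to.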

The native 4K camera will admittedly have bigger pixels, but I believe that advantage will be outweighed by the larger number of pixels on the 8K camera. There are already cameras that downsample from much higher resolutions to give a 4K output.

To sum up, here's what I mean in a diagram.

That's all I'm going to say at this stage. I'm just throwing it out there because I haven't heard anyone else talking in these terms.

What do you think?

Header image courtesy of Shutterstock.

