Can you tell the difference between real and artificial? AI-generated cloud formations

Clouds are among the hardest things to create convincingly in a computer. Many techniques have been tried over the years, but Disney is now exploring a new one that really does give photoreal results.

Strictly speaking, there is no such thing as a white or a black cloud. Clouds are made of tiny drops of water or ice crystals, which are nearly colourless, at least in the quantities present in a cloud; what we see is the light they scatter. Visual effects artists have been able to simulate clouds for some time, but it's difficult to get a really good-looking result that reproduces how real clouds behave. Render times can run to tens of hours for a single HD frame made up mostly of atmospheric clouds. However, in the last few days, a team from Disney Research and ETH Zürich has published a paper demonstrating a much faster way.

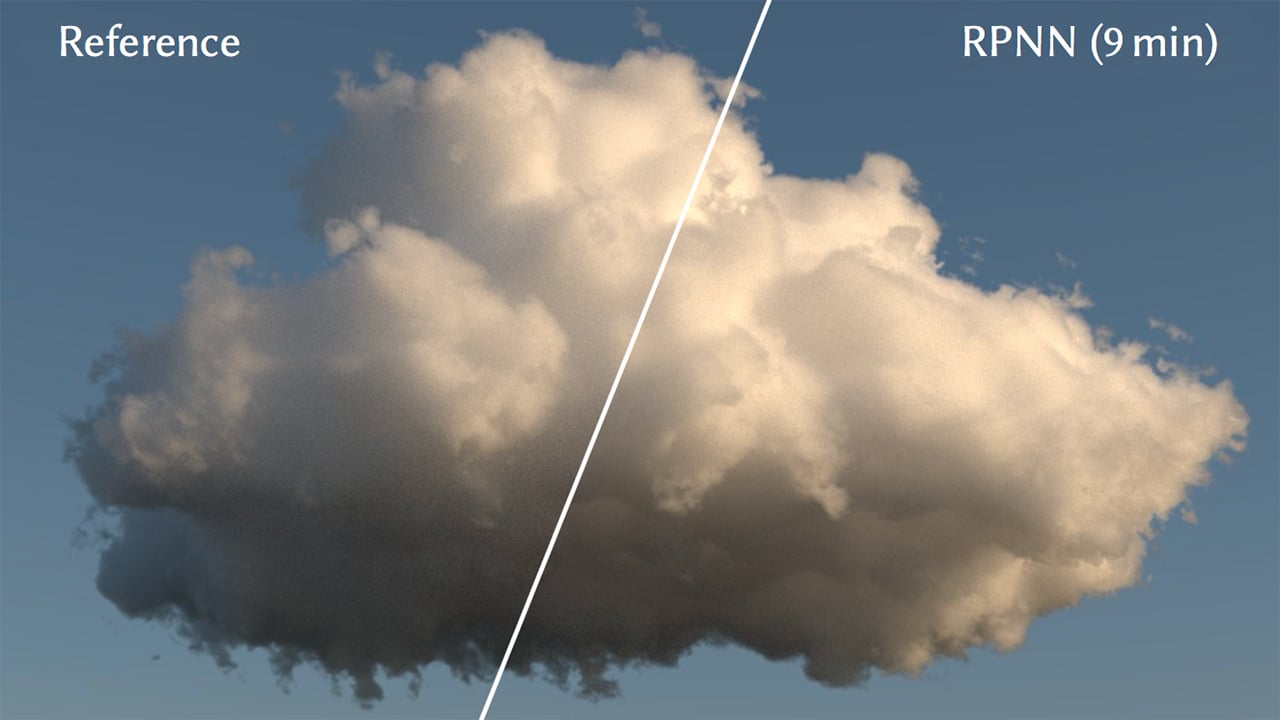

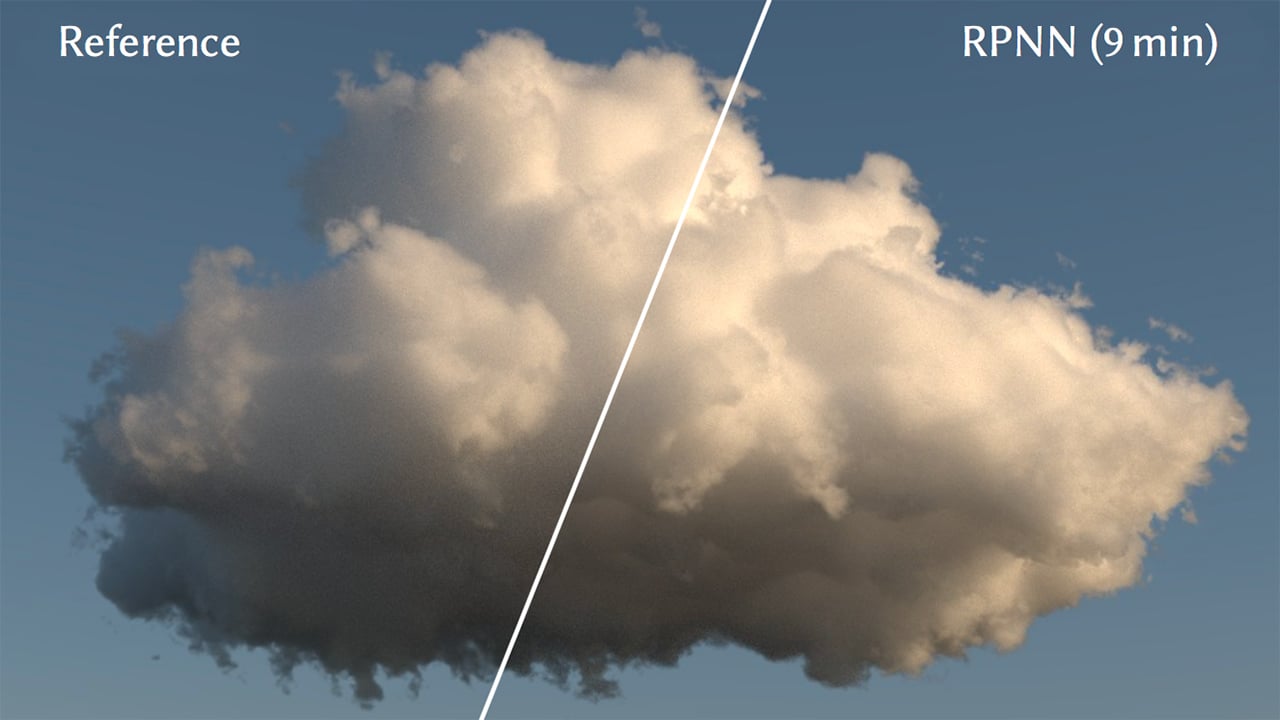

The faster way uses neural networks, and the results really do look production-ready. The technique will need to be integrated into the industry's most popular software before it can be used on real productions, but the images are very realistic. It's also an example of a neural network doing something that seems close to ready for use, and it is certainly immensely fast, producing results in single-digit minutes where other approaches would take a day or more. We're not going to go into the details of how it works, but the associated technical paper is freely available. The paper, “Deep Scattering: Rendering Atmospheric Clouds with Radiance-Predicting Neural Networks”, is by Simon Kallweit, Thomas Müller, Brian McWilliams, Markus Gross and Jan Novák, and appears in ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2017), vol. 36, no. 6.

We don't need to simulate each droplet

Even the slow approaches to rendering clouds rely on approximations. Nobody simulates the billions of individual spherical water droplets, each of which absorbs, reflects and refracts light in complex ways. Light entering a cloud can be absorbed, or it can be reflected and refracted many times before it finds its way out again. Given the number of particles in the cloud and the random factors involved in the propagation of light, the actual path taken by any given photon might be immensely complicated. Usually, this is simulated by firing virtual light rays through the volume of a virtual cloud and estimating what proportion of each ray is diverted from its course and where that light ends up. Because any ray might be diverted in any direction, then diverted again when it reaches another region of the cloud, this is a slow, long-winded approach.
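
To show why this is slow, here is a minimal Monte Carlo sketch of the brute-force idea; it is not Disney's renderer or any production code. It makes simplifying assumptions of its own: the cloud is a flat, uniform slab rather than a real cloud shape; distances are measured in mean free paths (the average distance light travels between interactions); `albedo` is the assumed probability that an interaction scatters rather than absorbs; and the Henyey-Greenstein function stands in for the real droplet scattering pattern. The function names are hypothetical. Each photon keeps scattering until it leaves the slab or is absorbed, and that inner loop is where the time goes.

```python
import math
import random


def sample_hg_cos(g, rng):
    """Draw cos(theta) from the Henyey-Greenstein phase function (asymmetry g)."""
    u = rng.random()
    if abs(g) < 1e-3:
        return 1.0 - 2.0 * u  # effectively isotropic scattering
    s = (1.0 - g * g) / (1.0 - g + 2.0 * g * u)
    return (1.0 + g * g - s * s) / (2.0 * g)


def random_walk_slab(optical_depth, albedo, g, n_photons, seed=1):
    """Trace photons through a uniform slab 0 <= z <= optical_depth.

    Distances are in mean free paths. Returns the reflected, transmitted and
    absorbed fractions plus the mean number of scattering events per photon.
    """
    rng = random.Random(seed)
    reflected = transmitted = absorbed = scatters = 0
    for _ in range(n_photons):
        z, mu = 0.0, 1.0  # enter at the z = 0 face, travelling along +z
        while True:
            # Free-flight distance is exponentially distributed (Beer-Lambert).
            z += mu * -math.log(1.0 - rng.random())
            if z < 0.0:
                reflected += 1
                break
            if z > optical_depth:
                transmitted += 1
                break
            if rng.random() >= albedo:
                absorbed += 1
                break
            # Scatter: rotate the direction by a sampled angle and azimuth.
            scatters += 1
            cos_t = sample_hg_cos(g, rng)
            sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
            phi = 2.0 * math.pi * rng.random()
            mu = mu * cos_t + math.sqrt(max(0.0, 1.0 - mu * mu)) * sin_t * math.cos(phi)
            mu = max(-1.0, min(1.0, mu))  # guard against rounding drift
    n = float(n_photons)
    return reflected / n, transmitted / n, absorbed / n, scatters / n


if __name__ == "__main__":
    for depth in (1.0, 10.0, 30.0):
        r, t, a, k = random_walk_slab(depth, albedo=0.999, g=0.85, n_photons=2000)
        print(f"depth {depth:5.1f}: reflected {r:.2f}, transmitted {t:.2f}, "
              f"absorbed {a:.2f}, scatters/photon {k:.1f}")
```

The mean number of scattering events per photon grows quickly as the slab gets thicker, which is the long-winded part described above; a full renderer repeats this for many rays per pixel through a three-dimensional density field.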

Faster ways of rendering clouds generally don't look very good, though they are often used in quite high-end filmmaking, such as computer-generated children’s films whose art style can tolerate a stylised look. That won't do for live-action material, where a photoreal technique might even help with pyrotechnics and smoke effects (though their behaviour is not necessarily that similar, especially at small scales). One goal of the new technique seems to have been what the researchers call “interactive performance”, so that an artist can change a control and evaluate the result while the mouse button is still down. The neural network certainly provides that: it's still not fully real-time, but a viewable preview arrives in a few seconds rather than a few hours.

The result reproduces the key characteristics of clouds: the bright line around the edge when a cloud is backlit, the change in colour from white to grey depending on the incident light, and more besides. Crucially, the rendering also stays stable as certain settings change, so it doesn't start to look unrealistic when, for instance, the direction of the light changes. That makes it suitable for animation, which is clearly Disney's interest. The demonstrations also animate the cloud structure itself, as wind might, although this is less successful and spoils the photorealism of the result.
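
The bright edge on a backlit cloud comes largely from the fact that water droplets scatter light strongly forwards. A common simplified model of that is the Henyey-Greenstein phase function, whose single parameter `g` runs from 0 (scattering equally in all directions) towards 1 (almost entirely forwards). The sketch below is an illustration under that assumption only; the article doesn't say how the Disney network models scattering, and physically based cloud renderers often use the more detailed Lorenz-Mie phase function instead. The value `g = 0.85` is a ballpark figure often quoted for water clouds, used here purely for illustration, and the function names are hypothetical.

```python
import math


def henyey_greenstein(cos_theta, g):
    """Henyey-Greenstein phase function: scattering density per steradian.

    cos_theta is the cosine of the angle between incoming and outgoing
    directions; g in (-1, 1) sets how strongly light is scattered forwards.
    """
    denom = (1.0 + g * g - 2.0 * g * cos_theta) ** 1.5
    return (1.0 - g * g) / (4.0 * math.pi * denom)


def forward_to_back_ratio(g):
    """Ratio of the scattering density straight ahead to straight back."""
    return henyey_greenstein(1.0, g) / henyey_greenstein(-1.0, g)


if __name__ == "__main__":
    for g in (0.0, 0.5, 0.85):
        print(f"g = {g}: forward/back ratio {forward_to_back_ratio(g):.0f}")
```

The ratio works out to ((1 + g)/(1 - g))³, so at g = 0.85 the density of scattering straight ahead is close to 1,900 times that of scattering straight back, which helps explain why a thin, backlit edge glows.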

This sort of rendering can be viewed as a special case of sub-surface scattering, an optical effect most famously seen in human skin. The rendering of humans became a lot more convincing once it was realised that light can penetrate skin slightly and then bounce out again. Clouds show something of the same effect, albeit over a much deeper volume. Tests have been done to see whether the new cloud code could also be applied to materials such as skin. There were problems, and if it can be made to work, it won't be with exactly the same code.
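
Beer-Lambert attenuation is one way to see why skin and cloud can be described by the same physics at very different scales: the fraction of light that has travelled a distance d without being scattered or absorbed is exp(-d/ℓ), where ℓ is the mean free path. Only the ratio d/ℓ matters, and a cloud's mean free path is vastly longer than skin's, hence the "much deeper volume". The sketch below is illustrative only: the function names are hypothetical, lengths are in arbitrary units, and it covers just straight-line attenuation, not the repeated scattering that makes sub-surface effects expensive to render.

```python
import math


def transmittance(distance, mean_free_path):
    """Beer-Lambert: fraction of light that travels `distance` without interacting."""
    return math.exp(-distance / mean_free_path)


def depth_for_transmittance(target, mean_free_path):
    """Depth at which the uninteracted fraction has fallen to `target` (0 < target <= 1)."""
    return -mean_free_path * math.log(target)


if __name__ == "__main__":
    # Arbitrary units: only the ratio distance / mean_free_path matters.
    for mfp in (0.001, 1.0, 1000.0):
        d = depth_for_transmittance(0.01, mfp)
        print(f"mean free path {mfp:g}: 1% of light unscattered at depth {d:.4g}")
```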

Overall, though, it's an absolute triumph of photorealistic simulation. It even simulates the contribution of skylight. Perhaps most impressively, the network was trained on a very small collection of only 75 cloud images, although we don't know how complex the training was, and keeping the training process relatively simple may have been a requirement in itself.

A video presentation gives a reasonable overview of the approach, and the full technical paper is also freely available.

