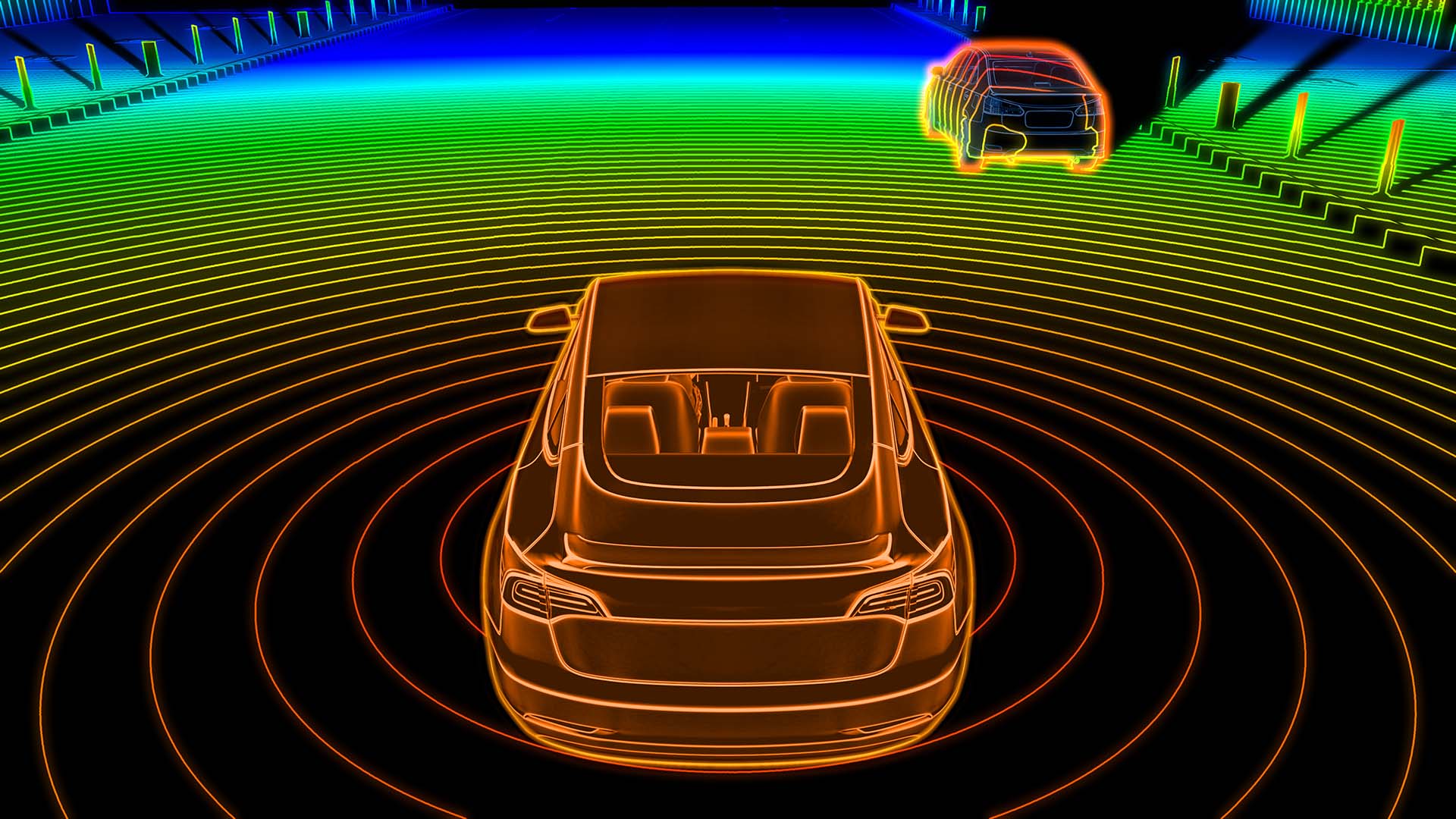

A new solid-state LiDAR chip could finally make high-resolution depth scanning a reality for small devices. But how does it differ from current systems, and can it really offer the benefits we're expecting?

When the first laser was fired by Theodore Maiman's team at the Hughes Aircraft lab in Malibu on May 16, 1960, it created a fantastic opportunity for a beach party that evening. It was also more or less the prototypical solution in search of a problem, though eligible problems were quickly provided. One was rangefinding and 3D mapping, which is crucial in the modern world both in the context of self-driving cars (which are likely to be a huge boon to society) and virtual reality applications in which we pretend to be a futuristic super-soldier (which is what'll actually fund the work).

Whether we're driving an electric car or scanning a raisin-oatmeal cookie for a Blender physics demo, environment scanning is a technology both in demand and ripe for improvement. The 3D sensor system on the original Xbox Kinect is only a 640 by 480 camera, and its effective resolution is lower still. Projecting a field of dots into the world and detecting how much they appear to have moved sideways from an adjacent point of view gives you an idea of the distance to the surface they're being projected onto. The differences are small, though, so it's noisy.
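For the curious, the dot-shift idea boils down to simple triangulation. The following is a minimal sketch, assuming an idealised pinhole camera; the focal length and baseline are made-up illustrative numbers, not the Kinect's real specifications, and the function name is hypothetical.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Triangulated depth in metres: focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Assumed, illustrative values only (not taken from any real device).
FOCAL_LENGTH_PX = 600.0  # focal length expressed in pixels
BASELINE_M = 0.075       # distance between projector and camera

near = depth_from_disparity(30.0, FOCAL_LENGTH_PX, BASELINE_M)
far = depth_from_disparity(10.0, FOCAL_LENGTH_PX, BASELINE_M)
far_off_by_one = depth_from_disparity(9.0, FOCAL_LENGTH_PX, BASELINE_M)

print(f"30 px shift: {near:.2f} m")
print(f"10 px shift: {far:.2f} m")
print(f" 9 px shift: {far_off_by_one:.2f} m")
```

With these assumed numbers, a single pixel of error on a distant dot moves the answer by about half a metre, which is the noise problem in a nutshell.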

Although, then again, so are most of the other approaches. Time-of-flight measurements work, as the name suggests, by detecting how long it takes for light to get from the sensor to the target and back. Photons go fast, so for most distances that's a very small window of time, and flash lidar can be noisy as a result.
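To put a number on "a very small window of time", here's a rough sketch of the time-of-flight arithmetic. It assumes light travelling at its vacuum speed and a simple out-and-back path; the function names are illustrative, not from any particular lidar library.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_time_s(distance_m):
    """Time for light to reach a target and come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def distance_from_round_trip_m(seconds):
    """Distance to the target given the measured round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2.0


room_scale = round_trip_time_s(3.0)        # a wall three metres away
one_centimetre = round_trip_time_s(0.01)   # timing step for 1 cm of depth

print(f"3 m round trip: {room_scale * 1e9:.1f} ns")
print(f"1 cm of depth:  {one_centimetre * 1e12:.1f} ps")
```

A wall across the room comes back in about twenty nanoseconds, and telling apart two surfaces a centimetre apart means resolving tens of picoseconds.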

A gigantic frickin' laser

A better approach involves taking a laser rangefinder and strapping a rotating mirror assembly on the front, so that it can be used to take millions of measurements of an environment. That's the way that scene scanning for film and TV work is often done. Limits on how fast it can make repeated measurements mean scanning a large environment with reasonable resolution is slow, and it generally requires bulky, heavy, delicate, power-hungry and expensive rotating optical assemblies.

Making this relevant to cellphones - always a good way to get something funded in 2022 - requires a much more solid-state approach. The 3D scanning on phones that currently exists is often reliant on analysis of the camera image and struggles to create results good enough to sell on TurboSquid. Nobody's going to strap a rotating lidar scanner onto a phone any more than they're going to hang around in one spot like a human C-stand for many tens of minutes while the thing does its work.

So, the demand for a depth scanner that can be deployed like any other camera sensor has been intense. Radar nerds, if such a personality type exists, will already be screaming "phased array" at the screen, and that's certainly an option. Much as it sounds like the sort of treknobabble made up by a late-90s sci-fi screenwriter, the idea is simple: take a row of radio aerials and send a signal to all of them at once, and the signal will come out more or less at 90 degrees to the array. Delay the signal slightly to each, though, and we can begin to alter the direction in which the radio signal travels. That's why the famous early radar installations built in the UK had three towers.
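The relationship between delay and direction can be sketched in a few lines. This assumes an idealised, evenly spaced row of aerials where each one is delayed by the same extra amount relative to its neighbour; the function names and the half-metre spacing are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def steering_angle_deg(delay_per_element_s, spacing_m):
    """Beam angle away from straight ahead for a given neighbour-to-neighbour delay."""
    sine = SPEED_OF_LIGHT_M_PER_S * delay_per_element_s / spacing_m
    if abs(sine) > 1.0:
        raise ValueError("delay too large for this element spacing")
    return math.degrees(math.asin(sine))


def delay_for_angle_s(angle_deg, spacing_m):
    """Neighbour-to-neighbour delay needed to steer the beam to angle_deg."""
    return spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_LIGHT_M_PER_S


SPACING_M = 0.5  # assumed aerial spacing, for illustration only

straight_ahead = steering_angle_deg(0.0, SPACING_M)
delay_30 = delay_for_angle_s(30.0, SPACING_M)

print(f"No delay steers to {straight_ahead:.1f} degrees")
print(f"30 degrees needs {delay_30 * 1e9:.3f} ns per aerial")
```

No delay means the beam leaves straight ahead, at 90 degrees to the row, and with this assumed spacing a 30 degree swing needs a little under a nanosecond of extra delay per aerial.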

Phased arrays are counterintuitive, described by aspects of quantum physics that go so deep the fish all have lights on their noses. They're widely deployed, though, particularly in radar for fighter jets (which like being able to see behind themselves) and even in satellite newsgathering trucks. Because phased arrays tend to be flat, that means the satellite truck doesn't even look much like a satellite truck any more.

Since light is just really high frequency radio waves, it's no surprise that phased arrays work for lasers, too. Unfortunately, those frequencies, expressed in hundreds of terahertz compared to the megahertz of most radio, make things difficult if we want lots of angular coverage, high precision, and low noise for fast scanning.
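One way to get a feel for the difficulty is to compare wavelengths. The sketch below assumes the common rule of thumb that array elements sit roughly half a wavelength apart, and picks 100MHz and 193THz (a typical near-infrared laser frequency) purely as example figures.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_m(frequency_hz):
    """Free-space wavelength for a given frequency."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


def half_wavelength_spacing_m(frequency_hz):
    """Rule-of-thumb element spacing: half a wavelength (an assumption here)."""
    return wavelength_m(frequency_hz) / 2.0


RADIO_HZ = 100e6   # example radio frequency
LIGHT_HZ = 193e12  # example near-infrared laser frequency

for label, frequency in (("radio", RADIO_HZ), ("light", LIGHT_HZ)):
    spacing = half_wavelength_spacing_m(frequency)
    print(f"{label}: element spacing of about {spacing:.3g} m")
```

Radio aerials can sit a metre or more apart; the optical equivalent needs features well under a micrometre, with delays controlled to match.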

The new method, MEMS

One solution arises in microelectromechanical systems (MEMS), the devices that make it possible to put a gyroscope in your phone. Whether or not MEMS are truly solid-state devices is a matter of opinion, but we've had systems capable of steering beams of light for a while in the form of Texas Instruments' moving-micromirror DLP projector chips. The concept of silicon photonics is increasingly applied to this sort of thing, as well as to more general-purpose computing, as the estimable Mr Wyndham recently noted.

We're veering off onto the treacherous backroads of Silicon Valley venture capitalism and the things it funds, now. Still, since at least 2015 one company, Quanergy, has been discussing MEMS lidar in the context of self-driving cars, and not just single-point scanning but area sensors. The company made its first announcement at CES, though, and while it's arguable that cars are an aspect of consumer entertainment, it's equally possible that Quanergy is just as interested in the huge market associated with making everyone's social media mugshot into a 3D model.

So, perhaps we'll all soon be able to make a high-resolution 3D scan of our midmorning doughnut and send it to a friend who's on a diet alongside a suitably mocking emoji. As with any rangefinding technology that relies on sending out light, these new designs are likely to work progressively less well as range increases, which is exactly the opposite of ideal behaviour for something that might help out the average focus puller. Still, the idea of being able to make a depth sensor that looks basically like a conventional camera's imaging sensor sounds great.

Anyone interested in a deeper dive on this should look at the paper "MEMS Mirrors for LiDAR: A Review" by Wang et al. at the University of Florida's department of electrical and computer engineering. It's a reasonably easy read for an academic paper, and goes into fascinating detail about many of the options that currently exist.

