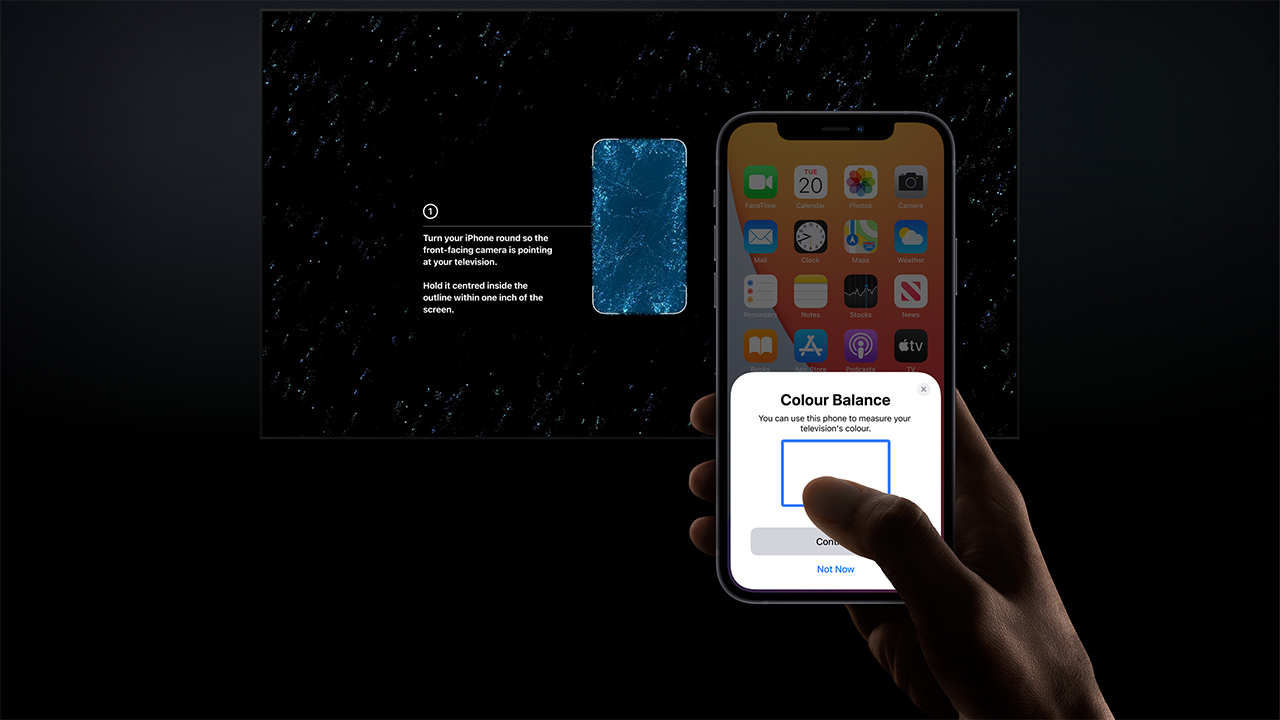

Toward the end of April, Apple announced a new feature for its Apple TV decoders: with a suitably recent iPhone, users can perform a calibration of the attached display using the phone’s front-facing camera.

The feature arrives at a time when TVs have frankly never been more capable, although that capability is sometimes hamstrung as much by commercial expediency as by technical limits.

A proportion of the audience, perhaps that proportion which has spent five figures on a top-of-the-line calibration probe and software, will already be forming a pitchfork-wielding mob in the comments section at the suggestion that a mere phone camera can reasonably behave as a calibration probe. There’s more at stake here, though, than just the suitability of an iPhone’s Face ID camera for serious optical metrology.

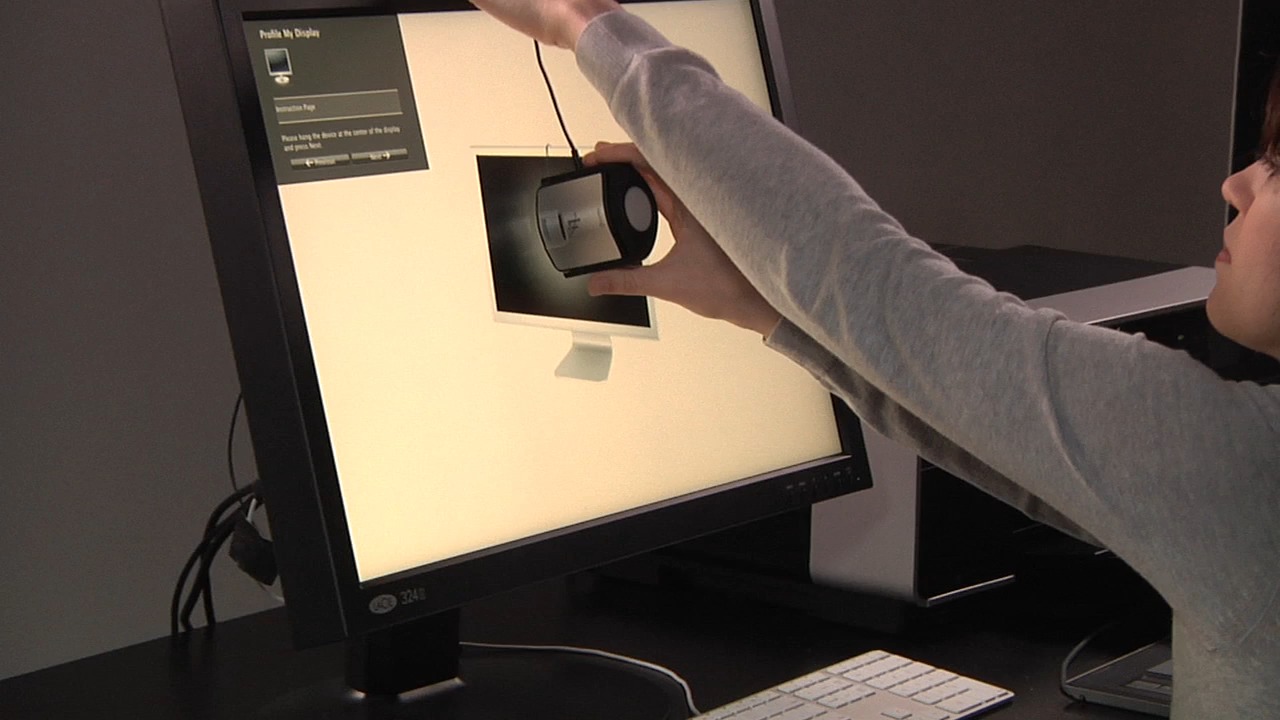

To be fair, Apple calls the feature “colour balance” and the tests appear to take significantly less than ten seconds – perhaps something like six – involving only primary colours and full white, so we probably shouldn’t expect it to match the results of a pass with CalMan. Low-cost calibration probes, things like the X-Rite i1Display Pro, tend to perform most poorly at low light levels where noise becomes an issue, and while the phone camera is probably technologically dissimilar to any sort of real monitor probe, it’s reasonable to expect similar concerns.

That’s possibly why there’s a reluctance to analyse brightness, which would require the measurement of very dim test patches. It’s a shame, because while correct colours are naturally important, human beings aren’t very good at remembering what colours are supposed to look like. Even the best of us can only do it for a few seconds at a time.

Many colourists might place greater value on a predictable threshold of visibility in shadow detail, allowing them to place things more realistically near the precipice of blackness. Make the lurking fugitive too visible, and the characters appear unrealistically unobservant; too shadowed, and the audience may miss the fugitive altogether. Apple’s calibration system could possibly solve the noise problem by sampling and averaging over a longer period, but then the process might take more than six seconds. It’s also possible the sequence was edited for the promo and does include other test patches, which would obviate all this sleuthing.
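The trade-off between measurement time and noise can be illustrated with a small simulation. This is a sketch only: the Gaussian noise model, the noise level and the function names are assumptions made for illustration, not anything Apple has published about how the feature works. It shows that averaging more independent readings of the same dim patch shrinks the scatter of the result, roughly in proportion to the square root of the number of readings.

```python
import random
import statistics


def measure_patch(true_level, noise_sd, n_samples, rng):
    """Average n_samples noisy readings of one patch (hypothetical sensor model)."""
    readings = [rng.gauss(true_level, noise_sd) for _ in range(n_samples)]
    return statistics.fmean(readings)


def spread_of_estimates(true_level, noise_sd, n_samples, trials=1000, seed=1):
    """Repeat the averaged measurement many times and report how much it scatters."""
    rng = random.Random(seed)
    estimates = [
        measure_patch(true_level, noise_sd, n_samples, rng) for _ in range(trials)
    ]
    return statistics.stdev(estimates)


# A dim patch (level 0.5) read by a sensor whose noise (sd 1.0) swamps the signal.
for n in (1, 4, 16, 64):
    print(n, "samples per reading, scatter:", round(spread_of_estimates(0.5, 1.0, n), 3))
```

Quadrupling the number of samples only halves the scatter, which is why a usable reading of a near-black patch could plausibly push the test well beyond six seconds.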

A more traditional method of calibration: the X-Rite i1Display Pro. Image: X-Rite.

Bit-depth

There are, in any case, other reasons Apple’s approach might not work quite as perfectly as everyone would hope. In particular, the adjustments take place in the Apple TV box and that box is probably connected to the monitor via an 8-bit link, at least for SDR. Calibration tools often like to work in the high bit depth environment inside the monitor, or at least in a ten-bit film and TV post setup. The 8-bit image won’t stand much manipulation without starting to pick up quantisation noise and look iffy. Things may vary for various types of HDR material, though the feature is not active for Dolby Vision.
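The cost of adjusting an image that is then squeezed back into 8 bits can be shown with a little arithmetic. The sketch below assumes a simple per-channel gain of 0.9, a value chosen purely for illustration; how the Apple TV actually applies its correction has not been published, and the function name is hypothetical. It counts how many distinct output codes survive when every 8-bit input code is scaled and re-quantised to a given bit depth.

```python
def distinct_levels(gain, in_bits, out_bits):
    """Count distinct output codes after scaling each input code by gain
    and rounding the result to an out_bits-deep integer code."""
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    outputs = set()
    for code in range(in_max + 1):
        value = min(1.0, gain * code / in_max)  # normalised, clipped at white
        outputs.add(round(value * out_max))
    return len(outputs)


# Same 8-bit source and same illustrative gain, sent over an 8-bit or a 10-bit link.
print("8-bit out: ", distinct_levels(0.9, 8, 8), "distinct levels from 256 inputs")
print("10-bit out:", distinct_levels(0.9, 8, 10), "distinct levels from 256 inputs")
```

With an 8-bit output, some neighbouring input codes land on the same output code, so gradations that were distinct in the source merge; with a 10-bit output every input code keeps its own value. That merging is the sort of damage that shows up as banding in smooth gradients.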

That’s about all the guesswork we can do based on published information, and overall, while we’ve picked holes in the idea, it’s good to see display accuracy being popularised at a consumer level. If the phone cameras are sufficiently reliable, there’s a lot more that could be done with this. In extremis, it’s even possible to imagine the phone reading the ambient illumination and tweaking the black levels to account for the variable behaviour of the eye in various lighting conditions, as is sometimes done, even a little on the sly, during higher-end calibration sessions.

Conclusions

One of the realities underlying all this, though, is that even the most basic domestic TVs in 2021 are vastly more accurate than TVs have been for almost the whole history of television. Perhaps most damningly, lots of the inaccuracy that we see in consumer electronics showrooms is deliberate, the result of manufacturers’ attempts to make their TVs look superficially attractive on the shop floor. Many TVs can be made more accurate just by going through the menu and setting as many things as possible to “off”, “normal” or “zero”, especially if the default options are things like “sports” or “vivid”.

The TV manufacturing industry seems to have recognised this problem, hence the “creator mode” we’ve occasionally seen promoted in higher-end home displays. It’s a quirk of technology that the higher-end option is often simpler because it has less to hide. Either way, we’ve never had it so good, even if that comes at a time of uncertainty.

Whether LCDs keep getting better or yet another technology – micro LED? – springs up to replace them, it’s hard to argue with the progress made in terms of the home viewing experience in the last few years. It seems a bit less likely, though, that any other manufacturer will be able to trust a phone camera as much as Apple does.
