Frame.io has launched the public beta of its most significant search overhaul to date, introducing an entirely new Media Intelligence engine that lets users search the way they think and not the way they file.

Frame.io has explained how the new system works in an in-depth blog post. It is built around natural language processing (NLP) and semantic understanding. There's a lot to unpack, but in essence the system is designed to surface assets by searching across comments, transcripts, metadata, and the visual content itself.

For creative teams dealing with ever-expanding media libraries, Adobe, Frame.io's parent company, describes this as a foundational shift rather than an incremental update. Teams now generate more footage, more versions, and more metadata than ever, and traditional folder structures and manual tagging no longer scale.

What’s New in Frame.io Search

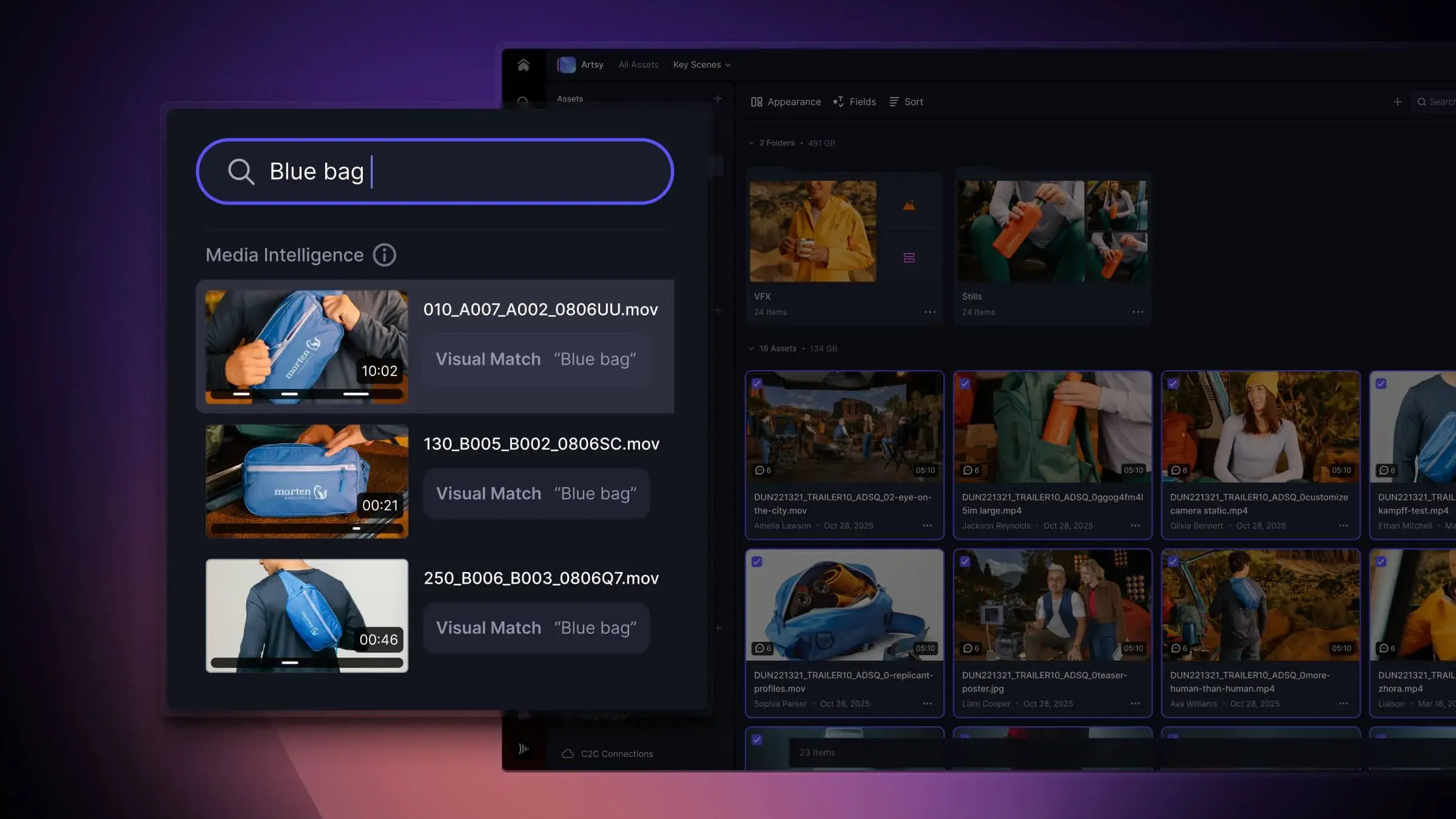

At the centre of the new search is Media Intelligence, which uses NLP to let users type everyday queries (Adobe's example is “4K clips of sunsets uploaded last week”) and find precisely what they want. Search is also multimodal, running across comments, transcripts, and expanded metadata, and it is available on all paid Frame.io plans with no tier restrictions.

On the Teams and Enterprise tiers, Semantic Search is also unlocked for all newly ingested assets. It identifies moments, objects, emotions, and visual concepts within images and video without relying on filenames or tags, and it generates subclip markers for quick navigation.

Hybrid Search brings it all together, combining lexical, NLP, and semantic search in a single query. Adobe illustrates this with the query “approved 4K ProRes sunset clips of happy people.”

Results are split into two groups: Standard (name and metadata matches) and Media Intelligence (NLP and semantic matches). Quick actions let users jump directly to assets, comments, transcripts, or semantic subclips, and the search can interpret multi-intent requests involving dates, file sizes, ratings, metadata fields, and more.

As with its other AI offerings, including its Firefly generative AI products, Adobe says that no customer media or scraped web data is used to train the models. Organisations can also opt out of semantic processing specifically if they wish.

Current Limitations and Future Developments

There's a lot here, but it isn't the finished article yet. Negation logic (e.g., “not trees”) isn't supported, custom metadata fields can't be searched, and Adobe says ranking and recency sorting are still being tuned. In addition, only the last 30 days of uploads are indexed semantically by default, though historical indexing is available on the Enterprise tier.

These limitations feed into Adobe's roadmap for the feature, which includes:

- Performance and ranking improvements
- Better NLP interpretation
- Fine-tuned semantic weighting
- Future features under exploration, such as face recognition and auto-tagging

Pricing and Availability

Pricing is unchanged, and the new search beta is available to all paid accounts running the latest release of Frame.io Version 4.

Frame.io's blog post has more detail, and Adobe has also published examples, tips, and best practices in a dedicated support article to help users get the most out of this release.
