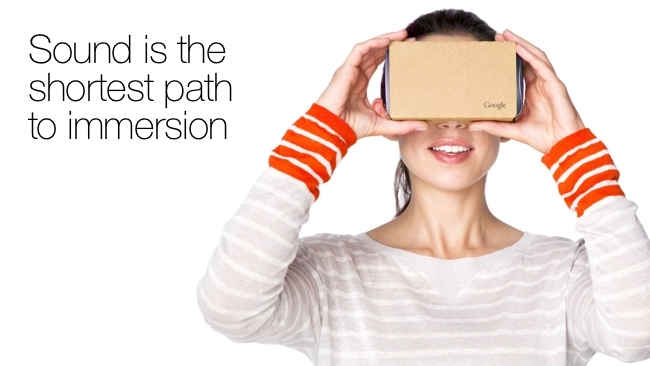

Google Cardboard

Google Cardboard is a remarkable and easily obtained VR viewer. We tried it out and wondered how much directional audio would improve the experience.

I recently tried out Google’s utilitarian (but actually rather good) Cardboard VR visor. If you haven’t tried it yet, it’s a clever and very cheap way to use your smartphone as a VR viewer. The “Cardboard” part of it is a folded mount for the phone that also houses two lenses with a 45mm focal length, one for each eye.

Google-provided software running on the phone splits the display into two areas, one for each eye. It also corrects for the intrinsic distortion of the simple lenses by applying an opposing (barrel) distortion to each image.

When you hold the device up to your eyes, you see a display that floats in front of your face. As you move your head, the scene changes proportionately. It works side to side and vertically. It even works if you tilt your head.

I tried the system with Lockwood Publishing’s Avakin. This is a multi-user virtual world populated by avatars that are created by the users. The avatars exist in a shared space: as you look around, you can see other people’s avatars, and their owners see the same scenery, objects and avatars as you do.

Avatars can communicate with each other through text messages. You can purchase clothes and other artefacts to wear, to live in and to play with.

Avakin has some lush environments, with a strong bias towards sun, sand and sea.

How did it look in Google Cardboard? Actually pretty good. If you ever wondered whether you’d benefit from an 8K resolution display in a smartphone, here’s your reason. Effectively you’re dividing the phone’s resolution in two (because you need half of the screen for each eye), and then each side has to fill almost your entire field of vision.
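To put rough numbers on that, the sketch below halves a phone's horizontal resolution for each eye and spreads the result across the lens's field of view. The 2560×1440 panel and the 90° per-eye field of view are illustrative assumptions rather than measured Cardboard figures, and the function names are hypothetical.

```python
def per_eye_resolution(width_px, height_px):
    """Split a landscape phone display side by side: each eye gets half the width."""
    return width_px // 2, height_px


def pixels_per_degree(eye_width_px, fov_deg):
    """Average horizontal pixel density across the field of view (ignores lens distortion)."""
    return eye_width_px / fov_deg


# Hypothetical examples: a QHD phone versus an 8K (7680x4320) panel,
# both viewed through lenses assumed to give a 90-degree field of view per eye.
FOV_DEG = 90.0
for name, (w, h) in {"QHD": (2560, 1440), "8K": (7680, 4320)}.items():
    eye_w, eye_h = per_eye_resolution(w, h)
    print(f"{name}: {eye_w}x{eye_h} per eye, "
          f"{pixels_per_degree(eye_w, FOV_DEG):.1f} pixels per degree")
```

Under those assumptions the QHD phone delivers about 14 pixels per degree and the 8K panel about 43, which is why the extra resolution would be so visible in a headset even though it seems excessive on a handheld screen.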

It does this admirably, but there are limitations. You can see the individual pixels, and there is quite pronounced aliasing along diagonals.

But despite this, the resulting experience is perfectly acceptable. As you move your head, the images track responsively. That is remarkable when you consider that a smartphone is not a specialist VR device, and it just goes to show how good the internal motion sensors in smartphones actually are these days.

It does get a bit jerky: sometimes, when you move, the motion isn’t entirely smooth. I don’t know whether this is a limitation of the phone’s sensors or an inability to render the scene quickly enough. The "Cardboard" itself, of course, has no impact on performance.

But the point is that it is close enough and good enough to be enjoyable. You definitely get that cinematic transfer of reality so that you believe you are there. Some scenes work better than others. One of the best for me was when I was inside a beach shelter, with four posts holding up a palm-leaf roof. You could see the floor and the ceiling. And you could see the beach, the sea and the seagulls flying too. There was a real sense of being there, and you wanted to pivot your head as far as possible to see what was actually out there.

The VR experience will only get better. But meanwhile, one way to add massively to the experience would be to have VR sound.

It doesn’t have to be complicated. The very least that would be required (and this would suffice in most circumstances) is the ability to rotate a sound field. Let’s say you’re listening to the waves breaking. This would be a typical aural backdrop to a beach scene, and it doesn’t need much positional accuracy. In fact, it would still enhance the virtual environment even if it didn’t respond to head movements at all.
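One established way to do this (our choice of technique, not something Cardboard or Avakin is known to use) is to store the ambience as first-order Ambisonics, or B-format, where rotating the whole sound field to follow a head turn costs only a few multiplications per sample. This is a minimal sketch, assuming the phone supplies a head-yaw angle in radians (positive means turning left) and ignoring pitch, roll and the final decode to headphones; the function names are hypothetical.

```python
import math


def encode_first_order(sample, azimuth_rad, elevation_rad=0.0):
    """Encode a mono sample into first-order B-format (W, X, Y, Z).

    Uses the traditional 1/sqrt(2) weighting on W. Azimuth is measured
    anticlockwise from straight ahead, so positive values are to the left.
    """
    w = sample / math.sqrt(2.0)
    x = sample * math.cos(azimuth_rad) * math.cos(elevation_rad)
    y = sample * math.sin(azimuth_rad) * math.cos(elevation_rad)
    z = sample * math.sin(elevation_rad)
    return w, x, y, z


def rotate_yaw(w, x, y, z, head_yaw_rad):
    """Counter-rotate the sound field so it stays fixed in the virtual world
    when the listener turns their head by head_yaw_rad (positive = left)."""
    c, s = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    x_rot = x * c + y * s
    y_rot = y * c - x * s
    # W (omnidirectional) and Z (height) carry no left/right information,
    # so a pure yaw rotation leaves them untouched.
    return w, x_rot, y_rot, z
```

For example, waves encoded straight ahead end up fully on the listener's right after a 90° turn to the left, which is exactly what should happen if the sea stays put while the head moves. Because only X and Y are mixed, even a modest phone could afford this for a whole ambience bed.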

Other sounds are more directional. Birdsong is always a great way to simulate a natural environment, and a sort of generalised backdrop of seagulls would work well here. On top of that, and this might be a bit of a luxury, you could envisage seagull sounds that follow the birds as you see them in the sky. This would need more accurate tracking, calling for more resources and highly layered audio.
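For individual sources such as a seagull the listener can actually see, the app would have to recompute each source's direction relative to the head on every frame. The sketch below does that with plain constant-power stereo panning and a simple inverse-distance gain. It assumes a flat 2D world with x pointing forward and y pointing left, and the names are hypothetical. A production engine would more likely use HRTF-based binaural rendering, since stereo panning cannot convey front/back or height.

```python
import math


def relative_azimuth(source_xy, listener_xy, head_yaw_rad):
    """Angle of the source relative to where the head points, in [-pi, pi].

    World axes: x forward, y to the left; angles are anticlockwise (positive = left).
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    diff = math.atan2(dy, dx) - head_yaw_rad
    return math.atan2(math.sin(diff), math.cos(diff))  # wrap back into [-pi, pi]


def stereo_gains(source_xy, listener_xy, head_yaw_rad, ref_distance=1.0):
    """Constant-power (left, right) gains for one source, with 1/distance attenuation."""
    az = relative_azimuth(source_xy, listener_xy, head_yaw_rad)
    pan = math.sin(az)                   # +1 = hard left, -1 = hard right; front and back fold together
    theta = (pan + 1.0) * math.pi / 4.0  # map pan position onto 0..pi/2
    distance = math.hypot(source_xy[0] - listener_xy[0], source_xy[1] - listener_xy[1])
    attenuation = ref_distance / max(distance, ref_distance)
    # sin^2 + cos^2 = 1, so perceived loudness stays constant as the bird moves across
    return attenuation * math.sin(theta), attenuation * math.cos(theta)
```

A gull 10 m to the listener's left comes out entirely in the left channel at a tenth of full level; turn the head 90° to the left and the same gull sits dead centre. In practice the gains would be ramped smoothly between frames to avoid audible stepping.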

All of this is, or soon will be, well within the capabilities of smartphones.

The ultimate virtual audio environment would include reverberation based on your surroundings, and audio accompaniments for your movements, especially footsteps. This can be incredibly effective. Ages ago (over ten years) I remember seeing an exhibit where sensors picked up your footsteps and, completely in sync, played audio to make you think you were walking on various textures. I remember autumn leaves, shallow water and a pebble beach. Even though it didn’t physically feel like you were walking on these surfaces, it certainly made you think that you were. You would see people lifting and shaking their feet to the sound of walking through puddles.
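For the reverberation part, a game engine needs at least a plausible reverb time for each virtual space. One classic rule of thumb is Sabine's formula, RT60 ≈ 0.161·V / (S·α) in metric units, where V is the room volume, S its surface area and α the average absorption coefficient of the surfaces. The sketch below applies it to a shoebox-shaped room; the single average absorption value is a simplifying assumption, and Sabine's formula tends to overestimate in very absorbent rooms, so treat the result as a starting point for a reverb preset rather than a simulation. The function name is hypothetical.

```python
def sabine_rt60(length_m, width_m, height_m, absorption):
    """Estimated time in seconds for sound to decay by 60 dB in a rectangular room.

    absorption: average absorption coefficient of all surfaces, 0 < absorption <= 1.
    """
    if not 0.0 < absorption <= 1.0:
        raise ValueError("absorption must be in (0, 1]")
    volume = length_m * width_m * height_m
    surface = 2.0 * (length_m * width_m + length_m * height_m + width_m * height_m)
    return 0.161 * volume / (surface * absorption)


# Hypothetical rooms: a large hard-walled hall and a small furnished living room
print(f"hall: {sabine_rt60(20, 15, 8, 0.1):.2f} s")
print(f"living room: {sabine_rt60(5, 4, 2.5, 0.3):.2f} s")
```

With these made-up dimensions the hall comes out at roughly 3.3 seconds and the living room at about 0.3 seconds, enough of a difference for a player to hear immediately which kind of space they have walked into.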

There’s a long way to go with some of these techniques, but most of it is possible now. I’m pretty sure that if VR content providers were to pay more attention to directional audio, we’d be much more accepting of a low pixel count on the displays.
