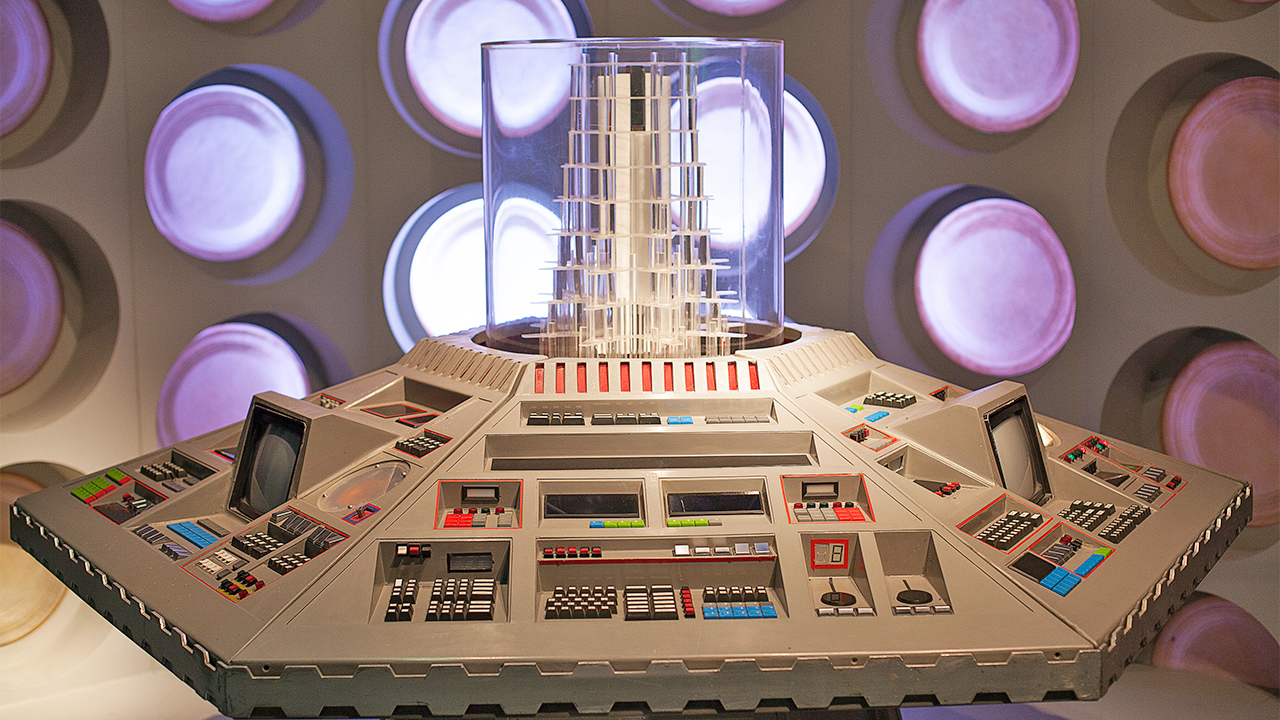

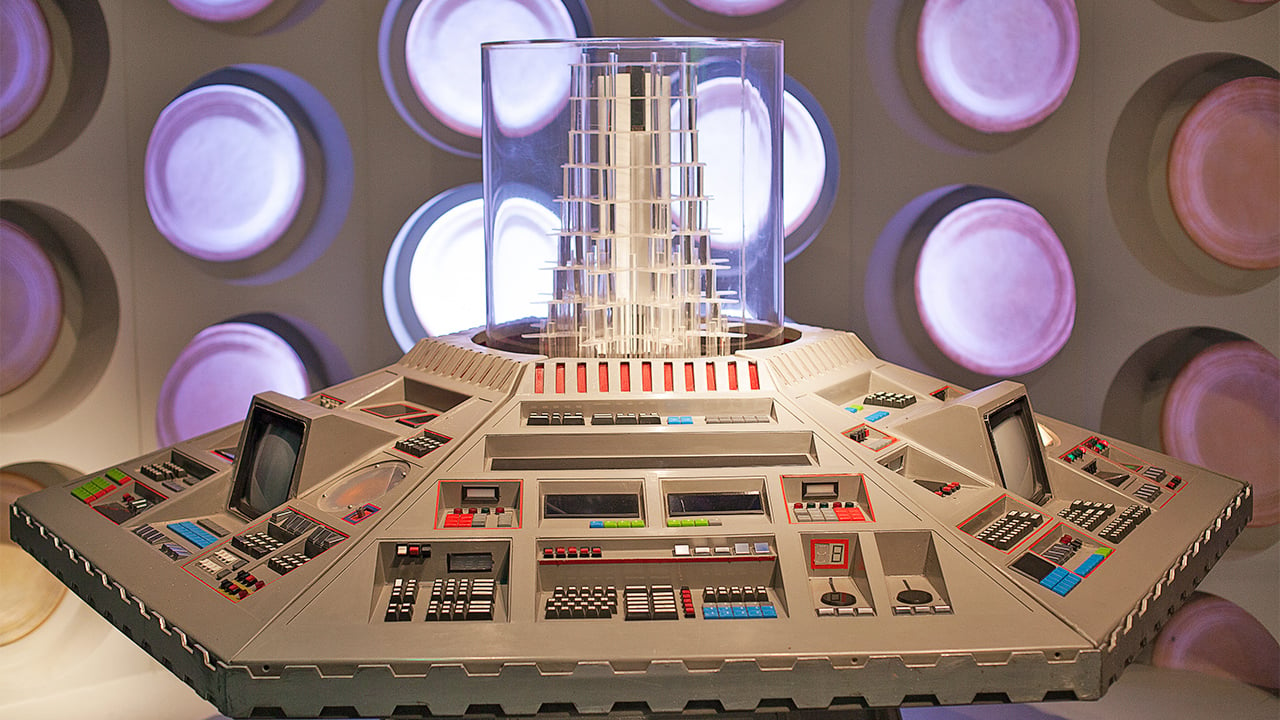

How developers are effectively making VR possible in small spaces. A bit like a virtual version of Doctor Who's TARDIS.

One of the incidental pleasures of SIGGRAPH is the single PDF file which contains the first page of all the technical papers to be presented at the conference. The result is a sort of choice buffet of technological morsels from which it's possible to get a rough idea of where the cutting edge is being cut, and what to look out for in the next Pixar masterpiece.

Papers on related subjects seem to be grouped together, and most of them aren't as impenetrable as we might fear. Read the first few sentences of the paper entitled “Quadrangulation through Morse-Parameterization Hybridization” and it becomes clear that it's about better ways to convert meshes made out of three-pointed shapes to meshes made out of four-pointed shapes. The details sometimes become hazy – read on, if you understand the Dirichlet energy of optimal periodic vector fields – but the general area is usually fairly clear. Sometimes the titles are frustratingly jargon-heavy, as in “tempoGAN: A Temporally Coherent, Volumetric GAN for Super-resolution Fluid Flow,” which seems to decode to “better smoke rendering with artificial intelligence,” but it only takes a little reading between the lines to work that out.

Anyway, there are pictures of 3D rendered smoke, which makes it fairly obvious.

Subjects such as modelling tricks and special effects like smoke and complex translucency are not particularly surprising for SIGGRAPH, though they are useful. There are also things like “Shape Representation by Zippables,” featured in the demo video we've already looked at, which are surprising but perhaps not all that useful. Then there are things which are both surprising and useful, particularly the two papers which deal with ways to trick our brains into thinking we've travelled further than we really have when we're in a VR world.

VR tricks

The papers in question are called “Towards Virtual Reality Infinite Walking: Dynamic Saccadic Redirection” and (deep breath) “In the Blink of an Eye – Leveraging Blink-Induced Suppression for Imperceptible Position and Orientation Redirection in Virtual Reality.” Both of them suggest related techniques for overcoming the same problem: that the amount of space in a virtual world can't be bigger than the amount of space in the real world. It's possible to draw an acre of open space in the computer, but if the user tries to walk across it while inside an average-sized office, a very real, non-virtual wall will soon get in the way.

Both of the techniques described rely on the idea that even humans with a normal visual system are effectively blind for about ten percent of the time. We can't really see very much when our eyes are darting from target to target and when we occasionally blink. It is apparently possible to make changes to the virtual world during these very brief instants which the brain doesn't notice, and which don't, crucially, provoke motion sickness or the other physiological problems of VR and 3D. The idea is for the computer to be aware of the fact that the user is walking towards a virtual object and, when the user blinks, to change the position of the camera to be slightly closer. As a result, the user perceives a normal process of walking toward an object in the virtual world, but the real-world distance covered is reduced.
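For the curious, the blink trick can be sketched in a few lines of Python. This is only a toy model of the idea as described above, not the method from either paper: it walks in a straight line, treats a blink as a single frame, and ignores the rotation tricks the papers also discuss. The class name, the 5cm-per-blink budget and the blink interval are all made-up assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class RedirectedWalker:
    """Tracks real and virtual distance walked along one straight line."""

    # Hypothetical limit on how far the camera may jump per blink.
    # The real imperceptibility thresholds are not given in this article.
    max_shift_per_blink_m: float = 0.05
    real_m: float = 0.0
    virtual_m: float = 0.0

    def step(self, real_step_m: float, eyes_closed: bool, target_m: float) -> None:
        """Advance one frame; nudge the camera forward only during a blink."""
        self.real_m += real_step_m
        self.virtual_m += real_step_m
        if eyes_closed:
            remaining = max(0.0, target_m - self.virtual_m)
            self.virtual_m += min(self.max_shift_per_blink_m, remaining)


def simulate(target_m: float = 6.0, step_m: float = 0.01, blink_every: int = 100) -> RedirectedWalker:
    """Walk towards a virtual target, blinking once every `blink_every` frames (assumed)."""
    walker = RedirectedWalker()
    frame = 0
    while walker.virtual_m < target_m:
        frame += 1
        walker.step(step_m, eyes_closed=(frame % blink_every == 0), target_m=target_m)
    return walker


if __name__ == "__main__":
    w = simulate()
    print(f"virtual distance: {w.virtual_m:.2f} m, real distance: {w.real_m:.2f} m")
```

With these invented numbers the user reaches a virtual target six metres away having physically walked a little less than that. The saving per blink is small by design, since the whole point is that each jump has to stay below what the brain would notice.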

Using this technique, researchers were able to simulate a 6.4m square space convincingly inside a room only 3.5m square. It requires eye tracking, but it's not the only VR trick that requires us to know where the user is looking. The idea of foveated rendering is to spend more rendering effort on the graphics the user is actually looking at, which makes a lot of sense, given that humans really don't see much sharpness other than in a fairly small bullet-hole in the middle of the field of view. Doing that means the computer needs to know what we're looking at, which means it will also know when we're flicking our eyes from spot to spot, so it can – well – cheat.
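As a rough illustration of how the same eye-tracking data could serve both purposes, here is a minimal Python sketch. It is not taken from the papers or from any real headset SDK: the function names, the 5 and 20 degree zones, the resolution fractions and the 180 degrees-per-second saccade threshold are all assumptions chosen to make the example readable.

```python
import math


def angular_distance_deg(a, b):
    """Angle in degrees between two 3D unit direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to guard against rounding pushing the value outside acos's domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def shading_scale(eccentricity_deg, fovea_deg=5.0, mid_deg=20.0):
    """Fraction of full resolution to render at, given distance from the gaze point.

    The zone sizes and fractions are illustrative guesses, not measured values.
    """
    if eccentricity_deg <= fovea_deg:
        return 1.0
    if eccentricity_deg <= mid_deg:
        return 0.5
    return 0.25


def is_saccade(prev_gaze, gaze, dt_s, threshold_deg_per_s=180.0):
    """Flag a saccade when the gaze direction moves faster than an assumed threshold."""
    return angular_distance_deg(prev_gaze, gaze) / dt_s > threshold_deg_per_s


if __name__ == "__main__":
    straight_ahead = (0.0, 0.0, 1.0)
    ten_deg_right = (math.sin(math.radians(10)), 0.0, math.cos(math.radians(10)))
    print(shading_scale(angular_distance_deg(straight_ahead, ten_deg_right)))
    print(is_saccade(straight_ahead, ten_deg_right, dt_s=1 / 90))
```

The point of the sketch is that one stream of gaze directions feeds both decisions: where to spend rendering effort, and when the eye is moving too fast to notice the world being quietly rearranged.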

Over the next few days, there should be a good few more things like this. Mathematics and modelling are great, of course, but it's when the worlds of biology and physics start to interact with them that the real fun starts. In the meantime, have a scroll through the first pages in the PDF. Some of it's complicated, but it's all fascinating.

