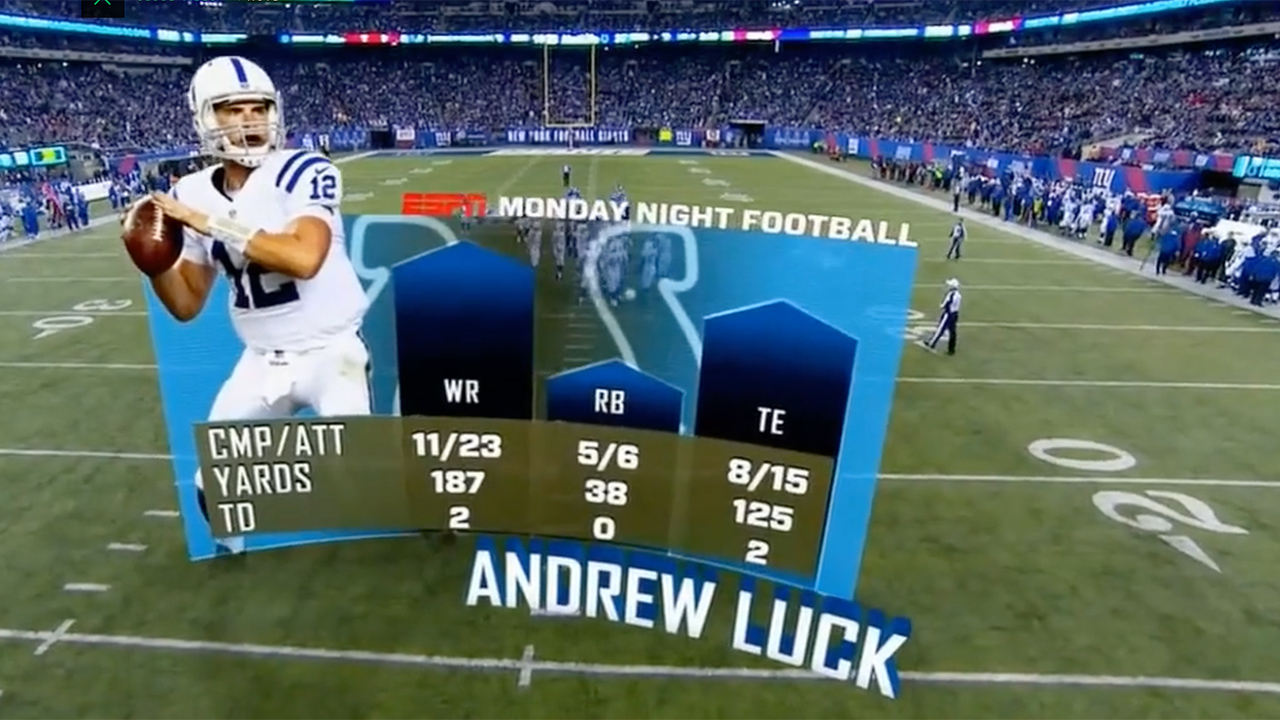

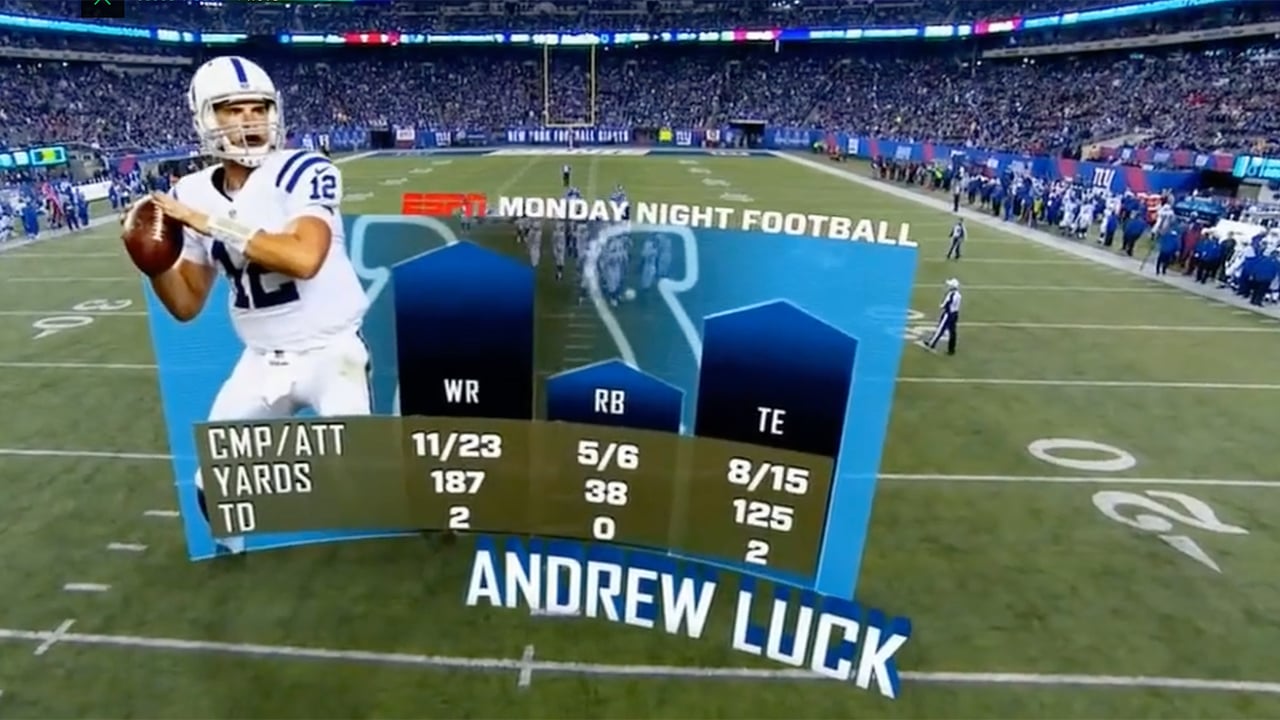

ESPN have been using Ncam's technology to enable live overlays on their sports broadcasts

With NAB just around the corner, one technology that looks set to break into the mainstream is the use of computer games engines (such as Unreal and Unity) to create photorealistic virtual objects and scenery in real time.

It’s now possible to create entire, convincing animated CGI films with these engines (like this one, rendered in real time with Unity).

Meanwhile, companies like Ncam have been developing ways to use these engines to bring virtual objects into real-time productions. It’s easy to imagine the time and cost savings this brings compared with traditional animation methods.

Virtual sets

Real-time virtual sets have been around for a long time, though. RT-SET, which merged with Peak Broadcast Services to form VizRT in 2000, started life in 1994. What’s new is that you no longer need an SGI supercomputer to process the graphics, nor large numbers of markers and grids to let cameras track the scene.

If that was the first generation, the second brought the ability to use PCs with Nvidia GPUs and a choice of camera-tracking technologies: for example, encoded heads from Vinten, marker-based tracking from Mo-Sys and Stype, or marker-less tracking from Ncam.

The third generation, which is where the sector is heading now, uses games engines such as Epic Games’ Unreal Engine 4. This brings far greater capability, including:

1) Photo-realism. The more realistic you can make virtual objects in an augmented reality set, the more they will be accepted by the viewer as simply part of the scene.

2) Physical attribute realism. This is the ability of games engines to render depth of field in augmented graphics that matches the depth of field of the camera lens, to add particle effects (e.g. rain and snow) and to simulate physics (e.g. gravity). It also means generating a convincing world with naturally behaving elements like grass, trees, shrubs and all the peripheral details that you barely notice in real life, but which would be conspicuous if they didn’t behave naturally.

3) Positional realism. This is the ability to understand the depth of both real and augmented objects in a scene, and to know which should appear in front of the other.
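Deciding which object should overlay the other is commonly handled as a per-pixel depth test. The sketch below is a minimal illustration, not Ncam's or any engine's actual method. It assumes the tracking system supplies a depth map for the camera image (distance from the lens for each pixel) and the engine supplies one for the rendered graphics; the function name and data layout are hypothetical.

```python
import math
from typing import List, Tuple

Color = Tuple[int, int, int]
Image = List[List[Color]]
DepthMap = List[List[float]]


def depth_composite(camera_rgb: Image, camera_depth: DepthMap,
                    cg_rgb: Image, cg_depth: DepthMap) -> Image:
    """Hypothetical per-pixel depth compositor.

    Depth values are distances from the camera (smaller means nearer).
    Pixels where no virtual object was rendered should hold math.inf in cg_depth.
    """
    height, width = len(camera_rgb), len(camera_rgb[0])
    for layer in (camera_rgb, camera_depth, cg_rgb, cg_depth):
        if len(layer) != height or any(len(row) != width for row in layer):
            raise ValueError("all layers must share the same resolution")

    out: Image = []
    for y in range(height):
        row: List[Color] = []
        for x in range(width):
            # The virtual pixel wins only if it is strictly nearer than the real one,
            # so a real object standing in front of the graphic hides it.
            if cg_depth[y][x] < camera_depth[y][x]:
                row.append(cg_rgb[y][x])
            else:
                row.append(camera_rgb[y][x])
        out.append(row)
    return out


# A one-row, three-pixel example: a red virtual object at different depths.
camera_rgb = [[(10, 10, 10), (20, 20, 20), (30, 30, 30)]]
camera_depth = [[5.0, 2.0, 8.0]]
cg_rgb = [[(255, 0, 0), (255, 0, 0), (255, 0, 0)]]
cg_depth = [[3.0, 4.0, math.inf]]

print(depth_composite(camera_rgb, camera_depth, cg_rgb, cg_depth))
# → [[(255, 0, 0), (20, 20, 20), (30, 30, 30)]]
```

In the example, the first pixel shows the graphic because it sits in front of the real scene, the second shows the camera image because the real object is nearer, and the third shows the camera image because nothing virtual was drawn there. A production system would typically run this comparison on the GPU, but the principle is the same.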

Following on from this is the ability to capture real-world lighting and use that data to light augmented objects in real time, so that they reflect their real-world environment. It’s hard to overstate how important this is in convincing viewers that an augmented object is part of the real-world scene.

Ncam seems to be leading the field with this technology, especially in its ability to extract depth information and map lighting onto virtual or augmented objects.

If you’re at NAB, you’ll find them on stand C.5629.
