It’d be easy to get the impression that realtime 3D is really coming of age. For a long time, all computer graphics looked like – well – Tron, at best, and often not nearly as slick.

Then pre-rendered 3D started looking good enough for things like spaceships, and now we’re able to clamber almost (but not quite) all the way out of the uncanny valley in which CG humans have often lived. Realtime CG renderers have not been idle, of course, and ever since 2007’s seminal Crysis, Nvidia and AMD, and the people who write the code, have been pushing hard to make video games look as good as movies.

And haven’t they? We’re now using code written to draw video games, things like Unreal Engine, to produce graphics for LED volumes that we expect to look photorealistic. OK, that doesn’t often involve human beings, but recent releases such as Cyberpunk 2077 and Watch Dogs: Legion are both capable, if only occasionally, of producing scenes that provoke a fleeting impression of a real city just the other side of the thin-film transistors. Isn’t this convergence? Can we expect to see a future in which the difference between pre-rendered and realtime 3D becomes irrelevant?


The original Elite just about managed to depth-sort its wireframe graphics, making them notionally solid-filled (if only ever with black)

Well, maybe, but this isn’t it. Until very recently, video games invariably used what was fundamentally a development of techniques going back decades. First, we started making 3D objects out of triangles. Why triangles? Because no matter where we put three points in space (as long as they don’t all sit on one line), they always describe a flat, two-dimensional area. At first, the triangles weren’t even filled in – wireframe graphics you could see straight through. Next, we figured out things looked better if we filled them in solid. Then we started sticking bits of image data onto those triangles so that they’d have some texture, and at about the same time started taking notice of where virtual light sources were in the scene to make the triangles brighter or darker.
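To make the point about triangles concrete, here’s a minimal Python sketch (the helper names are our own, purely for illustration) that builds the plane through three points and checks whether other points lie on it. All three corners of a triangle always pass the test; the fourth corner of a warped quad need not.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def plane_from_triangle(p0, p1, p2):
    """Return (normal, d) such that dot(normal, x) == d for every x on the plane."""
    n = cross(sub(p1, p0), sub(p2, p0))
    if n == (0, 0, 0):
        # Three points on one line don't pin down a single plane.
        raise ValueError("points are collinear; they do not define a plane")
    return n, dot(n, p0)

def on_plane(plane, p, eps=1e-9):
    n, d = plane
    return abs(dot(n, p) - d) <= eps

tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
plane = plane_from_triangle(*tri)
print(all(on_plane(plane, v) for v in tri))  # True: a triangle is always flat
print(on_plane(plane, (1.0, 1.0, 0.5)))      # False: a warped quad's fourth corner
```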

The late 90s saw hardware acceleration, which would eventually become the GPUs we so powerfully leverage now. This is the 1998 game Unreal, from which the modern Unreal Engine takes its name

Trickery

That level of technology worked out okay through the 2000s, but there are some obvious things it didn’t do, things that pre-rendered 3D could. Until recently, video games couldn’t show accurate reflections. They could sometimes do something that sort of looked like a reflection of the environment in a chrome surface, but look closely and it was just a fixed image that didn’t represent changes in the environment being reflected. Nor could they easily show shadows, at least not without a lot of workarounds: shadows of unmoving objects such as terrain and buildings were pre-rendered into images, then pasted onto the triangles to simulate light and shadow.

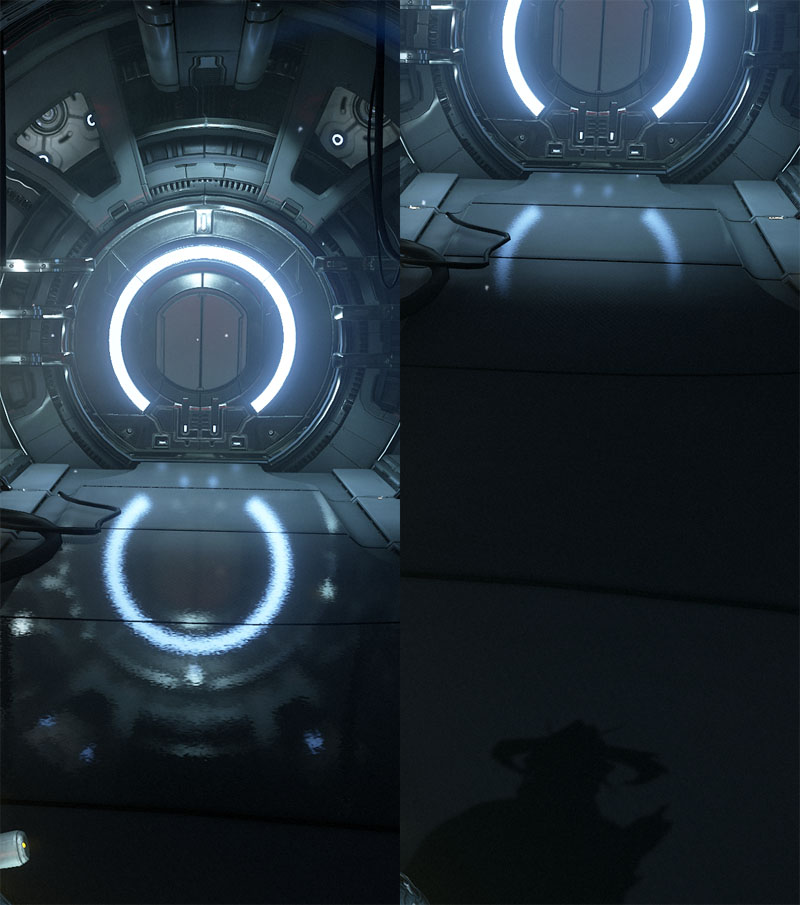

Reflection by image flipping. If we look downward, the top half of the hatch isn't being rendered, and thus can't be reflected. The game fades it out in the hope we won't notice

That’s a good example of the sort of limitations realtime 3D engines often impose in order to achieve what they do. Ever wonder why you can’t blow up walls in the average first-person shooter? Because, at least in part, the pre-rendered lighting stops being valid if you move a large shadow-casting object out of the way. Maya (or its renderer) renders that sort of thing fresh, every frame, or at least it can if it needs to.
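As a toy illustration of why baked lighting breaks when the level changes, the sketch below models a hypothetical one-dimensional strip of floor with a wall’s shadow baked into it. The brightness values and the wall’s position are invented for the example; real lightmaps are 2D textures produced by an offline lighting bake.

```python
def light_at(texel_x, wall_present, wall_span=(4, 8)):
    """Brightness of one floor texel: 1.0 fully lit, 0.2 in the wall's shadow (made-up values)."""
    in_shadow = wall_present and wall_span[0] <= texel_x < wall_span[1]
    return 0.2 if in_shadow else 1.0

FLOOR = range(12)

# "Bake" once, offline, while the wall still stands: this is what ships with the level.
lightmap = [light_at(x, wall_present=True) for x in FLOOR]

# At runtime the player blows the wall up; this is what the lighting *should* be now.
live = [light_at(x, wall_present=False) for x in FLOOR]

# Texels where the shipped lightmap is now wrong.
stale = [x for x in FLOOR if lightmap[x] != live[x]]
print(stale)  # [4, 5, 6, 7]: floor still drawn in a shadow that nothing casts
```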

Still, combine all those reasonable-looking but actually very inaccurate tricks together, evolve for ten or fifteen years, and we get Crysis. It should be very clear, though, that a lot of shortcuts are involved. That spectacular mountain range? A fixed background image; you can never go there. The fine detail on your sci-fi rifle? The rivets are clever types of image mapping, so don’t look too close. That mountainside full of trees? Geometry instancing allows the system to use multiple copies of the same tree, scaled and rotated and scattered. Statue reflected in a pool of water? That’s the time-honoured technique of taking the picture of the statue, flipping it upside down, and relying on the irregular surface of the water to hide the fnords.
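Geometry instancing is easy to picture in code. The following Python sketch (all names hypothetical) stores one tree mesh and, for each copy, only a position, a scale and a rotation about the vertical axis. A real engine hands this per-instance data to the GPU and applies the transform in a vertex shader; here it’s done in plain Python just to show the idea.

```python
import math
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Instance:
    """Per-copy data: the only thing stored for each extra tree."""
    x: float
    z: float
    scale: float
    yaw: float  # rotation about the vertical (y) axis, in radians

# One shared mesh: a hypothetical, very low-poly "tree".
TREE_MESH = [(0.0, 0.0, 0.0), (0.5, 2.0, 0.0), (-0.5, 2.0, 0.0), (0.0, 3.0, 0.0)]

def scatter(count, area, seed=1):
    """Scatter `count` instances over a square `area` units on a side."""
    rng = random.Random(seed)
    return [Instance(rng.uniform(0, area), rng.uniform(0, area),
                     rng.uniform(0.8, 1.3), rng.uniform(0, 2 * math.pi))
            for _ in range(count)]

def world_vertices(mesh, inst):
    """Scale, rotate about y, then translate the shared mesh for one instance."""
    c, s = math.cos(inst.yaw), math.sin(inst.yaw)
    out = []
    for x, y, z in mesh:
        x, y, z = x * inst.scale, y * inst.scale, z * inst.scale  # scale
        x, z = c * x + s * z, -s * x + c * z                      # rotate about y
        out.append((x + inst.x, y, z + inst.z))                   # translate
    return out

forest = scatter(10_000, area=500.0)
print(len(forest), "instances share", len(TREE_MESH), "vertices")
```

The memory saving is the point: ten thousand trees cost ten thousand small records plus one mesh, rather than ten thousand copies of the mesh.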

Problems occur with that statue in the pool if, for instance, we’re looking down at the water so the top of the statue is out of view, even though we should still be able to see its reflection. We can’t flip an image we haven’t rendered. Basically, video games cheat. A lot. That’s fine, when it works. The problem is that, eventually, all the workarounds become more work than doing it properly.
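The flipping trick, and its failure, can be sketched in a few lines. This assumes a very simplified frame: a list of rendered rows, top of the screen first, with the waterline at a known row. Each row below the waterline copies the row the same distance above it; when that source row would lie above the top of the screen, it was never rendered, and there’s nothing to copy. The function name is hypothetical.

```python
def flipped_reflection(frame, waterline):
    """
    Fill the rows below `waterline` by mirroring the rows above it.
    `frame` is a list of rendered rows, row 0 being the top of the screen.
    Rows whose mirror source lies above the top of the screen were never
    rendered, so they come back as None: there is nothing to flip.
    """
    reflection = []
    for k in range(1, len(frame) - waterline):
        src = waterline - k  # the row the same distance above the waterline
        reflection.append(frame[src] if src >= 0 else None)
    return reflection

# Looking down at the pool: only the statue's base made it onto the screen.
frame = ["statue base", "waterline", "pool", "pool", "pool"]
print(flipped_reflection(frame, waterline=1))  # ['statue base', None, None]
```

A ray tracer sidesteps this by following the reflected ray back into the scene itself, rather than reusing whatever pixels happen to be on screen.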

Cyberpunk 2077. Objects not in view may still be reflected, among many other nice tricks.

Realtime ray tracing

Define properly? Well, for a short while, since, say, the release of Nvidia’s RTX series, games have been capable of cheating slightly less, through ray tracing. Situations like that reflecting pool become a little more reliable if we actually work out where the rays of light would go for real. It’s easier conceptually, if not computationally, and lots of workarounds are still required. One workaround that should be recognisable to pre-rendered CG people is noise reduction, based on the fact that we can’t sample every ray from every light for every pixel on the screen. Instead, we sample a random selection, which gives us a noisy image, and then apply noise reduction.
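Here’s the sampling-plus-denoising idea reduced to a toy. Assume a hypothetical row of pixels lit by an area light, with an occluder that hides more of the light towards the right, so the true brightness is a smooth ramp. Each pixel fires only four random shadow rays, which gives a noisy estimate, and a crude box filter then averages neighbouring pixels. This is a sketch of the principle, not how any particular game’s denoiser works.

```python
import random

def render_row(width, samples_per_pixel, rng):
    """
    Monte Carlo estimate of how much of an area light each pixel can see.
    Toy setup: the light spans [0, 1); at pixel i an occluder hides the part
    of the light below t = i / (width - 1), so the true visible fraction is
    1 - t. We fire a few random shadow rays instead of all of them.
    """
    row, truth = [], []
    for i in range(width):
        t = i / (width - 1)
        hits = sum(1 for _ in range(samples_per_pixel) if rng.random() >= t)
        row.append(hits / samples_per_pixel)
        truth.append(1.0 - t)
    return row, truth

def box_denoise(row, radius=2):
    """Crude denoiser: average each pixel with its neighbours (window clamped at the edges)."""
    out = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out

def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

rng = random.Random(42)
noisy, truth = render_row(width=256, samples_per_pixel=4, rng=rng)
clean = box_denoise(noisy)
print(f"noisy error:    {mean_abs_error(noisy, truth):.3f}")
print(f"denoised error: {mean_abs_error(clean, truth):.3f}")
```

A box blur like this also smears genuine detail, such as hard shadow edges, which is why production denoisers lean on extra information like surface normals, depth and previous frames.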

That is very much something realtime engines have in common with conventional 3D graphics software. Between that and ray tracing, there’s certainly growing commonality between realtime and non-realtime renders, and it’s therefore no surprise that things seem to be starting to converge. What we need to recognise is that the scope and scale of what realtime 3D can do is necessarily limited, for all of the reasons we’ve considered here. In 2021, we’re some way from being able to create arbitrarily large and complex scenes and accurately simulate all of the lighting in realtime, in the way we can in software like Cinema 4D, where the renderer has time to go away and think about things.

Crysis. A truly epoch-making opportunity to travel to the world's most beautiful places, and blow them up

Still, it’s hard to complain that some of the most respected television currently in production is using technology that was unequivocally built to bring PlayStation owners joy, and with great success. Without video games, we’d have no GPU processing, no realtime LED volumes, and, most importantly of all, no Cyberpunk 2077. I’ll be busy for the next hour or so.

