Panasonic Beam Splitter


Most large-sensor cameras use a Bayer colour filter pattern, and the full colour image is only produced after "debayering". The whole process is now pretty routine, but unfortunately so is the amount of light lost in the colour filters: each photosite sits under a red, green or blue filter that absorbs the wavelengths it does not pass. Less light means more noise, and noise is what ultimately limits the low-light capabilities of all cameras.
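To put a rough number on that loss, here is a minimal Python sketch. It assumes an idealised Bayer filter that passes exactly one third of a flat spectrum (real filters overlap and are far from ideal) and counts only photon shot noise. Shot noise follows Poisson statistics, so the signal-to-noise ratio grows as the square root of the number of photons collected. The `BAYER_TRANSMISSION` constant and the function names are purely illustrative.

```python
import math

# Idealised assumption: each Bayer filter passes exactly one third of the light.
BAYER_TRANSMISSION = 1 / 3


def shot_noise_snr(photons: float) -> float:
    """Poisson statistics: noise = sqrt(N), so SNR = N / sqrt(N) = sqrt(N)."""
    return math.sqrt(photons)


def compare(photons_at_pixel: float) -> tuple[float, float]:
    """Return (SNR with no filter, SNR behind an idealised Bayer filter)."""
    unfiltered = shot_noise_snr(photons_at_pixel)
    filtered = shot_noise_snr(photons_at_pixel * BAYER_TRANSMISSION)
    return unfiltered, filtered


if __name__ == "__main__":
    full, bayer = compare(900)
    print(f"no filter:    SNR {full:.1f}")
    print(f"Bayer filter: SNR {bayer:.1f}")
    print(f"SNR ratio:    {full / bayer:.3f}")  # square root of 3
```

Under these assumptions, discarding two thirds of the light costs a factor of about 1.7 (the square root of 3) in signal-to-noise ratio, before any other noise source is counted.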

So any way to extract colour information from a sensor that did not involve throwing away most of the light would have to be considered a major improvement.

It looks like Panasonic has made exactly such a breakthrough - although it is some way off being used in commercial products.

Although we will have to wait for this new technology to appear in our cameras, it does look very credible, if somewhat hard to understand.

Diffraction is better than filtering

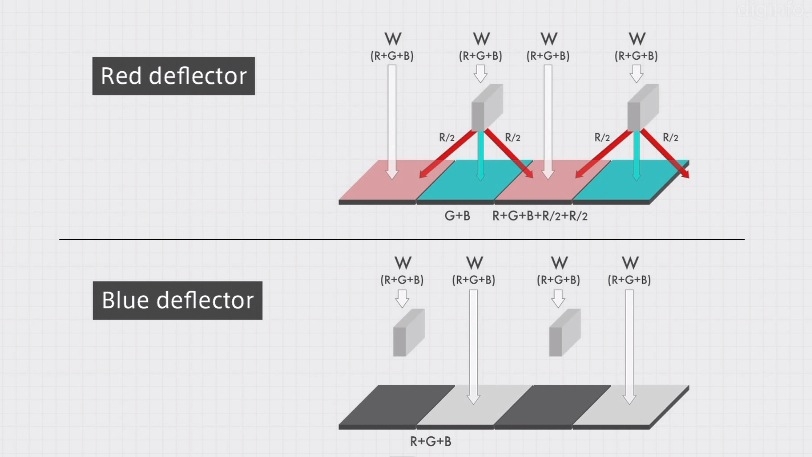

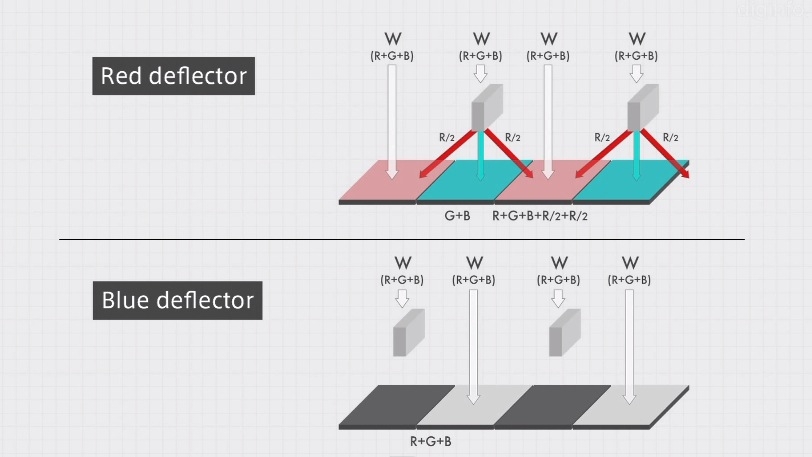

Essentially, the new technique uses diffraction instead of filtering. Tiny diffraction elements in front of the sensor split the incoming light: some wavelengths go straight through the diffractor onto the photosite directly underneath, while others are angled sideways onto adjacent photosites.
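The difference between splitting and filtering can be illustrated with a deliberately simplified toy model. This is a hypothetical arrangement, not Panasonic's actual design: a splitter above photosite A lets green and blue pass straight down and deflects red sideways onto neighbouring photosite B, which has no splitter of its own. The comparison filter pair is idealised, and the `Light`, `split_pair` and `filter_pair` names are made up for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Light:
    """Light arriving above one photosite, split into idealised R, G, B parts."""
    r: float
    g: float
    b: float

    @property
    def total(self) -> float:
        return self.r + self.g + self.b


def split_pair(over_a: Light, over_b: Light) -> tuple[float, float]:
    """Hypothetical splitter over photosite A: green and blue go straight down,
    red is deflected sideways onto photosite B, which has no splitter."""
    signal_a = over_a.g + over_a.b        # A keeps everything except its red
    signal_b = over_b.total + over_a.r    # B keeps all its own light plus A's red
    return signal_a, signal_b


def filter_pair(over_a: Light, over_b: Light) -> tuple[float, float]:
    """Idealised filter comparison: green filter over A, red filter over B."""
    return over_a.g, over_b.r


if __name__ == "__main__":
    patch = Light(r=0.3, g=0.5, b=0.2)    # same light falls on both photosites
    incoming = 2 * patch.total
    split = sum(split_pair(patch, patch))
    filtered = sum(filter_pair(patch, patch))
    print(f"incoming light:   {incoming:.2f}")
    print(f"splitter records: {split:.2f}")   # all of the incoming light
    print(f"filters record:   {filtered:.2f}")
```

In this toy model every photon ends up on one photosite or the other, so the two signals add up to all of the incoming light, whereas the filtered pair records only a fraction of it.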

Extracting the "true" colours from the sensor output then takes some complex calculations, much more complex than their Bayer equivalents, but the process is well understood, and with a few generations of optimisation it should be possible to put this technique into cameras that you can actually buy.
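As a rough illustration of why this maths differs from debayering, here is a Python sketch built on a hypothetical 2×2 cell (an assumption for illustration, not a published Panasonic layout). One photosite has its red deflected away onto a neighbour, and another has its blue deflected away onto a second neighbour, so the four signals are white-minus-red, white-plus-red, white-minus-blue and white-plus-blue. Assuming the colour is uniform across the cell, red, green and blue fall out of sums and differences of those signals. The `sense` and `reconstruct` functions are made up for this sketch.

```python
def sense(r: float, g: float, b: float) -> tuple[float, float, float, float]:
    """Toy forward model of one hypothetical 2x2 cell with uniform colour.

    White light W = R + G + B. One photosite loses its red to a neighbour,
    which gains it; a second pair of photosites does the same with blue."""
    w = r + g + b
    return w - r, w + r, w - b, w + b


def reconstruct(w_minus_r: float, w_plus_r: float,
                w_minus_b: float, w_plus_b: float) -> tuple[float, float, float]:
    """Invert sense(): recover (R, G, B) from the four photosite signals."""
    white = (w_minus_r + w_plus_r + w_minus_b + w_plus_b) / 4  # +/- terms cancel
    red = (w_plus_r - w_minus_r) / 2
    blue = (w_plus_b - w_minus_b) / 2
    green = white - red - blue
    return red, green, blue


if __name__ == "__main__":
    signals = sense(0.3, 0.5, 0.2)
    red, green, blue = reconstruct(*signals)
    print(f"R={red:.3f} G={green:.3f} B={blue:.3f}")  # recovers the input colour
```

A real pipeline would also have to cope with colour that varies from one photosite to the next, and with noise that these subtractions can amplify, which is presumably part of why the calculations are heavier than Bayer interpolation.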

Luckily, DigInfo TV has made a video explaining the process.
