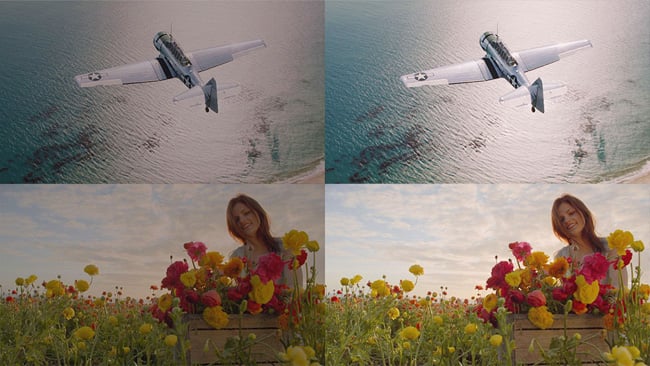

This image has been used a lot as a demonstration of what remastering might achieve. It isn't necessarily, or even often, important for material to have been shot with HDR in mind.

In the first of a new multi-part series on HDR theory and practice, Phil Rhodes explains dynamic range in brief and the challenges in displaying HDR for production and distribution.

When we were in the process of replacing 35mm film with digital capture, the loudest voices always shouted about resolution, even though that wasn't ever really the biggest problem. You could, after all, tell the difference between higher and lower production values on formats as lowly as VHS. Even back then, although the term hadn't really emerged, we wanted better pixels, at least as much as we wanted more pixels. Now, with 8K widely discussed, we have more pixels than any reasonable human being could possibly need, with the possible exception of VR. At least in terms of conventional, fixed-screen production, though, the demand for those better pixels has finally started to receive the prominence that it probably always deserved.

Faced with the issue of highlight handling, camera manufacturers have been striving to improve the performance of their products for decades. The need to handle highlights better was recognised fairly early, in the context of CCD video cameras which recorded reasonably faithfully up to a certain maximum brightness, then rendered either hard white or strangely-tinted areas of flat colour after that point, depending on how the electronics worked. This created a need for better dynamic range – the ability to simultaneously see very dark and very bright subjects, or at least to have enough information to produce a smooth, gradual, pleasant transition from the very bright to the completely overexposed, rather like film does more-or-less by default.

Dynamic breakdown

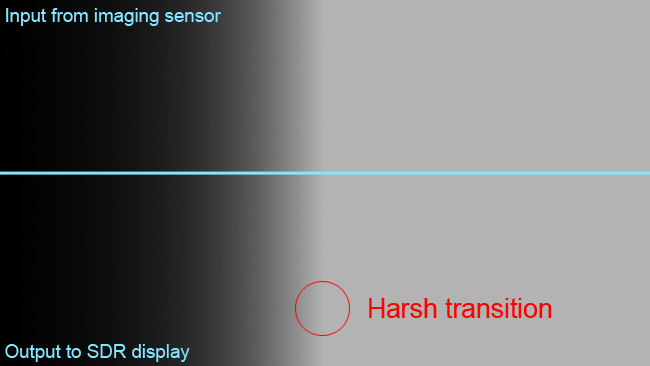

Traditionally, we might expect the camera to record gradually increasing brightness, which suddenly reaches an overexposed level, and we might display that image like this:

When there is no gradual transition between well-exposed and overexposed areas, a harsh edge can be visible, as here.

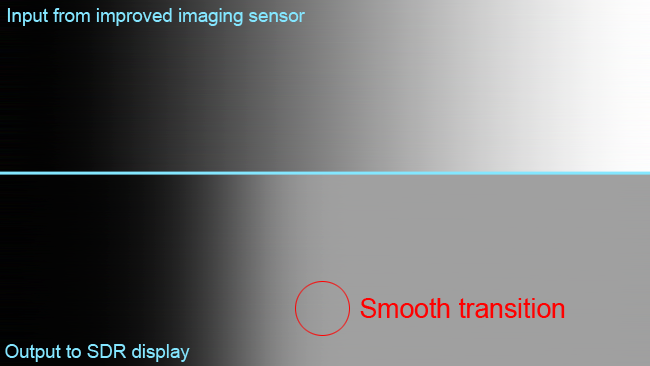

With better cameras, we can record a wider range of brightness levels (shown at the top of the image below). The display is still the same, though, because it's easy to change cameras, but very hard to change millions of TVs. So, we can't display the entire range. What we can do is apply a Photoshop-style curves filter to smooth out that highlight transition, as shown at the bottom of the image:

The wide dynamic range of the camera, top, is manipulated to produce a smooth, pleasant-looking transition to the highlights in a standard-dynamic-range image, bottom.

Now, the blacks are the same as before and the finished image, at the bottom, reaches peak brightness at the same point as it did before. It does, however, look arguably, well, nicer. Smoother. Less harsh. When applied to a live action image, more filmic, perhaps. These images are somewhat approximate, given the vagaries of how computer graphics work on the web, but they demonstrate the principle.
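As a rough sketch of that curves adjustment, the Python below compares a hard clip with a soft "knee" that eases the highlights into white. The knee position, the assumed scene maximum and the particular easing curve are all choices made for illustration, not any manufacturer's actual processing.

```python
# Illustrative only: values are scene brightness relative to display white (1.0).
# KNEE, SCENE_MAX and the easing curve are assumptions, not a real camera's maths.

KNEE = 0.7        # below this level the image is left alone
WHITE = 1.0       # brightest level the display can show
SCENE_MAX = 4.0   # brightest scene level the camera is assumed to record


def hard_clip(scene, white=WHITE):
    """Reproduce brightness faithfully up to white, then clip abruptly."""
    return min(scene, white)


def soft_knee(scene, knee=KNEE, white=WHITE, scene_max=SCENE_MAX):
    """Leave values below the knee alone; ease everything above it into white."""
    if scene <= knee:
        return scene
    if scene >= scene_max:
        return white
    t = (scene - knee) / (scene_max - knee)      # 0 at the knee, 1 at scene_max
    power = (scene_max - knee) / (white - knee)  # keeps the slope continuous at the knee
    return knee + (white - knee) * (1.0 - (1.0 - t) ** power)


# Compare the two treatments for a few scene brightness levels.
for scene in (0.5, 0.7, 1.0, 2.0, 4.0):
    print(f"scene {scene:.1f}: clip {hard_clip(scene):.3f}, knee {soft_knee(scene):.3f}")
```

Both versions leave the darker tones alone and both top out at the same display white. The difference is that the knee version still distinguishes between scene levels above white, where the hard clip renders them all identically.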

In theory, this sounds straightforward and cameras have been doing it for decades. It does, however, open something of a can of worms because it plays (for the sake of pretty pictures) with a fundamental thing: the amount of light that goes into the camera, versus the amount of light that comes out of the monitor. If we were to design a television system now, we'd probably define that relationship very carefully, with the idea that the monitor should look like the real scene. In reality, that was never really a goal of the early pioneers of television, who were more interested in making something that worked acceptably than they were in that sort of accuracy.

In fact, that relationship wasn't really very well-defined, at least by anything other than convention, until quite recently. As we've seen, manufacturers have already been playing around with the way cameras make things look nicer, given how monitors work. What hasn't happened is for anyone to start playing around with monitors to make things look nicer given how cameras work. OK, that's not quite true; there are various standards which have an influence on monitors, such as the well-known ITU-R Recommendation BT.709, which we've written about before, but the fundamental capability is still broadly similar to the cathode ray tubes of decades past.

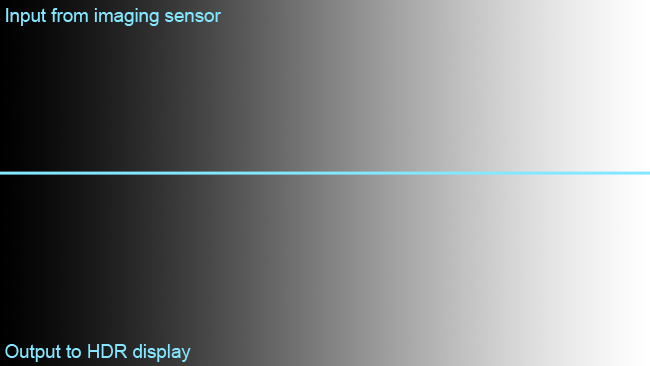

So, smoother, subjectively nicer transitions into highlights help. That's more-or-less what we've been doing, via grading of cinema material and various in-camera processing options. The obvious question, and the one that HDR seems to answer, is why we can't have this:

High dynamic range imaging ideally involves little or no loss of highlight information, and maintenance of highlight brightness, from camera to display.

Well, we'd have to make better monitors, which can achieve brighter peak whites.

Monitoring for upgrade

If we think about it, it'd make a lot of sense to update display technologies. We have cameras which can see much brighter highlights than our monitors can display. OK, we can take the full brightness range of a modern camera and scale it to fit into the brightness range of a monitor, but that will make the picture look incredibly dull and flat. There just isn't enough light coming out of a conventional monitor to make bright highlights look right – reflections of the sun on a car, for instance. Fixing this has traditionally been part of the job of a colourist, who must creatively apply highlight control techniques much like those we showed in figures 1 and 2 above, in order to reduce the brightness range of the image to a point where the monitor can display it without making it look flat. Something, though, has to give. Detail in those highlights simply becomes white or, at least, the brightest white the display can produce. Wouldn't it be better if we had monitors which could emit more light?

This image simulates HDR performance on your standard-dynamic-range display. The upper part of the image has reduced dynamic range, with values above around 50% luminance clipped to a limited white point.

That's the question which high dynamic range seeks to answer. Of course, it's not quite as simple as "more light"; we specifically want more range, so that we can still have reasonably dark blacks while achieving brighter peak whites. There are a number of issues to overcome, not least of which is the ability of manufacturers to make monitors that can do that, especially affordably. It's particularly difficult to arrange on-set monitoring that can do the job, not because of the high brightness requirement, but because ambient light can easily make it difficult to see the darkest parts of the picture, which is just as big a problem.

Particularly for small, portable, affordable cameras which may record relatively low-bitrate material, there's a need for the image to be recorded with lots of brightness information. Existing log modes on cameras can do that, but they're generally designed for mastering standard-dynamic-range material, which is usually distributed at 8-bit, implying 256 levels of brightness per red, green and blue channel. Because HDR material uses a wider brightness range, the difference in brightness between adjacent 8-bit values would be too big and banding would occur, so HDR is distributed using at least 10-bit (1,024-level) encoding. Ideally, we want there to be more information in the camera recording than there is in the final material, so we can keep exercising the same creative options we always have when grading modern high-range cameras to produce standard-range output.

Banding, exaggerated here, is more severe where there are larger differences in brightness between adjacent digital values, as in HDR.
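The arithmetic behind that can be sketched in a few lines of Python. This is a deliberately simplified illustration: it assumes brightness is coded linearly, and it uses assumed peak levels of 100 nits for a standard-range display and 1,000 nits for an HDR one. Real systems use non-linear curves that distribute the steps far more cleverly, and the function names here are invented for the example.

```python
# Illustrative only: assumes linear coding of brightness, which real video does not use.
# The 100 and 1,000 nit peak levels are assumed example figures.


def levels(bits):
    """Number of distinct code values available at a given bit depth."""
    return 2 ** bits


def step_size_nits(peak_nits, bits):
    """Brightness gap between adjacent code values, under the linear assumption."""
    return peak_nits / (levels(bits) - 1)


CASES = [
    ("SDR, 8-bit", 100.0, 8),
    ("HDR, 8-bit", 1000.0, 8),
    ("HDR, 10-bit", 1000.0, 10),
]

for label, peak, bits in CASES:
    print(f"{label}: {levels(bits)} levels, {step_size_nits(peak, bits):.2f} nits per step")
```

On those assumptions, stretching 8 bits over ten times the brightness range makes each step ten times larger, while moving to 10 bits shrinks the step again by roughly a factor of four.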

This means that 8-bit cameras are more-or-less unusable for HDR material. 10-bit cameras, or 10-bit recorders, are a realistic minimum; where the camera has low enough noise to make it worthwhile, even more precision is a good idea. The upside is that the techniques for shooting HDR don't necessarily have to be all that much more complicated than shooting a log mode on many common cameras – in fact, that's still the approach. The difference is that, instead of telling the monitor that we're shooting, say, Sony's S-Log3 and that we want to monitor in Rec. 709, we tell the monitor that we're shooting S-Log3 and want to monitor in one of several HDR standards. The monitor will perform appropriate processing on the image in either case, compressing highlight detail rather harshly in the case of Rec. 709 or rather less harshly in the case of HDR. Naturally, the monitor must actually be capable of higher peak brightness (while maintaining dark blacks) when displaying HDR images.

The option to use creative lookup tables remains, but the phrase "one of several HDR standards" is currently the catch. Without going into the details, several approaches have been proposed which differ significantly from each other. Some of them, such as Dolby Vision, are designed to accommodate varying capabilities of monitors, perhaps in recognition of the fact that HDR displays are difficult enough to make that there will inevitably be more and less capable ones, at least at first. These are, however, fundamentally distribution standards, to be implemented by home TVs and therefore monitors, not cameras. It might be necessary to make changes when grading to accommodate them all, but the advantage is that all of this would generally be done based on the entirely conventional recording.

It's nice to be able to monitor in HDR, of course, but well-exposed material, and film, has already been shown to make a reasonable basis for an HDR deliverable, even if it was never shot with that in mind.

HDR is, therefore, an attractive technology. It represents both an objective and subjective improvement to images and it doesn't need to encumber shoots in the way that stereo 3D did. There are concerns, of course. Excessive use of very high contrast in images can be fatiguing for the audience, in much the same way as excessive use of stereoscopy, with a similar need to resist the producer's urge to insist on using the maximum amount of a fashionable technique. The standardisation process continues and there are likely to be changes, so hardware manufacturers will need to be prompt with firmware updates to keep things in check. Nonetheless, HDR images should be available to anyone who wants them, at any level of the industry, and we'll follow upcoming equipment releases closely.
