Replay: High bitrate figures are often promised, so why do the real-world figures always fall short?

It’s quite easy to find files made by cameras – whether that’s a cheap simple camera or an expensive complex camera – which don’t seem to achieve anything like the specified bitrate. When we’re taught to look for high bitrates as an indicator of quality, that can feel a little like being shortchanged, especially when files from a “50 megabit” recording system sometimes average around 35. Most aren’t nearly that bad, but it’s a tricky subject: broadcasters are known to specify minimum bitrates for material. This stuff matters.


Arty macro title shot Mk. 1. VLC media player icon

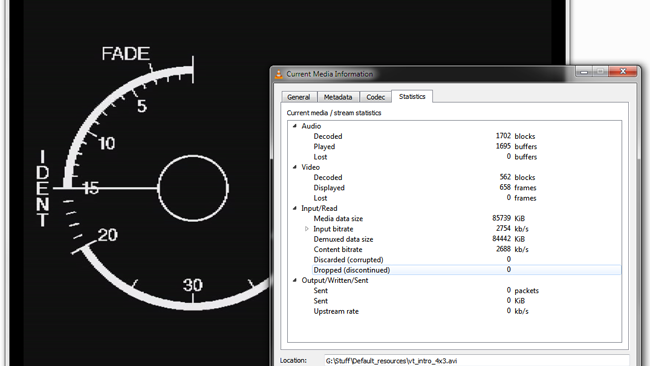

Let’s first look at how we might actually go about establishing the amount of data a piece of footage is really using. QuickTime Player will show some information in its Movie Inspector window, though VLC possibly does a better job of creating a running average of the real amount of data that is being read from the file every second. Pressing control (or command, on a Mac), shift and J will bring up the Media Information window, within which is the Statistics tab. The first thing that most people notice is that their 100-megabit camera might only be recording about 80 (the higher the nominal bitrate, the closer files seem to come to it).

This is VLC evaluating a standard-definition DV file, which should have a data rate of 25Mbps. On this simple graphical image, it's actually using a tenth of that

As so often, the reality of what’s going on here is a bit more complicated than some camera company attempting to swindle us out of a couple of dozen megabits per second.

First – and apologies if this is a bit basic, but it’s best to be clear – we’re mainly talking about megabits here, not megabytes. Megabits have a lowercase b: Mb. Megabytes are uppercase: MB. One byte is eight bits, so a megabyte is eight times as much data as a megabit. This is only complicated because file sizes are often given in megabytes, whereas data rates on wires are often given as megabits per second. This means that, if we record ten megabits of data every second into a file for ten seconds, for a total of a hundred megabits, 100Mb, we end up with a twelve and a half megabyte or 12.5MB file, because a hundred divided by eight is twelve and a half. Yes, these are awkward conventions, but they’ve become normal.
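The arithmetic above can be written out as a small sketch. The function name and the decimal convention (one megabit taken as 1,000,000 bits) are assumptions made for illustration, and container overhead and audio are ignored.

```python
BITS_PER_BYTE = 8


def file_size_megabytes(bitrate_megabits_per_s: float, seconds: float) -> float:
    """Convert a data rate in megabits per second (Mb/s) into a file size in megabytes (MB)."""
    total_megabits = bitrate_megabits_per_s * seconds
    return total_megabits / BITS_PER_BYTE


# The example from the text: 10 Mb/s recorded for 10 seconds.
print(file_size_megabytes(10, 10))  # 12.5
```

The same function gives a rough storage estimate from a nominal bitrate; since real files tend to come in under the stated figure, that estimate errs on the safe side.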

The reason that real-world bitrates often underperform a little is that video compression is not an exact science, at least not in terms of exactly how much data will be needed to represent a particular frame. The way that many codecs work is – very broadly – to reduce the data to a series of numbers. Each number represents what proportion of a series of wiggly lines should be added together to recreate the brightness graph of a series of pixels. Those numbers will normally be long decimals (0.1234567). Store those numbers with reduced precision (0.1234), which we call quantisation, and they take up less storage at the cost of reducing picture quality, which is fine. The problem is, in most video, some of them will be short numbers already, some of them may be very long, and we won’t know that until we’ve already examined all of them.
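As a rough illustration of why output size is hard to predict, the sketch below quantises two made-up sets of coefficients and measures how many bytes each needs. Everything here is an assumption for demonstration: the coefficient values are invented, and `zlib` merely stands in for the entropy coder a real codec would use.

```python
import zlib


def quantise(coefficients, step):
    """Reduce precision by mapping each coefficient to a whole number of steps."""
    return [round(c / step) for c in coefficients]


def encoded_size_bytes(levels):
    """Stand-in for a codec's entropy coder: pack the levels and zlib-compress them."""
    raw = b"".join(level.to_bytes(2, "big", signed=True) for level in levels)
    return len(zlib.compress(raw))


# A flat block (one meaningful coefficient) and a busy block (64 different ones).
flat_block = [0.5] + [0.0] * 63
busy_block = [((i * 37) % 101 - 50) / 100 for i in range(64)]

for name, block in (("flat", flat_block), ("busy", busy_block)):
    for step in (0.01, 0.25):
        size = encoded_size_bytes(quantise(block, step))
        print(f"{name} block, step {step}: {size} bytes")
```

The same quantiser step yields very different sizes for the two blocks, and the size is only known once the work has been done, which is the difficulty the paragraph above describes.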

In short, we need to make important decisions about how much to compress the frame, or at least a largish chunk of the frame, before we compress it. Add the idea of inter-frame compression, where whole groups of frames must be considered at once, and we might have to make some far-reaching decision on frame one, without any idea of what’s going to happen at frame 15.

This data link has hard limits on what it can handle. If the codec overshoots, that's a problem, and it can't buffer up too much to smooth things out as it's a live link

The consequences of having too little data – undershooting – are a small compromise in picture quality. The consequences of overshooting, on the other hand, can be dire. Many devices will buffer up at least a few frames in a row before writing the data to the flash card, so if one frame comes out a little larger than intended, that can sometimes be handled. On the other hand, if frames are consistently even slightly oversize, serious problems can occur. In extremis, the recording medium – a flash card, in most cases – may not be able to write all the data in time and frames may be dropped. At the very least, the file will be out of specification and other devices may have problems reading it.
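The difference between one oversize frame and a steady overshoot can be shown with a toy buffer model. This is only a sketch: the drain rate, buffer capacity and frame sizes are hypothetical units, not figures from any real camera.

```python
def first_overflow(frame_sizes, drain_per_frame, buffer_capacity):
    """Return the index of the first frame that overflows the buffer, or None."""
    fill = 0
    for index, size in enumerate(frame_sizes):
        # Each frame interval the encoder adds `size` and the card writes `drain_per_frame`.
        fill = max(0, fill + size - drain_per_frame)
        if fill > buffer_capacity:
            return index
    return None


drain = 100     # hypothetical: units the card can write per frame interval
capacity = 300  # hypothetical: units of buffer memory

one_spike = [90] * 50 + [250] + [90] * 50  # a single large frame among undersized ones
slightly_over = [105] * 200                # every frame just 5% over the drain rate

print(first_overflow(one_spike, drain, capacity))      # None
print(first_overflow(slightly_over, drain, capacity))  # 60
```

The single spike is absorbed and drained away, while frames that are only 5% too large fill the buffer after 61 frames. That asymmetry is why aiming low is the safer choice.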

This thing only goes so fast

So, the pressure is very much to aim under, to the point where it’s probably fairly normal for video codecs to actually use noticeably less data than advertised. This applies to some more than others, of course. Video codecs that don’t need to work in realtime, such as those operating on a workstation during the preparation of some video for distribution, can be more careful. If a workstation is compressing a frame or a group of frames and undershoots significantly, it can go back and try again, using less severe compression. If it overshoots, again, it can go back and have another try. This is what is often referred to as multiple-pass encoding. Usually, two passes are used, with one to establish just how complex various parts of the video are and one to do the compression, but it’s quite possible to do more than two if there’s a need for really accurate bitrate.
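A minimal sketch of the two-pass idea, assuming the first pass has already produced a complexity score for each frame. The scores, the budget and the function name are hypothetical, and real encoders use far more elaborate rate-control models than this proportional split.

```python
def two_pass_allocation(complexities, total_budget_bits):
    """Share a fixed bit budget between frames in proportion to measured complexity."""
    total_complexity = sum(complexities)
    if total_complexity <= 0:
        raise ValueError("complexities must sum to a positive value")
    return [total_budget_bits * c / total_complexity for c in complexities]


# Pass 1 (assumed already done): per-frame complexity scores.
complexities = [1.0, 1.0, 4.0, 2.0]
budget = 8_000_000  # total bits available for these four frames

# Pass 2: knowing the whole sequence, the budget can be used in full.
allocation = two_pass_allocation(complexities, budget)
print(allocation)  # [1000000.0, 1000000.0, 4000000.0, 2000000.0]
```

Because the whole sequence is known before any bits are committed, the allocation adds up to the budget exactly, something a realtime encoder looking only at the current frame cannot promise.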

Calculate storage requirements based on bitrate and you'll overshoot by, well, actually a pretty healthy amount, so keep doing that.

It’s theoretically possible that this sort of multi-pass encoding is also being done in cameras – that’s the sort of detail that manufacturers don’t generally discuss – but it would demand a lot more hardware resources to potentially do things several times. The camera (or something like an external or rack-mounted data recorder) is a realtime application and must keep up with incoming frames, so it can’t, beyond a certain point, keep redoing things. That sort of extra performance implies extra expense, size, weight and power, and, frankly, it probably isn’t being done that often, especially on lower-end cameras where all those factors are limited. Those are also the cameras most likely to be using inter-frame, long-GOP compression, where the task is most complex.

Ultimately, this is one facet of the fact that some codecs are better than others, and we’re not just talking about the fact that, say, H.265 produces better pictures for the bitrate than the MPEG-1 used on an early 90s video CD. Different implementations of the same codec can vary wildly, from the implementation of H.264 that’s used in cameras to the one that’s used on YouTube. In the end, bitrates are a target, not a guarantee.
