Replay: Restoring a classic series such as Star Trek: The Next Generation or Deep Space Nine is far from easy. But that's exactly what one fan set out to do, with the help of AI-based software.

For decades, drama was shot on 35mm and finished in standard definition. For an idea of how bad that could be, consider the earliest DVDs, which were often made from the same 4:3 Betacam SP masters as VHS tapes. These contrast hugely with current discs, which are generally made in widescreen and derived from high-res film scans or camera-original files. So, drama was often shot on 35mm and finished on a format that wasn't even particularly good for standard definition, especially when NTSC material made with 3:2 pulldown was then standards-converted for 50Hz markets with comparatively primitive 1980s converters. It was rough.
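For anyone who hasn't met 3:2 pulldown, here is a minimal sketch of the idea in Python. It assumes the common cadence in which film frames are alternately held for two and three video fields; the function name `pulldown_32` and the letter labels are purely illustrative.

```python
from itertools import cycle


def pulldown_32(film_frames):
    """Map 24fps film frames onto interlaced video frames using a 2:3 cadence.

    Each film frame is held for two or three fields in turn, so four film
    frames fill ten fields, which is five interlaced video frames.
    Returns a list of (top_field_source, bottom_field_source) pairs.
    """
    fields = []
    for frame, repeats in zip(film_frames, cycle([2, 3])):
        fields.extend([frame] * repeats)
    # Pair consecutive fields into interlaced frames; drop a trailing odd field.
    return [(fields[i], fields[i + 1]) for i in range(0, len(fields) - 1, 2)]


video = pulldown_32(["A", "B", "C", "D"])
# video is [("A","A"), ("B","B"), ("B","C"), ("C","D"), ("D","D")]
```

Two of the five resulting video frames mix fields from different film frames, which is part of why a later standards conversion to 50Hz had so much to untangle.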

However, one of the best things about having shot a production on 35mm film is that it's possible to go back and rescan that film at higher resolution for more modern distribution.

Going back and rescanning, say, MacGyver is one thing, and season one has already been done. Doing that to Star Trek, on the other hand, is a herculean task because so much of it involved visual effects. These came from a variety of sources: graphics workstations to do bluescreen composites and rayguns, model work for the spaceships, and increasing amounts of CG. The Next Generation series more or less epitomised the period during which that transition occurred. Even where film elements exist for models and pyrotechnics, they're probably easier to lose than the first unit work.

As far as the computer generated stuff goes, it's quite possible that all of the project files, textures and models exist, nothing's succumbed to bit-rot, everything's findable and remains compatible with modern versions of the software. Let's leave an assessment of how likely that is as an exercise for the reader.

Upscaling software

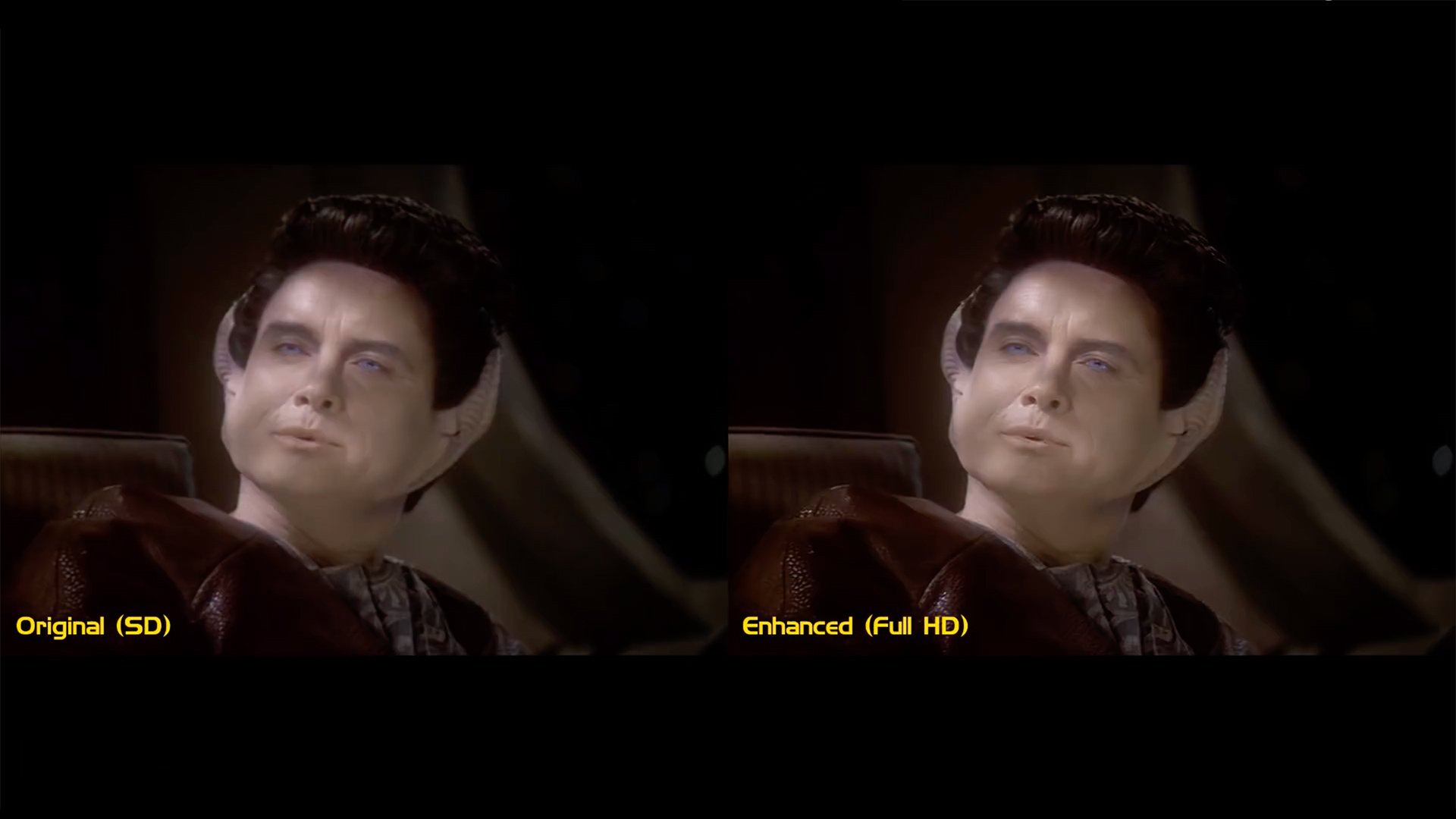

While all of that work has been done for Star Trek: The Next Generation (supervised by the multi-talented Michael Okuda) it seems unlikely that Deep Space Nine or Voyager will get the same treatment. All those problems make it an expensive process. It's perhaps fitting, then, that Star Trek fan Stefan Rumen chose Deep Space Nine as a target to try out some upscaling software. Ordinarily, this wouldn't be of much interest; every TV will scale things up, with various degrees of success, but it's always a poor seventh-best to a rescan of the original camera negative.

Rumen, however, chose Topaz Labs' Gigapixel, which uses machine learning in hopes of doing a much better job. This is at least theoretically legitimate: the software is intended to learn what the real world looks like and use that knowledge to retouch the frame with genuinely new data. It might not accurately represent what was actually in front of the camera, but it should look plausible as a picture of reality. Ideally, the thing knows what the real world looks like and is taking sensible decisions about what detail to add.
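To see why conventional scaling can't do this, consider a minimal sketch of linear interpolation on a single row of pixels. This is a generic illustration, not what any particular TV or Gigapixel does, and the function name `upscale_row_linear` is hypothetical. For simplicity it inserts `factor - 1` new samples between each pair of originals, so the output has `(n - 1) * factor + 1` samples.

```python
def upscale_row_linear(row, factor):
    """Upscale one row of pixel values by linear interpolation.

    Every output value is a weighted average of its two nearest source
    pixels, so nothing outside the range of the original data can appear.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    if len(row) < 2:
        return [float(v) for v in row]
    out = []
    for i in range((len(row) - 1) * factor + 1):
        left = i // factor                    # source pixel at or before this sample
        right = min(left + 1, len(row) - 1)   # next source pixel, clamped at the end
        frac = (i % factor) / factor          # fractional distance from left to right
        out.append(row[left] * (1 - frac) + row[right] * frac)
    return out


hard_edge = [0, 0, 10, 10]
soft_edge = upscale_row_linear(hard_edge, 2)
# soft_edge is [0.0, 0.0, 0.0, 5.0, 10.0, 10.0, 10.0]
```

The hard edge becomes a ramp: more pixels, but no more information. A learned upscaler is instead free to guess that the edge was sharp and draw it that way, which is both its appeal and its risk.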

And yes, it does probably work better than any previous attempt at scaling. The homepage has a useful before-and-after comparison slider which strongly suggests that almost any of the scaling algorithms we currently use are outperformed by Gigapixel, although they will presumably have picked an example image that works well and there are some small artifacts. Does it look like an original photograph at the higher resolution? No, it doesn't. But does it look better than the prior art? Yes, it does.

There is a further tripwire to dodge, though, for any machine learning algorithm intended to work on moving pictures – frame to frame consistency. There's not much point in making a brilliant set of decisions for frame x, then making entirely different (though still plausible) decisions for frame x+1; any one frame might look great but the animated result might be a flickering, unwatchable mess. Happily, Gigapixel does a good job here, judiciously adding detail to the image in a way that animates believably.
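One crude way to put a number on that flicker is to average the pixel-to-pixel change between consecutive frames of a locked-off shot, where nothing in the scene should be moving. The sketch below is only an illustration of the problem and says nothing about how Gigapixel works internally; the function name `temporal_flicker` is hypothetical, and frames are assumed to be equally sized nested lists of brightness values.

```python
def temporal_flicker(frames):
    """Mean absolute per-pixel change between consecutive frames.

    On a static shot, a temporally consistent upscaler should score close
    to zero; one that invents different detail on every frame will not.
    Real motion also counts as change, so this only means much on
    shots where the picture content is not moving.
    """
    if len(frames) < 2:
        return 0.0
    total = 0.0
    count = 0
    for previous, current in zip(frames, frames[1:]):
        for row_prev, row_cur in zip(previous, current):
            for p, c in zip(row_prev, row_cur):
                total += abs(c - p)
                count += 1
    return total / count if count else 0.0


steady = [[[1, 2], [3, 4]]] * 3                  # identical detail every frame
shimmer = [[[0, 0], [0, 0]], [[10, 10], [10, 10]], [[0, 0], [0, 0]]]
# temporal_flicker(steady) is 0.0, temporal_flicker(shimmer) is 10.0
```

Each frame of the shimmering sequence could look perfectly plausible as a still; it's only the comparison across time that gives the game away.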

Deep Space Nine probably isn't a brilliant vehicle for this sort of thing, having been shot principally on 400-speed stock with prominent use of mist filters to produce a futuristic glow. As such, it wasn't shot with the intention that it be blazingly sharp to begin with, a problem then compounded by the standard-def finish. Equally, Gigapixel isn't perfect, and could reasonably be accused of adding a suggestion of the sort of artificial sharpness that a more naïve algorithm might use. Still, this is an early example of AI in a shipping product, and it offers at least something of a solution to the issue of reassembling complex shows.

This sort of thing has all kinds of applications in the world of making old stuff look more like new stuff. The reality is, though, that once it becomes stable and well-understood, it'll probably just start appearing in televisions, and since it's likely to make one TV look a lot better than the other in the showroom, there'll be no stopping manufacturers doing it. How far that could go, in terms of distributing low-res images and viewing beautifully upscaled ones, is more or less without theoretical limit.
