Put simply, the Bayer method of producing an image from a single sensor has been the backbone of video imaging technology in the current age of big-chip cameras. But there are new technologies that could define a new period of high-performance imaging chips and banish the limitations of the Bayer system to the past.

It's worth being clear that this article discusses some very forward-looking technology which isn't likely to be available for a couple of years and probably won't hit cinema cameras for a bit longer than that. Still, it's a development that should work particularly well for large, high dynamic range sensors, and that's just the sort of thing we're interested in. What's really important about it is that this is, for the first time in a long time, an invention that could get us away from Bryce Bayer's wonderful but ultimately slightly compromised adjacent pixels of red, green and blue.

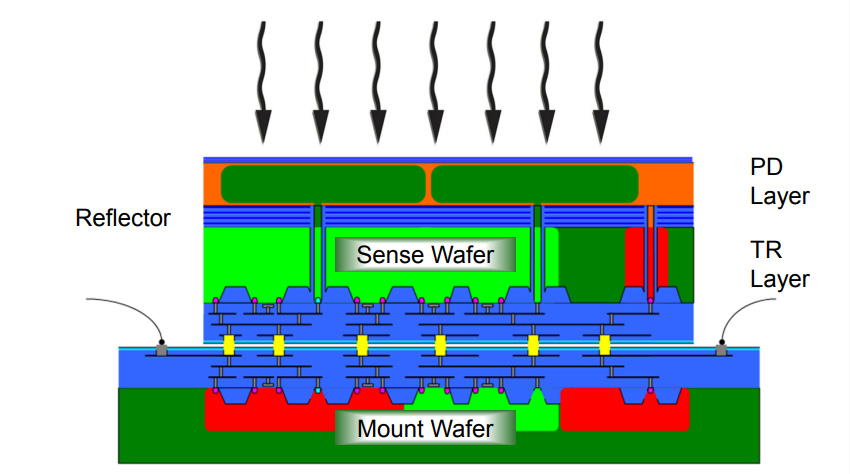

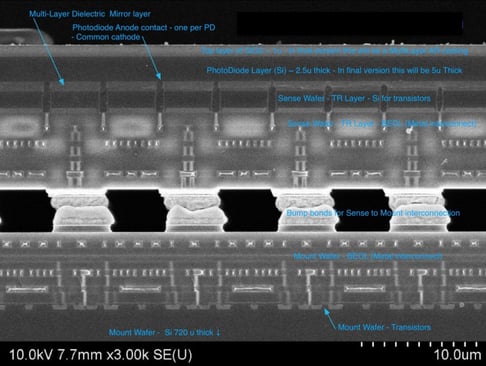

This image shows a cross-section of Lumiense's imager, with the bonds used to connect the layers together visible in the middle. Bonds from sensors to electronics are also visible.

Sensors that have the RGB pixels in a single stack, as opposed to side by side like Bayer, have been suggested before. Foveon (which was bought in 2008 by Sigma) has been selling its X3 sensor technology for some time, but it's not perfect. The technology relies on the idea that lower energy (that is, redder) light penetrates silicon to a greater depth than higher energy (that is, bluer) light, so that stacking up three photodiodes at different depths produces a pixel that can see in colour.

That's good, because it avoids the complexities of making up a colour image from a scattering of red, green and blue pixels, as with Bayer sensors. It's also bad, because the principle that lower energy light goes deeper is not absolute: each layer still absorbs some light of every colour, and there's still a need for some post-processing that creates noise and colour precision problems for sensors using Foveon's technology. Neither approach is perfect.
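To get a feel for why depth alone separates colours only loosely, here is a minimal sketch using simple exponential (Beer-Lambert) absorption, in which longer wavelengths have longer absorption depths in silicon. The absorption depths are round, illustrative figures and the layer boundaries are entirely hypothetical; none of these numbers comes from Foveon or any real sensor.

```python
import math

# Illustrative 1/e absorption depths in silicon, in micrometres.
# Round-number assumptions for this sketch, not measured sensor data.
ABSORPTION_DEPTH_UM = {"blue": 0.4, "green": 1.5, "red": 3.3}

# Hypothetical layer boundaries (top, bottom) in micrometres.
LAYERS_UM = {"top": (0.0, 0.4), "middle": (0.4, 1.5), "bottom": (1.5, 5.0)}


def fraction_absorbed(depth_um, z_top, z_bottom):
    """Fraction of incoming light absorbed between two depths (Beer-Lambert)."""
    return math.exp(-z_top / depth_um) - math.exp(-z_bottom / depth_um)


def layer_response():
    """Return {colour: {layer: fraction absorbed}} for every colour and layer."""
    return {
        colour: {
            layer: fraction_absorbed(depth, top, bottom)
            for layer, (top, bottom) in LAYERS_UM.items()
        }
        for colour, depth in ABSORPTION_DEPTH_UM.items()
    }


if __name__ == "__main__":
    for colour, row in layer_response().items():
        cells = "  ".join(f"{layer}: {value:.2f}" for layer, value in row.items())
        print(f"{colour:>5}  {cells}")
```

Under these assumed numbers each colour is absorbed most strongly in "its" layer, but the top layer still catches roughly a tenth of the red light and the middle layer about a third of the blue. That overlap is what the post-processing has to untangle.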

The latest technology

The new technology comes from Lumiense Photonics, whose earlier technology we covered all the way back in 2013. Back then, the company was interested in techniques for stacking layers of silicon on top of one another. Processing electronics can then be made using well-established manufacturing processes, and attached to the back of the more difficult light-sensitive layer; the fineness of the connections between layers is a new advancement. Better yet, with the electronics elsewhere, the front face of the sensor can be more completely covered with the light-sensitive parts, as opposed to using some of the available space for electronics rather than photodiodes.

An example sensor with one layer of photosensitive parts on the front, and the electronics behind

Multi-layered computer chips of all kinds are now more common than they were in 2013, with stacks of silicon particularly being used to create high-density memory. One advantage of stacking in an image sensor is that metal layers, which are opaque, can be placed between the light-sensitive parts on top and the lower layers which store and process the results. All electronics are light-sensitive to some degree and if we want a global shutter, we need somewhere to do storage and processing that isn't exposed to light; this technology could create better global shutters, with fewer compromises.

Better yet, the light-sensitive photodiodes can be stacked one on top of the other, with coloured filters placed between them. That might create much better separation between the photodiodes intended to detect red, green and blue light, and possibly an escape from Bayer arrays. What's particularly important is that the filters are dichroic mirrors – that is, they're not just absorbing light they don't pass, like a lighting gel or the filters on a Bayer array.

Instead, they're reflecting light they don't pass, so that (for instance) the green-detecting photodiode is in front of the green mirror. Any green light it doesn't detect hits the mirror and is reflected back through the photodiode again, so it has a second chance at detecting it. This is good – very good – because it allows for the sensor to waste far less light than Bayer-patterned devices. It might also make for better sensitivity to fine variations in hue or lower colour noise.
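The "second chance" can be put into rough numbers. The sketch below is a deliberately simple model, not anything published by Lumiense: it assumes the photodiode absorbs the same fraction of light on each pass, considers only one reflection, and ignores every other loss. The function name and parameters are made up for illustration.

```python
def detected_fraction(single_pass, mirror_reflectance):
    """Fraction of in-band light detected when a mirror returns the remainder.

    single_pass: fraction absorbed on one trip through the photodiode (0..1).
    mirror_reflectance: fraction of the leftover light reflected back (0..1).
    """
    leftover = 1.0 - single_pass
    second_pass = leftover * mirror_reflectance * single_pass
    return single_pass + second_pass


if __name__ == "__main__":
    for single_pass in (0.3, 0.5, 0.7):
        total = detected_fraction(single_pass, mirror_reflectance=0.9)
        print(f"single pass {single_pass:.1f} -> with mirror {total:.3f}")
```

In this model the mirror helps most when the photodiode is thin and catches only a modest share of the light first time around, which is exactly the situation in a stack of several shallow layers.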

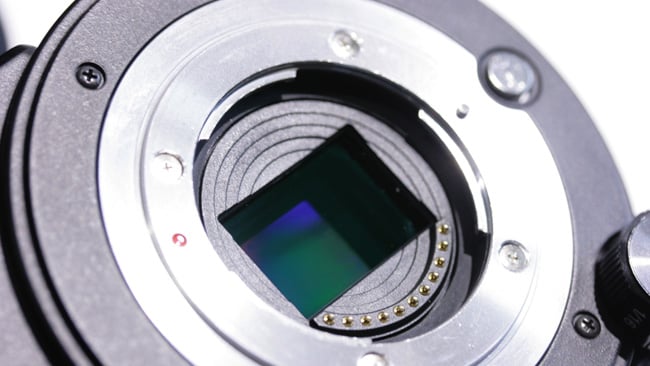

It may be a while before we see Lumiense's new technology behind a lens mount (here we see an Altasens sensor in the GY-LS300)

Bayer limitations

Colour noise and imprecise hue are problems Bayer sensors can sometimes have, because the manufacturer might choose pale, unsaturated filters so as to reduce the amount of light lost and increase sensitivity. That makes for an unsaturated picture which must then be mathematically processed, much like increasing the “saturation” slider in Photoshop. That processing amplifies noise and may lead to inaccurate colour. In Lumiense's new approach, less light is lost, so saturated filters can be used without affecting sensitivity so much.
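To see why boosting saturation after the fact costs noise, consider a generic linear saturation adjustment that pushes each channel away from grey. This is a sketch of the general idea, not Photoshop's algorithm or any camera's actual colour pipeline; it uses the Rec. 709 luma weights to define grey and assumes equal, independent noise on the three channels.

```python
import math

# Rec. 709 luma weights, used here only to define "grey" for the sketch.
LUMA = (0.2126, 0.7152, 0.0722)


def saturation_matrix(s):
    """3x3 matrix computing out = luma + s * (in - luma) for each channel."""
    return [
        [(s if row == col else 0.0) + (1.0 - s) * LUMA[col] for col in range(3)]
        for row in range(3)
    ]


def noise_gain(matrix):
    """Per-channel noise gain, assuming equal, independent noise on R, G and B."""
    return [math.sqrt(sum(c * c for c in row)) for row in matrix]


if __name__ == "__main__":
    for s in (1.0, 1.5, 2.0):
        gains = ", ".join(f"{g:.2f}" for g in noise_gain(saturation_matrix(s)))
        print(f"saturation x{s:.1f}: noise gain (R, G, B) = {gains}")
```

Each row of the matrix sums to one, so greys are left alone, but once the saturation factor goes above one the coefficients grow and so does the noise in every channel. The paler the filters, the bigger the boost needed to get back to a natural-looking picture.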

Recently we've been able to keep increasing resolution until the problems with Bayer arrays are so small that they're not really very objectionable, but they're still there: there is noise, particularly colour noise, in modern cameras which might be improved by new techniques like this. Filling more of the space with photodiodes improves sensitivity, noise and dynamic range, and helps avoid aliasing. Aliasing arises because, if there are big gaps between the pixels, fine details may fall into those gaps and be partly lost. If that detail lies across the sensor at an angle, it may be intermittently lost, creating a stair-stepped appearance. Correcting this problem (antialiasing) can only be done by blurring the image, so that the smallest details are no longer small enough to fit between the pixels. Make the gap between the pixels smaller, and the need for that is reduced, leading to sharper pictures with exactly the same number of pixels to store.
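The link between gaps and aliasing can be sketched with the standard textbook model of a pixel as an ideal box-shaped aperture, whose contrast response is a sinc function of spatial frequency. This is an idealisation chosen for illustration (real pixels, microlenses and optical low-pass filters behave less tidily), and the function name and fill values are hypothetical.

```python
import math


def aperture_mtf(frequency, fill):
    """Contrast passed by an ideal box-shaped pixel aperture.

    frequency: spatial frequency in cycles per pixel pitch (Nyquist is 0.5).
    fill: linear fill factor, aperture width divided by pixel pitch (0..1].
    """
    x = math.pi * frequency * fill
    return 1.0 if x == 0 else abs(math.sin(x) / x)


if __name__ == "__main__":
    # Detail at 1 cycle per pixel is beyond Nyquist and can only show up as aliasing.
    for fill in (1.0, 0.75, 0.5):
        passed = aperture_mtf(1.0, fill)
        print(f"fill {fill:.2f}: contrast passed at 1 cycle/pixel = {passed:.2f}")
```

In this model a pixel that spans the full pitch passes essentially none of the detail at one cycle per pixel, while a pixel covering only half the pitch passes well over half of it, all of which would appear as false detail. The wider aperture acts as a small built-in blur, which is why less separate antialiasing is needed.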

All of this might well be very, very nice. We're far from the point of having any idea how this technology might eventually start to appear on the show floor at NAB – a manufacturer would need to commission a large, high-resolution sensor design using the technique and build a camera around it – but it's certainly on the one-to-watch list. Bayer's technique has worked well for us for decades, but probably even Bryce himself would have seen the value in an even better approach.

Images courtesy the company, with thanks to David Gilblom at Alternative Vision Corporation.

Header image: Shutterstock - atdigit

