When we think of camera sensitivity, it seems logical to assume that brighter is better. But is this always the case?

A little while ago, someone posted a video online proposing that a camera that produces brighter images isn't necessarily more sensitive. It's an interesting claim and, from a very specific point of view and under very specific circumstances, it's sort of correct. Comfortingly, it's also wrong from a more general point of view. Either way, it tells us something about cameras and how picture engineering works in electronic cinematography.

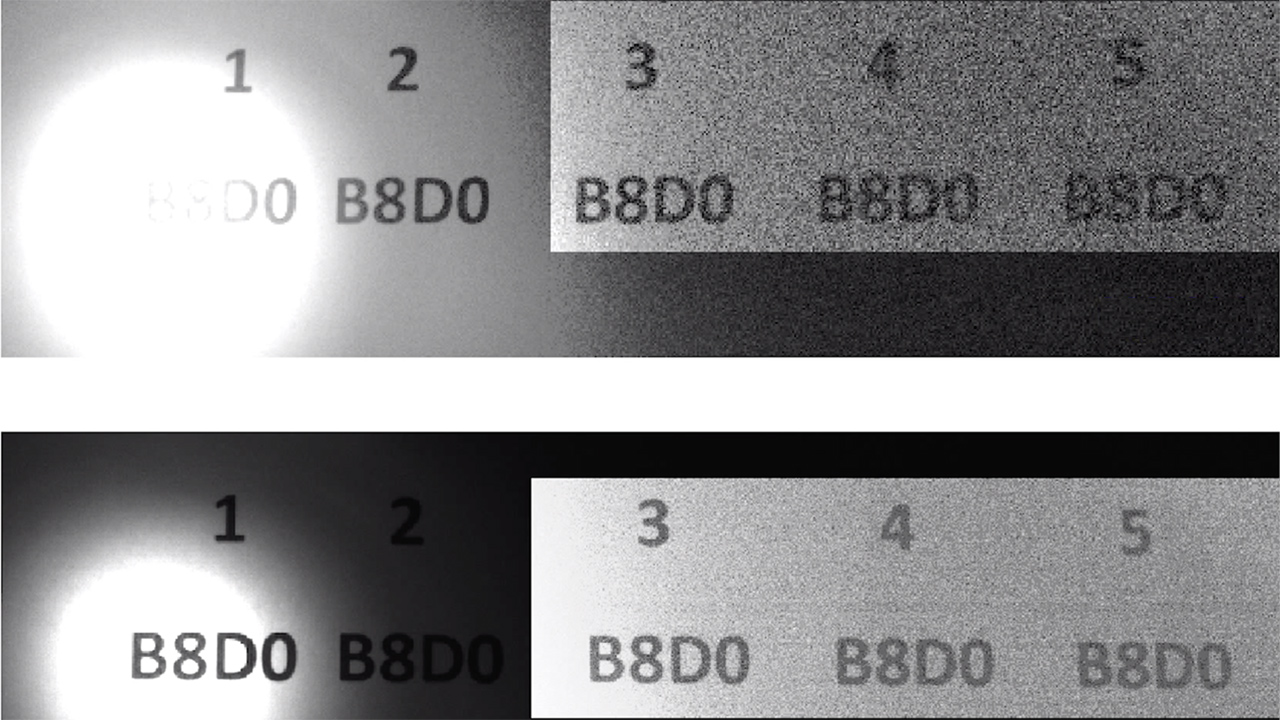

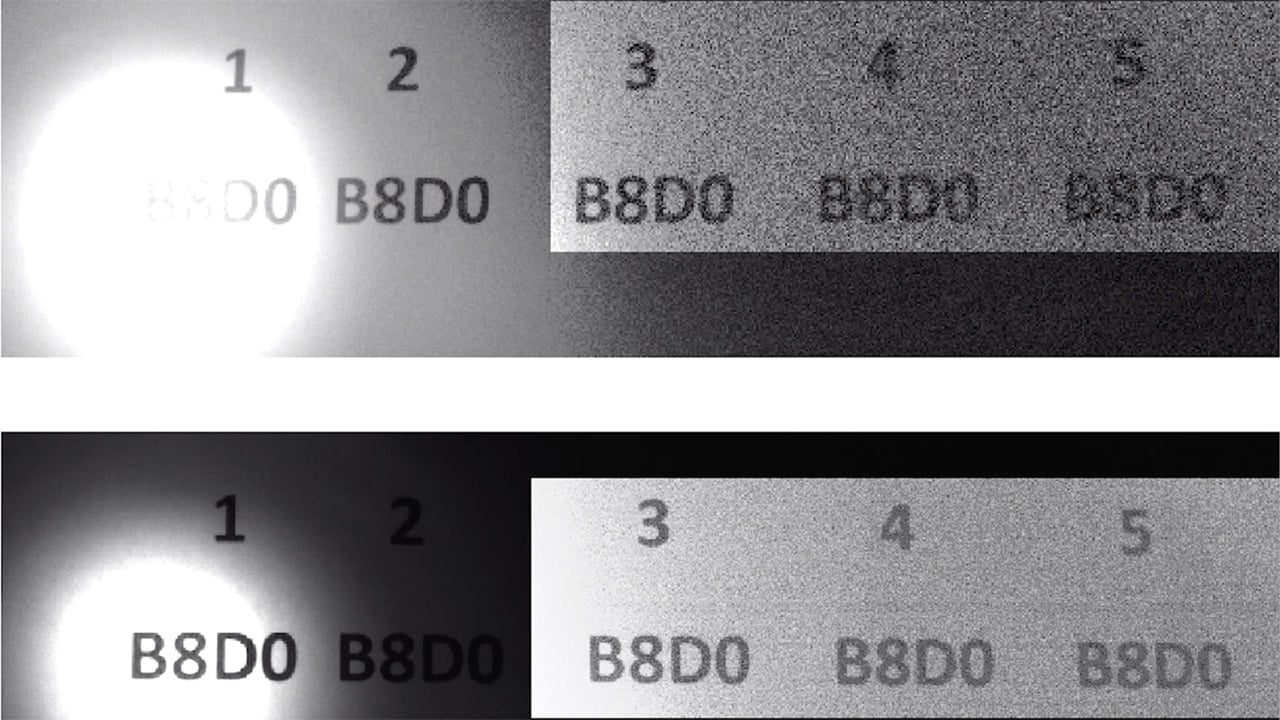

Here's the video in question, as cited in a paper promoting Sony machine-vision cameras:

How can a more sensitive camera be darker?

First things first: how do we arrive at the conclusion that a more sensitive camera might create a dimmer picture? Let's define what we mean by a “more sensitive camera.” Most people would agree that if we have a sensor with bigger photosites, so that each one is more likely to have photons hit it during the exposure, then that camera is more sensitive. It's not quite as simple as that. A sensor of a given size would have more sites if they were smaller, so, give or take the gaps between the sites, the small-site camera would be about as likely to capture a given photon somewhere on the sensor, even though each individual photosite might be considered less sensitive. Do we care about the sensitivity of an individual site or of the camera as a whole?

It's something of a semantic argument, but we'll stick with the idea that the big-site camera is more sensitive for now.

Larger photosites = larger light gathering capacity

The thing is, larger photosites can also hold more charge than smaller ones before they saturate, so they have a larger full-well capacity. If the big-site camera can store 100 electrons per site, we need 50 electrons to make it read 50%. If the small-site camera can only store 50 electrons per site, we only need 25 electrons to make it read 50%. (Real cameras capture vastly more than this.) In either case, the camera is outputting a level of 50%, say 128 on an 8-bit monitor. But the camera with the larger photosites, the one most people would agree is more sensitive, needs more light to get to 50% capacity than the one with the smaller photosites.
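The arithmetic above can be sketched in a few lines of Python. This is only an illustration: the 100 and 50 electron capacities come from the text, while the assumption that both sites collect the same 25 electrons, and the helper names `fill_fraction` and `to_8bit`, are ours.

```python
def fill_fraction(electrons: float, full_well: float) -> float:
    """Fraction of a photosite's capacity that is filled, clipped at 1.0."""
    return min(electrons / full_well, 1.0)


def to_8bit(fraction: float) -> int:
    """Map a 0..1 fraction straight to a 0..255 code value (no gamma)."""
    return int(fraction * 255 + 0.5)


BIG_FULL_WELL = 100   # electrons per site, figure from the text
SMALL_FULL_WELL = 50  # electrons per site, figure from the text

# Assumption for illustration: each site collects the same 25 electrons.
electrons = 25

big_level = to_8bit(fill_fraction(electrons, BIG_FULL_WELL))
small_level = to_8bit(fill_fraction(electrons, SMALL_FULL_WELL))

print(big_level, small_level)  # 64 128
```

With the same charge in each site, the big-site chip reads a quarter of full scale while the small-site chip reads half, which is the sense in which the "more sensitive" sensor looks darker.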

So the more sensitive camera produces a darker picture under the same light.

How this works with real cameras

Clearly, that's not the case with practical cameras in the real world; we would expect two different cinema cameras, or two digital stills cameras, to produce a reasonably similar picture under the same lighting. But the behaviour of the sensors in those cameras is fixed. They do what they do when light hits them and they produce an output accordingly. At the sensor level, the claim is true: a “more sensitive” camera, for some value of “more sensitive,” does produce a lower signal level as a proportion of its maximum. But that maximum is much higher, and the absolute signal coming out of the bigger sites is larger than that from the smaller sites. More photons means more electrons means, literally, more volts.

Raw data

As we know from raw recording, raw sensor data isn't viewable without processing. One of the first things done to raw data in a camera is processing that makes the camera behave reasonably with respect to its ISO setting. With appropriate lighting, metered to the selected ISO, an 18% grey card should be shown as a grey level of about 119, 119, 119 on an 8-bit sRGB display. The big-site chip will read it out as much less than 50% of its own maximum signal level. The small-site chip might get it closer to halfway, but the point is they're different: the two sensors will need different amounts of processing to simulate the same effective ISO. The big-site chip needs less amplification than the small-site chip, so the big-site chip is less noisy and our perception is that we can increase the amplification more before the picture becomes unacceptably grainy. So, the big-site chip seems, to us as photographers, more sensitive.
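To make the amplification argument concrete, here is a rough sketch with invented numbers. The electron counts, the read noise figure and the output target are all hypothetical, and the noise model (photon shot noise plus a fixed read noise) is a simplification rather than a description of any particular camera.

```python
import math

READ_NOISE = 2.0        # electrons RMS, hypothetical, same for both chips
TARGET_OUTPUT = 1000.0  # arbitrary output units for the grey card


def gain_for_target(signal_electrons: float) -> float:
    """Amplification needed to bring the grey-card signal to the target."""
    return TARGET_OUTPUT / signal_electrons


def snr(signal_electrons: float) -> float:
    """Signal-to-noise ratio with photon shot noise plus read noise."""
    noise = math.sqrt(signal_electrons + READ_NOISE ** 2)
    return signal_electrons / noise


big_signal = 400.0    # electrons from the grey card, hypothetical
small_signal = 100.0  # electrons from the grey card, hypothetical

for name, signal in (("big-site", big_signal), ("small-site", small_signal)):
    print(f"{name}: gain x{gain_for_target(signal):.1f}, SNR {snr(signal):.1f}")
```

Gain scales signal and noise together, so it doesn't change the ratio. The big-site chip simply starts with a cleaner signal and needs less gain to reach the same output level, leaving more room to push the amplification before grain becomes objectionable.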

By the way, the mid-grey point in sRGB is not 128 because the relationship between the signal level and the light coming out of the monitor is not completely linear. This is an example of an “electro-optical transfer function,” which is a bit outside the scope of this article but we can discuss in the future.
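For the curious, the sRGB curve is simple enough to try out. The sketch below uses the standard piecewise sRGB formulas; the function names are ours. Encoding exactly 18% linear light gives a code value of 118, within a step of the figure usually quoted (published values vary slightly with rounding and with the exact grey reference used), while a code value of 128 corresponds to roughly 21.6% of full-scale light rather than 50%.

```python
def srgb_encode(linear: float) -> float:
    """Linear light (0..1) to an sRGB-encoded signal (0..1)."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def srgb_decode(encoded: float) -> float:
    """sRGB-encoded signal (0..1) back to linear light (0..1)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


grey_code = round(srgb_encode(0.18) * 255)  # 18% grey card
half_code_light = srgb_decode(128 / 255)    # light shown for code 128

print(grey_code)                 # 118
print(f"{half_code_light:.3f}")  # 0.216
```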

No such thing as electronic ISO

A purist, such as an electronics engineer, would say that digital sensors don't really have ISO. Modern cameras simulate ISO, and the idea that a bigger photosite creates a brighter picture comes from the assumptions of photographers. ISO ratings on digital cameras effectively simulate mathematics that was devised to characterise, very roughly, the behaviour of photochemical film. Imposing that on a digital camera doesn't make much technical sense, which is why the machine vision people don't generally think in ISO terms. ISO is, however, a pretty convenient approach for photographers and cinematographers, which is why we tend to assume that more sensitive cameras make brighter images.

But, as we've seen, there are some underlying realities which make a lot of this into a rather fuzzy matter of opinion.
