Do you want chiplets with that? How specialist modules and other innovations are revolutionising computer performance all the way down to the low end.

Sometimes I read a headline in a technology publication that makes me sit up in disbelief. Last week I saw such an example, and it was truly astonishing.

Remember the Raspberry Pi? It was - and still is - a small board about the size of a credit card, festooned with I/O ports and fully equipped to work as a self-sufficient computer once you add a keyboard, mouse, monitor and an operating system.

I've often been surprised by the ingenuity Raspberry Pi users show in building quite fully featured applications for the diminutive device, and by the size of the community developing for it.

The Pi has been through several design iterations and remains a remarkably useful computer for a surprisingly low price.

So, when I read that you can now run a software emulation of Yamaha's legendary DX7 synth - the backdrop to so many '80s hits - on a Raspberry Pi, I was intrigued but not surprised. This wouldn't be the first time the Pi had hosted a virtual synth.

But when I read that the tiny device could run not just one but eight independent instances of a DX7, I started to wonder how that was possible. I'll tell you in a minute, but first I want to make a broader point that explains a lot of what we're seeing now, and what we're likely to see over the next few years, in computing and media technology.

Circumventing Moore's law

We're all accustomed to the idea that Moore's law is on its way out. In "traditional" terms, that's true: for decades, shrinking components let chipmakers fit roughly twice as much processing power into the same space every 18 months to two years, but that steady doubling from miniaturisation alone petered out a few years ago.
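To put the classic doubling in numbers: if a chip's transistor count (or, loosely, its performance) doubles every period T, then after t years it has grown by a factor of 2^(t/T). The 18-month and two-year periods below are the commonly quoted figures, used here purely as an illustration rather than as data about any particular chip.

```latex
N(t) = N_0 \cdot 2^{t/T}

% T = 2 years,   t = 10 years:  N(10)/N_0 = 2^{10/2}   = 2^{5} = 32
% T = 1.5 years, t = 10 years:  N(10)/N_0 = 2^{10/1.5} \approx 2^{6.67} \approx 102
```

A decade of that compounding is what the industry got used to, which is why losing it from shrinking alone matters so much.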

But if you zoom out from that framework and look at the wider picture, you'll see that computer chips are still getting faster, and in some fields, like AI, they're actually beating Moore's law by some margin.

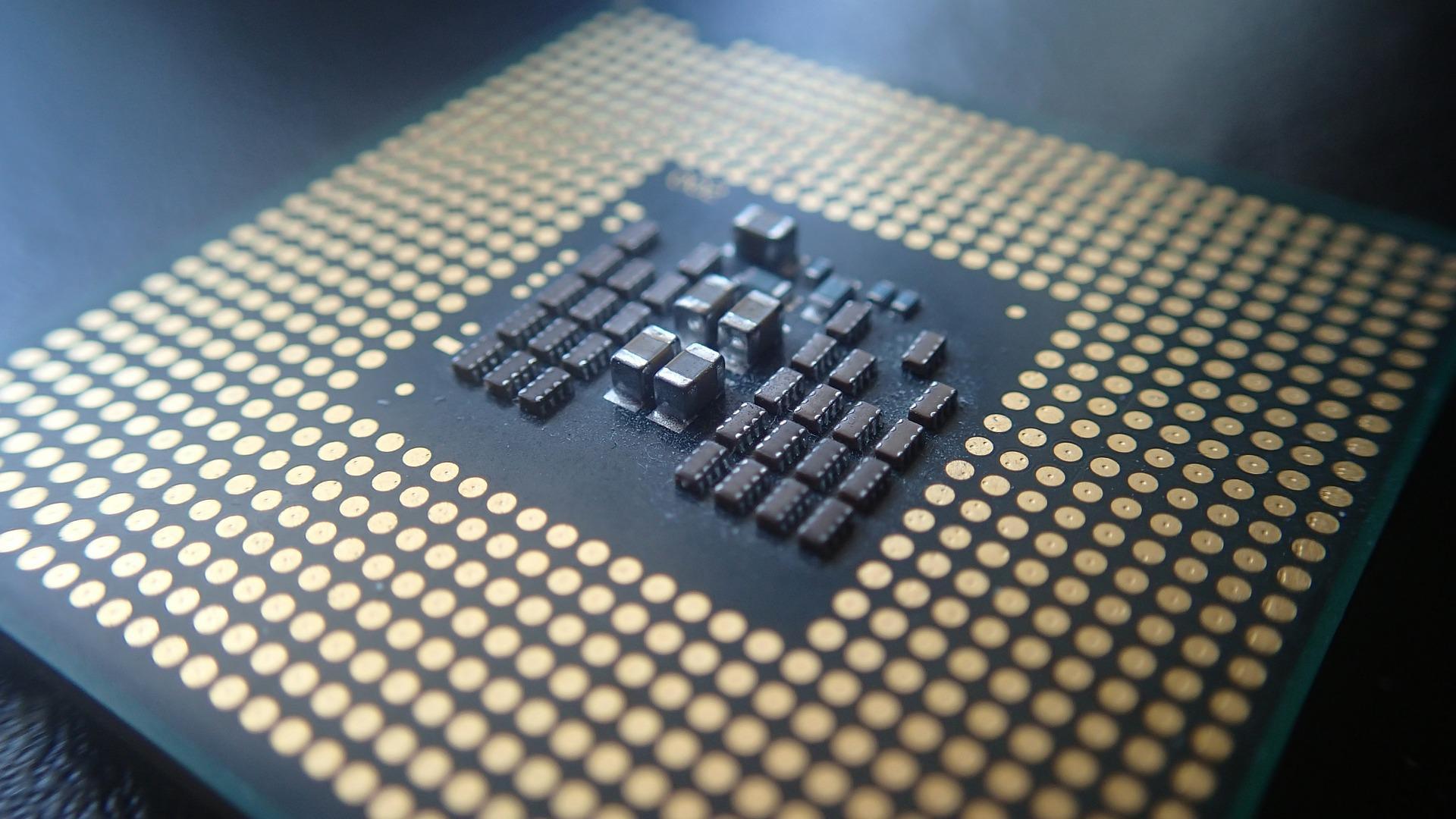

The number of components you can pack into a given 2D area is no longer the single factor governing a chip's processing capabilities. To keep up the kind of acceleration embodied by Moore's law in an era when components can't easily shrink much further, we've had to look in other directions - literally, since one of those directions is up rather than just sideways.

Why is New York's Manhattan Island the way it is, with breathtakingly tall skyscrapers crammed into every square inch of the landscape? It's because there's nowhere to go but up. If you want to fit more in, you have to invoke the third dimension, and once you do, you're all set up for incredible growth.

It's the same with modern chips: once designs are freed from the constraints of two dimensions, the sky's the limit. But stacking is just one aspect of modern chip design, and others are arguably more important.

Heterogeneous design

One of the most powerful techniques is heterogeneous design. It means building chips from a wide variety of specialist modules. There are several advantages to this.

Conventional CPUs are generalists. Throughout the history of computing, that's been a colossal strength: a single machine can accomplish almost anything. All it takes is some software instructions (and the entire discipline of software development!). That's a powerful paradigm, and it's not going to go away. But specific tasks, like video encoding or decoding, AI and machine learning, or digital signal processing, crop up all the time, and are typically resource hogs if they're done on a CPU. These specialities can be sped up by orders of magnitude with dedicated silicon. You can think of these highly focused devices as co-processors: it's an old idea.

But what's almost entirely new is that these specialisms can now be built as tiny modules incorporated into the processor itself, either on the same piece of silicon as the CPU or as separate small dies sitting alongside it in the same package, tied together by extremely fast interconnects. Put all of these modules together with a CPU and a GPU and you have a chip that's breathtakingly fast in aggregate, leaving traditional housekeeping to the CPU and the resource-hungry tasks to ultra-fast dedicated modules.
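One way to put rough numbers on "fast in aggregate" is Amdahl's law, which the article doesn't mention but which is the standard model for this kind of offloading: whatever share of the work stays on the CPU limits the overall gain. The sketch below is a minimal illustration; the function name `offload_speedup` and the workload shares and speed-ups in the example are hypothetical, not measurements of any real chip.

```python
def offload_speedup(offloads):
    """Overall speed-up from moving parts of a workload to dedicated blocks (Amdahl's law).

    offloads: list of (share_of_original_runtime, speedup_on_that_block) pairs.
    Whatever share is not offloaded stays on the CPU at its original speed.
    """
    offloaded_share = sum(share for share, _ in offloads)
    if not 0.0 <= offloaded_share <= 1.0:
        raise ValueError("offloaded shares must sum to between 0 and 1")
    # New runtime as a fraction of the original: the CPU remainder plus each block's shortened slice.
    new_runtime = (1.0 - offloaded_share) + sum(share / speedup for share, speedup in offloads)
    return 1.0 / new_runtime


if __name__ == "__main__":
    # Hypothetical mix: half the runtime was video encoding (100x faster on a dedicated block),
    # 30% was ML inference (50x faster); the remaining 20% stays on the CPU.
    print(round(offload_speedup([(0.5, 100), (0.3, 50)]), 2))  # 4.74
```

Even with both blocks 50-100x faster, the overall gain in this made-up mix is under 5x, because the 20% left on the CPU dominates; moving more kinds of heavy work onto dedicated modules is what pushes the aggregate figure up.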

This design philosophy is really taking off. When the specialist modules are built as separate small dies and packaged together, they're called "chiplets", and I'd expect a healthy ecosystem to build up around them, perhaps an industry exchange or even a marketplace for chiplets from multiple manufacturers. That should accelerate the design process as well as speed up the chips themselves.

Finally, we're seeing extremely fast interconnects both on-chip and between chips. This type of technology is behind Apple's ability to join two of its already powerful chips into an even bigger one. The latest example is the M1 Ultra, which fuses two M1 Max chips, complete with their specialist modules, into a single device with over 100 billion transistors.

Coming back to the Raspberry Pi and how it can possibly run eight instances of a real-time DX7 emulation: the answer is that the software synths were written to run directly on the hardware. There's no operating system to slow things down and impose painful latency. It's clever, but obviously niche. Still, it illustrates an important principle: the closer you get to the hardware ("to the metal" in software parlance), the faster things run. That approach is limiting, though, and the direction of travel for tech in general, and media tech in particular, is explicitly the other way: towards virtualisation and abstraction. And that will be good for all of us.
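To see why both latency and raw throughput matter here, the sketch below does the arithmetic a real-time synth has to live within: each audio buffer must be computed in less time than it takes to play. It assumes a 48 kHz sample rate and treats each DX7 instance as having the original hardware's 16 voices of 6 FM operators; the emulation's actual settings may differ, and the function names are hypothetical.

```python
def buffer_deadline_ms(frames: int, sample_rate_hz: int) -> float:
    """Playback time of one audio buffer, which is also the deadline for computing it.

    This is the latency the buffer itself adds; any OS scheduling delay comes on top.
    """
    return 1000.0 * frames / sample_rate_hz


def operator_samples_per_second(instances: int, voices: int, operators: int,
                                sample_rate_hz: int) -> int:
    """Worst-case FM operator evaluations per second, with every voice of every instance sounding."""
    return instances * voices * operators * sample_rate_hz


if __name__ == "__main__":
    # Assumed figures: 48 kHz output, eight instances, 16 voices x 6 operators each.
    print(f"{buffer_deadline_ms(256, 48_000):.2f} ms per 256-frame buffer")  # 5.33 ms
    print(f"{operator_samples_per_second(8, 16, 6, 48_000):,} operator samples/s")  # 36,864,000
```

Smaller buffers cut latency but leave less slack for all of that computation to finish on time, which is plausibly where removing an operating system's overhead pays off.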

We'll unpack that in a future article.
