Replay: We take GPUs for granted now, but their existence has roots that go all the way back to the 1970s.

Even Gordon Moore, of the eponymous Law, might have blinked at the performance of modern GPUs. Yes, they have an enormous number of transistors, as Moore so capably predicted, often about as many as a contemporaneous CPU. It's hard to avoid the reality, though, that their behaviour in use is very different, and it's reasonable to ask how we got here.

By the late 1990s it was becoming clear that the graphics processing done for video games would quickly exceed the capability of the hugely-expensive hardware that was being used for things like realtime colour correction in film and TV. Matrox's RT2000 was probably one of the first affordable ways to leverage a piece of graphics hardware in a video workflow, though its limitations were very visible; like most things of the time, its effects were clearly based on graphical primitives, a texture map on a plane.

Of course, the graphics processors underlying the RT2000, as well as the PlayStation and Xbox series, were descendants of a line of development reaching back into the 1970s. Although the level of sophistication naturally varies massively over that thirty-year timespan, the crucial underlying assumption has often been the same: the idea that rendering operations for video games are extremely repetitive, and the CPU can be spared the donkey work.

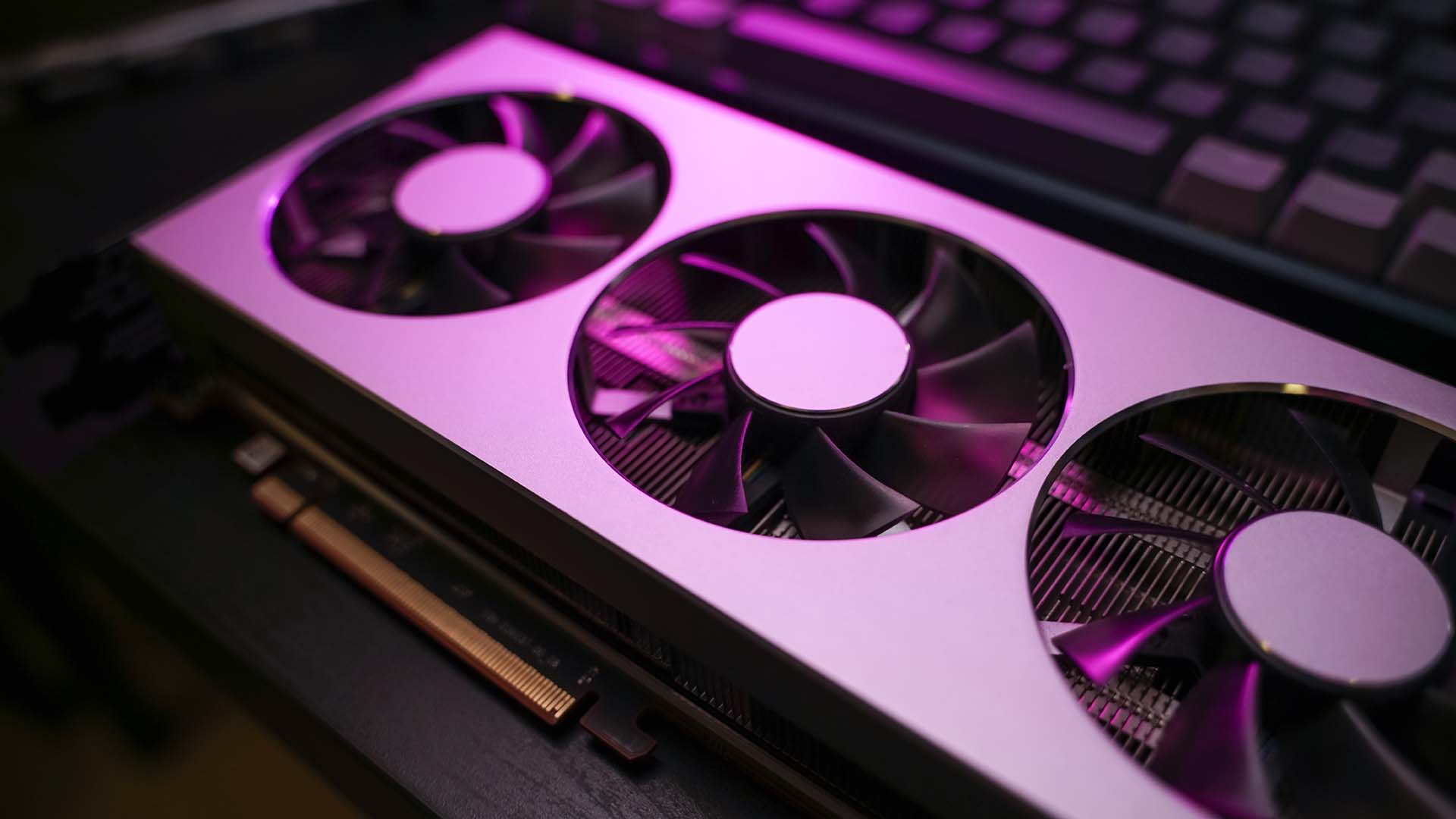

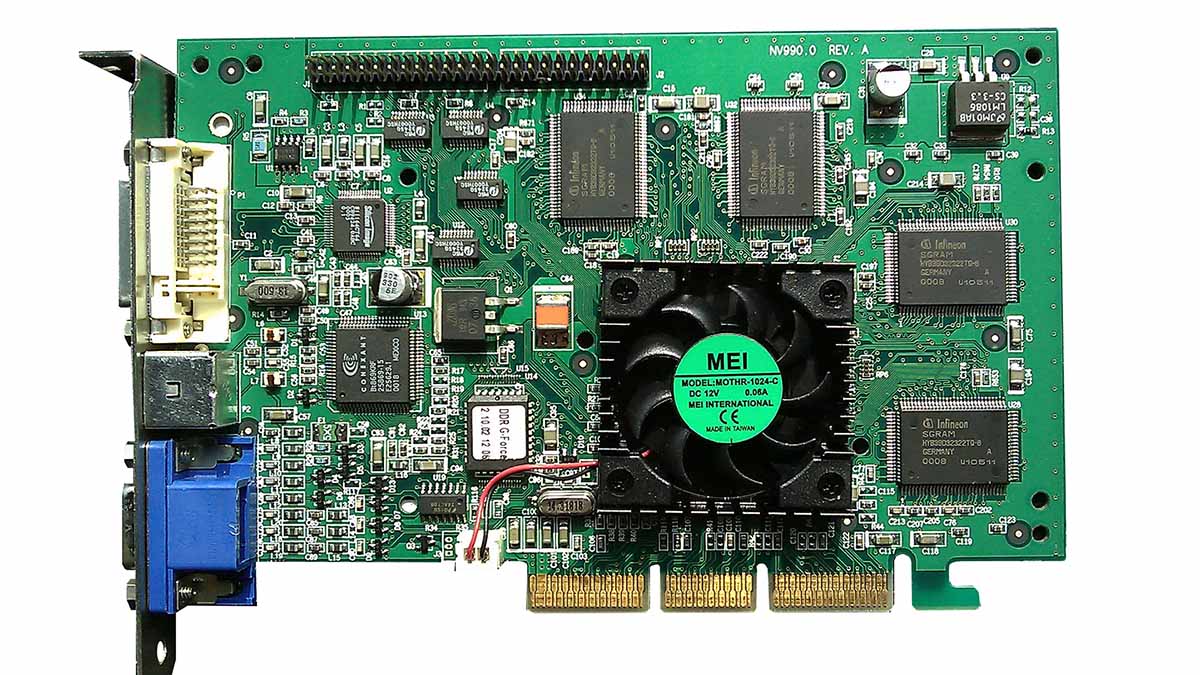

A VisionTek graphics card with Nvidia GeForce 256 GPU. Image: Hyins/Wikipedia.

Almost all computers since the 70s have had something approaching a dedicated graphics chip. It's not pretty when they don't: Clive Sinclair's extremely inexpensive ZX80 could only generate a video display when it wasn't doing anything else, so the display would flicker on every keystroke and blank completely during a long calculation. Its descendants include the seminal Spectrum, which did include a Ferranti uncommitted logic array, a recognisable forerunner of modern FPGA technology, which generated the frame data as it was scanned out.

That approach remained popular through the mid-1990s, on games machines which would hold sprite data in their limited memory, and fetch it pixel by pixel as the television's electron gun swept across the display. Even then, there were some programmable graphics devices which are recognisable forerunners of current techniques; the Commodore Amiga line had a coprocessor which could be programmed to execute various graphics instructions. The Atari Falcon030 could also employ its DSP to calculate real-time 3D graphics. However, the use of graphics hardware to do other work didn't become common until 3D graphics had become much more widespread.

Even that started to happen a long time before anyone thought of leveraging the hardware to do non-graphics work. In 1994 two things happened: Sony released the PlayStation 1, and 3dfx Interactive was founded in San Jose, California. 3dfx produced the Voodoo series of plug-in graphics cards, arguably the first successful attempt to bring hardware-accelerated 3D to PC gamers. As with so many version-one attempts at anything, the Voodoo cards were idiosyncratic, requiring a pass-through cable from a conventional 2D graphics card and demanding that software use the company's own Glide standard to communicate with the 3D graphics hardware.

(Author's note - Ah, Unreal on a Voodoo 2. Those were the days. And yes, that's where the Unreal Engine gets its name.)

By the end of the 90s, Nvidia's GeForce 256 and GeForce 2 and the ATI Radeon 7200 were snapping at 3dfx's heels, and the company essentially failed in late 2000, with Nvidia buying out much of its technology. What made the GPU market what it is today, though, is less to do with hardware, and more to do with Microsoft's introduction of its Direct3D software interface, which, alongside the more productivity-focused alternative OpenGL, meant that applications could use 3D acceleration without worrying (much) what they were running on.

Limited effects

At this stage, what the hardware could actually do was rasterisation, the process of taking clouds of triangles in 3D space, and plotting those triangles on a two-dimensional display. Texture, bump and reflection mapping, rotation and translation of the geometry, lighting calculations and other refinements were now normal. Companies competed on the effects their hardware could create, but it was a fixed-function pipeline, capable only of the operations the designers had foreseen would be useful. Before long it became clear that making the hardware programmable was the only realistic way to maximise flexibility while controlling complexity.
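For readers who like to see the idea in code, rasterisation can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the tiny character-cell "display", the focal length and every function name here are invented for the example, and real fixed-function hardware did this work in silicon, with texturing and lighting layered on top.

```python
# Minimal software rasteriser sketch: project a 3D triangle to 2D and plot it.
# All names and sizes here are illustrative assumptions, not any real GPU API.

WIDTH, HEIGHT = 24, 12      # tiny "display", in character cells
FOCAL = 1.0                 # assumed distance from the eye to the image plane


def project(vertex):
    """Perspective-project a 3D point (x, y, z), with z > 0, to pixel coordinates."""
    x, y, z = vertex
    # Perspective divide: more distant points land closer to the centre.
    ndc_x = FOCAL * x / z
    ndc_y = FOCAL * y / z
    # Map the range [-1, 1] onto the pixel grid, with y pointing down.
    px = (ndc_x + 1.0) * 0.5 * WIDTH
    py = (1.0 - ndc_y) * 0.5 * HEIGHT
    return px, py


def edge(a, b, p):
    """Signed area test: which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterise(triangle):
    """Return the set of (column, row) pixels covered by a 3D triangle."""
    a, b, c = (project(v) for v in triangle)
    covered = set()
    for row in range(HEIGHT):
        for col in range(WIDTH):
            p = (col + 0.5, row + 0.5)          # sample at the pixel centre
            w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
            # Inside if the point is on the same side of all three edges.
            all_pos = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_neg = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_pos or all_neg:
                covered.add((col, row))
    return covered


triangle = [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (0.0, 1.0, 2.0)]
pixels = rasterise(triangle)
for row in range(HEIGHT):
    print("".join("#" if (col, row) in pixels else "." for col in range(WIDTH)))
```

Note that every pixel is tested with exactly the same three multiplications and comparisons, independently of its neighbours, which is the sort of repetition that dedicated hardware is good at.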

Graphics terminology has often referred to the process of plotting texture and lighting effects on polygons as "shading," and the programs run by GPUs are still often called "shaders" even if they aren't built to draw graphics. The way in which shaders have been written for GPUs has changed several times, but by around 2005 it had been realised that given this programmability, a GPU could be persuaded - and that was the right term - to do non-graphics work. At first, it was necessary to package up the data to be processed as an "image" and send it to the GPU as a "texture," but it worked.

The reason it worked takes us back to the repetitive nature of graphics work. A scene in a video game includes thousands, even tens or hundreds of thousands, of triangles, many with several layers of texturing and lighting, all of which have to be handled when a frame is rendered. A GPU is like a row of a thousand calculators with a single lever arranged to press all of the "equals" buttons simultaneously, so it can do a huge number of fairly simple things all at once. Scientists, in particular, quickly found ways to leverage this capability.
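The row-of-calculators picture can be sketched in ordinary Python. The names `gain_kernel` and `launch` are hypothetical, made up for this illustration, and the loop below runs the calculators one after another, whereas a real GPU would run them at the same time. The point is only the shape of the program: one small function, applied identically to every element, with no element depending on any other.

```python
# Sketch of the data-parallel model: one small "kernel" run once per element.
# The names (gain_kernel, launch) are hypothetical; a real GPU would run the
# per-element calls simultaneously, whereas this loop runs them one by one.


def gain_kernel(index, pixels, out, gain):
    """One 'calculator': brighten a single pixel and clamp to the 8-bit range."""
    out[index] = min(255, int(pixels[index] * gain))


def launch(kernel, count, *args):
    """Stand-in for a GPU launch: invoke the kernel once for every index."""
    for index in range(count):      # on a GPU these iterations run in parallel
        kernel(index, *args)


pixels = [0, 64, 128, 200, 255]
brightened = [0] * len(pixels)
launch(gain_kernel, len(pixels), pixels, brightened, 1.5)
print(brightened)
```

Because each call reads and writes only its own element, the order in which the calls run makes no difference to the result, and that independence is what lets the hardware pull the lever on all of them at once.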

Doing this was regularised at the end of the first decade of the twenty-first century with systems such as Brook, RapidMind and CUDA. CUDA, released in 2007, continues to make lots of film and TV applications much (much) faster to this day. The impact of this on certain workloads has been beyond revolutionary; it has destroyed and created companies, gifted the high end to the masses, and allowed people to run around shooting pretend aliens and ducking the flying giblets. And, before we suffer any delusions of grandeur, let's remember that without the giblets, none of it would ever have happened.

