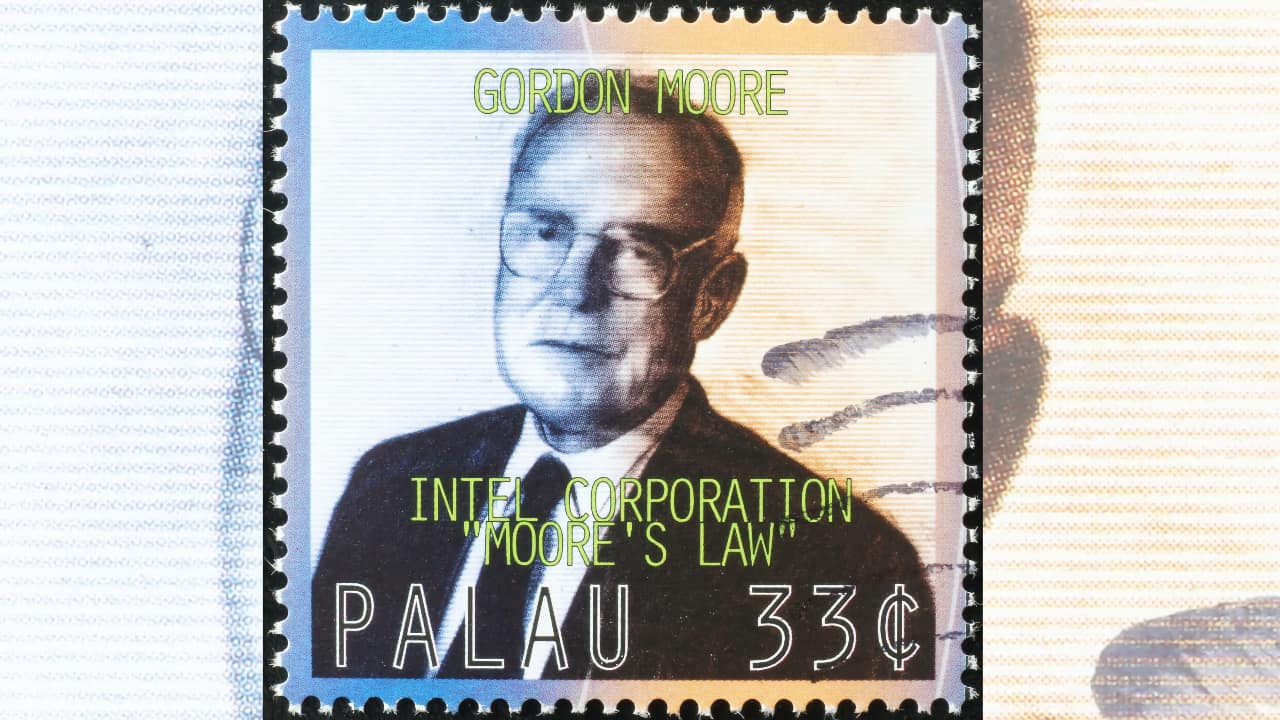

Gordon Moore, co-founder of Intel, may have died only just ahead of his famous law, which held that the number of transistors on a chip would double roughly every two years. What comes next?

Gordon Moore died on March 24, 2023, at the grand old age of 94. Perhaps the most remarkable thing about Moore's Law, his famous empirical observation (and that's what it is: it's not a physical law), is that it is just about the only accurate prediction of the path of technology in the late 20th and early 21st centuries.

The best way to understand the scope of Moore's Law is to consider what the world might be like if it didn't apply. One example:

Modern smartphones contain tens of billions of transistors. Before transistors, there were valves (tubes). These were physically similar to light bulbs but differed because they had metalwork that controlled the flow of electrons from a cathode to an anode. It's a topology that transistors mimic in silicon. But whereas valves couldn't be made much smaller, transistors still work even when shrunk by factors of millions. A single smartphone made with valves would be the size of a city like Milton Keynes (89 sq km / 34 sq mi - Ed).
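
As a rough sanity check on that comparison, the arithmetic can be sketched in a few lines of Python. Only the 89 sq km figure comes from the text above. The transistor count of 20 billion is an assumed stand-in for "tens of billions", the one-valve-per-transistor swap is a simplification, and the function name is purely illustrative.

```python
# Back-of-envelope check: how much floor space would each valve get if a
# smartphone's transistors were replaced one-for-one with valves and
# spread over the area of Milton Keynes?

CITY_AREA_KM2 = 89            # figure quoted in the article
TRANSISTORS = 20_000_000_000  # assumption: stand-in for "tens of billions"


def area_per_valve_cm2(city_area_km2: float, valve_count: int) -> float:
    """Return the floor area available to each valve, in square centimetres."""
    area_cm2 = city_area_km2 * 1_000_000 * 10_000  # sq km -> sq m -> sq cm
    return area_cm2 / valve_count


per_valve = area_per_valve_cm2(CITY_AREA_KM2, TRANSISTORS)
side = per_valve ** 0.5  # side of a square with that area
print(f"{per_valve:.1f} sq cm per valve, a square roughly {side:.1f} cm on a side")
```

Under those assumptions, each valve gets about 44.5 sq cm, a square a little under 7 cm on a side. That seems a believable footprint for a small valve plus its socket and wiring on a single level, so the city-sized comparison is at least the right order of magnitude.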

While Moore didn't make Moore's law happen, his observation is so profound that it's hard to find any aspect of modern life that's not been affected by it. Perhaps a carpenter working in a garden shed could claim to be isolated from modern trends. But the minute they stepped outside, they'd at least have the possibility of an MRI scan if they'd broken a bone and would likely communicate with their equally old-fashioned clients by email.

A very different era

Moore was born on January 3, 1929, into a world that has since seen more change and turmoil than in any comparable period before. He co-founded Intel in July 1968 with Robert Noyce and held official posts until 2006.

Intel built the chips inside the first PCs. The 8086, the 80286, 80386, 80486 and then the Pentium range were familiar to everyone through "Intel Inside" advertisements. For the technically minded, they were an opportunity for standardisation. With a standard and backwards-compatible instruction set, these household-name processors provided a rock-solid foundation on which software developers and companies could build their fortunes.

Some would say that the x86 architecture became dated and held back personal computing through a lack of innovation. That's probably true to an extent, but overall it would be an unfair conclusion, because the existence of a reliable and standardised platform enabled more development than it held back.

Today, the longevity of his law is in question. Physical limits place rigid barriers in the way of the quest for ever-smaller components, and the continuing validity of Moore's law has often been doubted, especially since the turn of the century. That's unsurprising: it's incredibly hard to sustain exponential change over a long period, never mind change that depends on constant physical innovation to make components ever tinier while retaining the physical characteristics they need to keep working.
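
To get a feel for how demanding that exponential is, here is a minimal sketch of the compounding. It assumes Moore's law in its usual form (transistor counts doubling every two years) and, purely for illustration, a 1971 starting point of 2,300 transistors, the commonly cited figure for Intel's 4004. The function name and parameters are hypothetical, and the output is a projection, not a record of real chips.

```python
def projected_transistors(start_count: int, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count forward, assuming a fixed doubling period."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Assumed starting point: 2,300 transistors in 1971 (illustrative only).
for year in (1971, 1991, 2011, 2023):
    count = projected_transistors(2300, 1971, year)
    print(year, f"{count:,.0f}")
```

The 52 years from 1971 to 2023 amount to 26 doublings, which multiplies the starting figure by about 67 million. Each further step has to match all the progress that came before it, which is why the trend is so hard to sustain.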

And yet, even now, it's likely that there are still one or two more generations of miniaturisation left before physical phenomena at the atomic level call an end to this exponential journey.

But it won't end.

The next wave

Exponential progress tends to follow overlapping "S" curves. We might be approaching the end of one and the beginning of another. Stacking chips vertically as well as horizontally is one way to continue the trend, and there is also massive potential to make software more efficient. One side effect of the sheer scale of progress driven by Moore's law is that there was no need to make software perform any better than absolutely necessary.

In the early days of computing, when literally every bit counted, knowing how to make a program small was as important as making it work. Today, with millions of times the resources, some code has become sloppy and wasteful. This is justifiable when you realise that today's software, despite its inefficiency, is remarkably powerful yet still gets written in reasonable timescales.

It's easy to forget that you'd need a small fraction of the resources if you optimised the software. Some experts estimate that even if hardware stopped at today's level, there are orders of magnitude of efficiency left to gain in software alone.

Who's going to do this work? Probably - and almost inevitably - AI.

And that's perhaps the most striking thing about Gordon Moore. When he was born, the state-of-the-art in consumer electronics was a valve radio. There was no television, no video, no computers, no IT industry, no internet, no social media, and artificial intelligence was the stuff of the wildest speculation in science fiction.

Today, just as we realise that we might be witnessing the first signs of Artificial General Intelligence, it's sobering to think that without the effects of Moore's eponymous law, we would still be living with the technology of the 1960s.
