With AI upscaling and baseline standards of 1080p and 4K, do you really need to shoot for the highest resolution possible?

“Fix it in post” has always been a dirty phrase. It’s one of those things that everyone pretends isn’t an option, but quite often ends up being a lifesaver in some form or another. Nobody likes to admit that they messed things up during production. It could be an out-of-focus shot, or as I’m finding with the sheer number of options on modern cameras, accidentally filming in 1080p when I meant to have the camera set for 4K.

Luckily I no longer have a client breathing down my neck asking how brilliant the last shot was, leaving me to muster all of my might to tell them it was excellent while knowing I’d cocked something up. I was a conscientious shooter, always wanting to give any editors the best material to work with. Messing things up, no matter how minor, was the ultimate insult to myself and to others.

I don’t mind admitting, now that I’m free from that world, that I right royally messed up on a shoot with one of RedShark’s contributors, Neil Oseman. Some shots of mine on an early shoot of ours were, shall we say, a little out of focus. That’s what you get with old camera flip-out displays and ever so slightly knocking the focus ring while on a Glidecam… I would hope that we can both now laugh at the way Neil checked my focus for a long time afterwards, no matter how much more experienced I became!

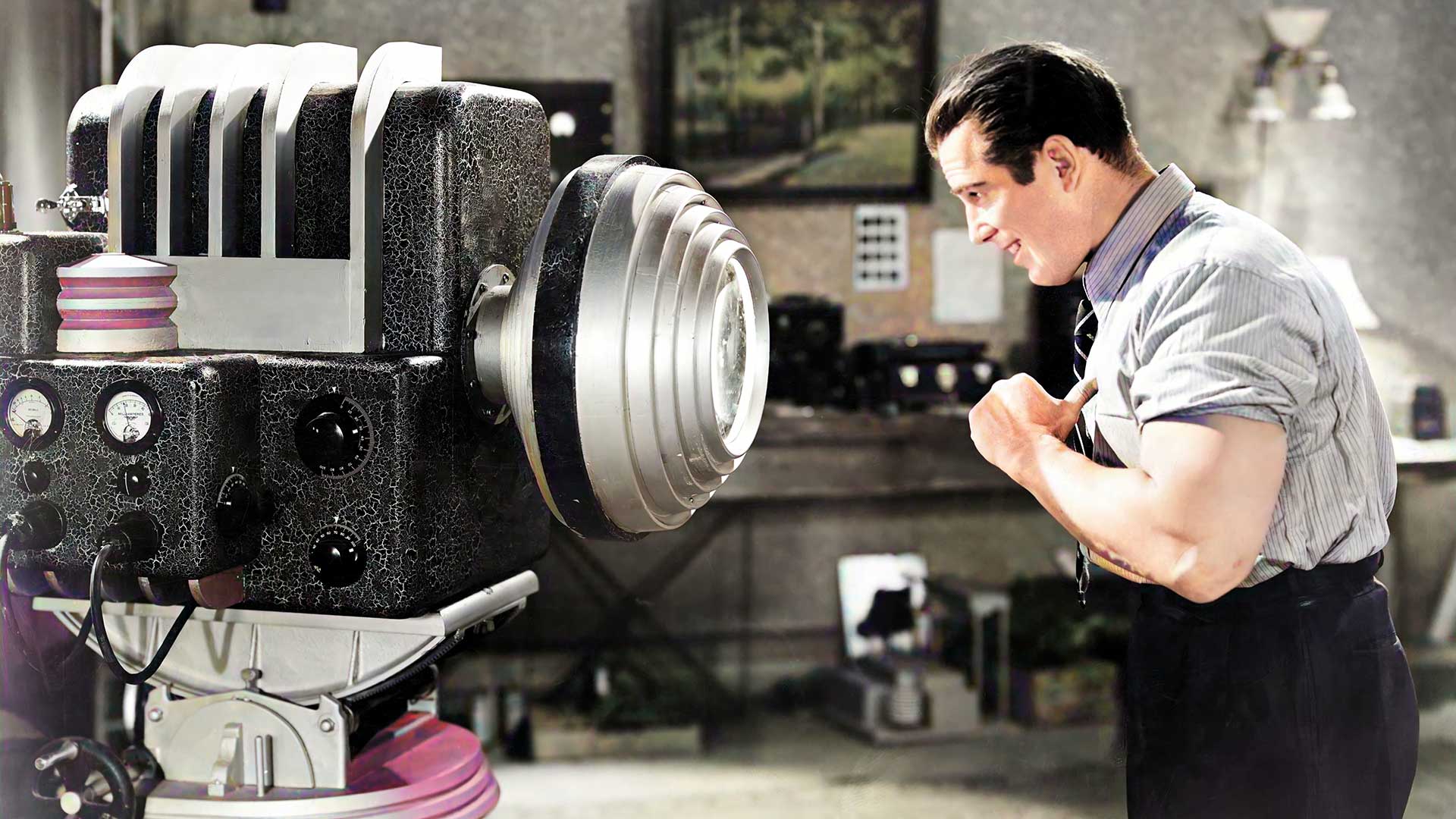

Back then such errors ruined footage. Final Cut was in its infancy and many a video company was defaulting to the “book curl transition”. By today’s standards the cameras were pretty awful too, although we were at least lucky enough to have cut our teeth on the last of the analogue cameras and to actually be working in a digital age, just about.

AI enhancement

Move forward to today and it is now possible to convert even low quality YouTube videos to 4K. Yes, really. I reviewed the Topaz Video Enhance AI software a short while ago and found it to be extremely impressive. Now that I’ve had a chance to really get to know it and refine the settings, I am utterly blown away by what it can do.

I've recently been experimenting with some old, rare footage, for which the only copy exists in very highly compressed form at low resolution on YouTube. The original footage was taken on a Super 8mm camera with not the best telecine transfer to begin with. For all intents and purposes the footage that exists in digital form could be put in the dictionary as the definition of "completely unusable".

So as a little experiment I decided to let Topaz loose on it. The first results were, I thought, impressive. But then I played around a bit and found even better settings, even though they didn't make logical sense. The final result astounded me. It's still pretty awful, with shake and dirt all over, with indistinct detail, but compared to what it was originally it's a night and day difference. It has recreated facial detail out of, well, nothing! And since I am familiar with the person in the film, it's actually incredible that the facial detail it has recreated is in fact accurate!

I have no idea how the system achieved it, but it did. I didn't stop there, however. I also started to use DeOldify, which also produced a result that was well above my expectations for such poor quality video. This is AI and machine learning at work, and yes, it seems to be working amazingly well.

I tried some of the stuff that I uploaded to Vimeo back in the late 2000s that I shot on a Sony EX3, and some of the results up-converted to 4K are so convincing that unless you looked under a microscope you'd never know it was uploaded originally at 720p. Remember, this isn't up-converting a 720p master, but a highly compressed video streaming service file. And yet the results are still incredible.
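To put rough numbers on that, here is a minimal sketch of the arithmetic. It assumes "720p" means 1280x720 and "4K" means UHD at 3840x2160, and the function name `upscale_stats` is purely illustrative. It only counts pixels; it says nothing about how any particular upscaler actually fills them in.

```python
from typing import Dict, Tuple

Resolution = Tuple[int, int]  # (width, height) in pixels

# Assumed frame sizes: the article only says "720p", "1080p" and "4K".
HD_720: Resolution = (1280, 720)
HD_1080: Resolution = (1920, 1080)
UHD_4K: Resolution = (3840, 2160)


def upscale_stats(src: Resolution, dst: Resolution) -> Dict[str, float]:
    """Compare pixel counts between a source and a target frame size."""
    src_w, src_h = src
    dst_w, dst_h = dst
    src_pixels = src_w * src_h
    dst_pixels = dst_w * dst_h
    return {
        # How much wider the target frame is than the source.
        "linear_scale": dst_w / src_w,
        # How many output pixels exist for every source pixel.
        "pixel_ratio": dst_pixels / src_pixels,
        # Share of output pixels with no one-to-one source sample,
        # i.e. the part any upscaler has to estimate.
        "estimated_fraction": 1 - src_pixels / dst_pixels,
    }


stats_720 = upscale_stats(HD_720, UHD_4K)
stats_1080 = upscale_stats(HD_1080, UHD_4K)
```

Under those assumptions a 720p frame has one ninth of the pixels of a UHD frame, so roughly eight out of every nine output pixels have to be estimated. From 1080p it is three out of every four.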

It has got to the point where I have dug out some of my old XDCAM discs, ready to have a go at the original footage rather than my social media uploads of old, to see what it can really do. And this raises a question. If I can get such good results up-converting old, compressed SD and 720p footage to 4K, what does this bode for relatively modern 1080p and 4K footage?

Quite aside from the idea that we can already perform amazing up-conversions, in the not so distant future this might be part and parcel of in-camera processing. Already I’m looking at frame interpolation software such as DAIN, which currently requires huge resources, yet produces utterly incredible results. As processing gets better, will it matter if your camera only maxes out at 60fps? A conversion to 120fps or more could be done in software, or even within your camera, at full 4K or above resolution, even if your sensor has to drop resolution to actually record the initial footage. And although the resulting detail might be the end product of AI doing its work and not ‘real’, you would never, ever know.
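For a sense of what frame interpolation means at its very crudest, here is a minimal sketch that doubles a frame rate by inserting a plain average of each neighbouring pair of frames. This is emphatically not how DAIN works: it is a naive blend that will ghost on any real motion, which is exactly the problem the AI approaches set out to solve. Frames are represented as nested lists of greyscale values purely for illustration, and the names `blend` and `double_frame_rate` are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # rows of greyscale pixel values (illustrative only)


def blend(a: Frame, b: Frame, t: float = 0.5) -> Frame:
    """Linearly mix two same-sized frames; t=0 gives a, t=1 gives b."""
    return [
        [(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def double_frame_rate(frames: List[Frame]) -> List[Frame]:
    """Insert one blended frame between every pair of neighbours.

    N input frames become 2N - 1 output frames, so 60fps material
    played back at 120fps covers (almost) the same duration.
    """
    if not frames:
        return []
    out = [frames[0]]
    for prev, nxt in zip(frames, frames[1:]):
        out.append(blend(prev, nxt))  # the made-up in-between frame
        out.append(nxt)               # the frame the sensor really recorded
    return out


# Two tiny 1x2 "frames" stand in for real footage.
recorded = [[[0.0, 100.0]], [[100.0, 200.0]]]
result = double_frame_rate(recorded)
```

Every second frame in `result` never passed through the sensor. A motion-aware interpolator replaces the simple average with an estimate of where things actually moved, but the principle that half the frames are synthesized stays the same.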

Light field

At the point when processing becomes so fast that this stuff can be done in realtime, the question then becomes, are physical resolution and framerate important any more? Of course we cannot forget light field technology in all of this. With light field tech framerate really is irrelevant, but we’re a long way off this becoming something mere mortals can handle. There's also the idea of vector video. In the meantime technology will become smarter and make better use of the data that we have. Processing of that data will advance at a much faster rate than the alternative technologies, which means that the visuals we obtain and process will be increasingly ‘made up’.

It’s an odd phrase to use, and it could have quite massive repercussions when it comes to legal depositions, but with AI created images we are looking at an artificial creation. We might not ever notice it, but it will be there. The question is, does it matter?

Nobody takes note of every detail of every texture. As humans we simply cannot do that, and that’s why this AI-based tech works so well. It can give its interpretation of a texture, and as long as it’s believable we will buy into it. The other question though, is how this works in a legal sense. If I were to video you performing a heinous act, any court of law could rule that a sufficient portion of the image was artificial to the point of being inadmissible. Now you could say “well, just use a camera that records real data”.

That’s a simple solution to a complex problem, because if most people’s smartphones end up using AI based video, then that is most likely to be the thing to capture somebody in the midst of a spontaneous illegal act. I don’t think that the legal system is ready for what very much is on the immediate horizon. Even if the action recorded is true, if half the picture is proven to be an AI interpretation of what is happening then that could cause very sticky problems indeed when it comes to legal proof.

In this instance real resolution really could matter. But the pace of technology is such that these are not problems that are a long way off, or that can even be prevented. Rather they are problems that need to be accounted for ahead of time. For the rest of us it could mean resolution independence and a new age of cameras where the limits are on the internal processing, not the sensor.

