Replay: A steady stream of breakthroughs has changed the face of computing, but we might not have noticed all of them.

When you've been a technology geek as long as I have, it's educational to look back at the lesser-known technology triumphs.

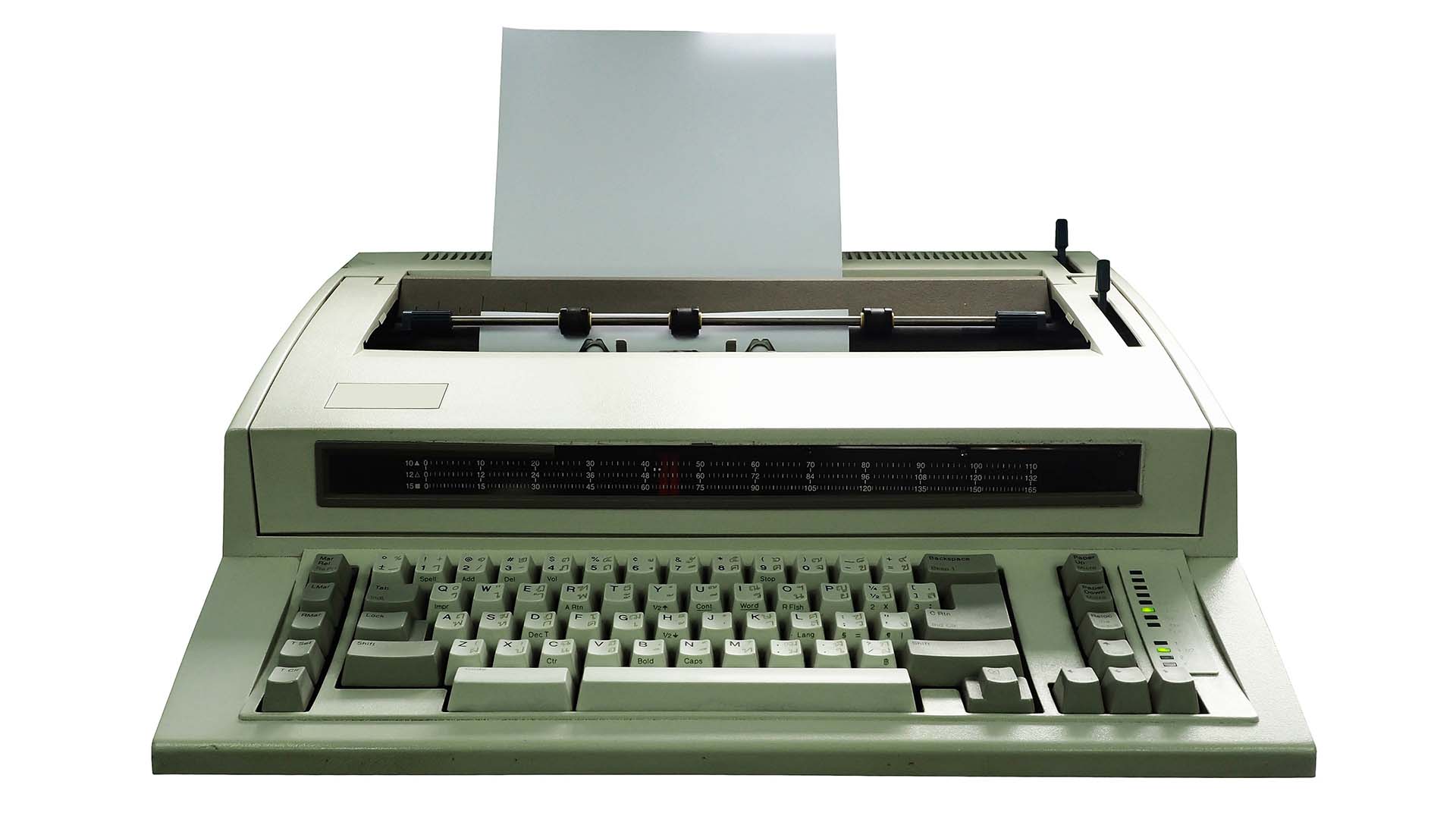

When I left my first job in 1988 (an administrative role in the music industry), my office had a word processor. It wasn't a general-purpose computer but a dedicated machine: a bulky processor unit with a green screen and 8" floppy disks, plus a daisy-wheel printer. (Confusingly, "word processor" was also the job title of the person who operated it.) It was as if my office had entered the space race. I was fascinated by it and by its arcane operating system, which bore approximately no resemblance to anything we're used to today. I think it was one of these.

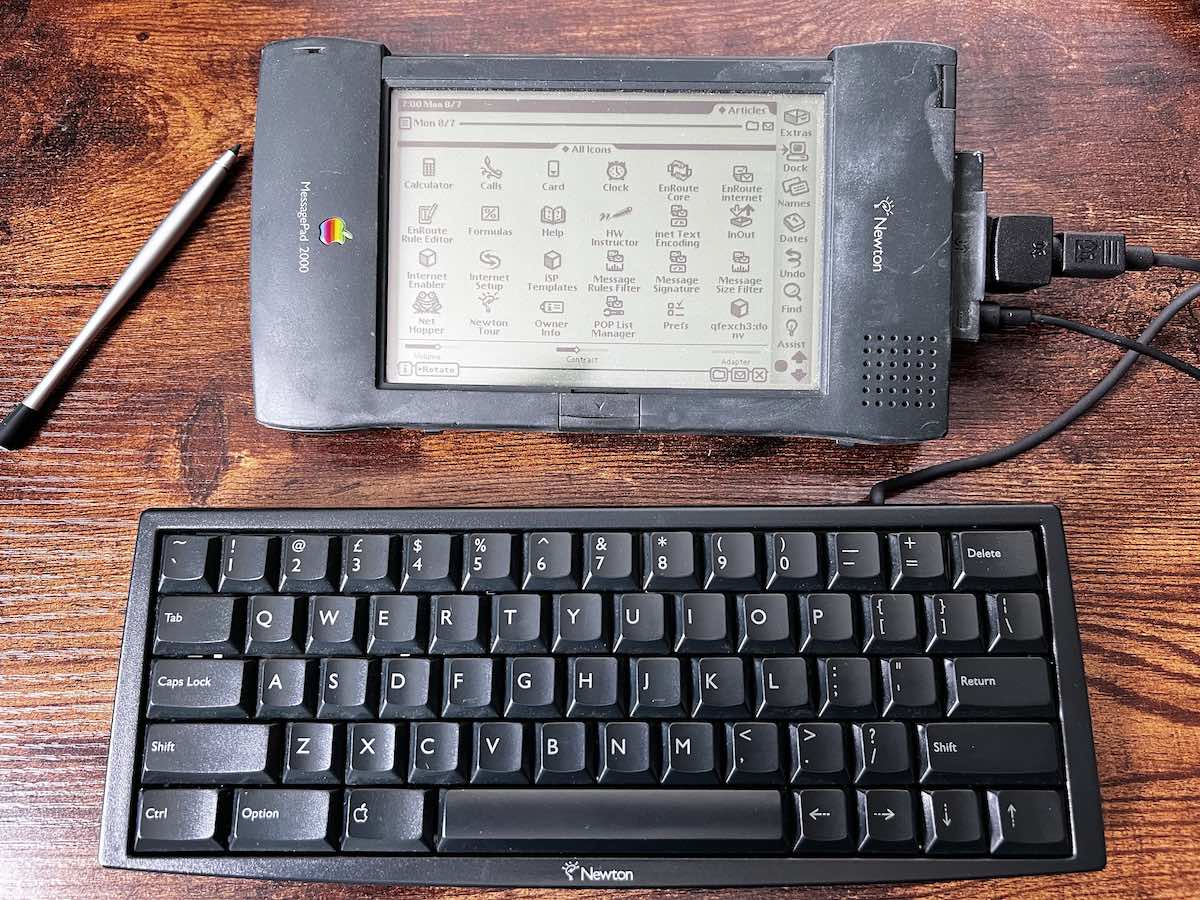

Just ten years later, I bought my own word processor. It fitted in my (admittedly large) pocket, came with an LCD screen, ran off batteries and had a primitive web browser. It could also convert handwriting to text and hand-drawn shapes to geometrically accurate ones. It could record audio but absolutely no video.

And the manufacturer? It was an obscure Californian company called Apple. The product was the Apple Newton MessagePad 2000.

The Newton wasn't exactly Apple's finest moment, but I wouldn't blame the Cupertino company for that. Some of the ideas were brilliant - like the in-situ modeless error correction: just scratch out any word or object with the pen, and it would disappear in a puff of cartoon smoke.

David Shapton's Apple Newton MessagePad 2000. It still works!

Solid-state storage is a significant advance

My ability to spot trends and back winning technologies deserted me with the Newton: I bought it shortly before Apple discontinued it. Even so, I loved it. It was well worth the nearly £1000 it cost me in 1998 money (by the time I'd bought the keyboard and other accessories). Its form factor made it an ideal mini laptop; it was extremely portable and, best of all, entirely solid-state, with no fragile hard disks to worry about.

In many ways, it was a forerunner of modern laptops and perhaps even smartphones.

I came across my Newton recently. From today's perspective, it was a remarkably prescient machine. And it still works. I can't plug it into my MacBook, though, because its serial connector doesn't fit a Thunderbolt port.

How long have most new laptops come with solid-state storage? With Apple, it's probably around eleven or twelve years. Without most people even noticing, solid-state drives have transformed the laptop experience.

I bought my first MacBook Air in 2011. After years of unreliable and bulky laptops, it was a revelation. It wasn't fragile. It booted up quickly. And, something I couldn't know at the time, it lasted for a decade. What's more, until it finally gave up with a RAM error, it remained a decent computer: reasonably fast and joyfully portable.

I would nominate solid-state storage as one of the most significant advances in personal computing.

LED displays

Another substantial improvement you might not have noticed is the switch in laptop and other LCD screens from fluorescent backlighting to LEDs. Unlike their predecessors, LED backlights rarely go wrong, and they give better illumination. This, together with solid-state storage, means that laptops now last around three times longer than they used to. That's obviously fantastic, but a much bigger benefit is that it has changed the way people buy them too.

Using a computer for longer before upgrading is clearly a boon for sustainability, but it also disposes buyers to wait much longer before splashing out on a new machine. It makes them likely to spend more, too, knowing that whatever they buy will last. Ever since clock speed ceased to be the primary factor in a computer's overall performance, factors like multiple cores, memory speed and GPU power have become more significant. And it may be that the end of the race for higher clock speeds (which stalled in the mid-2000s) has actually benefited computer design, because it has spawned more imaginative architectures, like massively powerful GPUs, media accelerators and even AI processors.

Before the advent of GPUs, and in an era of processors that were slow by today's standards, video on a computer (any video!) was a thing to behold. Remarkable as much for its sheer existence as for its execrable quality (64 x 64 pixels, anyone?), it quickly evolved from a curiosity into the raison d'être of iPads and other tablets: content viewing. And that progress has never stopped.

Hard disk storage has never been ideal. Entrusting your precious data to a spinning platter and a terrifyingly fragile mechanism was always a compromise. Nevertheless, RAID brought a welcome degree of redundancy to mass storage, and striping data across several drives quickly became a way for video professionals to get the speed they needed to edit even standard-definition video (never mind 8K, which is 85 times the data rate!). I should mention that the fastest RAID configuration, RAID 0, has no redundancy at all!
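For a rough idea of where a multiplier like that comes from, here is a minimal Python sketch. It assumes uncompressed video with the same frame rate, bit depth and chroma subsampling for both formats, so those factors cancel and only the pixel count per frame matters. The raster sizes (7680 x 4320 for 8K UHD, 720 x 576 for PAL and 720 x 480 for NTSC standard definition) are standard; the function and variable names are purely illustrative.

```python
# Uncompressed video data rate is proportional to
# width x height x frames per second x bits per pixel.
# Assumption: both formats share frame rate, bit depth and chroma
# subsampling, so those factors cancel and only pixel counts matter.


def pixel_count(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height


UHD_8K = pixel_count(7680, 4320)  # 8K UHD raster

SD_FORMATS = {
    "PAL SD (720x576)": pixel_count(720, 576),
    "NTSC SD (720x480)": pixel_count(720, 480),
}


def data_rate_ratio(sd_pixels: int, uhd_pixels: int = UHD_8K) -> float:
    """How many times more data 8K needs than an SD format, frame for frame."""
    return uhd_pixels / sd_pixels


if __name__ == "__main__":
    for name, pixels in SD_FORMATS.items():
        print(f"8K vs {name}: {data_rate_ratio(pixels):.0f}x")
```

Run as a script, it prints 80x for PAL and 96x for NTSC, so "85 times" is a fair ballpark. If the 8K material runs at a higher frame rate than the SD source, the ratio climbs further.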

But one of the bugbears of setting up a PC storage system, particularly with external drives, has long since been solved. Plug and play, in the shape of FireWire, USB and Thunderbolt, has revolutionised how we think about storage. To anyone (like me) who remembers parallel SCSI drives with cables the size of hosepipes, a shoelace-like Thunderbolt cable is a thing of absolute wonder.

WiFi

Around the beginning of this century, WiFi started to get noticed. Laptops are portable by nature, of course, but can you imagine modern life if you had to plug your computer into a network socket? Most modern laptops don't even have one. I still find WiFi miraculous, and its current, faster incarnations even more so.

And we've needed faster WiFi because broadband is now often as fast as a LAN connection from a couple of decades ago. I borrowed a Samsung 8K TV a while ago and watched 8K streams without a hitch.

Apple Silicon

The most recent innovation might be the most important when taken as a whole: Apple Silicon (and any other ARM-based tech that comes close to its staggering performance).

Yes, it's fast. But far more significant is that it sips power. The latest Apple chip, the M1 Ultra, has over 100,000,000,000 transistors: an almost unimaginable number. Divided between CPU cores, GPU cores, and AI and media accelerators, they are specialised as well as fast. While most computers would struggle to play a single stream of 8K video, the new Mac Studio can simultaneously play up to nine streams of professional-quality 8K video and drive up to five 4K monitors. If that's not impressive, I don't know what is.

We've seen all these innovations in the last thirty years, and there's no sign that the pace is slowing. So if you want to figure out what will happen in the next thirty years, I'd advise looking at the smaller, less headline-grabbing advances. Taken together, they will change the way we live.
