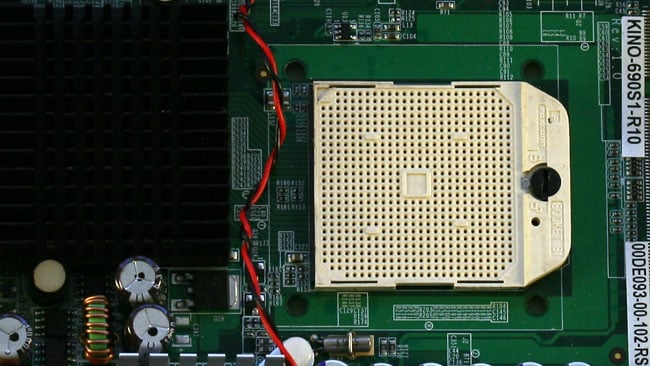

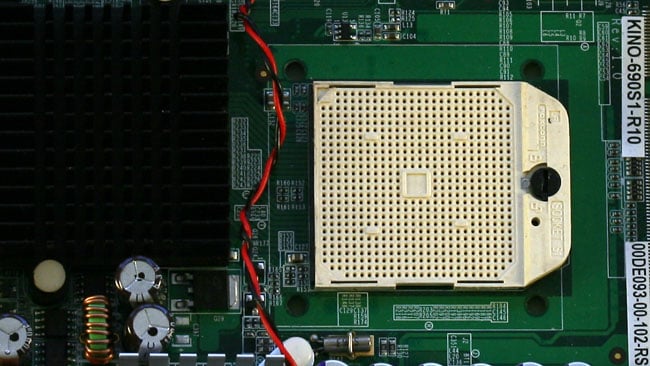

CPUs. We're used to a thousand pins, but not a thousand cores.

While still very much in the 'experimental' category, the thousand-core barrier has been broken by engineers at UC Davis and IBM.

One of the things we covered in our review of 2015 was the stagnation of workstation performance. While graphics cards, such as Nvidia's new GTX 1080, have flown ahead in terms of performance, and while cellphones and other low-power devices have come on in leaps and bounds, the trusty desktop CPU has been left out in the cold to some extent. This is probably down to market forces as much as technology, with pocket-sized portable computing clearly one of the big breakouts of the last decade or so, but there are technological limits too, especially on clock speed.

Core independence

In many ways, the thousand-core experimental processor designed at UC Davis (and actually fabricated by IBM) more-or-less continues this trend. It doesn't offer any way around the roadblocks to increased clock speed, but it does provide an enormous amount of parallelism: a literal thousand CPU cores all at once. To some extent, this may sound like a graphics card, many of which have had more than a thousand cores for a while, but the key difference is in the independent nature of the new CPU's operations. On a graphics card or other vector processor, all of the cores do the same work on different data. This works beautifully for image processing, and the practicability of things like Resolve on desktop workstations is entirely reliant on it; previously, colour correctors needed staggeringly expensive custom hardware racks or a lot of parallel CPU power. However, that approach doesn't do much good when the job isn't a matter of applying the same mathematics (a blur, maybe) to a large amount of data.

One of the enormous logic emulators used by Nvidia in the development of GPUs

The thousand cores on the Davis CPU don't work like that; they're fully independent, each running separate code of the programmer's choice. This independence runs deeper, too, with the ability to power up or switch off cores in order to tailor the availability of computing resources, and thus the power consumption, to the task at hand. This recalls the concerns of the mobile computing sector, of course, and hints that the purpose of the new device may be rooted more in its configurability than its sheer performance. It was also made on a semiconductor production line capable of 32-nanometre feature sizes, which is relatively mundane by the standards of the most recent 14-nanometre parts. This may simply be an issue of availability, but it means that the current design presumably doesn't realise the best performance that a straightforward shrink to a smaller process might make available.
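To make the distinction concrete, here is a minimal sketch in Python. It is only an illustration of the two programming styles, not of how the Davis chip or any graphics card is actually programmed, and every function name in it is made up for the example. Python threads also won't give real hardware parallelism for this sort of work, so read it for its structure rather than its speed.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel):
    # Hypothetical per-pixel operation: add 10, clamp to 8-bit range.
    return min(pixel + 10, 255)


def data_parallel(pixels):
    # Graphics-card style: every worker runs the SAME function,
    # each on a different piece of the data.
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(brighten, pixels))


# Three unrelated jobs, standing in for "separate code of the
# programmer's choice" (all hypothetical).
def count_words(text):
    return len(text.split())


def total(values):
    return sum(values)


def longest(words):
    return max(words, key=len)


def task_parallel():
    # Independent-core style: each worker runs DIFFERENT code.
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = [
            pool.submit(count_words, "a thousand independent cores"),
            pool.submit(total, [1, 2, 3, 4]),
            pool.submit(longest, ["core", "parallel", "cpu"]),
        ]
        return [f.result() for f in futures]
```

In the first function the only thing that varies between workers is the data; in the second, the code itself varies, which is the kind of freedom a chip full of independent cores gives the programmer.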

Parallel management

As ever, the thousand-core CPU is a science project. It isn't anywhere near market and it isn't clear what the intentions of the people behind it are. Perhaps the most important consideration, though, is how this sort of massively-parallel computing effort might best be put to use. Media work tends firmly towards tasks which can be broken down into smaller units to be performed simultaneously, but even in the world of film and TV, there are places where we would like to see better per-core performance. The analogy we've used before is that it's impossible to paint a wall faster by inviting some friends over to apply all four coats at once.

Some of the mathematics behind very popular software, such as video compression, does not easily break down into parallel operations, or at least not very many of them. With any multi-step process, where step two relies on the results of step one, it's impossible to do both steps at once. Until recently, CPU clock speeds kept growing and tasks continued to get faster. Even now, with CPU core counts significantly below the number of individual tasks (threads, in the language of computer science) available from something like H.264 compression, more cores have continued to be very helpful. At a thousand cores per CPU, however, things become rather more difficult.
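A rough way to put numbers on this is Amdahl's law, a standard textbook model that the argument above implies but doesn't name. It estimates the best possible speed-up when only part of a job can be spread across cores. The sketch below is an illustration only; the function name and the 90 per cent figure are assumptions chosen for the example, not measurements of any real encoder.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Best-case speed-up under Amdahl's law.

    parallel_fraction: share of the work (0.0 to 1.0) that can be
    split across cores; the remainder must run one step at a time.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed workload: 90% of the job parallelises, 10% does not.
    for cores in (4, 8, 1000):
        print(cores, "cores:", round(amdahl_speedup(0.9, cores), 2))
```

Under that assumption, eight cores give a speed-up of a little under five times, while a thousand cores manage just under ten. The serial tenth of the job sets the ceiling, however many cores are thrown at the rest.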

The explosion of mobile devices has led to lots of research in the field, but have desktops suffered in comparison?

Encouraging as it is, then, to see a thousand-core CPU demonstrated (and that's really all that we have here, a demonstration), what we need just as much is research into ways of automatically breaking down programs into parallel threads. Programmers currently have to do this more-or-less manually, and it's a time-consuming task that's notorious for introducing bugs. Making it an automatic part of the process of compiling conventional software is likely to be a key part of the multi-core future, but there are some difficult fundamental problems around a computer's ability to understand the intent of a programmer. Compiler theory is complicated, but regardless of whether the software issues can be overcome, and whatever the intentions of often mobile-centric designers, the future of computing is going to use multiple cores and those cores are going to have to be managed.
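For a flavour of what doing it manually involves, here is a small Python sketch that adds up a list of numbers across several threads. It is a toy, with a made-up function name, and Python's threads won't actually make this particular job faster; the point is how much bookkeeping the programmer takes on even for something this simple.

```python
import threading


def parallel_sum(values, workers=4):
    # Hypothetical example: the programmer decides how to cut up the work.
    total = 0
    lock = threading.Lock()
    chunk = max(1, (len(values) + workers - 1) // workers)

    def work(part):
        nonlocal total
        subtotal = sum(part)
        # Without this lock, two threads could update `total` at the
        # same moment and lose a result: the classic threading bug.
        with lock:
            total += subtotal

    threads = [
        threading.Thread(target=work, args=(values[i:i + chunk],))
        for i in range(0, len(values), chunk)
    ]
    for t in threads:
        t.start()
    # Forgetting to wait for every thread is another easy mistake.
    for t in threads:
        t.join()
    return total
```

Splitting the data, protecting the shared total and waiting for every thread to finish are all decisions made by hand here, and each one is a place for a bug to hide. These are the decisions an automatically parallelising compiler would have to get right on the programmer's behalf.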
