Video Levels

If you've ever shot material on a Canon DSLR and viewed the results in Quicktime on a Mac, chances are you've noticed that things look perhaps a little more contrasty than you'd intended. Phil Rhodes not only looks at why this happens and the means of avoiding it, but maintains that it is a real and genuine technical issue and it shouldn't be ignored.

The problem is as old as digital video, but it really came to prominence with the sudden ascendance of the 5D Mk. II and the problematic way its luminance encoding interacts with Quicktime. That was some time ago, though, and the fact that the problem still occurs in post production work, and that we're still talking about it here, is evidence that it remains partially solved at best.

The root cause of problems with video level shifts lies in decisions made by engineers working on the very first digital video systems. Much as with digital audio, it had been recognised that overshoots in signal level are poorly handled in digital systems, which have none of the intrinsic soft-limiting behaviour that is often a characteristic of analogue recordings. In audio, a nominal level of 20 decibels below the full-scale value is considered normal, leaving lots and lots of room for peaks to go well above that without hitting any limits.

The headroom in video is smaller but is allowed for similar reasons: according to ITU-R Recommendation BT.709 (Rec. 709), white should be at luminance code value 235 in 8-bit digital systems. Audio doesn't have quite the same problem with undershoots as video does, so video also needs room at the bottom: black is defined as code value 16, to give some room for blacker-than-black signals to be maintained (typically these result from aggressive grading, mathematical glitches in digital filters, clever camera setups, and the like).

Strictly speaking (because I've been pulled up for glossing over these things in the past), the numbers are 16-235 for the luminance and 16-240 for the colour difference signals in a component video (that is, YCbCr or similar) image.

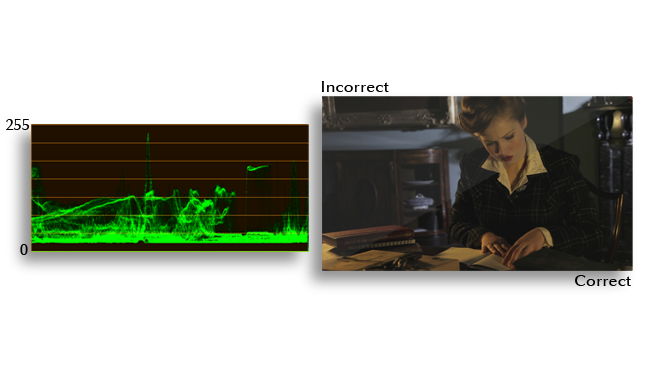

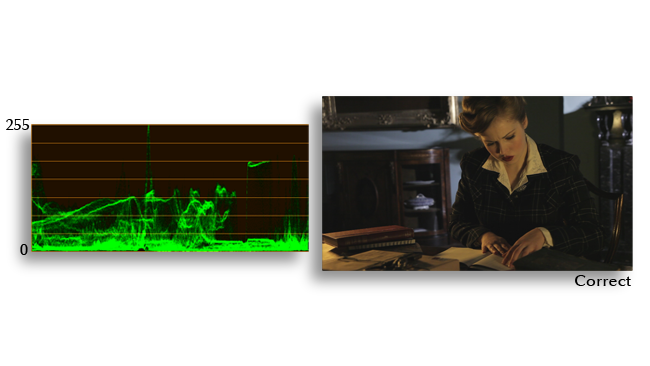

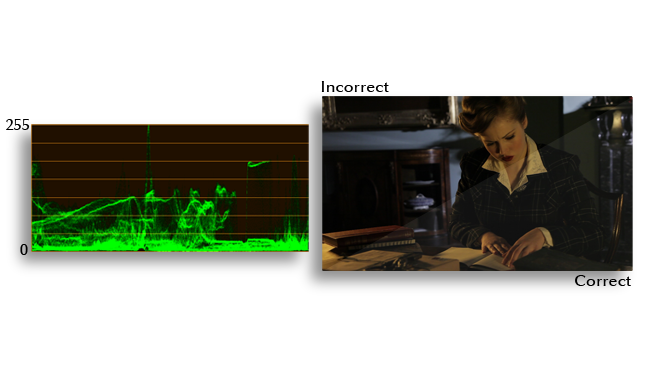

Here we can see an image on the right and the corresponding waveform monitor display on the left - notice the gap at the bottom of the waveform below value 16, and above the top of the peak representing the gleam on the picture frame. As displayed on your computer monitor the highlights (such as the picture frame in the background) look a little dull and the blacks a little grey. The problem image is split with the correctly-interpreted image for comparison.

Fig. 1 – Studio swing luminance example

Except when the numbers aren't 16-235. Desktop computers generally work in the sRGB system, not Rec. 709. sRGB typically uses all of the 0-255 range for picture information, which is why, when you play back almost any piece of video that's been shot in the real world with a camera, blacks often look slightly grey, and no matter how brightly exposed a highlight is, the peak whites aren't quite as bright as, say, a file manager's window background as displayed on the same monitor.

Theoretically, it is possible for a video player such as Quicktime or VLC to rescale the brightness values in a file such that things appear correctly, but in order to do that, the software needs to know what the intention was when the video was encoded. Since Rec. 709 video may legally include overshoots and undershoots from code values 1 to 254, there's no reliable way to determine that intention by examining the image.
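To make that concrete, here is a minimal Python sketch of the rescaling a player would have to do, assuming 8-bit luminance and a simple linear stretch. The names `expand_luma` and `looks_full_range` are invented for illustration and don't come from Quicktime, VLC or any other player. It also shows why inspecting the values can't settle the question: the same numbers are plausible under either interpretation.

```python
# Sketch only: expand 8-bit studio-swing luma (16-235) to full swing (0-255).
# All names here are hypothetical, not taken from any real player.

BLACK = 16   # Rec. 709 8-bit black level
WHITE = 235  # Rec. 709 8-bit nominal white level


def expand_luma(y: int) -> int:
    """Stretch a studio-swing code value onto 0-255, clamping over/undershoots."""
    scaled = round((y - BLACK) * 255 / (WHITE - BLACK))
    return max(0, min(255, scaled))


def looks_full_range(samples) -> bool:
    """Naive guess: any sample outside 16-235 is taken to mean full range.

    This is the unreliable test described in the text, because legal
    Rec. 709 video may itself contain values anywhere from 1 to 254.
    """
    return any(s < BLACK or s > WHITE for s in samples)


# A studio-swing signal with a legal overshoot on a bright highlight...
studio_with_overshoot = [16, 64, 128, 235, 250]
# ...and a full-range signal that happens to contain the same values.
full_range = [16, 64, 128, 235, 250]

print(expand_luma(16), expand_luma(235), expand_luma(250))
print(looks_full_range(studio_with_overshoot), looks_full_range(full_range))
```

Both sample lists are identical, so any test based purely on the sample values has to give the same answer for both, even though one was meant as studio swing and the other as full swing.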

The following image fills the entire waveform from top to bottom, and could theoretically still be a reduced-range Rec. 709 image – but much more commonly, something like this would be a full-range image, and it looks normal when displayed directly on your full-range computer monitor. Notice there is no space below the shadows or above the peak white.

Fig. 2 – Full swing luminance example

Poor standardisation

You'd have thought that it would be relatively straightforward to include a flag in the video header indicating the luminance encoding intent of the pictures, but – and this is the real problem – this is poorly standardised.

To return to our original example, Canon DSLRs record video using the entire 0-255 range, which is good for luminance resolution. The problem is that much software fails to recognise this, and assumes that everything between 0 and 16 is blacker-than-black detail which can be cropped off, and likewise for whites beyond 235. The result is crushed shadows and clipped highlights in a way that's just barely possible to overlook on some types of content if you're in a rush or in a less-than-ideal monitoring environment, so it's an insidious problem that sometimes isn't caught, at least not until it's far too late.

Sometimes, the cropped-off information can be recovered in grading, but in other circumstances it is removed at some stage in the post pipeline and lost forever. The following image shows the results of incorrectly interpreting a full-range image as reduced-range, with clipped highlights and crushed shadows.

Fig. 3 – Lost luminance information example
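The size of the loss is easy to estimate. The following Python sketch assumes 8-bit luminance and a plain linear stretch with clipping, and the function name `misread_as_studio_swing` is made up for the example. It feeds every full-range code value through the studio-swing interpretation and counts what collapses.

```python
# Sketch only: what happens when full-range (0-255) luma is wrongly
# treated as studio swing (16-235). Names are hypothetical.

def misread_as_studio_swing(y: int) -> int:
    """Stretch 16-235 to 0-255 and clip anything that falls outside."""
    scaled = round((y - 16) * 255 / 219)
    return max(0, min(255, scaled))


full_range_ramp = list(range(256))  # every 8-bit code value, 0 to 255
displayed = [misread_as_studio_swing(y) for y in full_range_ramp]

# Shadow codes below 16 all end up at 0; highlight codes above 235 all end up at 255.
crushed = sum(1 for y in full_range_ramp if y < 16)
clipped = sum(1 for y in full_range_ramp if y > 235)

print("crushed shadow codes:", crushed)
print("clipped highlight codes:", clipped)
print("distinct output levels:", len(set(displayed)))
```

Under these assumptions, 16 shadow codes and 20 highlight codes collapse to a single value each, leaving 220 distinct levels out of the original 256. If the result is then written out at 8 bits, the detail that lived in those codes can't be brought back.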

Attempts to solve the problem in software have been haphazard at best. Some software even looks at the frame size, and assumes anything of HD-esque resolution is probably Rec. 709, which is terribly unreliable (it fails, for instance, on Canon DSLR footage). The only really dependable solution is to give the user a checkbox to instruct the software as to the desired luminance handling behaviour, and a lot of people are reluctant to do that because it introduces an opportunity for error in itself.
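As a rough illustration of the checkbox approach, the Python sketch below lets an explicit user choice override a frame-size guess. Everything in it is hypothetical: the `LumaRange` type, the function names, the 720-line threshold and the fallback for smaller frames are assumptions made for the example, not the behaviour of any real application.

```python
# Sketch only: an explicit user setting takes priority over a frame-size guess.
# Types, names and thresholds are assumptions for illustration.
from enum import Enum
from typing import Optional


class LumaRange(Enum):
    STUDIO = "16-235"
    FULL = "0-255"


def guess_from_frame_size(width: int, height: int) -> LumaRange:
    """The unreliable heuristic: HD-like frames are assumed to be studio swing."""
    # The 720-line threshold and the FULL fallback are assumed, not standardised.
    return LumaRange.STUDIO if height >= 720 else LumaRange.FULL


def resolve_range(width: int, height: int,
                  user_choice: Optional[LumaRange] = None) -> LumaRange:
    """Use the user's checkbox if it was set; otherwise fall back to the guess."""
    if user_choice is not None:
        return user_choice
    return guess_from_frame_size(width, height)


# Full-range 1920x1080 DSLR footage: the guess gets it wrong...
print(resolve_range(1920, 1080))
# ...and the explicit override puts it right.
print(resolve_range(1920, 1080, user_choice=LumaRange.FULL))
```

The design choice is simply one of priority: the heuristic is only ever a default, and the operator's decision always wins. That also makes the trade-off visible, since a wrong tick in the box is now the operator's error rather than the software's.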

Most post production software does now know how to handle Canon footage, although that may mean that it will fail on other, more conventionally-encoded material, depending on exactly how the changes have been implemented. Third-party transcoding software can solve this and other problems, including better chroma upsampling and transcoding to other codecs. But the moral of the story is simple: if things suddenly start looking contrasty, don't assume it's just a monitor settings issue, as real picture information is easy to lose.
