Sony is moving away from hardware-based data analysis to a software-based subscription approach. The implications could be profound.

Image: Shutterstock

In May, Sony revealed the first two models of its intelligent vision sensors, which the company described as the world’s first image sensors with integrated AI processors. Now, the company plans a major shift from hardware sales to “software by subscription” for image sensors that analyse data on location.

While the idea is initially directed at CCTV, self-driving vehicles and other Internet of Things applications that use object recognition, there is scope to extend it to photography and computer vision in media and entertainment.

As more and more types of devices are being connected to the cloud, it is commonplace to have the information obtained from them processed via AI on the cloud.

But when video is recorded using a conventional image sensor, it is necessary to send data for each individual output image frame for AI processing, resulting in increased data transmission and making it difficult to deliver real-time performance.

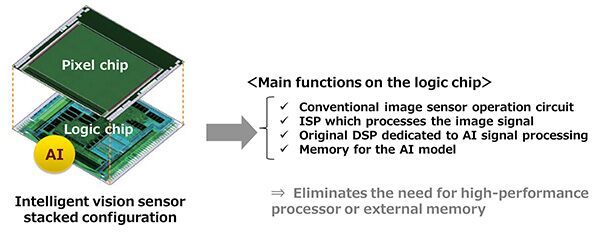

The new IMX500 image sensor products from Sony perform image signal processing and high-speed AI processing (at just 3.1 milliseconds) on the chip itself, completing the entire process in a single video frame. This design makes it possible, says Sony, to deliver high-precision, real-time tracking of objects while recording video.
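To see why 3.1 milliseconds fits inside a single video frame, compare it with the time available per frame. The sketch below is illustrative only: the frame rates are assumptions chosen for the example, and only the 3.1 ms figure comes from Sony.

```python
# Illustrative frame-budget check. The frame rates below are assumptions
# for the sake of the example; only the 3.1 ms figure is quoted by Sony.
INFERENCE_MS = 3.1  # on-chip AI processing time quoted by Sony


def frame_period_ms(fps: float) -> float:
    """Time available per video frame, in milliseconds."""
    return 1000.0 / fps


def fits_in_one_frame(inference_ms: float, fps: float) -> bool:
    """True if processing finishes before the next frame is due."""
    return inference_ms < frame_period_ms(fps)


if __name__ == "__main__":
    for fps in (30, 60, 120):
        budget = frame_period_ms(fps)
        ok = fits_in_one_frame(INFERENCE_MS, fps)
        print(f"{fps} fps: {budget:.1f} ms per frame, fits: {ok}")
```

At an assumed 30 frames per second, each frame lasts about 33 milliseconds, so the quoted processing time uses roughly a tenth of the budget.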

Fierce Electronics reports that the chip’s AI processing capability came from placing the logic chips on the back of the sensor “thereby giving the sensor more pixels to improve the light sensitivity.”

The extracted data is output as metadata, “reducing the amount of data handled.” This, together with the speed of image recognition, opens up the possibility of new applications, from detecting face masks to telling whether a human or a robot is entering a restricted area.
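A rough calculation shows how much sending metadata instead of frames could reduce the data handled. Every figure in this sketch is a hypothetical placeholder (resolution, bytes per pixel, frame rate and metadata size are assumptions, not Sony specifications), and the video is treated as uncompressed, so a real system that compresses its video would see a smaller gap.

```python
# Back-of-envelope comparison of raw video versus per-frame metadata.
# All numbers are hypothetical placeholders, not Sony specifications.

def raw_stream_bytes_per_sec(width: int, height: int,
                             bytes_per_pixel: int, fps: int) -> int:
    """Data rate of uncompressed video sent off the sensor."""
    return width * height * bytes_per_pixel * fps


def metadata_bytes_per_sec(bytes_per_frame: int, fps: int) -> int:
    """Data rate when only recognition results are sent per frame."""
    return bytes_per_frame * fps


# Assumed: 1920x1080 video, 3 bytes per pixel, 30 frames per second.
raw = raw_stream_bytes_per_sec(1920, 1080, 3, 30)

# Assumed: about 200 bytes of labels and bounding boxes per frame.
meta = metadata_bytes_per_sec(200, 30)

reduction = raw / meta

if __name__ == "__main__":
    print(f"raw video: {raw / 1e6:.1f} MB/s")
    print(f"metadata:  {meta / 1e3:.1f} kB/s")
    print(f"reduction: about {reduction:,.0f}x")
```

Under these assumptions the metadata stream is tens of thousands of times smaller than the raw video, which is the kind of saving that makes real-time analysis over a network link practical.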

Sony says the sensor “captures the meaning of the information of the light in front of it,” performing image analysis and outputting the result all within the chip. In an explainer video, Sony shows the sensor identifying a basketball and a guitar.

Upgradable sensor software

Just as intriguing is Sony’s business plan for an imaging division that makes US$10 billion a year and that has built dominance over rivals like Samsung and China’s OmniVision Technologies through hardware breakthroughs.

Reuters reports that the sensor software “can be modified or replaced wirelessly without disturbing the camera.”

Sony says it hopes customers will subscribe to the software via monthly fees or licensing, “much like how gamers buy a PlayStation console and then pay for software or subscribe to online services.”

“Transforming the light-converting chips into a platform for software - essentially akin to the PlayStation Plus video games service - amounts to a sea change,” Reuters says.

Analysis of data with AI “would form a market larger than the growth potential of the sensor market itself in terms of value,” explained Sony’s Hideki Somemiya, who heads a new team developing sensor applications.

To do that, Sony needs partnerships with internet giants that have an existing development community. In mid-2019 Sony secured one by striking an alliance with Microsoft to implement smart camera solutions using Microsoft’s Azure cloud platform.

“These products expand the opportunities to develop AI-equipped cameras, enabling a diverse range of applications in the retail and industrial equipment industries and contributing to building optimal systems that link with the cloud,” the company wrote in a blog post.

However, you won’t find this technology in phones and handheld cameras just yet. For now, the new sensors will be restricted to commercial uses such as surveillance cameras and smart retail spaces like Amazon Go, which demand complex computer vision architectures.

That’s not to say that the core technology or Sony’s business model can’t or won’t be applied to media and entertainment in future. Super-fast, in-camera, AI-driven processing of faces and objects might assist applications like performance capture, pre-visualisation or mixed reality photography.

Future possibilities

Such a chip could have the smarts to reignite light field cinematography by enabling quicker, lighter-weight processing of volumetric data.

If a chip can send metadata to the cloud for processing, it can also send information back to the sensor to upgrade its capability without having to re-engineer it or buy a new body.

More generally, such chips might aid the computer vision of augmented reality glasses, whose development has stalled.

It also chips away further at the bricks and mortar of traditional hardware and black boxes sold into professional environments and all our homes.
