Unreal Engine is traditionally used for games, but its power is now giving broadcasters real-time graphics of a quality not seen before.

AJA and Epic Games are moving games engines into mainstream production by incorporating support for Unreal Engine 4 directly into the KONA 4.

The race is on to perfect virtual environments and virtual production. Everyone from VFX tools developer Foundry and Hollywood studios like Paramount to James Cameron and Weta Digital, at work on the new Avatar movies, is looking to evolve technology that permits a director to film photoreal CG assets with live action in real time on set.

Much of the research is centered on games engines, like those from Epic Games (maker of the Unreal Engine) or Unity, which were originally designed to render polygons, textures and specular lighting as fast as possible.

The tech is not confined to feature film. New TV entertainment formats that mix physical and virtual objects, characters and people in real time include FremantleMedia and The Future Group’s Lost in Time. The concept, which some call ‘interactive mixed reality’, would be impossible without the state-of-the-art real-time 3D rendering of a games engine.

Games engines can create a level of realism unrivalled by any broadcast character generator, but they need to be reprogrammed first so that the games software matches camera-acquired signals like timecode.

The Future Group (TFG), for example, turned to Ross Video to rewrite the Unreal code to comply with genlock. Ross now sells this software as the graphics engine Frontier.

NewTek has allied with Epic Games to bring live video sources into Unreal Engine over the NewTek NDI (video over IP) network without needing additional codecs or multiple video cards.

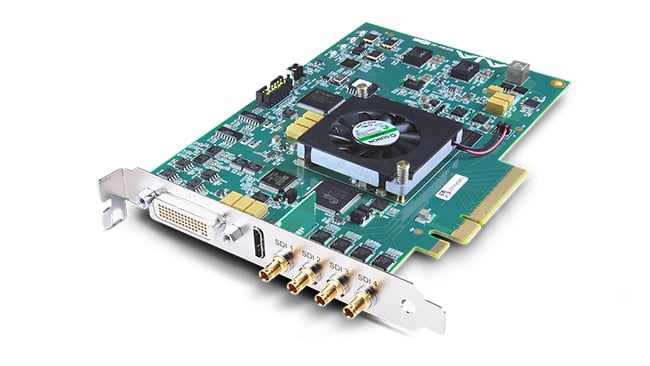

The AJA KONA 4

Unreal Engine built in

Now the convergence of live video production and gaming engines has gone a step further with AJA’s support of Unreal built into its KONA 4 and Corvid 44 video transfer cards.

Epic’s release of Unreal Engine 4.20 includes a plug-in based on the AJA SDK that supports HD/SDI video and audio input and output to the cards. The plug-in includes full support for timecode and fully genlockable video, enabling the integration of augmented reality and graphics in live broadcast transmissions.

Bill Bowen, chief technical officer at AJA, is quoted as saying, “There is a rising tide of momentum for Unreal Engine in broadcast across virtual sets for live broadcast, virtual production and eSports.”

His counterpart at Epic, Kim Libreri, says there has been growing demand for video support in Unreal from Epic’s customers working in live broadcast, and hints that more traditional broadcast gear will follow in being hooked up to the engine.

Even Andy Serkis, performance capture pioneer and director of forthcoming Jungle Book adaptation Mowgli, is in on the game.

“Using game engine technology to achieve high quality rendering in realtime means that, once something is shot on set, there will be no post production,” he told IBC. “I believe that is where we are headed and with VR, AR and interactive gaming platforms emerging it’s the most exciting time to be a storyteller.”

The film and TV industry is evolving rapidly toward the real-time production of live action seamlessly blended with CG-animated and performance-captured characters in virtual environments.

